mirror of https://github.com/dapr/java-sdk.git

initial bulk publish impl for java (#789)

* initial bulk publish impl for java
* add UTs and clean up java doc for new interface methods.
* add more interface methods for bulk publish
* adding examples and ITs for bulk publish
* addressing review comments
* use latest ref from dapr branch
* add example validation
* fix bindings example validation
* make changes for latest bulk publish dapr changes
* fix examples
* fix examples
* fix typo
* test against java 11 only
* change to latest dapr commit
* run only pubsub IT, upload failsafe reports as run artifact
* fix checkstyle
* fix IT report upload condition
* fix compile issues
* fix spotbugs issue
* run pubsubIT only
* change upload artifact name for IT
* fix tests
* fix
* introduce sleep
* test bulk publish with redis
* change longvalues test to kafka
* change bulk pub to kafka and revert long values changes
* remove kafka pubsub from pubsub IT
* change match order in examples
* set fail fast as false
* fix Internal Invoke exception in ITs
* address review comments
* fix IT
* fix app scopes in examples
* add content to daprdocs
* address review comments
* fix mm.py step comment
* reset bindings examples readme
* fix example, IT and make classes immutable

Signed-off-by: Mukundan Sundararajan <65565396+mukundansundar@users.noreply.github.com>
Co-authored-by: Artur Souza <asouza.pro@gmail.com>

parent eb8565cca0

commit 81591b9f5b

|

|

@ -100,9 +100,10 @@ jobs:

|

|||

- name: Codecov

|

||||

uses: codecov/codecov-action@v3.1.0

|

||||

- name: Install jars

|

||||

run: mvn install -q

|

||||

run: mvn install -q

|

||||

- name: Integration tests

|

||||

run: mvn -f sdk-tests/pom.xml verify -q

|

||||

id: integration_tests

|

||||

run: mvn -f sdk-tests/pom.xml verify

|

||||

- name: Upload test report for sdk

|

||||

uses: actions/upload-artifact@master

|

||||

with:

|

||||

|

|

@ -113,6 +114,19 @@ jobs:

|

|||

with:

|

||||

name: report-dapr-java-sdk-actors

|

||||

path: sdk-actors/target/jacoco-report/

|

||||

- name: Upload failsafe test report for sdk-tests on failure

|

||||

if: ${{ failure() && steps.integration_tests.conclusion == 'failure' }}

|

||||

uses: actions/upload-artifact@master

|

||||

with:

|

||||

name: failsafe-report-sdk-tests

|

||||

path: sdk-tests/target/failsafe-reports

|

||||

- name: Upload surefire test report for sdk-tests on failure

|

||||

if: ${{ failure() && steps.integration_tests.conclusion == 'failure' }}

|

||||

uses: actions/upload-artifact@master

|

||||

with:

|

||||

name: surefire-report-sdk-tests

|

||||

path: sdk-tests/target/surefire-reports

|

||||

|

||||

publish:

|

||||

runs-on: ubuntu-latest

|

||||

needs: build

|

||||

|

|

|

|||

|

|

@ -28,6 +28,7 @@ jobs:

|

|||

validate:

|

||||

runs-on: ubuntu-latest

|

||||

strategy:

|

||||

fail-fast: false # Keep running if one leg fails.

|

||||

matrix:

|

||||

java: [ 11, 13, 15, 16 ]

|

||||

env:

|

||||

|

|

@ -129,10 +130,10 @@ jobs:

|

|||

working-directory: ./examples

|

||||

run: |

|

||||

mm.py ./src/main/java/io/dapr/examples/state/README.md

|

||||

- name: Validate pubsub HTTP example

|

||||

- name: Validate pubsub example

|

||||

working-directory: ./examples

|

||||

run: |

|

||||

mm.py ./src/main/java/io/dapr/examples/pubsub/http/README.md

|

||||

mm.py ./src/main/java/io/dapr/examples/pubsub/README.md

|

||||

- name: Validate bindings HTTP example

|

||||

working-directory: ./examples

|

||||

run: |

|

||||

|

|

|

|||

|

|

@ -222,6 +222,35 @@ public class SubscriberController {

|

|||

}

|

||||

```

|

||||

|

||||

##### Bulk Publish Messages

|

||||

> Note: The bulk publish API is in Alpha stage.

|

||||

|

||||

|

||||

```java
import io.dapr.client.DaprClientBuilder;
import io.dapr.client.DaprPreviewClient;
import io.dapr.client.domain.BulkPublishResponse;
import io.dapr.client.domain.BulkPublishResponseFailedEntry;
import java.util.ArrayList;
import java.util.List;

class Solution {
  // Example values; replace with your own pubsub component name and topic.
  private static final int NUM_MESSAGES = 10;
  private static final String PUBSUB_NAME = "messagebus";
  private static final String TOPIC_NAME = "bulkpublishtesting";

  public void publishMessages() throws Exception {
    try (DaprPreviewClient client = (new DaprClientBuilder()).buildPreviewClient()) {
      // Create a list of messages to publish
      List<String> messages = new ArrayList<>();
      for (int i = 0; i < NUM_MESSAGES; i++) {
        String message = String.format("This is message #%d", i);
        messages.add(message);
        System.out.println("Going to publish message : " + message);
      }

      // Publish list of messages using the bulk publish API
      BulkPublishResponse<String> res = client.publishEvents(PUBSUB_NAME, TOPIC_NAME, "text/plain", messages).block();
    }
  }
}
```

|

||||

|

||||

- For a full guide on publishing messages and subscribing to a topic, see [How-To: Publish & subscribe]({{< ref howto-publish-subscribe.md >}}).

|

||||

- Visit [Java SDK examples](https://github.com/dapr/java-sdk/tree/master/examples/src/main/java/io/dapr/examples/pubsub/http) for code samples and instructions to try out pub/sub

|

||||

|

||||

|

|

|

|||

|

|

@ -10,3 +10,8 @@ spec:

|

|||

value: localhost:6379

|

||||

- name: redisPassword

|

||||

value: ""

|

||||

scopes:

|

||||

- publisher

|

||||

- bulk-publisher

|

||||

- subscriber

|

||||

- publisher-tracing

|

||||

|

|

@ -50,7 +50,7 @@ cd examples

|

|||

|

||||

Before getting into the application code, follow these steps in order to set up a local instance of Kafka. This is needed for the local instances. Steps are:

|

||||

|

||||

1. To run container locally run:

|

||||

1. To run container locally run:

|

||||

|

||||

<!-- STEP

|

||||

name: Setup kafka container

|

||||

|

|

|

|||

|

|

@ -0,0 +1,101 @@

|

|||

/*

|

||||

* Copyright 2023 The Dapr Authors

|

||||

* Licensed under the Apache License, Version 2.0 (the "License");

|

||||

* you may not use this file except in compliance with the License.

|

||||

* You may obtain a copy of the License at

|

||||

* http://www.apache.org/licenses/LICENSE-2.0

|

||||

* Unless required by applicable law or agreed to in writing, software

|

||||

* distributed under the License is distributed on an "AS IS" BASIS,

|

||||

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

* See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

*/

|

||||

|

||||

package io.dapr.examples.pubsub;

|

||||

|

||||

import io.dapr.client.DaprClient;

|

||||

import io.dapr.client.DaprClientBuilder;

|

||||

import io.dapr.client.DaprPreviewClient;

|

||||

import io.dapr.client.domain.BulkPublishResponse;

|

||||

import io.dapr.client.domain.BulkPublishResponseFailedEntry;

|

||||

import io.dapr.examples.OpenTelemetryConfig;

|

||||

import io.opentelemetry.api.OpenTelemetry;

|

||||

import io.opentelemetry.api.trace.Span;

|

||||

import io.opentelemetry.api.trace.Tracer;

|

||||

import io.opentelemetry.context.Scope;

|

||||

import io.opentelemetry.sdk.OpenTelemetrySdk;

|

||||

|

||||

import java.util.ArrayList;

|

||||

import java.util.List;

|

||||

|

||||

import static io.dapr.examples.OpenTelemetryConfig.getReactorContext;

|

||||

|

||||

/**

|

||||

* Message publisher.

|

||||

* 1. Build and install jars:

|

||||

* mvn clean install

|

||||

* 2. cd [repo root]/examples

|

||||

* 3. Run the program:

|

||||

* dapr run --components-path ./components/pubsub --app-id bulk-publisher -- \

|

||||

* java -Ddapr.grpc.port="50010" -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.BulkPublisher

|

||||

*/

|

||||

public class BulkPublisher {

|

||||

|

||||

private static final int NUM_MESSAGES = 10;

|

||||

|

||||

private static final String TOPIC_NAME = "bulkpublishtesting";

|

||||

|

||||

//The name of the pubsub

|

||||

private static final String PUBSUB_NAME = "messagebus";

|

||||

|

||||

/**

|

||||

* main method.

|

||||

*

|

||||

* @param args incoming args

|

||||

* @throws Exception any exception

|

||||

*/

|

||||

public static void main(String[] args) throws Exception {

|

||||

OpenTelemetry openTelemetry = OpenTelemetryConfig.createOpenTelemetry();

|

||||

Tracer tracer = openTelemetry.getTracer(BulkPublisher.class.getCanonicalName());

|

||||

Span span = tracer.spanBuilder("Bulk Publisher's Main").setSpanKind(Span.Kind.CLIENT).startSpan();

|

||||

try (DaprPreviewClient client = (new DaprClientBuilder()).buildPreviewClient()) {

|

||||

DaprClient c = (DaprClient) client;

|

||||

c.waitForSidecar(10000);

|

||||

try (Scope scope = span.makeCurrent()) {

|

||||

System.out.println("Using preview client...");

|

||||

List<String> messages = new ArrayList<>();

|

||||

System.out.println("Constructing the list of messages to publish");

|

||||

for (int i = 0; i < NUM_MESSAGES; i++) {

|

||||

String message = String.format("This is message #%d", i);

|

||||

messages.add(message);

|

||||

System.out.println("Going to publish message : " + message);

|

||||

}

|

||||

BulkPublishResponse<?> res = client.publishEvents(PUBSUB_NAME, TOPIC_NAME, "text/plain", messages)

|

||||

.subscriberContext(getReactorContext()).block();

|

||||

System.out.println("Published the set of messages in a single call to Dapr");

|

||||

if (res != null) {

|

||||

if (res.getFailedEntries().size() > 0) {

|

||||

// Ideally this condition will not happen in examples

|

||||

System.out.println("Some events failed to be published");

|

||||

for (BulkPublishResponseFailedEntry<?> entry : res.getFailedEntries()) {

|

||||

System.out.println("EntryId : " + entry.getEntry().getEntryId()

|

||||

+ " Error message : " + entry.getErrorMessage());

|

||||

}

|

||||

}

|

||||

} else {

|

||||

throw new Exception("null response from dapr");

|

||||

}

|

||||

}

|

||||

// Close the span.

|

||||

|

||||

span.end();

|

||||

// Allow plenty of time for Dapr to export all relevant spans to the tracing infra.

|

||||

Thread.sleep(10000);

|

||||

// Shutdown the OpenTelemetry tracer.

|

||||

OpenTelemetrySdk.getGlobalTracerManagement().shutdown();

|

||||

|

||||

System.out.println("Done");

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

|

|

@ -0,0 +1,94 @@

|

|||

/*

|

||||

* Copyright 2023 The Dapr Authors

|

||||

* Licensed under the Apache License, Version 2.0 (the "License");

|

||||

* you may not use this file except in compliance with the License.

|

||||

* You may obtain a copy of the License at

|

||||

* http://www.apache.org/licenses/LICENSE-2.0

|

||||

* Unless required by applicable law or agreed to in writing, software

|

||||

* distributed under the License is distributed on an "AS IS" BASIS,

|

||||

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

* See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

*/

|

||||

|

||||

package io.dapr.examples.pubsub;

|

||||

|

||||

import io.dapr.client.DaprClientBuilder;

|

||||

import io.dapr.client.DaprPreviewClient;

|

||||

import io.dapr.client.domain.BulkPublishEntry;

|

||||

import io.dapr.client.domain.BulkPublishRequest;

|

||||

import io.dapr.client.domain.BulkPublishResponse;

|

||||

import io.dapr.client.domain.BulkPublishResponseFailedEntry;

|

||||

import io.dapr.client.domain.CloudEvent;

|

||||

|

||||

import java.util.ArrayList;

|

||||

import java.util.HashMap;

|

||||

import java.util.List;

|

||||

import java.util.Map;

|

||||

import java.util.UUID;

|

||||

|

||||

/**

|

||||

* Message publisher.

|

||||

* 1. Build and install jars:

|

||||

* mvn clean install

|

||||

* 2. cd [repo root]/examples

|

||||

* 3. Run the program:

|

||||

* dapr run --components-path ./components/pubsub --app-id publisher -- \

|

||||

* java -Ddapr.grpc.port="50010" \

|

||||

* -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.CloudEventBulkPublisher

|

||||

*/

|

||||

public class CloudEventBulkPublisher {

|

||||

|

||||

private static final int NUM_MESSAGES = 10;

|

||||

|

||||

private static final String TOPIC_NAME = "bulkpublishtesting";

|

||||

|

||||

//The name of the pubsub

|

||||

private static final String PUBSUB_NAME = "messagebus";

|

||||

|

||||

/**

|

||||

* main method.

|

||||

*

|

||||

* @param args incoming args

|

||||

* @throws Exception any exception

|

||||

*/

|

||||

public static void main(String[] args) throws Exception {

|

||||

try (DaprPreviewClient client = (new DaprClientBuilder()).buildPreviewClient()) {

|

||||

System.out.println("Using preview client...");

|

||||

List<BulkPublishEntry<CloudEvent<Map<String, String>>>> entries = new ArrayList<>();

|

||||

for (int i = 0; i < NUM_MESSAGES; i++) {

|

||||

CloudEvent<Map<String, String>> cloudEvent = new CloudEvent<>();

|

||||

cloudEvent.setId(UUID.randomUUID().toString());

|

||||

cloudEvent.setType("example");

|

||||

cloudEvent.setSpecversion("1");

|

||||

cloudEvent.setDatacontenttype("application/json");

|

||||

String val = String.format("This is message #%d", i);

|

||||

cloudEvent.setData(new HashMap<>() {

|

||||

{

|

||||

put("dataKey", val);

|

||||

}

|

||||

});

|

||||

BulkPublishEntry<CloudEvent<Map<String, String>>> entry = new BulkPublishEntry<>(

|

||||

"" + (i + 1), cloudEvent, CloudEvent.CONTENT_TYPE, null);

|

||||

entries.add(entry);

|

||||

}

|

||||

BulkPublishRequest<CloudEvent<Map<String, String>>> request = new BulkPublishRequest<>(PUBSUB_NAME, TOPIC_NAME,

|

||||

entries);

|

||||

BulkPublishResponse<?> res = client.publishEvents(request).block();

|

||||

if (res != null) {

|

||||

if (res.getFailedEntries().size() > 0) {

|

||||

// Ideally this condition will not happen in examples

|

||||

System.out.println("Some events failed to be published");

|

||||

for (BulkPublishResponseFailedEntry<?> entry : res.getFailedEntries()) {

|

||||

System.out.println("EntryId : " + entry.getEntry().getEntryId()

|

||||

+ " Error message : " + entry.getErrorMessage());

|

||||

}

|

||||

}

|

||||

} else {

|

||||

throw new Exception("null response");

|

||||

}

|

||||

System.out.println("Done");

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

|

|

@ -11,7 +11,7 @@

|

|||

limitations under the License.

|

||||

*/

|

||||

|

||||

package io.dapr.examples.pubsub.http;

|

||||

package io.dapr.examples.pubsub;

|

||||

|

||||

import io.dapr.client.DaprClient;

|

||||

import io.dapr.client.DaprClientBuilder;

|

||||

|

|

@ -30,7 +30,7 @@ import static java.util.Collections.singletonMap;

|

|||

* 2. cd [repo root]/examples

|

||||

* 3. Run the program:

|

||||

* dapr run --components-path ./components/pubsub --app-id publisher -- \

|

||||

* java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.CloudEventPublisher

|

||||

* java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.CloudEventPublisher

|

||||

*/

|

||||

public class CloudEventPublisher {

|

||||

|

||||

|

|

@ -11,7 +11,7 @@

|

|||

limitations under the License.

|

||||

*/

|

||||

|

||||

package io.dapr.examples.pubsub.http;

|

||||

package io.dapr.examples.pubsub;

|

||||

|

||||

import io.dapr.client.DaprClient;

|

||||

import io.dapr.client.DaprClientBuilder;

|

||||

|

|

@ -26,7 +26,7 @@ import static java.util.Collections.singletonMap;

|

|||

* 2. cd [repo root]/examples

|

||||

* 3. Run the program:

|

||||

* dapr run --components-path ./components/pubsub --app-id publisher -- \

|

||||

* java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.Publisher

|

||||

* java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.Publisher

|

||||

*/

|

||||

public class Publisher {

|

||||

|

||||

|

|

@ -11,7 +11,7 @@

|

|||

limitations under the License.

|

||||

*/

|

||||

|

||||

package io.dapr.examples.pubsub.http;

|

||||

package io.dapr.examples.pubsub;

|

||||

|

||||

import io.dapr.client.DaprClient;

|

||||

import io.dapr.client.DaprClientBuilder;

|

||||

|

|

@ -31,7 +31,7 @@ import static io.dapr.examples.OpenTelemetryConfig.getReactorContext;

|

|||

* 2. cd [repo root]/examples

|

||||

* 3. Run the program:

|

||||

* dapr run --components-path ./components/pubsub --app-id publisher-tracing -- \

|

||||

* java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.PublisherWithTracing

|

||||

* java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.PublisherWithTracing

|

||||

*/

|

||||

public class PublisherWithTracing {

|

||||

|

||||

|

|

@ -6,7 +6,7 @@ Visit [this](https://docs.dapr.io/developing-applications/building-blocks/pubsub

|

|||

|

||||

## Pub-Sub Sample using the Java-SDK

|

||||

|

||||

This sample uses the HTTP Client provided in Dapr Java SDK for subscribing, and Dapr Spring Boot integration for publishing. This example uses Redis Streams (enabled in Redis versions => 5).

|

||||

This sample uses the HTTP Spring Boot integration provided in the Dapr Java SDK for subscribing, and the gRPC client for publishing. This example uses Redis Streams (enabled in Redis versions >= 5).

|

||||

## Pre-requisites

|

||||

|

||||

* [Dapr and Dapr Cli](https://docs.dapr.io/getting-started/install-dapr/).

|

||||

|

|

@ -124,6 +124,8 @@ match_order: none

|

|||

expected_stdout_lines:

|

||||

- '== APP == Subscriber got: This is message #1'

|

||||

- '== APP == Subscriber got: This is message #2'

|

||||

- '== APP == Subscriber got from bulk published topic: This is message #2'

|

||||

- '== APP == Subscriber got from bulk published topic: This is message #3'

|

||||

- '== APP == Bulk Subscriber got: This is message #1'

|

||||

- '== APP == Bulk Subscriber got: This is message #2'

|

||||

background: true

|

||||

|

|

@ -131,14 +133,14 @@ sleep: 5

|

|||

-->

|

||||

|

||||

```bash

|

||||

dapr run --components-path ./components/pubsub --app-id subscriber --app-port 3000 -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.Subscriber -p 3000

|

||||

dapr run --components-path ./components/pubsub --app-id subscriber --app-port 3000 -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.Subscriber -p 3000

|

||||

```

|

||||

|

||||

<!-- END_STEP -->

|

||||

|

||||

### Running the publisher

|

||||

|

||||

The other component is the publisher. It is a simple java application with a main method that uses the Dapr HTTP Client to publish 10 messages to an specific topic.

|

||||

Another component is the publisher. It is a simple Java application with a main method that uses the Dapr gRPC Client to publish 10 messages to a specific topic.

|

||||

|

||||

In the `Publisher.java` file, you will find the `Publisher` class, containing the main method. The main method declares a Dapr Client using the `DaprClientBuilder` class. Notice that this builder gets two serializer implementations in the constructor: One is for Dapr's sent and received objects, and second is for objects to be persisted. The client publishes messages using `publishEvent` method. The Dapr client is also within a try-with-resource block to properly close the client at the end. See the code snippet below:

|

||||

The Dapr sidecar automatically wraps the received payload into a CloudEvent object, which is later parsed by the subscriber.

|

||||

|

|

@ -175,7 +177,7 @@ The `CloudEventPublisher.java` file shows how the same can be accomplished if th

|

|||

In this case, the app MUST override the content-type parameter via `withContentType()`, so Dapr sidecar knows that the payload is already a CloudEvent object.

|

||||

|

||||

```java

|

||||

public class Publisher {

|

||||

public class CloudEventPublisher {

|

||||

///...

|

||||

public static void main(String[] args) throws Exception {

|

||||

//Creating the DaprClient: Using the default builder client produces an HTTP Dapr Client

|

||||

|

|

@ -215,7 +217,7 @@ sleep: 15

|

|||

-->

|

||||

|

||||

```bash

|

||||

dapr run --components-path ./components/pubsub --app-id publisher -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.Publisher

|

||||

dapr run --components-path ./components/pubsub --app-id publisher -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.Publisher

|

||||

```

|

||||

|

||||

<!-- END_STEP -->

|

||||

|

|

@ -288,6 +290,196 @@ Once running, the Subscriber should print the output as follows:

|

|||

|

||||

Messages have been retrieved from the topic.

|

||||

|

||||

### Bulk Publish Messages

|

||||

> Note: This API is currently in Alpha stage in the Dapr runtime, so the corresponding SDK methods are exposed through `DaprPreviewClient`.

|

||||

|

||||

Another feature provided by the SDK is to allow users to publish multiple messages in a single call to the Dapr sidecar.

|

||||

For this example, we have a simple Java application with a main method that uses the Dapr gRPC Preview Client to publish 10 messages to a specific topic in a single call.

|

||||

|

||||

In the `BulkPublisher.java` file, you will find the `BulkPublisher` class, containing the main method. The main method declares a Dapr Preview Client using the `DaprClientBuilder` class. Notice that this builder gets two serializer implementations in the constructor: one is for Dapr's sent and received objects, and the second is for objects to be persisted.

|

||||

The client publishes messages using `publishEvents` method. The Dapr client is also within a try-with-resource block to properly close the client at the end. See the code snippet below:

|

||||

Dapr sidecar will automatically wrap the payload received into a CloudEvent object, which will later on be parsed by the subscriber.

|

||||

|

||||

```java
public class BulkPublisher {
  private static final int NUM_MESSAGES = 10;
  private static final String TOPIC_NAME = "bulkpublishtesting";
  private static final String PUBSUB_NAME = "messagebus";

  ///...
  public static void main(String[] args) throws Exception {
    OpenTelemetry openTelemetry = OpenTelemetryConfig.createOpenTelemetry();
    Tracer tracer = openTelemetry.getTracer(BulkPublisher.class.getCanonicalName());
    Span span = tracer.spanBuilder("Bulk Publisher's Main").setSpanKind(Span.Kind.CLIENT).startSpan();
    try (DaprPreviewClient client = (new DaprClientBuilder()).buildPreviewClient()) {
      DaprClient c = (DaprClient) client;
      c.waitForSidecar(10000);
      try (Scope scope = span.makeCurrent()) {
        System.out.println("Using preview client...");
        List<String> messages = new ArrayList<>();
        System.out.println("Constructing the list of messages to publish");
        for (int i = 0; i < NUM_MESSAGES; i++) {
          String message = String.format("This is message #%d", i);
          messages.add(message);
          System.out.println("Going to publish message : " + message);
        }
        BulkPublishResponse<?> res = client.publishEvents(PUBSUB_NAME, TOPIC_NAME, "text/plain", messages)
            .subscriberContext(getReactorContext()).block();
        System.out.println("Published the set of messages in a single call to Dapr");
        if (res != null) {
          if (res.getFailedEntries().size() > 0) {
            // Ideally this condition will not happen in examples
            System.out.println("Some events failed to be published");
            for (BulkPublishResponseFailedEntry<?> entry : res.getFailedEntries()) {
              System.out.println("EntryId : " + entry.getEntry().getEntryId()
                  + " Error message : " + entry.getErrorMessage());
            }
          }
        } else {
          throw new Exception("null response from dapr");
        }
      }
      // Close the span.
      span.end();
      // Allow plenty of time for Dapr to export all relevant spans to the tracing infra.
      Thread.sleep(10000);
      // Shutdown the OpenTelemetry tracer.
      OpenTelemetrySdk.getGlobalTracerManagement().shutdown();

      System.out.println("Done");
    }
  }
}
```

|

||||

The code uses the `DaprPreviewClient` created by the `DaprClientBuilder` for the `publishEvents` (BulkPublish) preview API.

|

||||

|

||||

When the `publishEvents` call is made, one of the arguments to the method is the content type of the data, which is `text/plain` in this example.

|

||||

When parsing and printing the response, note the concept of an entry ID, which is either generated automatically or set manually when using the `BulkPublishRequest` object.

The entry ID is a request-scoped ID; in this example it is generated automatically as the index of the message in the list passed to the `publishEvents` call.

|

||||

|

||||

The response will be empty if all events are published successfully; otherwise it will contain the list of events that failed.
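
The link between entry IDs and failed entries can be illustrated without the SDK. The following is a minimal, self-contained sketch, assuming that the automatically generated entry IDs are the zero-based list indices rendered as strings. `FailedEntryLookup` and `messagesToRetry` are hypothetical names used only for illustration and are not part of the Dapr Java SDK. Given the entry IDs reported as failed, it recovers the original messages so that the caller could publish them again.

```java
import java.util.ArrayList;
import java.util.List;

public class FailedEntryLookup {

  // Hypothetical helper, not part of the Dapr SDK: maps failed entry IDs back to messages.
  // Assumption: each entry ID is the zero-based index of the message in the published list.
  public static List<String> messagesToRetry(List<String> messages, List<String> failedEntryIds) {
    List<String> retry = new ArrayList<>();
    for (String entryId : failedEntryIds) {
      int index = Integer.parseInt(entryId);
      retry.add(messages.get(index));
    }
    return retry;
  }

  public static void main(String[] args) {
    List<String> messages = new ArrayList<>();
    for (int i = 0; i < 3; i++) {
      messages.add(String.format("This is message #%d", i));
    }
    // No failed entry IDs: every event was published, nothing to retry.
    System.out.println(messagesToRetry(messages, List.of()));
    // Entry "1" failed, so only the second message needs to be published again.
    System.out.println(messagesToRetry(messages, List.of("1")));
  }
}
```

In the real example the failed IDs would come from `entry.getEntry().getEntryId()` for each element of `res.getFailedEntries()`; whether and how to retry is left to the application.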

|

||||

|

||||

The code also shows the scenario where it is possible to start tracing in code and pass on that tracing context to Dapr.

|

||||

|

||||

The `CloudEventBulkPublisher.java` file shows how the same can be accomplished if the application must send a CloudEvent object instead of relying on Dapr's automatic CloudEvent "wrapping".

|

||||

In this case, the application **MUST** set the content type of each entry to `CloudEvent.CONTENT_TYPE`, so the Dapr sidecar knows that the payload is already a CloudEvent object.

|

||||

|

||||

```java
public class CloudEventBulkPublisher {
  ///...
  public static void main(String[] args) throws Exception {
    try (DaprPreviewClient client = (new DaprClientBuilder()).buildPreviewClient()) {
      // Construct the entries; each entry carries its own entry ID and content type
      List<BulkPublishEntry<CloudEvent<Map<String, String>>>> entries = new ArrayList<>();
      for (int i = 0; i < NUM_MESSAGES; i++) {
        CloudEvent<Map<String, String>> cloudEvent = new CloudEvent<>();
        cloudEvent.setId(UUID.randomUUID().toString());
        cloudEvent.setType("example");
        cloudEvent.setSpecversion("1");
        cloudEvent.setDatacontenttype("application/json");
        String val = String.format("This is message #%d", i);
        cloudEvent.setData(new HashMap<>() {
          {
            put("dataKey", val);
          }
        });
        BulkPublishEntry<CloudEvent<Map<String, String>>> entry = new BulkPublishEntry<>(
            "" + (i + 1), cloudEvent, CloudEvent.CONTENT_TYPE, null);
        entries.add(entry);
      }

      // Construct request
      BulkPublishRequest<CloudEvent<Map<String, String>>> request = new BulkPublishRequest<>(PUBSUB_NAME, TOPIC_NAME,
          entries);

      // Publish events
      BulkPublishResponse<?> res = client.publishEvents(request).block();
      if (res != null) {
        if (res.getFailedEntries().size() > 0) {
          // Ideally this condition will not happen in examples
          System.out.println("Some events failed to be published");
          for (BulkPublishResponseFailedEntry<?> entry : res.getFailedEntries()) {
            System.out.println("EntryId : " + entry.getEntry().getEntryId()
                + " Error message : " + entry.getErrorMessage());
          }
        }
      } else {
        throw new Exception("null response");
      }
      System.out.println("Done");
    }
  }
}
```

|

||||

|

||||

Use the following command to execute the BulkPublisher example:

|

||||

|

||||

<!-- STEP

|

||||

name: Run Bulk Publisher

|

||||

match_order: sequential

|

||||

expected_stdout_lines:

|

||||

- '== APP == Published the set of messages in a single call to Dapr'

|

||||

background: true

|

||||

sleep: 20

|

||||

-->

|

||||

|

||||

```bash

|

||||

dapr run --components-path ./components/pubsub --app-id bulk-publisher -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.BulkPublisher

|

||||

```

|

||||

<!-- END_STEP -->

|

||||

|

||||

|

||||

Once running, the BulkPublisher should print the output as follows:

|

||||

|

||||

```txt
✅ You're up and running! Both Dapr and your app logs will appear here.

== APP == Using preview client...
== APP == Constructing the list of messages to publish
== APP == Going to publish message : This is message #0
== APP == Going to publish message : This is message #1
== APP == Going to publish message : This is message #2
== APP == Going to publish message : This is message #3
== APP == Going to publish message : This is message #4
== APP == Going to publish message : This is message #5
== APP == Going to publish message : This is message #6
== APP == Going to publish message : This is message #7
== APP == Going to publish message : This is message #8
== APP == Going to publish message : This is message #9
== APP == Published the set of messages in a single call to Dapr
== APP == Done

```


Messages have been published in the topic.

The Subscriber started previously [here](#running-the-subscriber) should print the output as follows:


```txt
== APP == Subscriber got from bulk published topic: This is message #1
== APP == Subscriber got: {"id":"323935ed-d8db-4ea2-ba28-52352b1d1b34","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #1","data_base64":null}
== APP == Subscriber got from bulk published topic: This is message #0
== APP == Subscriber got: {"id":"bb2f4833-0473-446b-a6cc-04a36de5ac0a","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #0","data_base64":null}
== APP == Subscriber got from bulk published topic: This is message #5
== APP == Subscriber got: {"id":"07bad175-4be4-4beb-a983-4def2eba5768","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #5","data_base64":null}
== APP == Subscriber got from bulk published topic: This is message #6
== APP == Subscriber got: {"id":"b99fba4d-732a-4d18-bf10-b37916dedfb1","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #6","data_base64":null}
== APP == Subscriber got from bulk published topic: This is message #2
== APP == Subscriber got: {"id":"2976f254-7859-449e-b66c-57fab4a72aef","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #2","data_base64":null}
== APP == Subscriber got from bulk published topic: This is message #3
== APP == Subscriber got: {"id":"f21ff2b5-4842-481d-9a96-e4c299d1c463","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #3","data_base64":null}
== APP == Subscriber got from bulk published topic: This is message #4
== APP == Subscriber got: {"id":"4bf50438-e576-4f5f-bb40-bd31c716ad02","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #4","data_base64":null}
== APP == Subscriber got from bulk published topic: This is message #7
== APP == Subscriber got: {"id":"f0c8b53b-7935-478e-856b-164d329d25ab","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #7","data_base64":null}
== APP == Subscriber got from bulk published topic: This is message #9
== APP == Subscriber got: {"id":"b280569f-cc29-471f-9cb7-682d8d6bd553","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #9","data_base64":null}
== APP == Subscriber got from bulk published topic: This is message #8
== APP == Subscriber got: {"id":"df20d841-296e-4c6b-9dcb-dd17920538e7","source":"bulk-publisher","type":"com.dapr.event.sent","specversion":"1.0","datacontenttype":"text/plain","data":"This is message #8","data_base64":null}
```


> Note: The Redis pubsub component does not have a native bulk publish implementation and uses the Dapr runtime's default bulk publish implementation, which is concurrent. The order in which the events are published is therefore not guaranteed.
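
The out-of-order subscriber output above follows from that concurrency. A minimal sketch of the effect, using plain `CompletableFuture` tasks rather than Dapr (the `UnorderedDelivery` class and its methods are hypothetical names for illustration), shows why a consumer should compare received messages as a set or by ID instead of relying on arrival order:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical illustration; this does not call Dapr or Redis.
final class UnorderedDelivery {
  // Delivers each message on its own asynchronous task, so the order in
  // which messages land in the queue is not guaranteed.
  static List<String> deliverConcurrently(List<String> messages) {
    ConcurrentLinkedQueue<String> received = new ConcurrentLinkedQueue<>();
    List<CompletableFuture<Void>> tasks = new ArrayList<>();
    for (String message : messages) {
      tasks.add(CompletableFuture.runAsync(() -> received.add(message)));
    }
    // Wait until every task has finished before reading the queue.
    CompletableFuture.allOf(tasks.toArray(new CompletableFuture[0])).join();
    return new ArrayList<>(received);
  }

  // Compares two deliveries while ignoring arrival order.
  static boolean sameMessages(List<String> expected, List<String> actual) {
    List<String> a = new ArrayList<>(expected);
    List<String> b = new ArrayList<>(actual);
    Collections.sort(a);
    Collections.sort(b);
    return a.equals(b);
  }

  public static void main(String[] args) {
    List<String> sent = new ArrayList<>();
    for (int i = 0; i < 10; i++) {
      sent.add(String.format("This is message #%d", i));
    }
    List<String> received = deliverConcurrently(sent);
    // Arrival order may differ between runs; the content should not.
    System.out.println("Arrival order: " + received);
    System.out.println("Same messages: " + sameMessages(sent, received));
  }
}
```

If strict ordering matters, one option is to include a sequence number in each event and reorder on the subscriber side; whether that is appropriate depends on the pubsub component in use.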


Messages have been retrieved from the topic.

### Bulk Subscription

You can also run the publisher to publish messages to the `testingtopicbulk` topic and receive them using the bulk subscription.


<!-- STEP

|

||||

|

|

@ -300,12 +492,12 @@ sleep: 15

|

|||

-->

|

||||

|

||||

```bash

|

||||

dapr run --components-path ./components/pubsub --app-id publisher -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.Publisher testingtopicbulk

|

||||

dapr run --components-path ./components/pubsub --app-id publisher -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.Publisher testingtopicbulk

|

||||

```

|

||||

|

||||

<!-- END_STEP -->

|

||||

|

||||

Once running, the Publisher should print the same output as above. The Subscriber should print the output as follows:

|

||||

Once running, the Publisher should print the same output as seen [above](#running-the-publisher). The Subscriber should print the output as follows:

|

||||

|

||||

```txt

|

||||

== APP == Bulk Subscriber got 10 messages.

|

||||

|

|

@ -346,10 +538,15 @@ Once you click on the tracing event, you will see the details of the call stack

|

|||

|

||||

|

||||

|

||||

|

||||

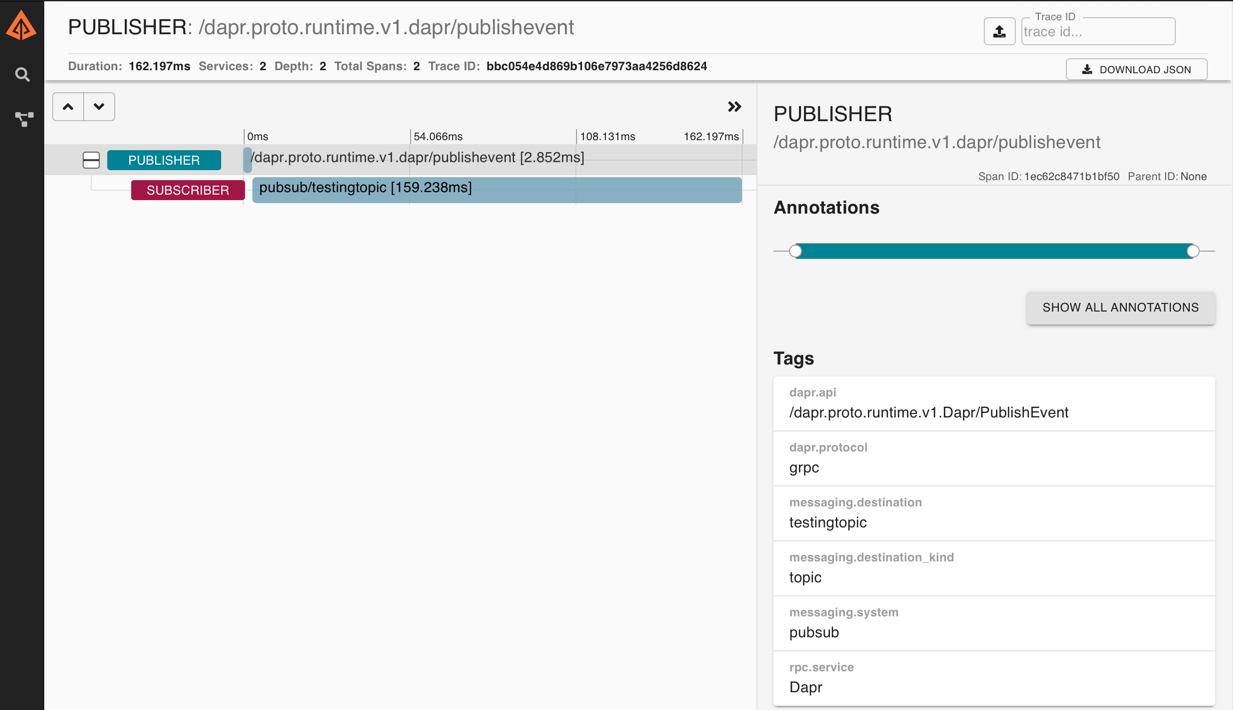

Once you click on the bulk publisher tracing event, you will see the details of the call stack starting in the client and then showing the service API calls right below.

|

||||

|

||||

|

||||

|

||||

If you would like to add a tracing span as a parent of the span created by Dapr, change the publisher to handle that. See `PublisherWithTracing.java` to see the difference and run it with:

|

||||

|

||||

<!-- STEP

|

||||

name: Run Publisher

|

||||

name: Run Publisher with tracing

|

||||

expected_stdout_lines:

|

||||

- '== APP == Published message: This is message #0'

|

||||

- '== APP == Published message: This is message #1'

|

||||

|

|

@ -358,7 +555,7 @@ sleep: 15

|

|||

-->

|

||||

|

||||

```bash

|

||||

dapr run --components-path ./components/pubsub --app-id publisher-tracing -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.PublisherWithTracing

|

||||

dapr run --components-path ./components/pubsub --app-id publisher-tracing -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.PublisherWithTracing

|

||||

```

|

||||

|

||||

<!-- END_STEP -->

|

||||

|

|

@ -387,12 +584,12 @@ mvn install

|

|||

|

||||

Run the publisher app:

|

||||

```sh

|

||||

dapr run --components-path ./components/pubsub --app-id publisher -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.Publisher

|

||||

dapr run --components-path ./components/pubsub --app-id publisher -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.Publisher

|

||||

```

|

||||

|

||||

Wait until all 10 messages are published like before, then wait for a few more seconds and run the subscriber app:

|

||||

```sh

|

||||

dapr run --components-path ./components/pubsub --app-id subscriber --app-port 3000 -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.Subscriber -p 3000

|

||||

dapr run --components-path ./components/pubsub --app-id subscriber --app-port 3000 -- java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.Subscriber -p 3000

|

||||

```

|

||||

|

||||

No message is consumed by the subscriber app and warning messages are emitted from the Dapr sidecar:

|

||||

|

|

@ -419,7 +616,7 @@ No message is consumed by the subscriber app and warnings messages are emitted f

|

|||

|

||||

```

|

||||

|

||||

For more details on Dapr Spring Boot integration, please refer to [Dapr Spring Boot](../../DaprApplication.java) Application implementation.

|

||||

For more details on Dapr Spring Boot integration, please refer to [Dapr Spring Boot](../DaprApplication.java) Application implementation.

|

||||

|

||||

### Cleanup

|

||||

|

||||

|

|

@ -429,6 +626,7 @@ name: Cleanup

|

|||

|

||||

```bash

|

||||

dapr stop --app-id publisher

|

||||

dapr stop --app-id bulk-publisher

|

||||

dapr stop --app-id subscriber

|

||||

```

|

||||

|

||||

|

|

@ -11,7 +11,7 @@

|

|||

limitations under the License.

|

||||

*/

|

||||

|

||||

package io.dapr.examples.pubsub.http;

|

||||

package io.dapr.examples.pubsub;

|

||||

|

||||

import io.dapr.examples.DaprApplication;

|

||||

import org.apache.commons.cli.CommandLine;

|

||||

|

|

@ -26,7 +26,7 @@ import org.apache.commons.cli.Options;

|

|||

* 2. cd [repo root]/examples

|

||||

* 3. Run the server:

|

||||

* dapr run --components-path ./components/pubsub --app-id subscriber --app-port 3000 -- \

|

||||

* java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.http.Subscriber -p 3000

|

||||

* java -jar target/dapr-java-sdk-examples-exec.jar io.dapr.examples.pubsub.Subscriber -p 3000

|

||||

*/

|

||||

public class Subscriber {

|

||||

|

||||

|

|

@ -11,7 +11,7 @@

|

|||

limitations under the License.

|

||||

*/

|

||||

|

||||

package io.dapr.examples.pubsub.http;

|

||||

package io.dapr.examples.pubsub;

|

||||

|

||||

import com.fasterxml.jackson.databind.ObjectMapper;

|

||||

import io.dapr.Rule;

|

||||

|

|

@ -79,6 +79,24 @@ public class SubscriberController {

|

|||

});

|

||||

}

|

||||

|

||||

/**

|

||||

* Handles a registered publish endpoint on this app (bulk published events).

|

||||

* @param cloudEvent The cloud event received.

|

||||

* @return A message containing the time.

|

||||

*/

|

||||

@Topic(name = "bulkpublishtesting", pubsubName = "${myAppProperty:messagebus}")

|

||||

@PostMapping(path = "/bulkpublishtesting")

|

||||

public Mono<Void> handleBulkPublishMessage(@RequestBody(required = false) CloudEvent cloudEvent) {

|

||||

return Mono.fromRunnable(() -> {

|

||||

try {

|

||||

System.out.println("Subscriber got from bulk published topic: " + cloudEvent.getData());

|

||||

System.out.println("Subscriber got: " + OBJECT_MAPPER.writeValueAsString(cloudEvent));

|

||||

} catch (Exception e) {

|

||||

throw new RuntimeException(e);

|

||||

}

|

||||

});

|

||||

}

|

||||

|

||||

/**

|

||||

* Handles a registered subscribe endpoint on this app using bulk subscribe.

|

||||

*

|

||||

Binary file not shown.

|

After Width: | Height: | Size: 2.1 MiB |

|

|

@ -17,6 +17,10 @@ import com.fasterxml.jackson.core.JsonProcessingException;

|

|||

import com.fasterxml.jackson.databind.ObjectMapper;

|

||||

import io.dapr.client.DaprClient;

|

||||

import io.dapr.client.DaprClientBuilder;

|

||||

import io.dapr.client.DaprPreviewClient;

|

||||

import io.dapr.client.domain.BulkPublishEntry;

|

||||

import io.dapr.client.domain.BulkPublishRequest;

|

||||

import io.dapr.client.domain.BulkPublishResponse;

|

||||

import io.dapr.client.domain.BulkSubscribeAppResponse;

|

||||

import io.dapr.client.domain.BulkSubscribeAppResponseEntry;

|

||||

import io.dapr.client.domain.BulkSubscribeAppResponseStatus;

|

||||

|

|

@ -51,9 +55,9 @@ import static io.dapr.it.Retry.callWithRetry;

|

|||

import static io.dapr.it.TestUtils.assertThrowsDaprException;

|

||||

import static org.junit.Assert.assertArrayEquals;

|

||||

import static org.junit.Assert.assertEquals;

|

||||

import static org.junit.Assert.assertNotNull;

|

||||

import static org.junit.Assert.assertNull;

|

||||

import static org.junit.Assert.assertTrue;

|

||||

import static org.junit.Assert.assertNotNull;

|

||||

|

||||

|

||||

@RunWith(Parameterized.class)

|

||||

|

|

@ -71,6 +75,8 @@ public class PubSubIT extends BaseIT {

|

|||

private static final String PUBSUB_NAME = "messagebus";

|

||||

//The title of the topic to be used for publishing

|

||||

private static final String TOPIC_NAME = "testingtopic";

|

||||

|

||||

private static final String TOPIC_BULK = "testingbulktopic";

|

||||

private static final String TYPED_TOPIC_NAME = "typedtestingtopic";

|

||||

private static final String ANOTHER_TOPIC_NAME = "anothertopic";

|

||||

// Topic used for TTL test

|

||||

|

|

@ -138,6 +144,162 @@ public class PubSubIT extends BaseIT {

|

|||

}

|

||||

}

|

||||

|

||||

@Test

|

||||

public void testBulkPublishPubSubNotFound() throws Exception {

|

||||

DaprRun daprRun = closeLater(startDaprApp(

|

||||

this.getClass().getSimpleName(),

|

||||

60000));

|

||||

if (this.useGrpc) {

|

||||

daprRun.switchToGRPC();

|

||||

} else {

|

||||

// No HTTP implementation for bulk publish

|

||||

System.out.println("no HTTP impl for bulkPublish");

|

||||

return;

|

||||

}

|

||||

|

||||

try (DaprPreviewClient client = new DaprClientBuilder().buildPreviewClient()) {

|

||||

assertThrowsDaprException(

|

||||

"INVALID_ARGUMENT",

|

||||

"INVALID_ARGUMENT: pubsub unknown pubsub not found",

|

||||

() -> client.publishEvents("unknown pubsub", "mytopic","text/plain", "message").block());

|

||||

}

|

||||

}

|

||||

|

||||

@Test

|

||||

public void testBulkPublish() throws Exception {

|

||||

final DaprRun daprRun = closeLater(startDaprApp(

|

||||

this.getClass().getSimpleName(),

|

||||

SubscriberService.SUCCESS_MESSAGE,

|

||||

SubscriberService.class,

|

||||

true,

|

||||

60000));

|

||||

// At this point, it is guaranteed that the service above is running and all ports being listened to.

|

||||

if (this.useGrpc) {

|

||||

daprRun.switchToGRPC();

|

||||

} else {

|

||||

System.out.println("HTTP BulkPublish is not implemented. So skipping tests");

|

||||

return;

|

||||

}

|

||||

DaprObjectSerializer serializer = new DaprObjectSerializer() {

|

||||

@Override

|

||||

public byte[] serialize(Object o) throws JsonProcessingException {

|

||||

return OBJECT_MAPPER.writeValueAsBytes(o);

|

||||

}

|

||||

|

||||

@Override

|

||||

public <T> T deserialize(byte[] data, TypeRef<T> type) throws IOException {

|

||||

return (T) OBJECT_MAPPER.readValue(data, OBJECT_MAPPER.constructType(type.getType()));

|

||||

}

|

||||

|

||||

@Override

|

||||

public String getContentType() {

|

||||

return "application/json";

|

||||

}

|

||||

};

|

||||

try (DaprClient client = new DaprClientBuilder().withObjectSerializer(serializer).build();

|

||||

DaprPreviewClient previewClient = new DaprClientBuilder().withObjectSerializer(serializer).buildPreviewClient()) {

|

||||

// Only for the gRPC test

|

||||

// Send multiple messages on one topic in messagebus pubsub via publishEvents API.

|

||||

List<String> messages = new ArrayList<>();

|

||||

for (int i = 0; i < NUM_MESSAGES; i++) {

|

||||

messages.add(String.format("This is message #%d on topic %s", i, TOPIC_BULK));

|

||||

}

|

||||

//Publishing 10 messages

|

||||

BulkPublishResponse response = previewClient.publishEvents(PUBSUB_NAME, TOPIC_BULK, "", messages).block();

|

||||

System.out.println(String.format("Published %d messages to topic '%s' pubsub_name '%s'",

|

||||

NUM_MESSAGES, TOPIC_BULK, PUBSUB_NAME));

|

||||

assertNotNull("expected not null bulk publish response", response);

|

||||

Assert.assertEquals("expected no failures in the response", 0, response.getFailedEntries().size());

|

||||

|

||||

//Publishing an object.

|

||||

MyObject object = new MyObject();

|

||||

object.setId("123");

|

||||

response = previewClient.publishEvents(PUBSUB_NAME, TOPIC_BULK,

|

||||

"application/json", Collections.singletonList(object)).block();

|

||||

System.out.println("Published one object.");

|

||||

assertNotNull("expected not null bulk publish response", response);

|

||||

Assert.assertEquals("expected no failures in the response", 0, response.getFailedEntries().size());

|

||||

|

||||

//Publishing a single byte: Example of non-string based content published

|

||||

response = previewClient.publishEvents(PUBSUB_NAME, TOPIC_BULK, "",

|

||||

Collections.singletonList(new byte[]{1})).block();

|

||||

System.out.println("Published one byte.");

|

||||

|

||||

assertNotNull("expected not null bulk publish response", response);

|

||||

Assert.assertEquals("expected no failures in the response", 0, response.getFailedEntries().size());

|

||||

|

||||

CloudEvent cloudEvent = new CloudEvent();

|

||||

cloudEvent.setId("1234");

|

||||

cloudEvent.setData("message from cloudevent");

|

||||

cloudEvent.setSource("test");

|

||||

cloudEvent.setSpecversion("1");

|

||||

cloudEvent.setType("myevent");

|

||||

cloudEvent.setDatacontenttype("text/plain");

|

||||

BulkPublishRequest<CloudEvent> req = new BulkPublishRequest<>(PUBSUB_NAME, TOPIC_BULK,

|

||||

Collections.singletonList(

|

||||

new BulkPublishEntry<>("1", cloudEvent, "application/cloudevents+json", null)

|

||||

));

|

||||

|

||||

//Publishing a cloud event.

|

||||

response = previewClient.publishEvents(req).block();

|

||||

assertNotNull("expected not null bulk publish response", response);

|

||||

Assert.assertEquals("expected no failures in the response", 0, response.getFailedEntries().size());

|

||||

|

||||

System.out.println("Published one cloud event.");

|

||||

|

||||

// Introduce sleep

|

||||

Thread.sleep(10000);

|

||||

|

||||

// Check messagebus subscription for topic testingbulktopic since it is populated only by publishEvents API call

|

||||

callWithRetry(() -> {

|

||||

System.out.println("Checking results for topic " + TOPIC_BULK + " in pubsub " + PUBSUB_NAME);

|

||||

// Validate text payload.

|

||||

final List<CloudEvent> cloudEventMessages = client.invokeMethod(

|

||||

daprRun.getAppName(),

|

||||

"messages/redis/testingbulktopic",

|

||||

null,

|

||||

HttpExtension.GET,

|

||||

CLOUD_EVENT_LIST_TYPE_REF).block();

|

||||

assertEquals("expected 13 messages to be received on subscribe", 13, cloudEventMessages.size());

|

||||

for (int i = 0; i < NUM_MESSAGES; i++) {

|

||||

final int messageId = i;

|

||||

assertTrue("expected data content to match", cloudEventMessages

|

||||

.stream()

|

||||

.filter(m -> m.getData() != null)

|

||||

.map(m -> m.getData())

|

||||

.filter(m -> m.equals(String.format("This is message #%d on topic %s", messageId, TOPIC_BULK)))

|

||||

.count() == 1);

|

||||

}

|

||||

|

||||

// Validate object payload.

|

||||

assertTrue("expected data content 123 to match", cloudEventMessages

|

||||

.stream()

|

||||

.filter(m -> m.getData() != null)

|

||||

.filter(m -> m.getData() instanceof LinkedHashMap)

|

||||

.map(m -> (LinkedHashMap) m.getData())

|

||||

.filter(m -> "123".equals(m.get("id")))

|

||||

.count() == 1);

|

||||

|

||||

// Validate byte payload.

|

||||

assertTrue("expected bin data to match", cloudEventMessages

|

||||

.stream()

|

||||

.filter(m -> m.getData() != null)

|

||||

.map(m -> m.getData())

|

||||

.filter(m -> "AQ==".equals(m))

|

||||

.count() == 1);

|

||||

|

||||

// Validate cloudevent payload.

|

||||

assertTrue("expected data to match",cloudEventMessages

|

||||

.stream()

|

||||

.filter(m -> m.getData() != null)

|

||||

.map(m -> m.getData())

|

||||

.filter(m -> "message from cloudevent".equals(m))

|

||||

.count() == 1);

|

||||

}, 2000);

|

||||

}

|

||||

|

||||

}

|

||||

|

||||

@Test

|

||||

public void testPubSub() throws Exception {

|

||||

final DaprRun daprRun = closeLater(startDaprApp(

|

||||

|

|

@ -589,7 +751,7 @@ public class PubSubIT extends BaseIT {

|

|||

"messages/testinglongvalues",

|

||||

null,

|

||||

HttpExtension.GET, CLOUD_EVENT_LONG_LIST_TYPE_REF).block();

|

||||

Assert.assertNotNull(messages);

|

||||

assertNotNull(messages);

|

||||

for (CloudEvent<ConvertToLong> message : messages) {

|

||||

actual.add(message.getData());

|

||||

}

|

||||

|

|

|

|||

|

|

@ -44,11 +44,18 @@ public class SubscriberController {

|

|||

return messagesByTopic.getOrDefault(topic, Collections.emptyList());

|

||||

}

|

||||

|

||||

private static final List<CloudEvent> messagesReceivedBulkPublishTopic = new ArrayList();

|

||||

private static final List<CloudEvent> messagesReceivedTestingTopic = new ArrayList();

|

||||

private static final List<CloudEvent> messagesReceivedTestingTopicV2 = new ArrayList();

|

||||

private static final List<CloudEvent> messagesReceivedTestingTopicV3 = new ArrayList();

|

||||

private static final List<BulkSubscribeAppResponse> responsesReceivedTestingTopicBulkSub = new ArrayList<>();

|

||||

|

||||

@GetMapping(path = "/messages/redis/testingbulktopic")

|

||||

public List<CloudEvent> getMessagesReceivedBulkTopic() {

|

||||

return messagesReceivedBulkPublishTopic;

|

||||

}

|

||||

|

||||

|

||||

private static final List<BulkSubscribeAppResponse> responsesReceivedTestingTopicBulk = new ArrayList<>();

|

||||

|

||||

@GetMapping(path = "/messages/testingtopic")

|

||||

public List<CloudEvent> getMessagesReceivedTestingTopic() {

|

||||

|

|

@ -67,7 +74,7 @@ public class SubscriberController {

|

|||

|

||||

@GetMapping(path = "/messages/topicBulkSub")

|

||||

public List<BulkSubscribeAppResponse> getMessagesReceivedTestingTopicBulkSub() {

|

||||

return responsesReceivedTestingTopicBulk;

|

||||

return responsesReceivedTestingTopicBulkSub;

|

||||

}

|

||||

|

||||

@Topic(name = "testingtopic", pubsubName = "messagebus")

|

||||

|

|

@ -85,6 +92,21 @@ public class SubscriberController {

|

|||

});

|

||||

}

|

||||

|

||||

@Topic(name = "testingbulktopic", pubsubName = "messagebus")

|

||||

@PostMapping("/route1_redis")

|

||||

public Mono<Void> handleBulkTopicMessage(@RequestBody(required = false) CloudEvent envelope) {

|

||||

return Mono.fromRunnable(() -> {

|

||||

try {

|

||||

String message = envelope.getData() == null ? "" : envelope.getData().toString();

|

||||

String contentType = envelope.getDatacontenttype() == null ? "" : envelope.getDatacontenttype();

|

||||

System.out.println("Testing bulk publish topic Subscriber got message: " + message + "; Content-type: " + contentType);

|

||||

messagesReceivedBulkPublishTopic.add(envelope);

|

||||

} catch (Exception e) {

|

||||

throw new RuntimeException(e);

|

||||

}

|

||||

});

|

||||

}

|

||||

|

||||

@Topic(name = "testingtopic", pubsubName = "messagebus",

|

||||

rule = @Rule(match = "event.type == 'myevent.v2'", priority = 2))

|

||||

@PostMapping(path = "/route1_v2")

|

||||

|

|

@ -205,7 +227,7 @@ public class SubscriberController {

|

|||

return Mono.fromCallable(() -> {

|

||||

if (bulkMessage.getEntries().size() == 0) {

|

||||

BulkSubscribeAppResponse response = new BulkSubscribeAppResponse(new ArrayList<>());

|

||||

responsesReceivedTestingTopicBulk.add(response);

|

||||

responsesReceivedTestingTopicBulkSub.add(response);

|

||||

return response;

|

||||

}

|

||||

|

||||

|

|

@ -219,7 +241,7 @@ public class SubscriberController {

|

|||

}

|

||||

}

|

||||

BulkSubscribeAppResponse response = new BulkSubscribeAppResponse(entries);

|

||||

responsesReceivedTestingTopicBulk.add(response);

|

||||

responsesReceivedTestingTopicBulkSub.add(response);

|

||||

return response;

|

||||

});

|

||||

}

|

||||

|

|

|

|||

|

|

@ -14,6 +14,9 @@ limitations under the License.

|

|||

package io.dapr.client;

|

||||

|

||||

import com.fasterxml.jackson.databind.ObjectMapper;

|

||||

import io.dapr.client.domain.BulkPublishEntry;

|

||||

import io.dapr.client.domain.BulkPublishRequest;

|

||||

import io.dapr.client.domain.BulkPublishResponse;

|

||||

import io.dapr.client.domain.ConfigurationItem;

|

||||

import io.dapr.client.domain.DeleteStateRequest;

|

||||

import io.dapr.client.domain.ExecuteStateTransactionRequest;

|

||||

|

|

@ -42,6 +45,7 @@ import io.dapr.utils.TypeRef;

|

|||

import reactor.core.publisher.Flux;

|

||||

import reactor.core.publisher.Mono;

|

||||

|

||||

import java.util.ArrayList;

|

||||

import java.util.Arrays;

|

||||

import java.util.Collections;

|

||||

import java.util.List;

|

||||

|

|

@ -392,6 +396,50 @@ abstract class AbstractDaprClient implements DaprClient, DaprPreviewClient {

|

|||

return this.queryState(request, TypeRef.get(clazz));

|

||||

}

|

||||

|

||||

/**

|

||||

* {@inheritDoc}

|

||||

*/

|

||||

@Override

|

||||

public <T> Mono<BulkPublishResponse<T>> publishEvents(String pubsubName, String topicName, String contentType,

|

||||

List<T> events) {

|

||||

return publishEvents(pubsubName, topicName, contentType, null, events);

|

||||

}

|

||||

|

||||

/**

|

||||

* {@inheritDoc}

|

||||

*/

|

||||

@Override

|

||||

public <T> Mono<BulkPublishResponse<T>> publishEvents(String pubsubName, String topicName, String contentType,

|

||||

T... events) {

|

||||

return publishEvents(pubsubName, topicName, contentType, null, events);

|

||||

}

|

||||

|

||||

/**

|

||||

* {@inheritDoc}

|

||||

*/

|

||||

@Override

|

||||

public <T> Mono<BulkPublishResponse<T>> publishEvents(String pubsubName, String topicName, String contentType,

|

||||

Map<String, String> requestMetadata, T... events) {

|

||||

return publishEvents(pubsubName, topicName, contentType, requestMetadata, Arrays.asList(events));

|

||||

}

|

||||

|

||||

/**

|

||||

* {@inheritDoc}

|

||||

*/

|

||||

@Override

|

||||

public <T> Mono<BulkPublishResponse<T>> publishEvents(String pubsubName, String topicName, String contentType,

|

||||

Map<String, String> requestMetadata, List<T> events) {

|

||||

if (events == null || events.size() == 0) {

|

||||

throw new IllegalArgumentException("list of events cannot be null or empty");

|

||||

}

|

||||

List<BulkPublishEntry<T>> entries = new ArrayList<>();

|

||||

for (int i = 0; i < events.size(); i++) {

|

||||

// entryID field is generated based on order of events in the request

|

||||

entries.add(new BulkPublishEntry<>("" + i, events.get(i), contentType, null));

|

||||

}

|

||||

return publishEvents(new BulkPublishRequest<>(pubsubName, topicName, entries, requestMetadata));

|

||||

}

|

||||

|

||||

/**

|

||||

* {@inheritDoc}

|

||||

*/

|

||||

|

|

|

|||

|

|

@ -17,6 +17,10 @@ import com.google.common.base.Strings;

|

|||

import com.google.protobuf.Any;

|

||||

import com.google.protobuf.ByteString;

|

||||

import com.google.protobuf.Empty;

|

||||

import io.dapr.client.domain.BulkPublishEntry;

|

||||

import io.dapr.client.domain.BulkPublishRequest;

|

||||

import io.dapr.client.domain.BulkPublishResponse;

|

||||

import io.dapr.client.domain.BulkPublishResponseFailedEntry;

|

||||

import io.dapr.client.domain.ConfigurationItem;

|

||||

import io.dapr.client.domain.DeleteStateRequest;

|

||||

import io.dapr.client.domain.ExecuteStateTransactionRequest;

|

||||

|

|

@ -44,6 +48,8 @@ import io.dapr.config.Properties;

|

|||

import io.dapr.exceptions.DaprException;

|

||||

import io.dapr.internal.opencensus.GrpcWrapper;

|

||||

import io.dapr.serializer.DaprObjectSerializer;

|

||||

import io.dapr.serializer.DefaultObjectSerializer;

|

||||

import io.dapr.utils.DefaultContentTypeConverter;

|

||||

import io.dapr.utils.NetworkUtils;

|

||||

import io.dapr.utils.TypeRef;

|

||||

import io.dapr.v1.CommonProtos;

|

||||

|

|

@ -65,6 +71,7 @@ import reactor.util.context.Context;

|

|||

|

||||

import java.io.Closeable;

|

||||

import java.io.IOException;

|

||||

import java.util.ArrayList;

|

||||

import java.util.Collections;

|

||||

import java.util.HashMap;

|

||||

import java.util.Iterator;

|

||||

|

|

@ -185,6 +192,93 @@ public class DaprClientGrpc extends AbstractDaprClient {

|

|||

}

|

||||

}

|

||||

|

||||

/**

|

||||

*

|

||||

* {@inheritDoc}

|

||||

*/

|

||||

@Override

|

||||

public <T> Mono<BulkPublishResponse<T>> publishEvents(BulkPublishRequest<T> request) {

|

||||

try {

|

||||

String pubsubName = request.getPubsubName();

|

||||