This update improves the animation for streaming a diff of changes when AI helper proofread is triggered. It shows the original text with diff changes live instead of after the fact.

This PR addresses a bug where uploads weren't being cleared after successfully posting a new private message in the AI bot conversations interface. Here's what the changes do:

## Main Fix:

- Makes the `prepareAndSubmitToBot()` method async and adds proper error handling

- Adds `this.uploads.clear()` after successful submission to clear all uploads

- Adds a test to verify that the "New Question" button properly resets the UI with no uploads
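
The main fix can be sketched roughly as follows. This is a minimal illustration, not the plugin's actual code: the `ConversationInput` class, the injected `submit` callback, and the `onError` handler are hypothetical names. Only `prepareAndSubmitToBot()` and `this.uploads.clear()` come from the description above.

```javascript
// Hypothetical stand-in for the object that owns the composer state.
class ConversationInput {
  constructor({ submit, onError }) {
    this.submit = submit; // assumed: async function that posts the PM
    this.onError = onError; // assumed: error handler, e.g. shows a dialog
    this.uploads = new Set(); // assumed: any collection with a clear() method
    this.text = "";
  }

  async prepareAndSubmitToBot() {
    try {
      await this.submit({ text: this.text, uploads: [...this.uploads] });
      // Only reached when the submission succeeded.
      this.uploads.clear();
      return true;
    } catch (error) {
      // On failure the uploads are kept so the user can retry.
      this.onError(error);
      return false;
    }
  }
}
```

Clearing inside the `try` block, after the awaited call, is what ties the reset to a successful post rather than to the button click.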

## Additional Improvements:

1. **Dynamic Character Length Validation**:

- Uses `siteSettings.min_personal_message_post_length` instead of hardcoded 10 characters

- Updates the error message to show the dynamic character count

- Adds proper pluralization in the localization file for the error message

2. **Bug Fixes**:

- Adds null checks with optional chaining (`link?.topic?.id`) in the sidebar code to prevent potential errors

3. **Code Organization**:

- Moves error handling from the service to the controller for better separation of concerns
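
As a rough sketch of the first two improvements: the helper names `validateMessageLength` and `topicIdFor`, the error string, and the trimming of whitespace are assumptions made for illustration. The setting name and the `link?.topic?.id` expression are taken from the list above.

```javascript
// `siteSettings` is a plain object here; in the app it would be the injected service.
function validateMessageLength(text, siteSettings) {
  const minLength = siteSettings.min_personal_message_post_length;
  const length = text.trim().length; // assumed: surrounding whitespace is ignored

  if (length < minLength) {
    // Hypothetical message; the real, pluralized string lives in the localization file.
    const unit = minLength === 1 ? "character" : "characters";
    return {
      valid: false,
      error: `Message must be at least ${minLength} ${unit}`,
    };
  }
  return { valid: true, error: null };
}

// Optional chaining yields undefined instead of throwing when a link has no topic.
function topicIdFor(link) {
  return link?.topic?.id;
}
```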

This commit introduces file upload capabilities to the AI Bot conversations interface and improves the overall dedicated UX. It also changes the experimental setting to a more permanent one.

## Key changes:

- **File upload support**:

- Integrates UppyUpload for handling file uploads in conversations

- Adds UI for uploading, displaying, and managing attachments

- Supports drag & drop, clipboard paste, and manual file selection

- Shows upload progress indicators for in-progress uploads

- Appends uploaded file markdown to message content

- **Renamed setting**:

- Changed `ai_enable_experimental_bot_ux` to `ai_bot_enable_dedicated_ux`

- Updated setting description to be clearer

- Changed default value to `true` as this is now a stable feature

- Added migration to handle the setting name change in database

- **UI improvements**:

- Enhanced input area with better focus states

- Improved layout and styling for conversations page

- Added visual feedback for upload states

- Better error handling for uploads in progress

- **Code organization**:

- Refactored message submission logic to handle attachments

- Updated DOM element IDs for consistency

- Fixed focus management after submission

- **Added tests**:

- Tests for file upload functionality

- Tests for removing uploads before submission

- Updated existing tests to work with the renamed setting
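
To illustrate the "appends uploaded file markdown to message content" step, here is a small sketch. The function names, the list of image extensions, and the upload field names (`original_filename`, `short_url`, `width`, `height`, `extension`) are assumptions. The markdown shapes imitate the style Discourse commonly uses for uploads and may not match what this commit emits.

```javascript
// Assumed set of extensions that should render inline as images.
const IMAGE_EXTENSIONS = new Set(["png", "jpg", "jpeg", "gif", "webp"]);

function uploadMarkdown(upload) {
  const name = upload.original_filename;
  if (IMAGE_EXTENSIONS.has((upload.extension || "").toLowerCase())) {
    return `![${name}|${upload.width}x${upload.height}](${upload.short_url})`;
  }
  return `[${name}|attachment](${upload.short_url})`;
}

// Appends one markdown line per upload, separated from the text by blank lines.
function appendUploads(message, uploads) {
  if (uploads.length === 0) {
    return message;
  }
  return [message, ...uploads.map(uploadMarkdown)].join("\n\n");
}
```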

---------

Co-authored-by: awesomerobot <kris.aubuchon@discourse.org>

This commit adds an empty state when the user doesn't have any PM history. It also retains the new conversation button in the sidebar so it no longer jumps. The button's disabled state, icon, and text are all updated.

AI bots come in 2 flavors:

1. An LLM and LLM user. In this case, we should decorate posts with the persona name.

2. A persona user. In this case, in PMs, we decorate posts with the LLM name.

(2) is a significant improvement because previously, when creating a conversation, you could not tell which LLM you were talking to by simply looking at the post; you would have to scroll to the top of the page.

* lint

* translation missing

# Preview

https://github.com/user-attachments/assets/3fe3ac8f-c938-4df4-9afe-11980046944d

# Details

- Group PMs by `last_posted_at`. In this first iteration we group by `7 days`, `30 days`, then by month beyond that.

- I inject a sidebar section link with the relative (`last_posted_at`) date and then update a tracked value to ensure we don't do it again. Then for each month beyond the first 30 days, I add a value to the `loadedMonthLabels` set and we reference that (plus the year) to see if we need to load a new month label.

- I took the creative liberty to remove the `Conversations` section label - this had no purpose

- I hid the _collapse all sidebar sections_ caret. This had no purpose.

- Swap `BasicTopicSerializer` to `ListableTopicSerializer` to get access to `last_posted_at`
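
The grouping described above could look roughly like this. It is a sketch under assumptions: the label texts, the function names, and the row shape are hypothetical, and topics are assumed to arrive sorted newest first. The `seenLabels` set plays the role that `loadedMonthLabels` plays in the description.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// Returns the section label a topic falls under, relative to `now`.
function groupLabel(lastPostedAt, now) {
  const ageInDays = (now - lastPostedAt) / DAY_MS;
  if (ageInDays <= 7) return "Last 7 days";
  if (ageInDays <= 30) return "Last 30 days";
  // Beyond 30 days: one label per calendar month, qualified by year.
  const month = lastPostedAt.toLocaleString("en-US", {
    month: "long",
    timeZone: "UTC",
  });
  return `${month} ${lastPostedAt.getUTCFullYear()}`;
}

// Emits a label row only the first time a given label is needed.
function withSectionLabels(topics, now) {
  const seenLabels = new Set();
  const rows = [];
  for (const topic of topics) {
    const label = groupLabel(new Date(topic.last_posted_at), now);
    if (!seenLabels.has(label)) {
      seenLabels.add(label);
      rows.push({ type: "label", text: label });
    }
    rows.push({ type: "topic", id: topic.id });
  }
  return rows;
}
```

Including the year in the month label keeps, for example, March of two different years from collapsing into one section.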

In the last commit, I introduced a topic_custom_field to determine if a PM is indeed a bot PM.

This commit adds a migration to backfill any PM that is between 1 real user, and 1 bot. The correct topic_custom_field is added for these, so they will appear on the bot conversation sidebar properly.

We can also drop the joining to topic_users in the controller for sidebar conversations, and the isPostFromAiBot logic from the sidebar.

Overview

This PR adds a Bot Homepage, which first appeared at https://ask.discourse.org/.

Key Features:

- Add a bot homepage: /discourse-ai/ai-bot/conversations

- Display a sidebar with previous bot conversations

- Infinite scroll for large counts

- Sidebar still visible when navigation mode is header_dropdown

- Sidebar visible on homepage and bot PM show view

- Add New Question button to the bottom of sidebar on bot PM show view

- Add persona picker to homepage

In this feature update, we add the UI for the ability to easily configure persona backed AI-features. The feature will still be hidden until structured responses are complete.

This update adds the ability to disable search discoveries. This can be done through a tooltip when search discoveries are shown. It can also be done in the AI user preferences, which has also been updated to accommodate more than just the one image caption setting.

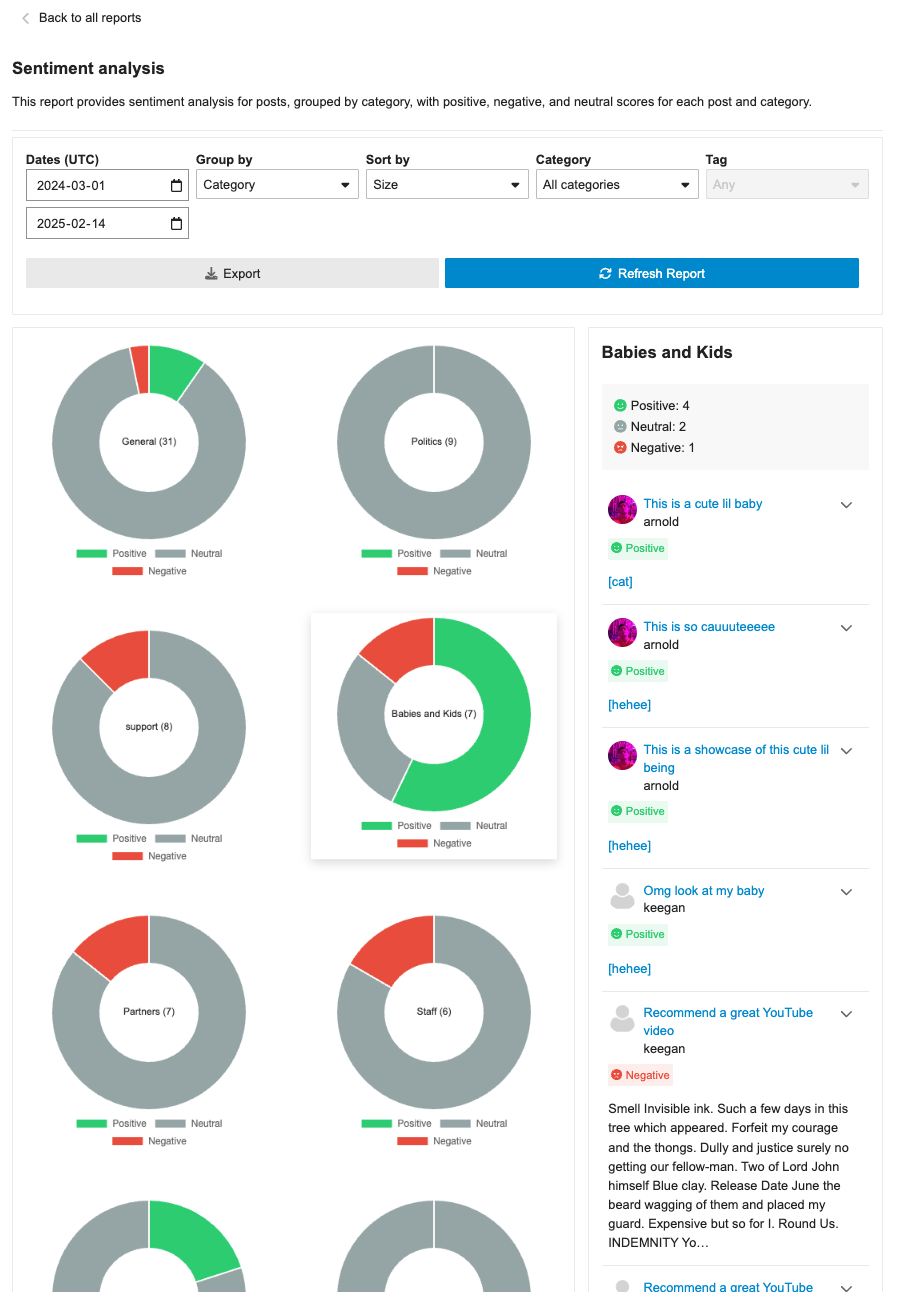

**This PR includes a variety of updates to the Sentiment Analysis report:**

- [X] Conditionally showing sentiment reports based on `sentiment_enabled` setting

- [X] Sentiment reports should only be visible in sidebar if data is in the reports

- [X] Fix infinite loading of posts in drill down

- [x] Fix markdown emojis not showing as emoji representation

- [x] Drill down of posts should have URL

- [x] ~~Different doughnut sizing based on post count~~ [reverting and will address in follow-up (see: `/t/146786/47`)]

- [X] Hide non-functional export button

- [X] Sticky drill down filter nav

## 🔍 Overview

This update adds a new report page at `admin/reports/sentiment_analysis` where admins can see a sentiment analysis report for the forum grouped by either category or tags.

## ➕ More details

The report can break down either category or tags into positive/negative/neutral sentiments based on the grouping (category/tag). Clicking on the doughnut visualization will bring up a post list of all the posts that were involved in that classification with further sentiment classifications by post.

The report can additionally be sorted in alphabetical order or by size, as well as be filtered by either category/tag based on the grouping.

## 👨🏽💻 Technical Details

The new admin report is registered via the plugin API with `api.registerReportModeComponent` to register the custom sentiment doughnut report. When a doughnut visualization is clicked, a new endpoint at `/discourse-ai/sentiment/posts` is fetched to show the posts classified by sentiment for the respective params.

## 📸 Screenshots

We have removed this flag in core. All plugins now use the "top mode" for their navigation. A backwards-compatible change has been made in core while we remove the usage from plugins.

* Use AR model for embeddings features

* endpoints

* Embeddings CRUD UI

* Add presets. Hide a couple more settings

* system specs

* Seed embedding definition from old settings

* Generate search bit index on the fly. cleanup orphaned data

* support for seeded models

* Fix run test for new embedding

* fix selected model not set correctly

Adds a comprehensive quota management system for LLM models that allows:

- Setting per-group (applied per user in the group) token and usage limits with configurable durations

- Tracking and enforcing token/usage limits across user groups

- Quota reset periods (hourly, daily, weekly, or custom)

- Admin UI for managing quotas with real-time updates

This system provides granular control over LLM API usage by allowing admins to define limits on both total tokens and number of requests per group. Supports multiple concurrent quotas per model and automatically handles quota resets.
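
A minimal sketch of how one such quota might be checked and reset is shown below. The record shapes (`maxTokens`, `maxUsages`, `durationSeconds`, `windowStartedAt`) and the function name are assumptions for illustration; the real implementation lives in the plugin's backend and may differ. How several concurrent quotas combine is not covered here.

```javascript
// quota: { maxTokens: number|null, maxUsages: number|null, durationSeconds: number }
// usage: { tokens: number, usages: number, windowStartedAt: number } (per user)
function checkQuota(quota, usage, nowSeconds) {
  // Start a fresh window when the previous one has expired (the automatic reset).
  const expired = nowSeconds - usage.windowStartedAt >= quota.durationSeconds;
  const current = expired
    ? { tokens: 0, usages: 0, windowStartedAt: nowSeconds }
    : usage;

  // A null limit means that dimension is not restricted.
  const overTokens = quota.maxTokens !== null && current.tokens >= quota.maxTokens;
  const overUsages = quota.maxUsages !== null && current.usages >= quota.maxUsages;

  return { allowed: !overTokens && !overUsages, usage: current };
}
```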

Co-authored-by: Keegan George <kgeorge13@gmail.com>

This introduces a comprehensive spam detection system that uses LLM models to automatically identify and flag potential spam posts. The system is designed to be both powerful and configurable while preventing false positives.

Key Features:

* Automatically scans first 3 posts from new users (TL0/TL1)

* Creates dedicated AI flagging user to distinguish from system flags

* Tracks false positives/negatives for quality monitoring

* Supports custom instructions to fine-tune detection

* Includes test interface for trying detection on any post

Technical Implementation:

* New database tables:

  - ai_spam_logs: Stores scan history and results

  - ai_moderation_settings: Stores LLM config and custom instructions

* Rate limiting and safeguards:

  - Minimum 10-minute delay between rescans

  - Only scans significant edits (>10 char difference)

  - Maximum 3 scans per post

  - 24-hour maximum age for scannable posts

* Admin UI features:

  - Real-time testing capabilities

  - 7-day statistics dashboard

  - Configurable LLM model selection

  - Custom instruction support

Security and Performance:

* Respects trust levels - only scans TL0/TL1 users

* Skips private messages entirely

* Stops scanning users after 3 successful public posts

* Includes comprehensive test coverage

* Maintains audit log of all scan attempts
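
The eligibility rules and safeguards listed above can be summarized in a short sketch. All names and record shapes here are hypothetical, `publicPostCount` is assumed to include the post being checked, and how the character difference of an edit is measured is left to the caller. The numeric thresholds are the ones stated in this description.

```javascript
const MAX_TRUST_LEVEL = 1; // only TL0/TL1 users are scanned
const MAX_PUBLIC_POSTS = 3; // first 3 posts only
const MAX_POST_AGE_MS = 24 * 60 * 60 * 1000;
const MAX_SCANS_PER_POST = 3;
const MIN_RESCAN_DELAY_MS = 10 * 60 * 1000;
const MIN_EDIT_DIFFERENCE = 10; // edits must differ by more than this many chars

// Decides whether a post is eligible for scanning at all.
function shouldScan(post, user, now) {
  if (post.isPrivateMessage) return false;
  if (user.trustLevel > MAX_TRUST_LEVEL) return false;
  if (user.publicPostCount > MAX_PUBLIC_POSTS) return false;
  if (now - post.createdAt > MAX_POST_AGE_MS) return false;
  return true;
}

// Decides whether an edited post may be scanned again.
// `scanTimes` holds the timestamps (ms) of earlier scans, oldest first.
function shouldRescan(scanTimes, charDifference, now) {
  if (scanTimes.length >= MAX_SCANS_PER_POST) return false;
  const lastScan = scanTimes[scanTimes.length - 1];
  if (lastScan !== undefined && now - lastScan < MIN_RESCAN_DELAY_MS) return false;
  return charDifference > MIN_EDIT_DIFFERENCE;
}
```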

---------

Co-authored-by: Keegan George <kgeorge13@gmail.com>

Co-authored-by: Martin Brennan <martin@discourse.org>

Add support for versioned artifacts with improved diff handling

* Add versioned artifacts support allowing artifacts to be updated and tracked

- New `ai_artifact_versions` table to store version history

- Support for updating artifacts through a new `UpdateArtifact` tool

- Add version-aware artifact rendering in posts

- Include change descriptions for version tracking

* Enhance artifact rendering and security

- Add support for module-type scripts and external JS dependencies

- Expand CSP to allow trusted CDN sources (unpkg, cdnjs, jsdelivr, googleapis)

- Improve JavaScript handling in artifacts

* Implement robust diff handling system (this is dormant but ready to use once LLMs catch up)

- Add new DiffUtils module for applying changes to artifacts

- Support for unified diff format with multiple hunks

- Intelligent handling of whitespace and line endings

- Comprehensive error handling for diff operations

* Update routes and UI components

- Add versioned artifact routes

- Update markdown processing for versioned artifacts
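
To give a feel for what applying a unified diff with multiple hunks involves, here is a simplified sketch. It is not the plugin's `DiffUtils` module: the function names are hypothetical, it locates each hunk by matching its context and removed lines rather than by the line numbers in the `@@` header, it tolerates only trailing-whitespace differences, and it normalizes line endings to `\n`.

```javascript
// Splits a unified diff into hunks of { search, replace } line arrays.
function parseHunks(diff) {
  const hunks = [];
  let current = null;
  for (const line of diff.replace(/\r?\n$/, "").split(/\r?\n/)) {
    if (line.startsWith("@@")) {
      current = { search: [], replace: [] };
      hunks.push(current);
    } else if (current === null) {
      continue; // file headers (---/+++) before the first hunk
    } else if (line.startsWith("-")) {
      current.search.push(line.slice(1));
    } else if (line.startsWith("+")) {
      current.replace.push(line.slice(1));
    } else {
      // Context line: present in both the old and the new text.
      const text = line.startsWith(" ") ? line.slice(1) : line;
      current.search.push(text);
      current.replace.push(text);
    }
  }
  return hunks;
}

function applyDiff(source, diff) {
  const lines = source.split(/\r?\n/);
  const same = (a, b) => a.trimEnd() === b.trimEnd();

  for (const hunk of parseHunks(diff)) {
    const lastStart = lines.length - hunk.search.length;
    let at = -1;
    for (let i = 0; i <= lastStart && at === -1; i++) {
      if (hunk.search.every((text, j) => same(lines[i + j], text))) {
        at = i;
      }
    }
    if (at === -1) {
      throw new Error("Hunk does not match the source");
    }
    lines.splice(at, hunk.search.length, ...hunk.replace);
  }
  return lines.join("\n");
}
```

Matching on content instead of header line numbers is one way to cope with LLM-produced diffs whose offsets are often inaccurate.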

Also

- Tweaks summary prompt

- Improves upload support in custom tool to also provide urls

- Added a new admin interface to track AI usage metrics, including tokens, features, and models.

- Introduced a new route `/admin/plugins/discourse-ai/ai-usage` and supporting API endpoint in `AiUsageController`.

- Implemented `AiUsageSerializer` for structuring AI usage data.

- Integrated CSS stylings for charts and tables under `stylesheets/modules/llms/common/usage.scss`.

- Enhanced backend with `AiApiAuditLog` model changes: added `cached_tokens` column (implemented with OpenAI for now) with relevant DB migration and indexing.

- Created `Report` module for efficient aggregation and filtering of AI usage metrics.

- Updated AI Bot title generation logic to log correctly to user vs bot

- Extended test coverage for the new tracking features, ensuring data consistency and access controls.
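
The kind of aggregation the usage report performs can be illustrated with a small sketch. The real `Report` module runs on the backend; the function name and the log field names used here (`feature_name`, `language_model`, `request_tokens`, `response_tokens`, `cached_tokens`) are assumptions for illustration.

```javascript
// Sums request counts and token usage per value of `groupBy`
// (for example "feature_name" or "language_model").
function summarizeUsage(logs, groupBy) {
  const totals = new Map();
  for (const log of logs) {
    const key = log[groupBy];
    const row = totals.get(key) ?? {
      requests: 0,
      requestTokens: 0,
      responseTokens: 0,
      cachedTokens: 0,
    };
    row.requests += 1;
    row.requestTokens += log.request_tokens ?? 0;
    row.responseTokens += log.response_tokens ?? 0;
    row.cachedTokens += log.cached_tokens ?? 0; // may be missing for some providers
    totals.set(key, row);
  }
  return Object.fromEntries(totals);
}
```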

This is a significant PR that introduces AI Artifacts functionality to the discourse-ai plugin along with several other improvements. Here are the key changes:

1. AI Artifacts System:

- Adds a new `AiArtifact` model and database migration

- Allows creation of web artifacts with HTML, CSS, and JavaScript content

- Introduces security settings (`strict`, `lax`, `disabled`) for controlling artifact execution

- Implements artifact rendering in iframes with sandbox protection

- New `CreateArtifact` tool for AI to generate interactive content

2. Tool System Improvements:

- Adds support for partial tool calls, allowing incremental updates during generation

- Better handling of tool call states and progress tracking

- Improved XML tool processing with CDATA support

- Fixes for tool parameter handling and duplicate invocations

3. LLM Provider Updates:

- Updates for Anthropic Claude models with correct token limits

- Adds support for native/XML tool modes in Gemini integration

- Adds new model configurations including Llama 3.1 models

- Improvements to streaming response handling

4. UI Enhancements:

- New artifact viewer component with expand/collapse functionality

- Security controls for artifact execution (click-to-run in strict mode)

- Improved dialog and response handling

- Better error management for tool execution

5. Security Improvements:

- Sandbox controls for artifact execution

- Public/private artifact sharing controls

- Security settings to control artifact behavior

- CSP and frame-options handling for artifacts

6. Technical Improvements:

- Better post streaming implementation

- Improved error handling in completions

- Better memory management for partial tool calls

- Enhanced testing coverage

7. Configuration:

- New site settings for artifact security

- Extended LLM model configurations

- Additional tool configuration options

This PR significantly enhances the plugin's capabilities for generating and displaying interactive content while maintaining security and providing flexible configuration options for administrators.

This PR fixes an issue where the AI search results were not being reset when you append your search to an existing query param (typically when you've come from quick search). This is because `handleSearch()` doesn't get called in this situation. So here we explicitly check for query params, trigger a reset and search for those occasions.

In preparation for applying the streaming animation elsewhere, we want to improve the organization of the folder structure and methods used in the `ai-streamer`.

This PR updates the rate limits for AI helper so that image captioning follows its own rate limit of 20 requests per minute. This should help when uploading multiple files that need to be captioned. This PR also updates the UI so that it shows a toast message with the extracted error message instead of a blocking `popupAjaxError` error dialog.
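
For intuition, a limit of 20 requests per minute behaves like the sliding-window sketch below. This is a generic illustration of the technique with a hypothetical class name, not the rate limiter the plugin or Discourse core actually uses.

```javascript
class SlidingWindowLimiter {
  constructor(maxRequests, windowMs) {
    this.maxRequests = maxRequests;
    this.windowMs = windowMs;
    this.timestamps = []; // times (ms) of accepted requests
  }

  // Returns true and records the request if it fits in the current window.
  tryAcquire(now) {
    const cutoff = now - this.windowMs;
    this.timestamps = this.timestamps.filter((t) => t > cutoff);
    if (this.timestamps.length >= this.maxRequests) {
      return false;
    }
    this.timestamps.push(now);
    return true;
  }
}

// 20 caption requests per minute, as described above.
const captionLimiter = new SlidingWindowLimiter(20, 60 * 1000);
```

When `tryAcquire` returns `false`, the caller would surface a non-blocking message (the toast in this PR) instead of a modal error.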

---------

Co-authored-by: Rafael dos Santos Silva <xfalcox@gmail.com>

Co-authored-by: Penar Musaraj <pmusaraj@gmail.com>

Previously we had moved the AI helper from the options menu to a selection menu that appears when selecting text in the composer. This had the benefit of making the AI helper a more discoverable feature. Now that some time has passed and the AI helper is more recognized, we will be moving it back to the composer toolbar.

This is better because:

- It is consistent with other behavior and ways of accessing tools in the composer

- It has an improved mobile experience

- It reduces unnecessary code and keeps things easier to migrate when we have composer V2.

- It allows for easily triggering AI helper for all content by clicking the button instead of having to select everything.