mirror of https://github.com/fluxcd/flagger.git

Update docs for Flagger v1beta1 API

This commit is contained in:

parent

8f12128aaf

commit

0e81b5f4d2

|

|

@ -17,12 +17,12 @@ contribution.

|

|||

## Chat

|

||||

|

||||

The project uses Slack: To join the conversation, simply join the

|

||||

[Weave community](https://slack.weave.works/) Slack workspace.

|

||||

[Weave community](https://slack.weave.works/) Slack workspace #flagger channel.

|

||||

|

||||

## Getting Started

|

||||

|

||||

- Fork the repository on GitHub

|

||||

- If you want to contribute as a developer, continue reading this document for further instructions

|

||||

- If you want to contribute as a developer, read [Flagger Development Guide](https://docs.flagger.app/dev-guide)

|

||||

- If you have questions, concerns, get stuck or need a hand, let us know

|

||||

on the Slack channel. We are happy to help and look forward to having

|

||||

you part of the team. No matter in which capacity.

|

||||

|

|

@ -59,7 +59,7 @@ get asked to resubmit the PR or divide the changes into more than one PR.

|

|||

|

||||

### Format of the Commit Message

|

||||

|

||||

For Flux we prefer the following rules for good commit messages:

|

||||

For Flagger we prefer the following rules for good commit messages:

|

||||

|

||||

- Limit the subject to 50 characters and write as the continuation

|

||||

of the sentence "If applied, this commit will ..."

|

||||

|

|

@ -69,4 +69,4 @@ For Flux we prefer the following rules for good commit messages:

|

|||

The [following article](https://chris.beams.io/posts/git-commit/#seven-rules)

|

||||

has some more helpful advice on documenting your work.

|

||||

|

||||

This doc is adapted from the [Weaveworks Flux](https://github.com/weaveworks/flux/blob/master/CONTRIBUTING.md)

|

||||

This doc is adapted from [FluxCD](https://github.com/fluxcd/flux/blob/master/CONTRIBUTING.md).

|

||||

|

|

|

|||

54

README.md

54

README.md

|

|

@ -87,7 +87,7 @@ spec:

|

|||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

service:

|

||||

# service name (optional)

|

||||

# service name (defaults to targetRef.name)

|

||||

name: podinfo

|

||||

# ClusterIP port number

|

||||

port: 9898

|

||||

|

|

@ -95,6 +95,9 @@ spec:

|

|||

targetPort: 9898

|

||||

# port name can be http or grpc (default http)

|

||||

portName: http

|

||||

# add all the other container ports

|

||||

# to the ClusterIP services (default false)

|

||||

portDiscovery: true

|

||||

# HTTP match conditions (optional)

|

||||

match:

|

||||

- uri:

|

||||

|

|

@ -118,36 +121,57 @@ spec:

|

|||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 5

|

||||

# Istio Prometheus checks

|

||||

# validation (optional)

|

||||

metrics:

|

||||

# builtin checks

|

||||

- name: request-success-rate

|

||||

# builtin Prometheus check

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: request-duration

|

||||

# builtin Prometheus check

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# custom check

|

||||

- name: "kafka lag"

|

||||

threshold: 100

|

||||

query: |

|

||||

avg_over_time(

|

||||

kafka_consumergroup_lag{

|

||||

consumergroup=~"podinfo-consumer-.*",

|

||||

topic="podinfo"

|

||||

}[1m]

|

||||

)

|

||||

- name: "database connections"

|

||||

# custom Prometheus check

|

||||

templateRef:

|

||||

name: db-connections

|

||||

thresholdRange:

|

||||

min: 2

|

||||

max: 100

|

||||

interval: 1m

|

||||

# testing (optional)

|

||||

webhooks:

|

||||

- name: load-test

|

||||

- name: "conformance test"

|

||||

type: pre-rollout

|

||||

url: http://flagger-helmtester.test/

|

||||

timeout: 5m

|

||||

metadata:

|

||||

type: "helmv3"

|

||||

cmd: "test run podinfo -n test"

|

||||

- name: "load test"

|

||||

type: rollout

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

# alerting (optional)

|

||||

alerts:

|

||||

- name: "dev team Slack"

|

||||

severity: error

|

||||

providerRef:

|

||||

name: dev-slack

|

||||

namespace: flagger

|

||||

- name: "qa team Discord"

|

||||

severity: warn

|

||||

providerRef:

|

||||

name: qa-discord

|

||||

- name: "on-call MS Teams"

|

||||

severity: info

|

||||

providerRef:

|

||||

name: on-call-msteams

|

||||

```

|

||||

|

||||

For more details on how the canary analysis and promotion works please [read the docs](https://docs.flagger.app/how-it-works).

|

||||

|

|

|

|||

|

|

@ -15,6 +15,8 @@

|

|||

## Usage

|

||||

|

||||

* [Deployment Strategies](usage/deployment-strategies.md)

|

||||

* [Metrics Analysis](usage/metrics.md)

|

||||

* [Webhooks](usage/webhooks.md)

|

||||

* [Alerting](usage/alerting.md)

|

||||

* [Monitoring](usage/monitoring.md)

|

||||

|

||||

|

|

|

|||

|

|

@ -1,54 +1,70 @@

|

|||

# Development guide

|

||||

# Flagger Development Guide

|

||||

|

||||

This document describes how to build, test and run Flagger from source.

|

||||

|

||||

## Setup dev environment

|

||||

### Setup dev environment

|

||||

|

||||

Flagger is written in Go and uses Go modules for dependency management.

|

||||

|

||||

On your dev machine install the following tools:

|

||||

* go >= 1.13

|

||||

* git >= 2.20

|

||||

* bash >= 5.0

|

||||

* make >= 3.81

|

||||

* kubectl >= 1.16

|

||||

* kustomize >= 3.5

|

||||

* helm >= 3.0

|

||||

* docker >= 19.03

|

||||

|

||||

* go >= 1.13

|

||||

* git >= 2.20

|

||||

* bash >= 5.0

|

||||

* make >= 3.81

|

||||

* kubectl >= 1.16

|

||||

* kustomize >= 3.5

|

||||

* helm >= 3.0

|

||||

* docker >= 19.03

|

||||

You'll also need a Kubernetes cluster for testing Flagger.

|

||||

You can use Minikube, Kind, Docker desktop or any remote cluster

|

||||

(AKS/EKS/GKE/etc) Kubernetes version 1.14 or newer.

|

||||

|

||||

You'll also need a Kubernetes cluster for testing Flagger. You can use Minikube, Kind, Docker desktop or any remote cluster \(AKS/EKS/GKE/etc\) Kubernetes version 1.14 or newer.

|

||||

To start contributing to Flagger, fork the [repository](https://github.com/weaveworks/flagger) on GitHub.

|

||||

|

||||

## Build

|

||||

|

||||

To start contributing to Flagger, fork the repository and clone it locally:

|

||||

Create a dir inside your `GOPATH`:

|

||||

|

||||

```bash

|

||||

git clone https://github.com/<YOUR-USERNAME>/flagger

|

||||

mkdir -p $GOPATH/src/github.com/weaveworks

|

||||

```

|

||||

|

||||

Clone your fork:

|

||||

|

||||

```bash

|

||||

cd $GOPATH/src/github.com/weaveworks

|

||||

git clone https://github.com/YOUR_USERNAME/flagger

|

||||

cd flagger

|

||||

```

|

||||

|

||||

Set Flagger repository as upstream:

|

||||

|

||||

```bash

|

||||

git remote add upstream https://github.com/weaveworks/flagger.git

|

||||

```

|

||||

|

||||

Sync your fork regularly to keep it up-to-date with upstream:

|

||||

|

||||

```bash

|

||||

git fetch upstream

|

||||

git checkout master

|

||||

git merge upstream/master

|

||||

```

|

||||

|

||||

### Build

|

||||

|

||||

Download Go modules:

|

||||

|

||||

```bash

|

||||

go mod download

|

||||

```

|

||||

|

||||

Build Flagger binary:

|

||||

|

||||

```bash

|

||||

CGO_ENABLED=0 go build -o ./bin/flagger ./cmd/flagger/

|

||||

```

|

||||

|

||||

Build Flagger container image:

|

||||

|

||||

```bash

|

||||

make build

|

||||

```

|

||||

|

||||

## Unit testing

|

||||

|

||||

Make a change to the source code and run the linter and unit tests:

|

||||

Run unit tests:

|

||||

|

||||

```bash

|

||||

make test

|

||||

|

|

@ -66,9 +82,10 @@ If you made changes to `pkg/apis` regenerate Kubernetes client sets with:

|

|||

./hack/update-codegen.sh

|

||||

```

|

||||

|

||||

## Manual testing

|

||||

### Manual testing

|

||||

|

||||

Install a service mesh and/or an ingress controller on your cluster and deploy Flagger using one of the install options [listed here](https://docs.flagger.app/install/flagger-install-on-kubernetes).

|

||||

Install a service mesh and/or an ingress controller on your cluster and deploy Flagger

|

||||

using one of the install options [listed here](https://docs.flagger.app/install/flagger-install-on-kubernetes).

|

||||

|

||||

If you made changes to the CRDs, apply your local copy with:

|

||||

|

||||

|

|

@ -76,7 +93,7 @@ If you made changes to the CRDs, apply your local copy with:

|

|||

kubectl apply -f artifacts/flagger/crd.yaml

|

||||

```

|

||||

|

||||

Shutdown the Flagger instance installed on your cluster \(replace the namespace with your mesh/ingress one\):

|

||||

Shutdown the Flagger instance installed on your cluster (replace the namespace with your mesh/ingress one):

|

||||

|

||||

```bash

|

||||

kubectl -n istio-system scale deployment/flagger --replicas=0

|

||||

|

|

@ -112,9 +129,9 @@ kubectl -n istio-system set image deployment/flagger flagger=<YOUR-DOCKERHUB-USE

|

|||

kubectl -n istio-system scale deployment/flagger --replicas=1

|

||||

```

|

||||

|

||||

Now you can use one of the [tutorials](dev-guide.md) to manually test your changes.

|

||||

Now you can use one of the [tutorials](https://docs.flagger.app/) to manually test your changes.

|

||||

|

||||

## Integration testing

|

||||

### Integration testing

|

||||

|

||||

Flagger end-to-end tests can be run locally with [Kubernetes Kind](https://github.com/kubernetes-sigs/kind).

|

||||

|

||||

|

|

@ -155,14 +172,14 @@ Run the Linkerd e2e tests:

|

|||

./test/e2e-linkerd-tests.sh

|

||||

```

|

||||

|

||||

For each service mesh and ingress controller there is a dedicated e2e test suite, chose one that matches your changes from this [list](https://github.com/weaveworks/flagger/tree/master/test).

|

||||

For each service mesh and ingress controller there is a dedicated e2e test suite,

|

||||

chose one that matches your changes from this [list](https://github.com/weaveworks/flagger/tree/master/test).

|

||||

|

||||

When you open a pull request on Flagger repo, the unit and integration tests will be run in CI.

|

||||

|

||||

## Release

|

||||

|

||||

To release a new Flagger version \(e.g. `2.0.0`\) follow these steps:

|

||||

### Release

|

||||

|

||||

To release a new Flagger version (e.g. `2.0.0`) follow these steps:

|

||||

* create a branch `git checkout -b prep-2.0.0`

|

||||

* set the version in code and manifests `TAG=2.0.0 make version-set`

|

||||

* commit changes and merge PR

|

||||

|

|

@ -170,10 +187,8 @@ To release a new Flagger version \(e.g. `2.0.0`\) follow these steps:

|

|||

* tag master `make release`

|

||||

|

||||

After the tag has been pushed to GitHub, the CI release pipeline does the following:

|

||||

|

||||

* creates a GitHub release

|

||||

* pushes the Flagger binary and change log to GitHub release

|

||||

* pushes the Flagger container image to Docker Hub

|

||||

* pushes the Helm chart to github-pages branch

|

||||

* GitHub pages publishes the new chart version on the Helm repository

|

||||

|

||||

|

|

|

|||

|

|

@ -1,159 +1,32 @@

|

|||

# FAQ

|

||||

# Frequently asked questions

|

||||

|

||||

## Deployment Strategies

|

||||

### Deployment Strategies

|

||||

|

||||

**Which deployment strategies are supported by Flagger?**

|

||||

|

||||

Flagger can run automated application analysis, promotion and rollback for the following deployment strategies:

|

||||

|

||||

* Canary \(progressive traffic shifting\)

|

||||

* Istio, Linkerd, App Mesh, NGINX, Contour, Gloo

|

||||

* Canary \(traffic mirroring\)

|

||||

* Istio

|

||||

* A/B Testing \(HTTP headers and cookies traffic routing\)

|

||||

* Istio, App Mesh, NGINX, Contour

|

||||

* Blue/Green \(traffic switch\)

|

||||

* Kubernetes CNI, Istio, Linkerd, App Mesh, NGINX, Contour, Gloo

|

||||

|

||||

For Canary deployments and A/B testing you'll need a Layer 7 traffic management solution like a service mesh or an ingress controller. For Blue/Green deployments no service mesh or ingress controller is required.

|

||||

Flagger implements the following deployment strategies:

|

||||

* [Canary Release](usage/deployment-strategies.md#canary-release)

|

||||

* [A/B Testing](usage/deployment-strategies.md#a-b-testing)

|

||||

* [Blue/Green](usage/deployment-strategies.md#blue-green-deployments)

|

||||

* [Blue/Green Mirroring](usage/deployment-strategies.md#blue-green-with-traffic-mirroring)

|

||||

|

||||

**When should I use A/B testing instead of progressive traffic shifting?**

|

||||

|

||||

For frontend applications that require session affinity you should use HTTP headers or cookies match conditions to ensure a set of users will stay on the same version for the whole duration of the canary analysis. A/B testing is supported by Istio and NGINX only.

|

||||

|

||||

Istio example:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

# total number of iterations

|

||||

iterations: 10

|

||||

# max number of failed iterations before rollback

|

||||

threshold: 2

|

||||

# canary match condition

|

||||

match:

|

||||

- headers:

|

||||

x-canary:

|

||||

regex: ".*insider.*"

|

||||

- headers:

|

||||

cookie:

|

||||

regex: "^(.*?;)?(canary=always)(;.*)?$"

|

||||

```

|

||||

|

||||

App Mesh example:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

interval: 1m

|

||||

threshold: 10

|

||||

iterations: 2

|

||||

match:

|

||||

- headers:

|

||||

user-agent:

|

||||

regex: ".*Chrome.*"

|

||||

```

|

||||

|

||||

Note that App Mesh supports a single condition.

|

||||

|

||||

Contour example:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

interval: 1m

|

||||

threshold: 10

|

||||

iterations: 2

|

||||

match:

|

||||

- headers:

|

||||

user-agent:

|

||||

prefix: "Chrome"

|

||||

```

|

||||

|

||||

Note that Contour does not support regex, you can use prefix, suffix or exact.

|

||||

|

||||

NGINX example:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

interval: 1m

|

||||

threshold: 10

|

||||

iterations: 2

|

||||

match:

|

||||

- headers:

|

||||

x-canary:

|

||||

exact: "insider"

|

||||

- headers:

|

||||

cookie:

|

||||

exact: "canary"

|

||||

```

|

||||

|

||||

Note that the NGINX ingress controller supports only exact matching for a single header and the cookie value is set to `always`.

|

||||

|

||||

The above configurations will route users with the x-canary header or canary cookie to the canary instance during analysis:

|

||||

|

||||

```bash

|

||||

curl -H 'X-Canary: insider' http://app.example.com

|

||||

curl -b 'canary=always' http://app.example.com

|

||||

```

|

||||

For frontend applications that require session affinity you should use HTTP headers or cookies match conditions

|

||||

to ensure a set of users will stay on the same version for the whole duration of the canary analysis.

|

||||

|

||||

**Can I use Flagger to manage applications that live outside of a service mesh?**

|

||||

|

||||

For applications that are not deployed on a service mesh, Flagger can orchestrate Blue/Green style deployments with Kubernetes L4 networking.

|

||||

|

||||

Blue/Green example:

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

spec:

|

||||

provider: kubernetes

|

||||

canaryAnalysis:

|

||||

interval: 30s

|

||||

threshold: 2

|

||||

iterations: 10

|

||||

metrics:

|

||||

- name: request-success-rate

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: request-duration

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

type: cmd

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo-canary.test:9898/"

|

||||

```

|

||||

|

||||

The above configuration will run an analysis for five minutes. Flagger starts the load test for the canary service \(green version\) and checks the Prometheus metrics every 30 seconds. If the analysis result is positive, Flagger will promote the canary \(green version\) to primary \(blue version\).

|

||||

For applications that are not deployed on a service mesh, Flagger can orchestrate Blue/Green style deployments

|

||||

with Kubernetes L4 networking.

|

||||

|

||||

**When can I use traffic mirroring?**

|

||||

|

||||

Traffic Mirroring is a pre-stage in a Canary \(progressive traffic shifting\) or Blue/Green deployment strategy. Traffic mirroring will copy each incoming request, sending one request to the primary and one to the canary service. The response from the primary is sent back to the user. The response from the canary is discarded. Metrics are collected on both requests so that the deployment will only proceed if the canary metrics are healthy.

|

||||

Traffic mirroring can be used for Blue/Green deployment strategy or a pre-stage in a Canary release.

|

||||

Traffic mirroring will copy each incoming request, sending one request to the primary and one to the canary service.

|

||||

Mirroring should be used for requests that are **idempotent** or capable of being processed twice (once by the primary and once by the canary).

|

||||

|

||||

Mirroring is supported by Istio only.

|

||||

|

||||

In Istio, mirrored requests have `-shadow` appended to the `Host` \(HTTP\) or `Authority` \(HTTP/2\) header; for example requests to `podinfo.test` that are mirrored will be reported in telemetry with a destination host `podinfo.test-shadow`.

|

||||

|

||||

Mirroring must only be used for requests that are **idempotent** or capable of being processed twice \(once by the primary and once by the canary\). Reads are idempotent. Before using mirroring on requests that may be writes, you should consider what will happen if a write is duplicated and handled by the primary and canary.

|

||||

|

||||

To use mirroring, set `spec.canaryAnalysis.mirror` to `true`. Example for traffic shifting:

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

spec:

|

||||

provider: istio

|

||||

canaryAnalysis:

|

||||

mirror: true

|

||||

interval: 30s

|

||||

stepWeight: 20

|

||||

maxWeight: 50

|

||||

```

|

||||

|

||||

## Kubernetes services

|

||||

### Kubernetes services

|

||||

|

||||

**How is an application exposed inside the cluster?**

|

||||

|

||||

|

|

@ -181,23 +54,20 @@ spec:

|

|||

portName: http

|

||||

```

|

||||

|

||||

If the `service.name` is not specified, then `targetRef.name` is used for the apex domain and canary/primary services name prefix. You should treat the service name as an immutable field, changing it could result in routing conflicts.

|

||||

If the `service.name` is not specified, then `targetRef.name` is used for the apex domain and canary/primary services name prefix.

|

||||

You should treat the service name as an immutable field, changing it could result in routing conflicts.

|

||||

|

||||

Based on the canary spec service, Flagger generates the following Kubernetes ClusterIP service:

|

||||

|

||||

* `<service.name>.<namespace>.svc.cluster.local`

|

||||

|

||||

selector `app=<name>-primary`

|

||||

|

||||

* `<service.name>-primary.<namespace>.svc.cluster.local`

|

||||

|

||||

selector `app=<name>-primary`

|

||||

|

||||

* `<service.name>-canary.<namespace>.svc.cluster.local`

|

||||

|

||||

selector `app=<name>`

|

||||

|

||||

This ensures that traffic coming from a namespace outside the mesh to `podinfo.test:9898` will be routed to the latest stable release of your app.

|

||||

This ensures that traffic coming from a namespace outside the mesh to `podinfo.test:9898`

|

||||

will be routed to the latest stable release of your app.

|

||||

|

||||

```yaml

|

||||

apiVersion: v1

|

||||

|

|

@ -243,13 +113,16 @@ spec:

|

|||

targetPort: http

|

||||

```

|

||||

|

||||

The `podinfo-canary.test:9898` address is available only during the canary analysis and can be used for conformance testing or load testing.

|

||||

The `podinfo-canary.test:9898` address is available only during the

|

||||

canary analysis and can be used for conformance testing or load testing.

|

||||

|

||||

## Multiple ports

|

||||

### Multiple ports

|

||||

|

||||

**My application listens on multiple ports, how can I expose them inside the cluster?**

|

||||

|

||||

If port discovery is enabled, Flagger scans the deployment spec and extracts the containers ports excluding the port specified in the canary service and Envoy sidecar ports. \`These ports will be used when generating the ClusterIP services.

|

||||

If port discovery is enabled, Flagger scans the deployment spec and extracts the containers

|

||||

ports excluding the port specified in the canary service and Envoy sidecar ports.

|

||||

`These ports will be used when generating the ClusterIP services.

|

||||

|

||||

For a deployment that exposes two ports:

|

||||

|

||||

|

|

@ -291,7 +164,7 @@ spec:

|

|||

|

||||

Both port `8080` and `9090` will be added to the ClusterIP services.

|

||||

|

||||

## Label selectors

|

||||

### Label selectors

|

||||

|

||||

**What labels selectors are supported by Flagger?**

|

||||

|

||||

|

|

@ -312,7 +185,8 @@ spec:

|

|||

app: podinfo

|

||||

```

|

||||

|

||||

Besides `app` Flagger supports `name` and `app.kubernetes.io/name` selectors. If you use a different convention you can specify your label with the `-selector-labels` flag.

|

||||

Besides `app` Flagger supports `name` and `app.kubernetes.io/name` selectors. If you use a different

|

||||

convention you can specify your label with the `-selector-labels` flag.

|

||||

|

||||

**Is pod affinity and anti affinity supported?**

|

||||

|

||||

|

|

@ -347,13 +221,131 @@ spec:

|

|||

topologyKey: kubernetes.io/hostname

|

||||

```

|

||||

|

||||

## Istio routing

|

||||

### Metrics

|

||||

|

||||

**How does Flagger measures the request success rate and duration?**

|

||||

|

||||

Flagger measures the request success rate and duration using Prometheus queries.

|

||||

|

||||

**HTTP requests success rate percentage**

|

||||

|

||||

Spec:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

metrics:

|

||||

- name: request-success-rate

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

```

|

||||

|

||||

Istio query:

|

||||

|

||||

```javascript

|

||||

sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace=~"$namespace",

|

||||

destination_workload=~"$workload",

|

||||

response_code!~"5.*"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

/

|

||||

sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace=~"$namespace",

|

||||

destination_workload=~"$workload"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

```

|

||||

|

||||

Envoy query (App Mesh, Contour or Gloo):

|

||||

|

||||

```javascript

|

||||

sum(

|

||||

rate(

|

||||

envoy_cluster_upstream_rq{

|

||||

kubernetes_namespace="$namespace",

|

||||

kubernetes_pod_name=~"$workload",

|

||||

envoy_response_code!~"5.*"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

/

|

||||

sum(

|

||||

rate(

|

||||

envoy_cluster_upstream_rq{

|

||||

kubernetes_namespace="$namespace",

|

||||

kubernetes_pod_name=~"$workload"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

```

|

||||

|

||||

**HTTP requests milliseconds duration P99**

|

||||

|

||||

Spec:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

metrics:

|

||||

- name: request-duration

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 1m

|

||||

```

|

||||

|

||||

Istio query:

|

||||

|

||||

```javascript

|

||||

histogram_quantile(0.99,

|

||||

sum(

|

||||

irate(

|

||||

istio_request_duration_seconds_bucket{

|

||||

reporter="destination",

|

||||

destination_workload=~"$workload",

|

||||

destination_workload_namespace=~"$namespace"

|

||||

}[$interval]

|

||||

)

|

||||

) by (le)

|

||||

)

|

||||

```

|

||||

|

||||

Envoy query (App Mesh, Contour or Gloo):

|

||||

|

||||

```javascript

|

||||

histogram_quantile(0.99,

|

||||

sum(

|

||||

irate(

|

||||

envoy_cluster_upstream_rq_time_bucket{

|

||||

kubernetes_pod_name=~"$workload",

|

||||

kubernetes_namespace=~"$namespace"

|

||||

}[$interval]

|

||||

)

|

||||

) by (le)

|

||||

)

|

||||

```

|

||||

|

||||

> **Note** that the metric interval should be lower or equal to the control loop interval.

|

||||

|

||||

### Istio routing

|

||||

|

||||

**How does Flagger interact with Istio?**

|

||||

|

||||

Flagger creates an Istio Virtual Service and Destination Rules based on the Canary service spec. The service configuration lets you expose an app inside or outside the mesh. You can also define traffic policies, HTTP match conditions, URI rewrite rules, CORS policies, timeout and retries.

|

||||

Flagger creates an Istio Virtual Service and Destination Rules based on the Canary service spec.

|

||||

The service configuration lets you expose an app inside or outside the mesh.

|

||||

You can also define traffic policies, HTTP match conditions, URI rewrite rules, CORS policies, timeout and retries.

|

||||

|

||||

The following spec exposes the `frontend` workload inside the mesh on `frontend.test.svc.cluster.local:9898` and outside the mesh on `frontend.example.com`. You'll have to specify an Istio ingress gateway for external hosts.

|

||||

The following spec exposes the `frontend` workload inside the mesh on `frontend.test.svc.cluster.local:9898`

|

||||

and outside the mesh on `frontend.example.com`. You'll have to specify an Istio ingress gateway for external hosts.

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

|

|

@ -487,9 +479,11 @@ spec:

|

|||

mode: DISABLE

|

||||

```

|

||||

|

||||

Flagger keeps in sync the virtual service and destination rules with the canary service spec. Any direct modification to the virtual service spec will be overwritten.

|

||||

Flagger keeps in sync the virtual service and destination rules with the canary service spec.

|

||||

Any direct modification to the virtual service spec will be overwritten.

|

||||

|

||||

To expose a workload inside the mesh on `http://backend.test.svc.cluster.local:9898`, the service spec can contain only the container port and the traffic policy:

|

||||

To expose a workload inside the mesh on `http://backend.test.svc.cluster.local:9898`,

|

||||

the service spec can contain only the container port and the traffic policy:

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

|

|

@ -530,13 +524,15 @@ spec:

|

|||

app: backend-primary

|

||||

```

|

||||

|

||||

Flagger works for user facing apps exposed outside the cluster via an ingress gateway and for backend HTTP APIs that are accessible only from inside the mesh.

|

||||

Flagger works for user facing apps exposed outside the cluster via an ingress gateway

|

||||

and for backend HTTP APIs that are accessible only from inside the mesh.

|

||||

|

||||

## Istio Ingress Gateway

|

||||

### Istio Ingress Gateway

|

||||

|

||||

**How can I expose multiple canaries on the same external domain?**

|

||||

|

||||

Assuming you have two apps, one that servers the main website and one that serves the REST API. For each app you can define a canary object as:

|

||||

Assuming you have two apps, one that servers the main website and one that serves the REST API.

|

||||

For each app you can define a canary object as:

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

|

|

@ -574,11 +570,13 @@ spec:

|

|||

uri: /

|

||||

```

|

||||

|

||||

Based on the above configuration, Flagger will create two virtual services bounded to the same ingress gateway and external host. Istio Pilot will [merge](https://istio.io/help/ops/traffic-management/deploy-guidelines/#multiple-virtual-services-and-destination-rules-for-the-same-host) the two services and the website rule will be moved to the end of the list in the merged configuration.

|

||||

Based on the above configuration, Flagger will create two virtual services bounded to the same ingress gateway and external host.

|

||||

Istio Pilot will [merge](https://istio.io/help/ops/traffic-management/deploy-guidelines/#multiple-virtual-services-and-destination-rules-for-the-same-host)

|

||||

the two services and the website rule will be moved to the end of the list in the merged configuration.

|

||||

|

||||

Note that host merging only works if the canaries are bounded to a ingress gateway other than the `mesh` gateway.

|

||||

|

||||

## Istio Mutual TLS

|

||||

### Istio Mutual TLS

|

||||

|

||||

**How can I enable mTLS for a canary?**

|

||||

|

||||

|

|

@ -633,4 +631,3 @@ spec:

|

|||

ports:

|

||||

- number: 80

|

||||

```

|

||||

|

||||

|

|

|

|||

|

|

@ -1,8 +1,11 @@

|

|||

# How it works

|

||||

|

||||

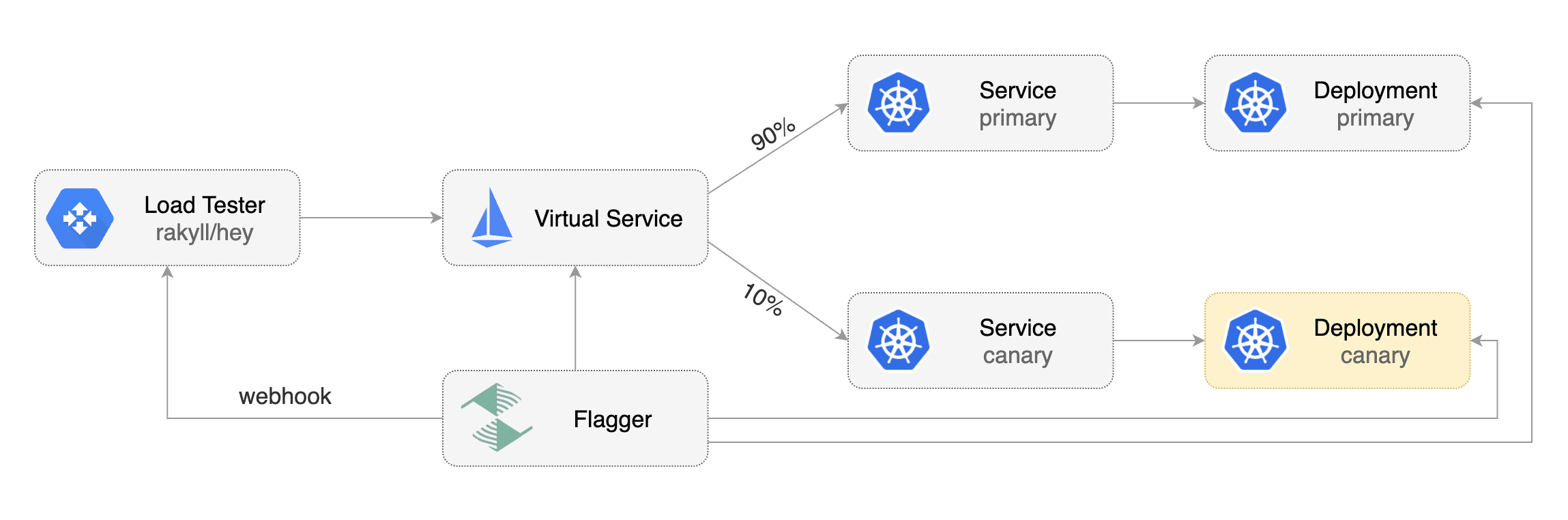

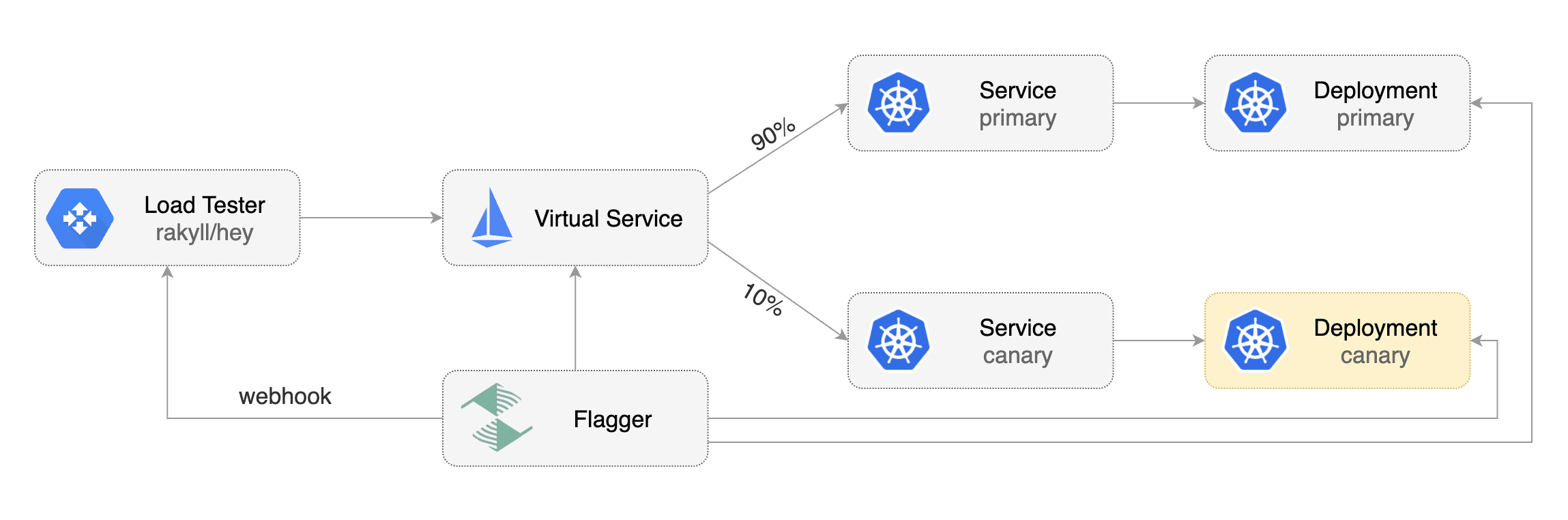

[Flagger](https://github.com/weaveworks/flagger) takes a Kubernetes deployment and optionally a horizontal pod autoscaler \(HPA\) and creates a series of objects \(Kubernetes deployments, ClusterIP services, virtual service, traffic split or ingress\) to drive the canary analysis and promotion.

|

||||

[Flagger](https://github.com/weaveworks/flagger) takes a Kubernetes deployment and optionally

|

||||

a horizontal pod autoscaler (HPA) and creates a series of objects

|

||||

(Kubernetes deployments, ClusterIP services, virtual service, traffic split or ingress)

|

||||

to drive the canary analysis and promotion.

|

||||

|

||||

## Canary Custom Resource

|

||||

### Canary Custom Resource

|

||||

|

||||

For a deployment named _podinfo_, a canary promotion can be defined using Flagger's custom resource:

|

||||

|

||||

|

|

@ -11,71 +14,57 @@ apiVersion: flagger.app/v1alpha3

|

|||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

# service mesh provider (optional)

|

||||

# can be: kubernetes, istio, linkerd, appmesh, nginx, gloo, supergloo

|

||||

provider: linkerd

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

# the maximum time in seconds for the canary deployment

|

||||

# to make progress before it is rollback (default 600s)

|

||||

progressDeadlineSeconds: 60

|

||||

# HPA reference (optional)

|

||||

autoscalerRef:

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

service:

|

||||

# service name (optional)

|

||||

name: podinfo

|

||||

# ClusterIP port number

|

||||

port: 9898

|

||||

# ClusterIP port name can be http or grpc (default http)

|

||||

portName: http

|

||||

# container port number or name (optional)

|

||||

targetPort: 9898

|

||||

# add all the other container ports

|

||||

# to the ClusterIP services (default false)

|

||||

portDiscovery: false

|

||||

# promote the canary without analysing it (default false)

|

||||

skipAnalysis: false

|

||||

# define the canary analysis timing and KPIs

|

||||

portDiscovery: true

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

# percentage (0-100)

|

||||

maxWeight: 50

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 5

|

||||

# Prometheus checks

|

||||

metrics:

|

||||

- name: request-success-rate

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: request-duration

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# testing (optional)

|

||||

- name: request-success-rate

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: request-duration

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo-canary.test:9898/"

|

||||

```

|

||||

|

||||

Based on the above configuration, Flagger generates the following Kubernetes objects:

|

||||

* `deployment/<targetRef.name>-primary`

|

||||

* `hpa/<autoscalerRef.name>-primary`

|

||||

|

||||

The primary deployment is considered the stable release of your app, by default all traffic is routed to this version

|

||||

and the target deployment is scaled to zero.

|

||||

Flagger will detect changes to the target deployment (including secrets and configmaps) and will perform a

|

||||

canary analysis before promoting the new version as primary.

|

||||

|

||||

The autoscaler reference is optional, when specified, Flagger will pause the traffic increase while the

|

||||

target and primary deployments are scaled up or down. HPA can help reduce the resource usage during the canary analysis.

|

||||

|

||||

If the target deployment uses secrets and/or configmaps, Flagger will create a copy of each object using the `-primary`

|

||||

prefix and will reference these objects in the primary deployment. You can disable the secrets/configmaps tracking

|

||||

with the `-enable-config-tracking=false` command flag in the Flagger deployment manifest under containers args

|

||||

or by setting `--set configTracking.enabled=false` when installing Flagger with Helm.

|

||||

|

||||

**Note** that the target deployment must have a single label selector in the format `app: <DEPLOYMENT-NAME>`:

|

||||

|

||||

```yaml

|

||||

|

|

@ -93,13 +82,30 @@ spec:

|

|||

app: podinfo

|

||||

```

|

||||

|

||||

Besides `app` Flagger supports `name` and `app.kubernetes.io/name` selectors. If you use a different convention you can specify your label with the `-selector-labels=my-app-label` command flag in the Flagger deployment manifest under containers args or by setting `--set selectorLabels=my-app-label` when installing Flagger with Helm.

|

||||

Besides `app` Flagger supports `name` and `app.kubernetes.io/name` selectors.

|

||||

If you use a different convention you can specify your label with

|

||||

the `-selector-labels=my-app-label` command flag in the Flagger deployment manifest under containers args

|

||||

or by setting `--set selectorLabels=my-app-label` when installing Flagger with Helm.

|

||||

|

||||

The target deployment should expose a TCP port that will be used by Flagger to create the ClusterIP Services. The container port from the target deployment should match the `service.port` or `service.targetPort`.

|

||||

The target deployment should expose a TCP port that will be used by Flagger to create the ClusterIP Services.

|

||||

The container port from the target deployment should match the `service.port` or `service.targetPort`.

|

||||

|

||||

## Canary status

|

||||

Based on the canary spec service, Flagger generates the following Kubernetes ClusterIP service:

|

||||

|

||||

Get the current status of canary deployments cluster wide:

|

||||

* `<service.name>.<namespace>.svc.cluster.local`

|

||||

selector `app=<name>-primary`

|

||||

* `<service.name>-primary.<namespace>.svc.cluster.local`

|

||||

selector `app=<name>-primary`

|

||||

* `<service.name>-canary.<namespace>.svc.cluster.local`

|

||||

selector `app=<name>`

|

||||

|

||||

This ensures that traffic to `podinfo.test:9898` will be routed to the latest stable release of your app.

|

||||

The `podinfo-canary.test:9898` address is available only during the

|

||||

canary analysis and can be used for conformance testing or load testing.

|

||||

|

||||

### Canary status

|

||||

|

||||

You can use kubectl to get the current status of canary deployments cluster wide:

|

||||

|

||||

```bash

|

||||

kubectl get canaries --all-namespaces

|

||||

|

|

@ -110,7 +116,7 @@ prod frontend Succeeded 0 2019-06-30T16:15:07Z

|

|||

prod backend Failed 0 2019-06-30T17:05:07Z

|

||||

```

|

||||

|

||||

The status condition reflects the last know state of the canary analysis:

|

||||

The status condition reflects the last known state of the canary analysis:

|

||||

|

||||

```bash

|

||||

kubectl -n test get canary/podinfo -oyaml | awk '/status/,0'

|

||||

|

|

@ -134,7 +140,10 @@ status:

|

|||

type: Promoted

|

||||

```

|

||||

|

||||

The `Promoted` status condition can have one of the following reasons: Initialized, Waiting, Progressing, Promoting, Finalising, Succeeded or Failed. A failed canary will have the promoted status set to `false`, the reason to `failed` and the last applied spec will be different to the last promoted one.

|

||||

The `Promoted` status condition can have one of the following reasons:

|

||||

Initialized, Waiting, Progressing, Promoting, Finalising, Succeeded or Failed.

|

||||

A failed canary will have the promoted status set to `false`,

|

||||

the reason to `failed` and the last applied spec will be different to the last promoted one.

|

||||

|

||||

Wait for a successful rollout:

|

||||

|

||||

|

|

@ -162,747 +171,47 @@ kubectl wait canary/podinfo --for=condition=promoted --timeout=5m

|

|||

kubectl get canary/podinfo | grep Succeeded

|

||||

```

|

||||

|

||||

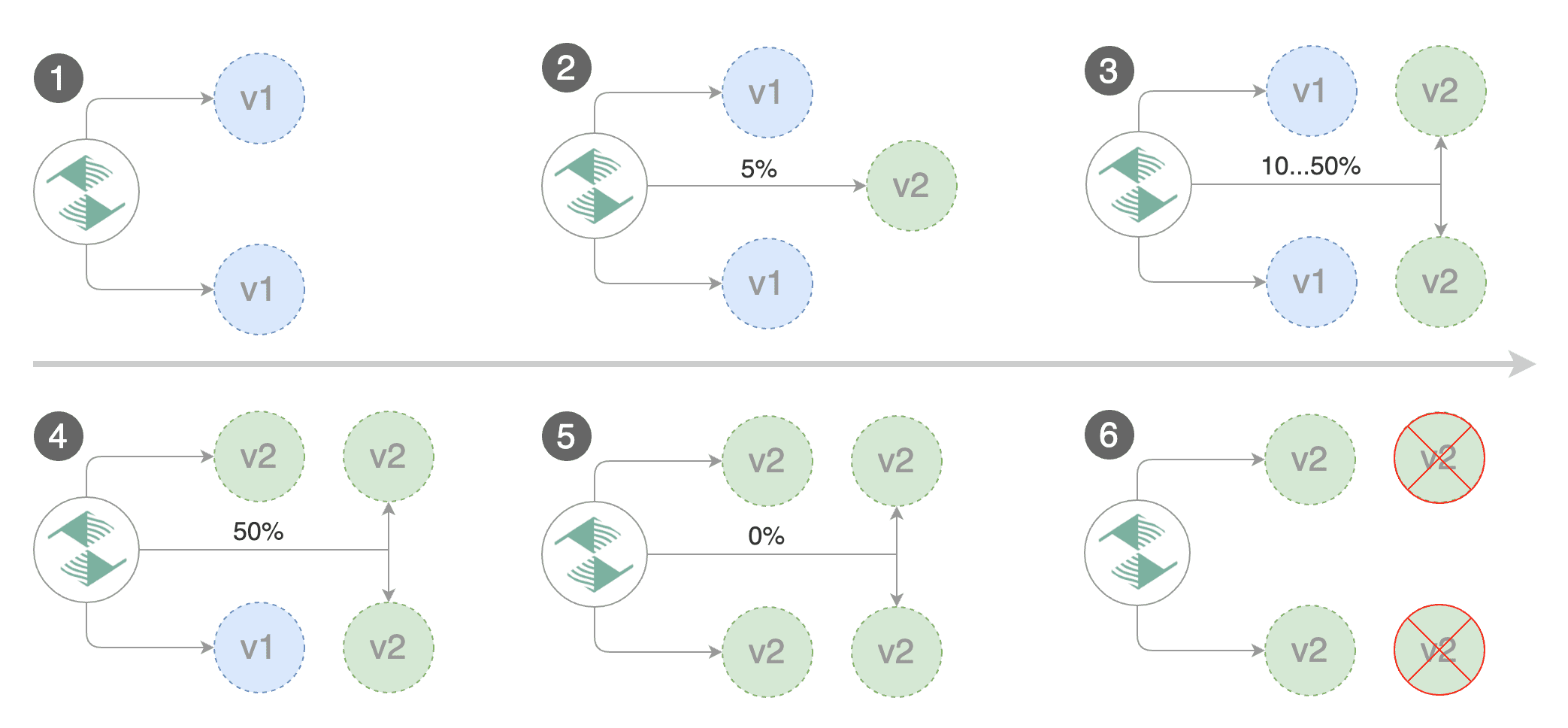

## Canary Stages

|

||||

### Canary Analysis

|

||||

|

||||

|

||||

|

||||

A canary deployment is triggered by changes in any of the following objects:

|

||||

|

||||

* Deployment PodSpec \(container image, command, ports, env, resources, etc\)

|

||||

* ConfigMaps mounted as volumes or mapped to environment variables

|

||||

* Secrets mounted as volumes or mapped to environment variables

|

||||

|

||||

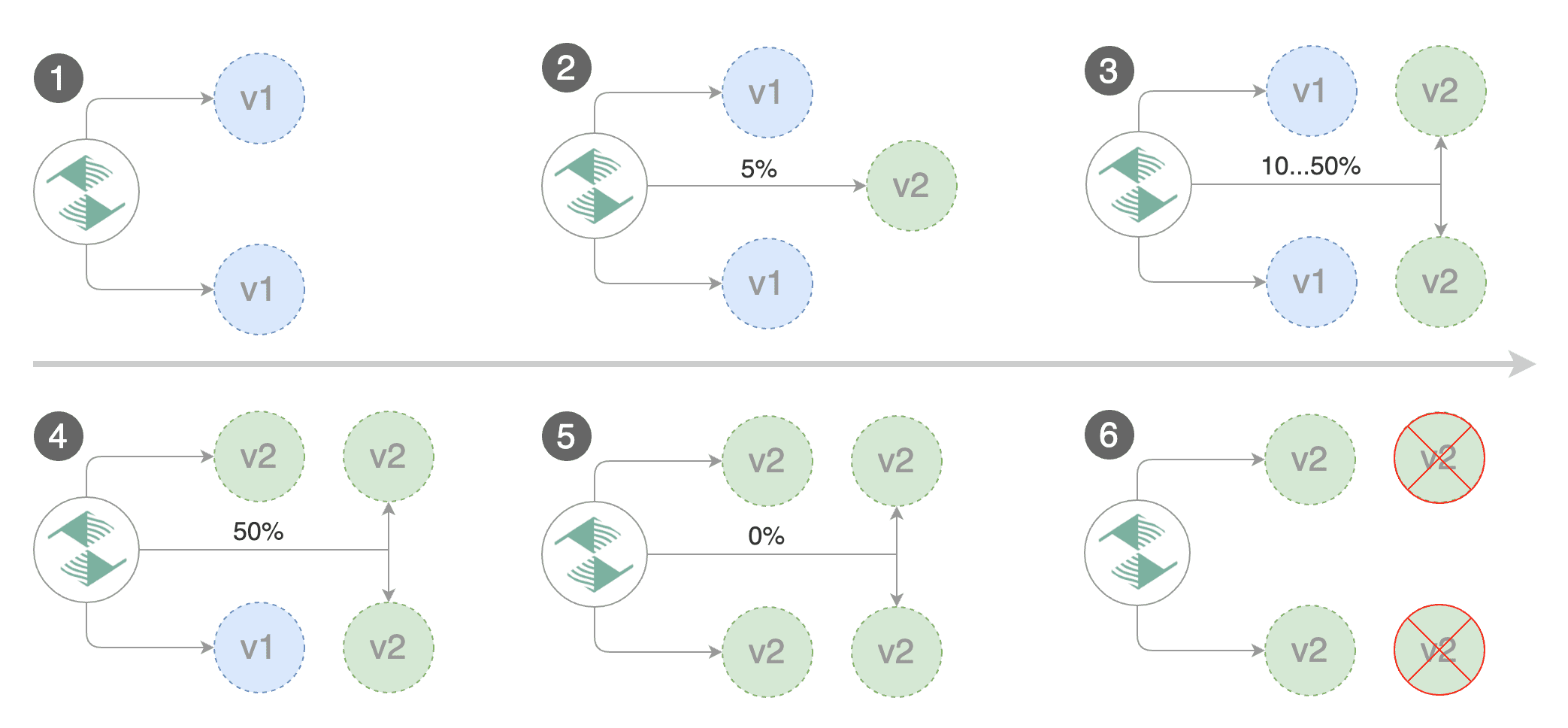

Gated canary promotion stages:

|

||||

|

||||

* scan for canary deployments

|

||||

* check primary and canary deployment status

|

||||

* halt advancement if a rolling update is underway

|

||||

* halt advancement if pods are unhealthy

|

||||

* call confirm-rollout webhooks and check results

|

||||

* halt advancement if any hook returns a non HTTP 2xx result

|

||||

* call pre-rollout webhooks and check results

|

||||

* halt advancement if any hook returns a non HTTP 2xx result

|

||||

* increment the failed checks counter

|

||||

* increase canary traffic weight percentage from 0% to 5% \(step weight\)

|

||||

* call rollout webhooks and check results

|

||||

* check canary HTTP request success rate and latency

|

||||

* halt advancement if any metric is under the specified threshold

|

||||

* increment the failed checks counter

|

||||

* check if the number of failed checks reached the threshold

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment and mark it as failed

|

||||

* call post-rollout webhooks

|

||||

* post the analysis result to Slack

|

||||

* wait for the canary deployment to be updated and start over

|

||||

* increase canary traffic weight by 5% \(step weight\) till it reaches 50% \(max weight\)

|

||||

* halt advancement if any webhook call fails

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* halt advancement while any custom metric check fails

|

||||

* halt advancement if the primary or canary deployment becomes unhealthy

|

||||

* halt advancement while canary deployment is being scaled up/down by HPA

|

||||

* call confirm-promotion webhooks and check results

|

||||

* halt advancement if any hook returns a non HTTP 2xx result

|

||||

* promote canary to primary

|

||||

* copy ConfigMaps and Secrets from canary to primary

|

||||

* copy canary deployment spec template over primary

|

||||

* wait for primary rolling update to finish

|

||||

* halt advancement if pods are unhealthy

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment

|

||||

* mark rollout as finished

|

||||

* call post-rollout webhooks

|

||||

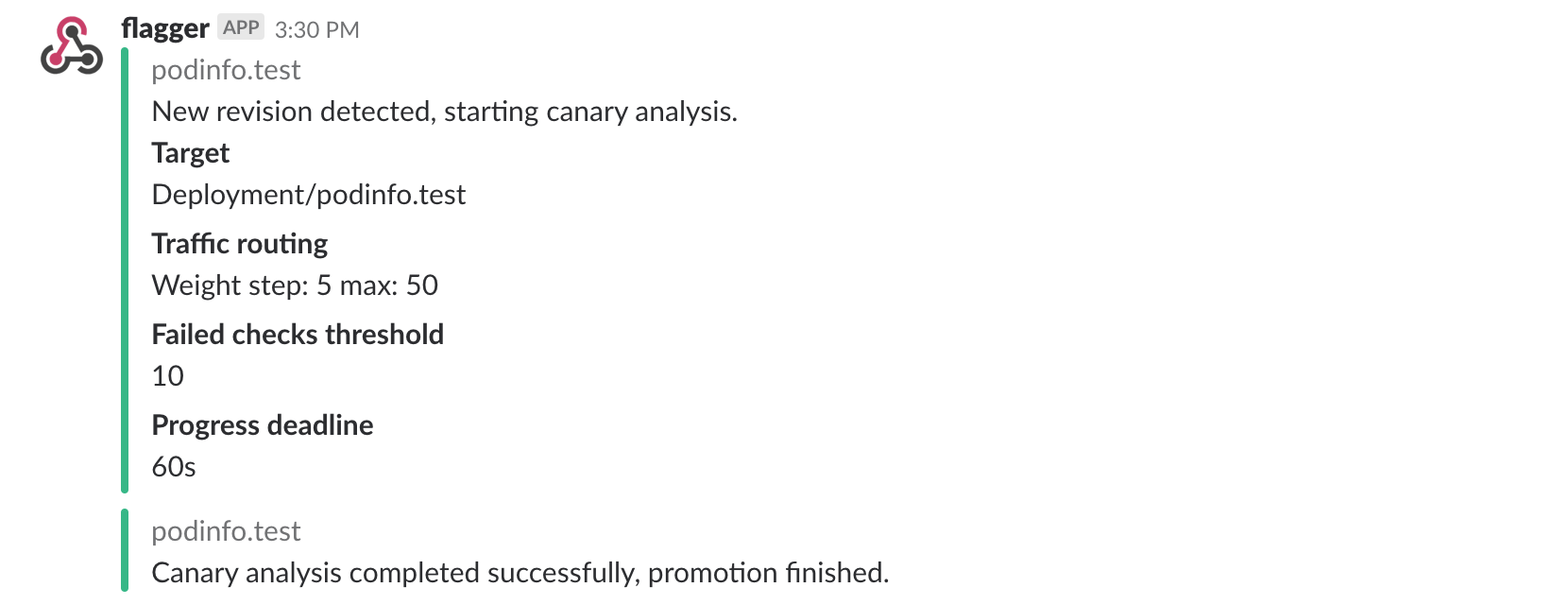

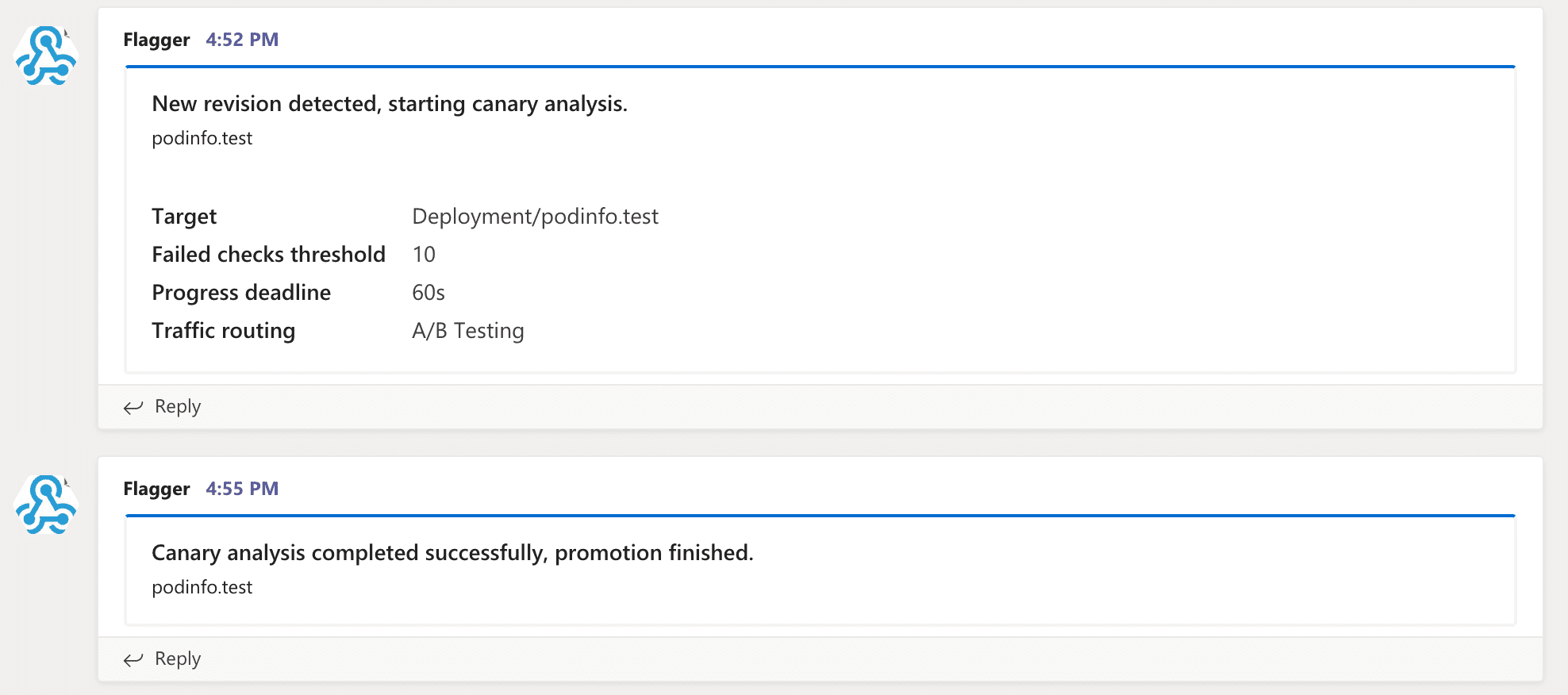

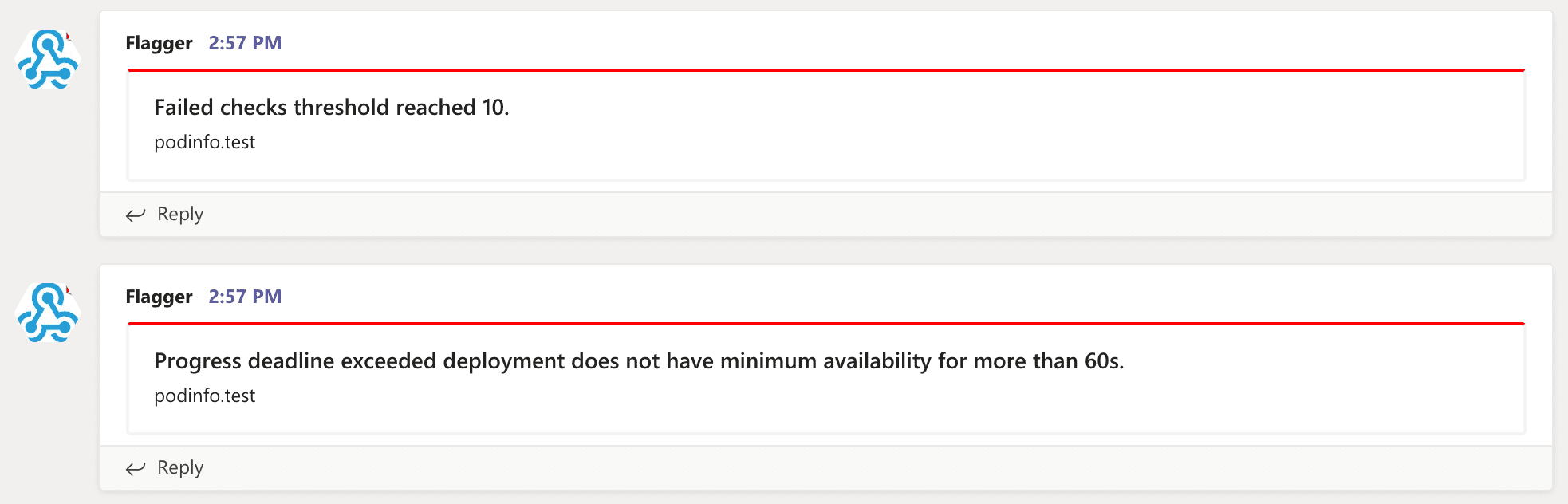

* post the analysis result to Slack or MS Teams

|

||||

* wait for the canary deployment to be updated and start over

|

||||

|

||||

## Canary Analysis

|

||||

|

||||

The canary analysis runs periodically until it reaches the maximum traffic weight or the failed checks threshold.

|

||||

The canary analysis defines:

|

||||

* the type of [deployment strategy](usage/deployment-strategies.md)

|

||||

* the [metrics](usage/metrics.md) used to validate the canary version

|

||||

* the [webhooks](usage/webhooks.md) used for conformance testing, load testing and manual gating

|

||||

* the [alerting settings](usage/alerting.md)

|

||||

|

||||

Spec:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

interval:

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

threshold:

|

||||

# max traffic percentage routed to canary

|

||||

# percentage (0-100)

|

||||

maxWeight: 50

|

||||

maxWeight:

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 2

|

||||

# deploy straight to production without

|

||||

# the metrics and webhook checks

|

||||

skipAnalysis: false

|

||||

```

|

||||

|

||||

The above analysis, if it succeeds, will run for 25 minutes while validating the HTTP metrics and webhooks every minute. You can determine the minimum time that it takes to validate and promote a canary deployment using this formula:

|

||||

|

||||

```text

|

||||

interval * (maxWeight / stepWeight)

|

||||

```

|

||||

|

||||

And the time it takes for a canary to be rollback when the metrics or webhook checks are failing:

|

||||

|

||||

```text

|

||||

interval * threshold

|

||||

```

|

||||

|

||||

In emergency cases, you may want to skip the analysis phase and ship changes directly to production. At any time you can set the `spec.skipAnalysis: true`. When skip analysis is enabled, Flagger checks if the canary deployment is healthy and promotes it without analysing it. If an analysis is underway, Flagger cancels it and runs the promotion.

|

||||

|

||||

## A/B Testing

|

||||

|

||||

Besides weighted routing, Flagger can be configured to route traffic to the canary based on HTTP match conditions. In an A/B testing scenario, you'll be using HTTP headers or cookies to target a certain segment of your users. This is particularly useful for frontend applications that require session affinity.

|

||||

|

||||

You can enable A/B testing by specifying the HTTP match conditions and the number of iterations:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

stepWeight:

|

||||

# total number of iterations

|

||||

iterations: 10

|

||||

# max number of failed iterations before rollback

|

||||

threshold: 2

|

||||

# canary match condition

|

||||

# used for A/B Testing and Blue/Green

|

||||

iterations:

|

||||

# canary match conditions

|

||||

# used for A/B Testing

|

||||

match:

|

||||

- headers:

|

||||

user-agent:

|

||||

regex: "^(?!.*Chrome).*Safari.*"

|

||||

- headers:

|

||||

cookie:

|

||||

regex: "^(.*?;)?(user=test)(;.*)?$"

|

||||

```

|

||||

|

||||

If Flagger finds a HTTP match condition, it will ignore the `maxWeight` and `stepWeight` settings.

|

||||

|

||||

The above configuration will run an analysis for ten minutes targeting the Safari users and those that have a test cookie. You can determine the minimum time that it takes to validate and promote a canary deployment using this formula:

|

||||

|

||||

```text

|

||||

interval * iterations

|

||||

```

|

||||

|

||||

And the time it takes for a canary to be rollback when the metrics or webhook checks are failing:

|

||||

|

||||

```text

|

||||

interval * threshold

|

||||

```

|

||||

|

||||

Make sure that the analysis threshold is lower than the number of iterations.

|

||||

|

||||

## Blue/Green deployments

|

||||

|

||||

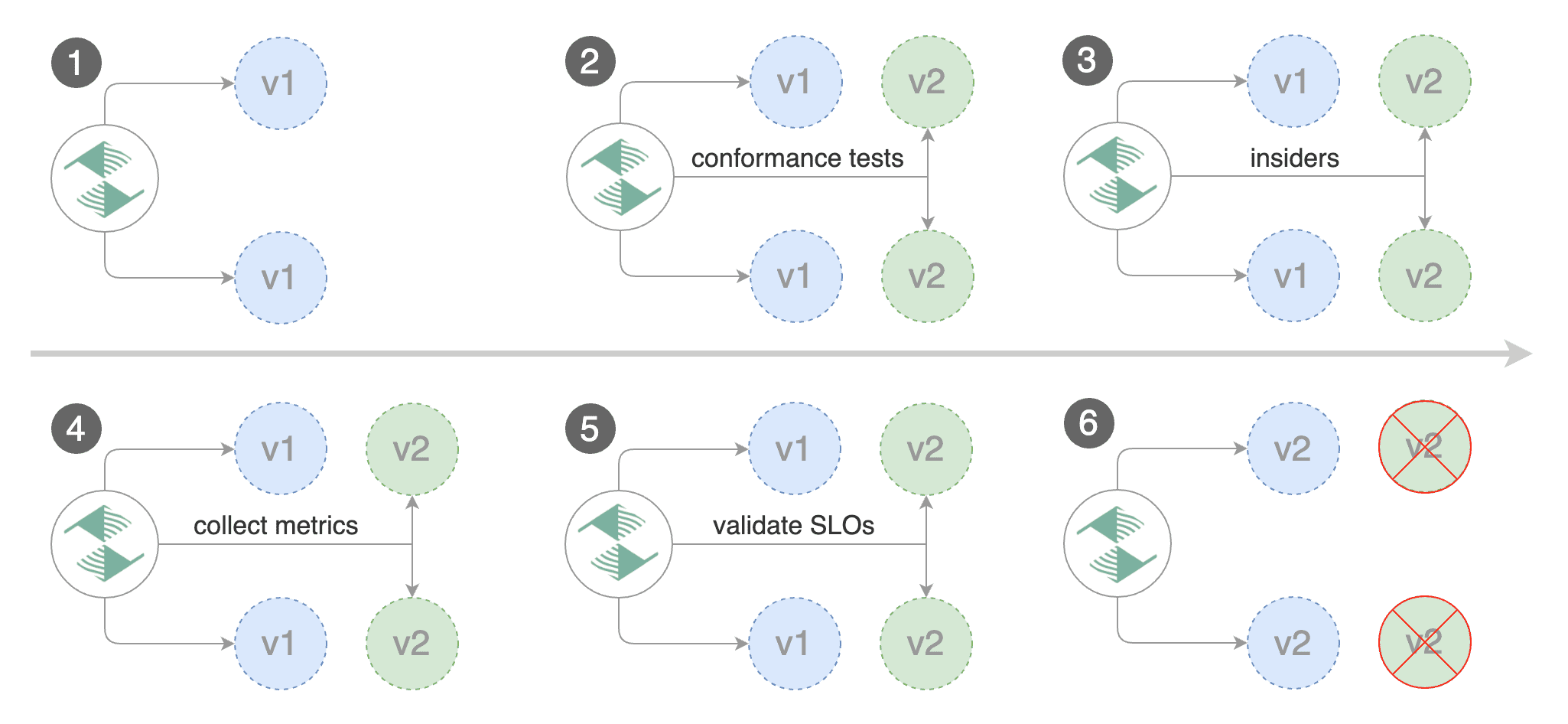

For applications that are not deployed on a service mesh, Flagger can orchestrate blue/green style deployments with Kubernetes L4 networking. When using Istio you have the option to mirror traffic between blue and green.

|

||||

|

||||

You can use the blue/green deployment strategy by replacing `stepWeight/maxWeight` with `iterations` in the `canaryAnalysis` spec:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

# total number of iterations

|

||||

iterations: 10

|

||||

# max number of failed iterations before rollback

|

||||

threshold: 2

|

||||

# Traffic shadowing (compatible with Istio only)

|

||||

mirror: true

|

||||

```

|

||||

|

||||

With the above configuration Flagger will run conformance and load tests on the canary pods for ten minutes. If the metrics analysis succeeds, live traffic will be switched from the old version to the new one when the canary is promoted.

|

||||

|

||||

The blue/green deployment strategy is supported for all service mesh providers.

|

||||

|

||||

Blue/Green rollout steps for service mesh:

|

||||

|

||||

* scale up the canary \(green\)

|

||||

* run conformance tests for the canary pods

|

||||

* run load tests and metric checks for the canary pods

|

||||

* route traffic to canary

|

||||

* promote canary spec over primary \(blue\)

|

||||

* wait for primary rollout

|

||||

* route traffic to primary

|

||||

* scale down canary

|

||||

|

||||

After the analysis finishes, the traffic is routed to the canary \(green\) before triggering the primary \(blue\) rolling update, this ensures a smooth transition to the new version avoiding dropping in-flight requests during the Kubernetes deployment rollout.

|

||||

|

||||

## HTTP Metrics

|

||||

|

||||

The canary analysis is using the following Prometheus queries:

|

||||

|

||||

**HTTP requests success rate percentage**

|

||||

|

||||

Spec:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

- # HTTP header

|

||||

# key performance indicators

|

||||

metrics:

|

||||

- name: request-success-rate

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

```

|

||||

|

||||

Istio query:

|

||||

|

||||

```javascript

|

||||

sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace=~"$namespace",

|

||||

destination_workload=~"$workload",

|

||||

response_code!~"5.*"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

/

|

||||

sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace=~"$namespace",

|

||||

destination_workload=~"$workload"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

```

|

||||

|

||||

Envoy query \(App Mesh, Contour or Gloo\):

|

||||

|

||||

```javascript

|

||||

sum(

|

||||

rate(

|

||||

envoy_cluster_upstream_rq{

|

||||

kubernetes_namespace="$namespace",

|

||||

kubernetes_pod_name=~"$workload",

|

||||

envoy_response_code!~"5.*"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

/

|

||||

sum(

|

||||

rate(

|

||||

envoy_cluster_upstream_rq{

|

||||

kubernetes_namespace="$namespace",

|

||||

kubernetes_pod_name=~"$workload"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

```

|

||||

|

||||

**HTTP requests milliseconds duration P99**

|

||||

|

||||

Spec:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

metrics:

|

||||

- name: request-duration

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 1m

|

||||

```

|

||||

|

||||

Istio query:

|

||||

|

||||

```javascript

|

||||

histogram_quantile(0.99,

|

||||

sum(

|

||||

irate(

|

||||

istio_request_duration_seconds_bucket{

|

||||

reporter="destination",

|

||||

destination_workload=~"$workload",

|

||||

destination_workload_namespace=~"$namespace"

|

||||

}[$interval]

|

||||

)

|

||||

) by (le)

|

||||

)

|

||||

```

|

||||

|

||||

Envoy query \(App Mesh, Contour or Gloo\):

|

||||

|

||||

```javascript

|

||||

histogram_quantile(0.99,

|

||||

sum(

|

||||

irate(

|

||||

envoy_cluster_upstream_rq_time_bucket{

|

||||

kubernetes_pod_name=~"$workload",

|

||||

kubernetes_namespace=~"$namespace"

|

||||

}[$interval]

|

||||

)

|

||||

) by (le)

|

||||

)

|

||||

```

|

||||

|

||||

> **Note** that the metric interval should be lower or equal to the control loop interval.

|

||||

|

||||

## Custom Metrics

|

||||

|

||||

The canary analysis can be extended with custom Prometheus queries.

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

threshold: 1

|

||||

maxWeight: 50

|

||||

stepWeight: 5

|

||||

metrics:

|

||||

- name: "404s percentage"

|

||||

threshold: 5

|

||||

query: |

|

||||

100 - sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace="test",

|

||||

destination_workload="podinfo",

|

||||

response_code!="404"

|

||||

}[1m]

|

||||

)

|

||||

)

|

||||

/

|

||||

sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace="test",

|

||||

destination_workload="podinfo"

|

||||

}[1m]

|

||||

)

|

||||

) * 100

|

||||

```

|

||||

|

||||

The above configuration validates the canary by checking if the HTTP 404 req/sec percentage is below 5 percent of the total traffic. If the 404s rate reaches the 5% threshold, then the canary fails.

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

threshold: 1

|

||||

maxWeight: 50

|

||||

stepWeight: 5

|

||||

metrics:

|

||||

- name: "rpc error rate"

|

||||

threshold: 5

|

||||

query: |

|

||||

100 - (sum

|

||||

rate(

|

||||

grpc_server_handled_total{

|

||||

grpc_service="my.TestService",

|

||||

grpc_code!="OK"

|

||||

}[1m]

|

||||

)

|

||||

)

|

||||

/

|

||||

sum(

|

||||

rate(

|

||||

grpc_server_started_total{

|

||||

grpc_service="my.TestService"

|

||||

}[1m]

|

||||

)

|

||||

) * 100

|

||||

```

|

||||

|

||||

The above configuration validates the canary by checking if the percentage of non-OK GRPC req/sec is below 5 percent of the total requests. If the non-OK rate reaches the 5% threshold, then the canary fails.

|

||||

|

||||

When specifying a query, Flagger will run the promql query and convert the result to float64. Then it compares the query result value with the metric threshold value.

|

||||

|

||||

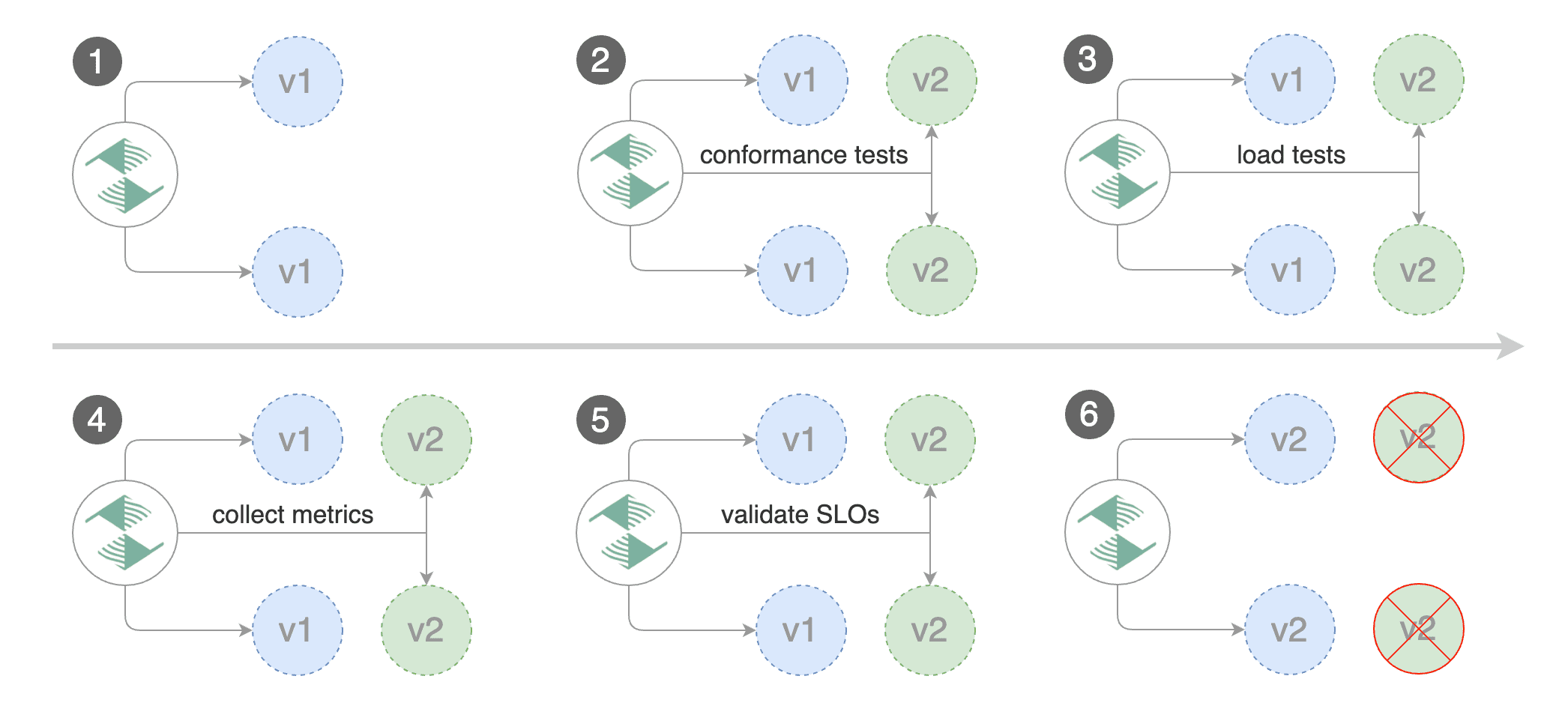

## Webhooks

|

||||

|

||||

The canary analysis can be extended with webhooks. Flagger will call each webhook URL and determine from the response status code \(HTTP 2xx\) if the canary is failing or not.

|

||||

|

||||

There are several types of hooks:

|

||||

|

||||

* **confirm-rollout** hooks are executed before scaling up the canary deployment and can be used for manual approval.

|

||||

|

||||

The rollout is paused until the hook returns a successful HTTP status code.

|

||||

|

||||

* **pre-rollout** hooks are executed before routing traffic to canary.

|

||||

|

||||

The canary advancement is paused if a pre-rollout hook fails and if the number of failures reach the

|

||||

|

||||

threshold the canary will be rollback.

|

||||

|

||||

* **rollout** hooks are executed during the analysis on each iteration before the metric checks.

|

||||

|

||||

If a rollout hook call fails the canary advancement is paused and eventfully rolled back.

|

||||

|

||||

* **confirm-promotion** hooks are executed before the promotion step.

|

||||

|

||||

The canary promotion is paused until the hooks return HTTP 200.

|

||||

|

||||

While the promotion is paused, Flagger will continue to run the metrics checks and rollout hooks.

|

||||

|

||||

* **post-rollout** hooks are executed after the canary has been promoted or rolled back.

|

||||

|

||||

If a post rollout hook fails the error is logged.

|

||||

|

||||

* **rollback** hooks are executed while a canary deployment is in either Progressing or Waiting status.

|

||||

|

||||

This provides the ability to rollback during analysis or while waiting for a confirmation. If a rollback hook

|

||||

|

||||

returns a successful HTTP status code, Flagger will stop the analysis and mark the canary release as failed.

|

||||

|

||||

* **event** hooks are executed every time Flagger emits a Kubernetes event. When configured,

|

||||

|

||||

every action that Flagger takes during a canary deployment will be sent as JSON via an HTTP POST request.

|

||||

|

||||

Spec:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

- # metric check

|

||||

# alerting

|

||||

alerts:

|

||||

- # alert provider

|

||||

# external checks

|

||||

webhooks:

|

||||

- name: "start gate"

|

||||

type: confirm-rollout

|

||||

url: http://flagger-loadtester.test/gate/approve

|

||||

- name: "smoke test"

|

||||

type: pre-rollout

|

||||

url: http://flagger-helmtester.kube-system/

|

||||

timeout: 3m

|

||||

metadata:

|

||||

type: "helm"

|

||||

cmd: "test podinfo --cleanup"

|

||||

- name: "load test"

|

||||

type: rollout

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 15s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 5 -c 2 http://podinfo-canary.test:9898/"

|

||||

- name: "promotion gate"

|

||||

type: confirm-promotion

|

||||

url: http://flagger-loadtester.test/gate/approve

|

||||

- name: "notify"

|

||||

type: post-rollout

|

||||

url: http://telegram.bot:8080/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

some: "message"

|

||||

- name: "rollback gate"

|

||||

type: rollback

|

||||

url: http://flagger-loadtester.test/rollback/check

|

||||

- name: "send to Slack"

|

||||

type: event

|

||||

url: http://event-recevier.notifications/slack

|

||||

- # hook

|

||||

```

|

||||

|

||||

> **Note** that the sum of all rollout webhooks timeouts should be lower than the analysis interval.

|

||||

|

||||

Webhook payload \(HTTP POST\):

|

||||

|

||||

```javascript

|

||||

{

|

||||

"name": "podinfo",

|

||||

"namespace": "test",

|

||||

"phase": "Progressing",

|

||||

"metadata": {

|

||||

"test": "all",

|

||||

"token": "16688eb5e9f289f1991c"

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

Response status codes:

|

||||

|

||||

* 200-202 - advance canary by increasing the traffic weight

|

||||

* timeout or non-2xx - halt advancement and increment failed checks

|

||||

|

||||

On a non-2xx response Flagger will include the response body \(if any\) in the failed checks log and Kubernetes events.

|

||||

|

||||

Event payload \(HTTP POST\):

|

||||

|

||||

```javascript

|

||||

{

|

||||

"name": "string (canary name)",

|

||||

"namespace": "string (canary namespace)",

|

||||

"phase": "string (canary phase)",

|

||||

"metadata": {

|

||||

"eventMessage": "string (canary event message)",

|

||||

"eventType": "string (canary event type)",

|

||||

"timestamp": "string (unix timestamp ms)"

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||