mirror of https://github.com/fluxcd/flagger.git

Merge pull request #170 from weaveworks/nginx

Add support for nginx ingress controller


commit

948df55de3

6

Makefile


|

|

@@ -18,6 +18,12 @@ run-appmesh:

|

|||

-slack-url=https://hooks.slack.com/services/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 \

|

||||

-slack-channel="devops-alerts"

|

||||

|

||||

run-nginx:

|

||||

go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=nginx -namespace=nginx \

|

||||

-metrics-server=http://prometheus-weave.istio.weavedx.com \

|

||||

-slack-url=https://hooks.slack.com/services/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 \

|

||||

-slack-channel="devops-alerts"

|

||||

|

||||

build:

|

||||

docker build -t weaveworks/flagger:$(TAG) . -f Dockerfile

|

||||

|

||||

|

|

|

|||

|

|

@@ -31,6 +31,12 @@ rules:

|

|||

resources:

|

||||

- horizontalpodautoscalers

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- "extensions"

|

||||

resources:

|

||||

- ingresses

|

||||

- ingresses/status

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- flagger.app

|

||||

resources:

|

||||

|

|

|

|||

|

|

@@ -69,6 +69,18 @@ spec:

|

|||

type: string

|

||||

name:

|

||||

type: string

|

||||

ingressRef:

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

required: ['apiVersion', 'kind', 'name']

|

||||

properties:

|

||||

apiVersion:

|

||||

type: string

|

||||

kind:

|

||||

type: string

|

||||

name:

|

||||

type: string

|

||||

service:

|

||||

type: object

|

||||

required: ['port']

|

||||

|

|

|

|||

|

|

@@ -0,0 +1,59 @@

|

|||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

# ingress reference

|

||||

ingressRef:

|

||||

apiVersion: extensions/v1beta1

|

||||

kind: Ingress

|

||||

name: podinfo

|

||||

# HPA reference (optional)

|

||||

autoscalerRef:

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

# the maximum time in seconds for the canary deployment

|

||||

# to make progress before it is rolled back (default 600s)

|

||||

progressDeadlineSeconds: 60

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 10s

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

# percentage (0-100)

|

||||

maxWeight: 50

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 5

|

||||

# NGINX Prometheus checks

|

||||

metrics:

|

||||

- name: request-success-rate

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: request-duration

|

||||

# maximum avg req duration

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 1m

|

||||

# external checks (optional)

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

type: cmd

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://app.example.com/"

|

||||

logCmdOutput: "true"

|

||||

|

|

@@ -0,0 +1,69 @@

|

|||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

replicas: 1

|

||||

strategy:

|

||||

rollingUpdate:

|

||||

maxUnavailable: 0

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.4.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

name: http

|

||||

protocol: TCP

|

||||

command:

|

||||

- ./podinfo

|

||||

- --port=9898

|

||||

- --level=info

|

||||

- --random-delay=false

|

||||

- --random-error=false

|

||||

env:

|

||||

- name: PODINFO_UI_COLOR

|

||||

value: green

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/healthz

|

||||

failureThreshold: 3

|

||||

periodSeconds: 10

|

||||

successThreshold: 1

|

||||

timeoutSeconds: 2

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/readyz

|

||||

failureThreshold: 3

|

||||

periodSeconds: 3

|

||||

successThreshold: 1

|

||||

timeoutSeconds: 2

|

||||

resources:

|

||||

limits:

|

||||

cpu: 1000m

|

||||

memory: 256Mi

|

||||

requests:

|

||||

cpu: 100m

|

||||

memory: 16Mi

|

||||

|

|

@@ -0,0 +1,19 @@

|

|||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

scaleTargetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

minReplicas: 2

|

||||

maxReplicas: 4

|

||||

metrics:

|

||||

- type: Resource

|

||||

resource:

|

||||

name: cpu

|

||||

# scale up if usage is above

|

||||

# 99% of the requested CPU (100m)

|

||||

targetAverageUtilization: 99

|

||||

|

|

@@ -0,0 +1,17 @@

|

|||

apiVersion: extensions/v1beta1

|

||||

kind: Ingress

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

annotations:

|

||||

kubernetes.io/ingress.class: "nginx"

|

||||

spec:

|

||||

rules:

|

||||

- host: app.example.com

|

||||

http:

|

||||

paths:

|

||||

- backend:

|

||||

serviceName: podinfo

|

||||

servicePort: 9898

|

||||

|

|

@@ -70,6 +70,18 @@ spec:

|

|||

type: string

|

||||

name:

|

||||

type: string

|

||||

ingressRef:

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

required: ['apiVersion', 'kind', 'name']

|

||||

properties:

|

||||

apiVersion:

|

||||

type: string

|

||||

kind:

|

||||

type: string

|

||||

name:

|

||||

type: string

|

||||

service:

|

||||

type: object

|

||||

required: ['port']

|

||||

|

|

|

|||

|

|

@@ -38,7 +38,11 @@ spec:

|

|||

{{- if .Values.meshProvider }}

|

||||

- -mesh-provider={{ .Values.meshProvider }}

|

||||

{{- end }}

|

||||

{{- if .Values.prometheus.install }}

|

||||

- -metrics-server=http://{{ template "flagger.fullname" . }}-prometheus:9090

|

||||

{{- else }}

|

||||

- -metrics-server={{ .Values.metricsServer }}

|

||||

{{- end }}

|

||||

{{- if .Values.namespace }}

|

||||

- -namespace={{ .Values.namespace }}

|

||||

{{- end }}

|

||||

|

|

|

|||

|

|

@@ -0,0 +1,292 @@

|

|||

{{- if .Values.prometheus.install }}

|

||||

apiVersion: rbac.authorization.k8s.io/v1beta1

|

||||

kind: ClusterRole

|

||||

metadata:

|

||||

name: {{ template "flagger.fullname" . }}-prometheus

|

||||

labels:

|

||||

helm.sh/chart: {{ template "flagger.chart" . }}

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

rules:

|

||||

- apiGroups: [""]

|

||||

resources:

|

||||

- nodes

|

||||

- services

|

||||

- endpoints

|

||||

- pods

|

||||

- nodes/proxy

|

||||

verbs: ["get", "list", "watch"]

|

||||

- apiGroups: [""]

|

||||

resources:

|

||||

- configmaps

|

||||

verbs: ["get"]

|

||||

- nonResourceURLs: ["/metrics"]

|

||||

verbs: ["get"]

|

||||

---

|

||||

apiVersion: rbac.authorization.k8s.io/v1beta1

|

||||

kind: ClusterRoleBinding

|

||||

metadata:

|

||||

name: {{ template "flagger.fullname" . }}-prometheus

|

||||

labels:

|

||||

helm.sh/chart: {{ template "flagger.chart" . }}

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

roleRef:

|

||||

apiGroup: rbac.authorization.k8s.io

|

||||

kind: ClusterRole

|

||||

name: {{ template "flagger.fullname" . }}-prometheus

|

||||

subjects:

|

||||

- kind: ServiceAccount

|

||||

name: {{ template "flagger.serviceAccountName" . }}-prometheus

|

||||

namespace: {{ .Release.Namespace }}

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: ServiceAccount

|

||||

metadata:

|

||||

name: {{ template "flagger.serviceAccountName" . }}-prometheus

|

||||

namespace: {{ .Release.Namespace }}

|

||||

labels:

|

||||

helm.sh/chart: {{ template "flagger.chart" . }}

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: ConfigMap

|

||||

metadata:

|

||||

name: {{ template "flagger.fullname" . }}-prometheus

|

||||

namespace: {{ .Release.Namespace }}

|

||||

labels:

|

||||

helm.sh/chart: {{ template "flagger.chart" . }}

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

data:

|

||||

prometheus.yml: |-

|

||||

global:

|

||||

scrape_interval: 5s

|

||||

scrape_configs:

|

||||

|

||||

# Scrape config for AppMesh Envoy sidecar

|

||||

- job_name: 'appmesh-envoy'

|

||||

metrics_path: /stats/prometheus

|

||||

kubernetes_sd_configs:

|

||||

- role: pod

|

||||

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_pod_container_name]

|

||||

action: keep

|

||||

regex: '^envoy$'

|

||||

- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

|

||||

action: replace

|

||||

regex: ([^:]+)(?::\d+)?;(\d+)

|

||||

replacement: ${1}:9901

|

||||

target_label: __address__

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_pod_label_(.+)

|

||||

- source_labels: [__meta_kubernetes_namespace]

|

||||

action: replace

|

||||

target_label: kubernetes_namespace

|

||||

- source_labels: [__meta_kubernetes_pod_name]

|

||||

action: replace

|

||||

target_label: kubernetes_pod_name

|

||||

|

||||

# Exclude high cardinality metrics

|

||||

metric_relabel_configs:

|

||||

- source_labels: [ cluster_name ]

|

||||

regex: '(outbound|inbound|prometheus_stats).*'

|

||||

action: drop

|

||||

- source_labels: [ tcp_prefix ]

|

||||

regex: '(outbound|inbound|prometheus_stats).*'

|

||||

action: drop

|

||||

- source_labels: [ listener_address ]

|

||||

regex: '(.+)'

|

||||

action: drop

|

||||

- source_labels: [ http_conn_manager_listener_prefix ]

|

||||

regex: '(.+)'

|

||||

action: drop

|

||||

- source_labels: [ http_conn_manager_prefix ]

|

||||

regex: '(.+)'

|

||||

action: drop

|

||||

- source_labels: [ __name__ ]

|

||||

regex: 'envoy_tls.*'

|

||||

action: drop

|

||||

- source_labels: [ __name__ ]

|

||||

regex: 'envoy_tcp_downstream.*'

|

||||

action: drop

|

||||

- source_labels: [ __name__ ]

|

||||

regex: 'envoy_http_(stats|admin).*'

|

||||

action: drop

|

||||

- source_labels: [ __name__ ]

|

||||

regex: 'envoy_cluster_(lb|retry|bind|internal|max|original).*'

|

||||

action: drop

|

||||

|

||||

# Scrape config for API servers

|

||||

- job_name: 'kubernetes-apiservers'

|

||||

kubernetes_sd_configs:

|

||||

- role: endpoints

|

||||

namespaces:

|

||||

names:

|

||||

- default

|

||||

scheme: https

|

||||

tls_config:

|

||||

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

|

||||

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

|

||||

action: keep

|

||||

regex: kubernetes;https

|

||||

|

||||

# Scrape config for nodes

|

||||

- job_name: 'kubernetes-nodes'

|

||||

scheme: https

|

||||

tls_config:

|

||||

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

|

||||

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

|

||||

kubernetes_sd_configs:

|

||||

- role: node

|

||||

relabel_configs:

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_node_label_(.+)

|

||||

- target_label: __address__

|

||||

replacement: kubernetes.default.svc:443

|

||||

- source_labels: [__meta_kubernetes_node_name]

|

||||

regex: (.+)

|

||||

target_label: __metrics_path__

|

||||

replacement: /api/v1/nodes/${1}/proxy/metrics

|

||||

|

||||

# scrape config for cAdvisor

|

||||

- job_name: 'kubernetes-cadvisor'

|

||||

scheme: https

|

||||

tls_config:

|

||||

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

|

||||

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

|

||||

kubernetes_sd_configs:

|

||||

- role: node

|

||||

relabel_configs:

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_node_label_(.+)

|

||||

- target_label: __address__

|

||||

replacement: kubernetes.default.svc:443

|

||||

- source_labels: [__meta_kubernetes_node_name]

|

||||

regex: (.+)

|

||||

target_label: __metrics_path__

|

||||

replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

|

||||

|

||||

# scrape config for pods

|

||||

- job_name: kubernetes-pods

|

||||

kubernetes_sd_configs:

|

||||

- role: pod

|

||||

relabel_configs:

|

||||

- action: keep

|

||||

regex: true

|

||||

source_labels:

|

||||

- __meta_kubernetes_pod_annotation_prometheus_io_scrape

|

||||

- source_labels: [ __address__ ]

|

||||

regex: '.*9901.*'

|

||||

action: drop

|

||||

- action: replace

|

||||

regex: (.+)

|

||||

source_labels:

|

||||

- __meta_kubernetes_pod_annotation_prometheus_io_path

|

||||

target_label: __metrics_path__

|

||||

- action: replace

|

||||

regex: ([^:]+)(?::\d+)?;(\d+)

|

||||

replacement: $1:$2

|

||||

source_labels:

|

||||

- __address__

|

||||

- __meta_kubernetes_pod_annotation_prometheus_io_port

|

||||

target_label: __address__

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_pod_label_(.+)

|

||||

- action: replace

|

||||

source_labels:

|

||||

- __meta_kubernetes_namespace

|

||||

target_label: kubernetes_namespace

|

||||

- action: replace

|

||||

source_labels:

|

||||

- __meta_kubernetes_pod_name

|

||||

target_label: kubernetes_pod_name

|

||||

---

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: {{ template "flagger.fullname" . }}-prometheus

|

||||

namespace: {{ .Release.Namespace }}

|

||||

labels:

|

||||

helm.sh/chart: {{ template "flagger.chart" . }}

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

spec:

|

||||

replicas: 1

|

||||

selector:

|

||||

matchLabels:

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}-prometheus

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}-prometheus

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

annotations:

|

||||

appmesh.k8s.aws/sidecarInjectorWebhook: disabled

|

||||

sidecar.istio.io/inject: "false"

|

||||

spec:

|

||||

serviceAccountName: {{ template "flagger.serviceAccountName" . }}-prometheus

|

||||

containers:

|

||||

- name: prometheus

|

||||

image: "docker.io/prom/prometheus:v2.7.1"

|

||||

imagePullPolicy: IfNotPresent

|

||||

args:

|

||||

- '--storage.tsdb.retention=6h'

|

||||

- '--config.file=/etc/prometheus/prometheus.yml'

|

||||

ports:

|

||||

- containerPort: 9090

|

||||

name: http

|

||||

livenessProbe:

|

||||

httpGet:

|

||||

path: /-/healthy

|

||||

port: 9090

|

||||

readinessProbe:

|

||||

httpGet:

|

||||

path: /-/ready

|

||||

port: 9090

|

||||

resources:

|

||||

requests:

|

||||

cpu: 10m

|

||||

memory: 128Mi

|

||||

volumeMounts:

|

||||

- name: config-volume

|

||||

mountPath: /etc/prometheus

|

||||

- name: data-volume

|

||||

mountPath: /prometheus/data

|

||||

|

||||

volumes:

|

||||

- name: config-volume

|

||||

configMap:

|

||||

name: {{ template "flagger.fullname" . }}-prometheus

|

||||

- name: data-volume

|

||||

emptyDir: {}

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: Service

|

||||

metadata:

|

||||

name: {{ template "flagger.fullname" . }}-prometheus

|

||||

namespace: {{ .Release.Namespace }}

|

||||

labels:

|

||||

helm.sh/chart: {{ template "flagger.chart" . }}

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

spec:

|

||||

selector:

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}-prometheus

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

ports:

|

||||

- name: http

|

||||

protocol: TCP

|

||||

port: 9090

|

||||

{{- end }}

|

||||

|

|

@@ -27,6 +27,12 @@ rules:

|

|||

resources:

|

||||

- horizontalpodautoscalers

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- "extensions"

|

||||

resources:

|

||||

- ingresses

|

||||

- ingresses/status

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- flagger.app

|

||||

resources:

|

||||

|

|

|

|||

|

|

@@ -7,7 +7,7 @@ image:

|

|||

|

||||

metricsServer: "http://prometheus:9090"

|

||||

|

||||

- # accepted values are istio or appmesh (defaults to istio)
+ # accepted values are istio, appmesh, nginx or supergloo:mesh.namespace (defaults to istio)

|

||||

meshProvider: ""

|

||||

|

||||

# single namespace restriction

|

||||

|

|

@@ -49,3 +49,7 @@ nodeSelector: {}

|

|||

tolerations: []

|

||||

|

||||

affinity: {}

|

||||

|

||||

prometheus:

|

||||

# to be used with AppMesh or nginx ingress

|

||||

install: false

|

||||

|

|

|

|||

|

|

@@ -99,7 +99,7 @@ func main() {

|

|||

|

||||

canaryInformer := flaggerInformerFactory.Flagger().V1alpha3().Canaries()

|

||||

|

||||

- logger.Infof("Starting flagger version %s revision %s", version.VERSION, version.REVISION)
+ logger.Infof("Starting flagger version %s revision %s mesh provider %s", version.VERSION, version.REVISION, meshProvider)

|

||||

|

||||

ver, err := kubeClient.Discovery().ServerVersion()

|

||||

if err != nil {

|

||||

|

|

|

|||

Binary file not shown.

|

After Width: | Height: | Size: 40 KiB |

|

|

@@ -0,0 +1,355 @@

|

|||

# NGINX Ingress Controller Canary Deployments

|

||||

|

||||

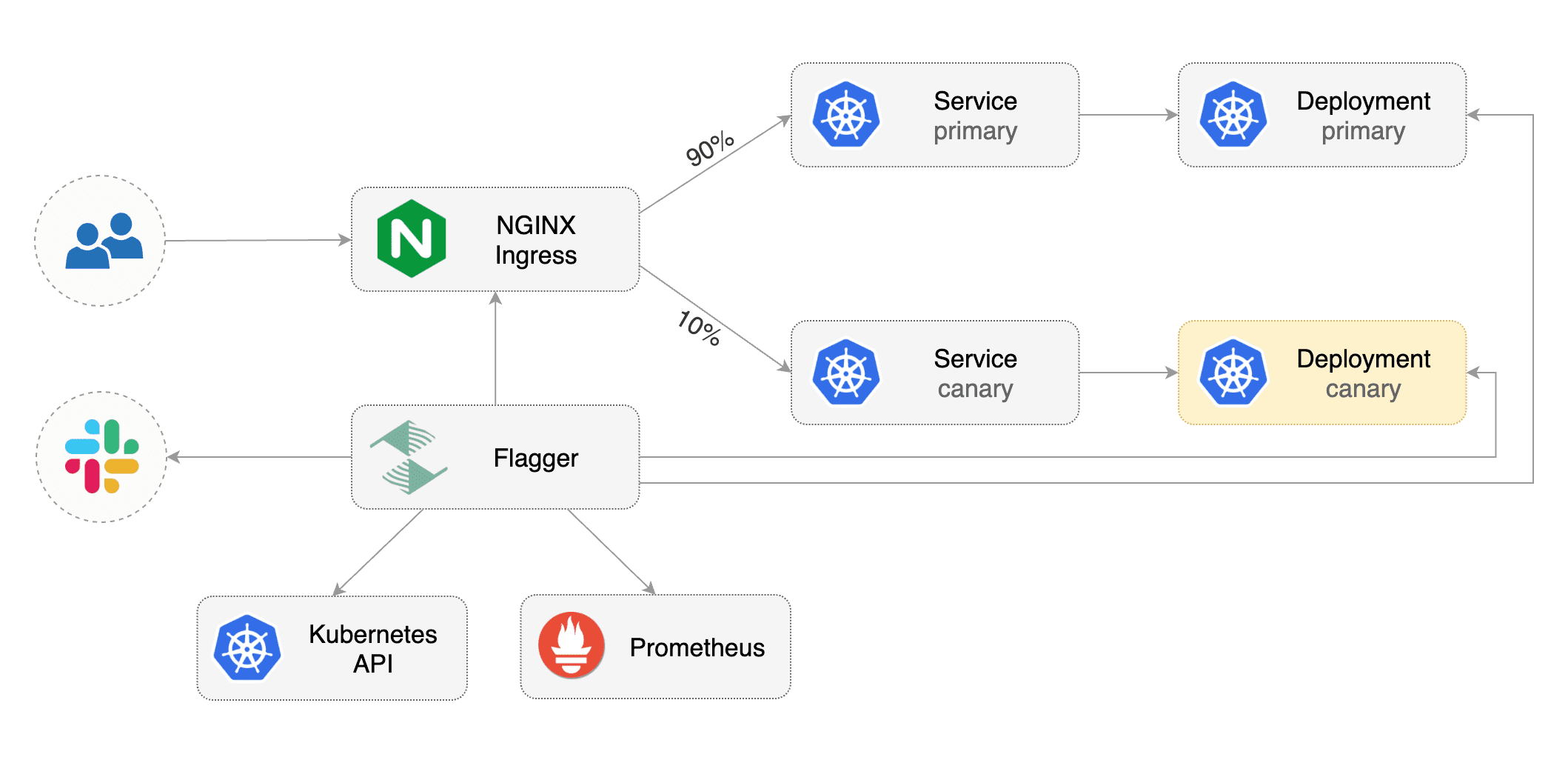

This guide shows you how to use the NGINX ingress controller and Flagger to automate canary deployments and A/B testing.

|

||||

|

||||

|

||||

|

||||

### Prerequisites

|

||||

|

||||

Flagger requires a Kubernetes cluster **v1.11** or newer and NGINX ingress **0.24** or newer.

|

||||

|

||||

Install NGINX with Helm:

|

||||

|

||||

```bash

|

||||

helm upgrade -i nginx-ingress stable/nginx-ingress \

|

||||

--namespace ingress-nginx \

|

||||

--set controller.stats.enabled=true \

|

||||

--set controller.metrics.enabled=true

|

||||

```

|

||||

|

||||

Install Flagger and the Prometheus add-on in the same namespace as NGINX:

|

||||

|

||||

```bash

|

||||

helm repo add flagger https://flagger.app

|

||||

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--namespace ingress-nginx \

|

||||

--set prometheus.install=true \

|

||||

--set meshProvider=nginx

|

||||

```

|

||||

|

||||

Optionally you can enable Slack notifications:

|

||||

|

||||

```bash

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--reuse-values \

|

||||

--namespace ingress-nginx \

|

||||

--set slack.url=https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK \

|

||||

--set slack.channel=general \

|

||||

--set slack.user=flagger

|

||||

```

|

||||

|

||||

### Bootstrap

|

||||

|

||||

Flagger takes a Kubernetes deployment and optionally a horizontal pod autoscaler (HPA),
then creates a series of objects (Kubernetes deployments, ClusterIP services and a canary ingress).
These objects expose the application outside the cluster and drive the canary analysis and promotion.

|

||||

|

||||

Create a test namespace:

|

||||

|

||||

```bash

|

||||

kubectl create ns test

|

||||

```

|

||||

|

||||

Create a deployment and a horizontal pod autoscaler:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ${REPO}/artifacts/nginx/deployment.yaml

|

||||

kubectl apply -f ${REPO}/artifacts/nginx/hpa.yaml

|

||||

```

|

||||

|

||||

Deploy the load testing service to generate traffic during the canary analysis:

|

||||

|

||||

```bash

|

||||

helm upgrade -i flagger-loadtester flagger/loadtester \

|

||||

--namespace=test

|

||||

```

|

||||

|

||||

Create an ingress definition (replace `app.example.com` with your own domain):

|

||||

|

||||

```yaml

|

||||

apiVersion: extensions/v1beta1

|

||||

kind: Ingress

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

annotations:

|

||||

kubernetes.io/ingress.class: "nginx"

|

||||

spec:

|

||||

rules:

|

||||

- host: app.example.com

|

||||

http:

|

||||

paths:

|

||||

- backend:

|

||||

serviceName: podinfo

|

||||

servicePort: 9898

|

||||

```

|

||||

|

||||

Save the above resource as podinfo-ingress.yaml and then apply it:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ./podinfo-ingress.yaml

|

||||

```

|

||||

|

||||

Create a canary custom resource (replace `app.example.com` with your own domain):

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

# ingress reference

|

||||

ingressRef:

|

||||

apiVersion: extensions/v1beta1

|

||||

kind: Ingress

|

||||

name: podinfo

|

||||

# HPA reference (optional)

|

||||

autoscalerRef:

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

# the maximum time in seconds for the canary deployment

|

||||

# to make progress before it is rolled back (default 600s)

|

||||

progressDeadlineSeconds: 60

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 10s

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

# percentage (0-100)

|

||||

maxWeight: 50

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 5

|

||||

# NGINX Prometheus checks

|

||||

metrics:

|

||||

- name: request-success-rate

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

# load testing (optional)

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

type: cmd

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://app.example.com/"

|

||||

```

|

||||

|

||||

Save the above resource as podinfo-canary.yaml and then apply it:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ./podinfo-canary.yaml

|

||||

```

|

||||

|

||||

After a couple of seconds Flagger will create the canary objects:

|

||||

|

||||

```bash

|

||||

# applied

|

||||

deployment.apps/podinfo

|

||||

horizontalpodautoscaler.autoscaling/podinfo

|

||||

ingresses.extensions/podinfo

|

||||

canary.flagger.app/podinfo

|

||||

|

||||

# generated

|

||||

deployment.apps/podinfo-primary

|

||||

horizontalpodautoscaler.autoscaling/podinfo-primary

|

||||

service/podinfo

|

||||

service/podinfo-canary

|

||||

service/podinfo-primary

|

||||

ingresses.extensions/podinfo-canary

|

||||

```

|

||||

|

||||

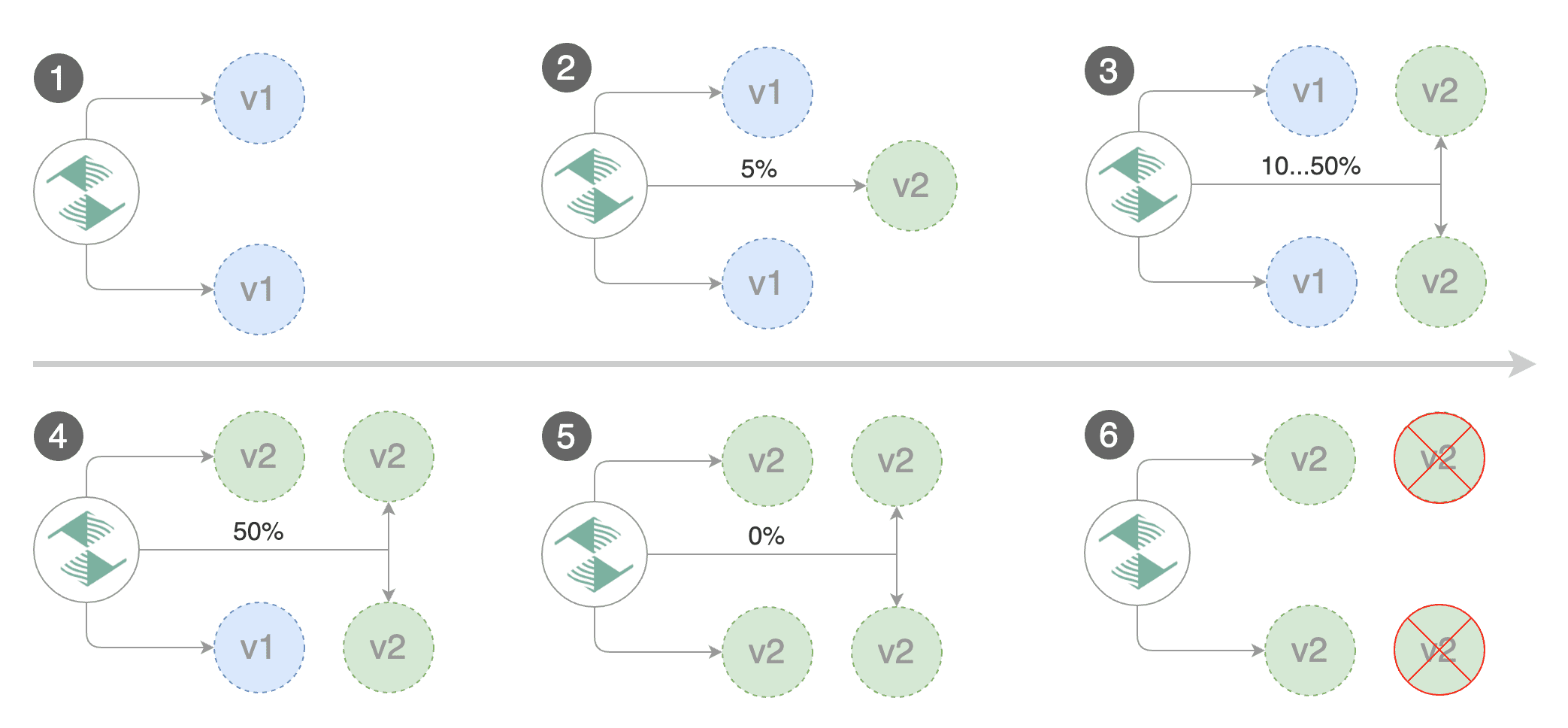

### Automated canary promotion

|

||||

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance indicators
such as HTTP request success rate, average request duration and pod health.
Based on the analysis of these KPIs, a canary is promoted or aborted, and the analysis result is published to Slack.
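
As a rough illustration of the success-rate KPI, the sketch below computes the share of non-5xx responses and compares it with the `threshold: 99` used in this guide. The function names and the raw counter inputs are hypothetical; Flagger reads its metrics from Prometheus, and those queries are not shown here.

```go
package main

import "fmt"

// successRate returns the percentage of requests that did not fail with a 5xx.
// ok is false when there was no traffic, so the caller can decide how to react.
// (Hypothetical helper for illustration, not part of Flagger.)
func successRate(total, serverErrors int) (rate float64, ok bool) {
	if total <= 0 {
		return 0, false
	}
	return float64(total-serverErrors) / float64(total) * 100, true
}

// checkPasses reports whether the measured rate meets the minimum threshold.
func checkPasses(rate, threshold float64) bool {
	return rate >= threshold
}

func main() {
	rate, ok := successRate(1000, 5) // 5 server errors out of 1000 requests
	fmt.Println(ok, checkPasses(rate, 99))
}
```

A check that fails here corresponds to a `Halt ... advancement success rate ... < 99%` event in the canary status.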

|

||||

|

||||

|

||||

|

||||

Trigger a canary deployment by updating the container image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.1

|

||||

```

|

||||

|

||||

Flagger detects that the deployment revision changed and starts a new rollout:

|

||||

|

||||

```text

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Weight: 0

|

||||

Failed Checks: 0

|

||||

Phase: Succeeded

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger New revision detected podinfo.test

|

||||

Normal Synced 3m flagger Scaling up podinfo.test

|

||||

Warning Synced 3m flagger Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 5

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 10

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 15

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 20

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 25

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 30

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 35

|

||||

Normal Synced 55s flagger Advance podinfo.test canary weight 40

|

||||

Normal Synced 45s flagger Advance podinfo.test canary weight 45

|

||||

Normal Synced 35s flagger Advance podinfo.test canary weight 50

|

||||

Normal Synced 25s flagger Copying podinfo.test template spec to podinfo-primary.test

|

||||

Warning Synced 15s flagger Waiting for podinfo-primary.test rollout to finish: 1 of 2 updated replicas are available

|

||||

Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test

|

||||

```
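
The weight sequence in the events above follows from `stepWeight: 5` and `maxWeight: 50`. A minimal sketch of that progression, assuming the weight starts at `stepWeight` and grows by `stepWeight` until it reaches `maxWeight` (the `canaryWeights` helper is hypothetical and only mirrors the log, not Flagger's internals):

```go
package main

import "fmt"

// canaryWeights lists the traffic percentages the canary steps through,
// assuming a fixed increment of stepWeight up to and including maxWeight.
func canaryWeights(stepWeight, maxWeight int) []int {
	var weights []int
	if stepWeight <= 0 {
		return weights // nothing to advance with a non-positive step
	}
	for w := stepWeight; w <= maxWeight; w += stepWeight {
		weights = append(weights, w)
	}
	return weights
}

func main() {
	// Values taken from the canary resource in this guide.
	fmt.Println(canaryWeights(5, 50)) // prints [5 10 15 20 25 30 35 40 45 50]
}
```

With a 10s analysis interval, ten steps mean the canary needs at least ten successful checks before promotion starts.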

|

||||

|

||||

**Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.

|

||||

|

||||

You can monitor all canaries with:

|

||||

|

||||

```bash

|

||||

watch kubectl get canaries --all-namespaces

|

||||

|

||||

NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME

|

||||

test podinfo Progressing 15 2019-05-06T14:05:07Z

|

||||

prod frontend Succeeded 0 2019-05-05T16:15:07Z

|

||||

prod backend Failed 0 2019-05-04T17:05:07Z

|

||||

```

|

||||

|

||||

### Automated rollback

|

||||

|

||||

During the canary analysis you can generate HTTP 500 errors to test whether Flagger pauses the rollout and rolls back the faulty version.

|

||||

|

||||

Trigger another canary deployment:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.2

|

||||

```

|

||||

|

||||

Generate HTTP 500 errors:

|

||||

|

||||

```bash

|

||||

watch curl http://app.example.com/status/500

|

||||

```

|

||||

|

||||

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary,
the canary is scaled to zero and the rollout is marked as failed.

|

||||

|

||||

```text

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Weight: 0

|

||||

Failed Checks: 10

|

||||

Phase: Failed

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger Starting canary deployment for podinfo.test

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 5

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 10

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 15

|

||||

Normal Synced 3m flagger Halt podinfo.test advancement success rate 69.17% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 61.39% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 55.06% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 47.00% < 99%

|

||||

Normal Synced 2m flagger (combined from similar events): Halt podinfo.test advancement success rate 38.08% < 99%

|

||||

Warning Synced 1m flagger Rolling back podinfo.test failed checks threshold reached 10

|

||||

Warning Synced 1m flagger Canary failed! Scaling down podinfo.test

|

||||

```
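
The rollback rule described above can be sketched as a small simulation. This is a simplified model under stated assumptions: one success-rate sample per analysis interval, a failed check halts advancement, and the rollout fails once the failed-check counter reaches the threshold. The `analyse` function and its parameters are hypothetical names, not Flagger's API.

```go
package main

import "fmt"

// analyse walks through one success-rate sample per interval and returns
// the resulting phase together with the number of failed checks.
func analyse(rates []float64, minRate float64, maxFailed, stepWeight, maxWeight int) (string, int) {
	weight, failed := 0, 0
	for _, rate := range rates {
		if rate < minRate {
			failed++
			if failed >= maxFailed {
				return "Failed", failed // traffic goes back to the primary
			}
			continue // halt advancement, keep the current weight
		}
		weight += stepWeight
		if weight >= maxWeight {
			return "Succeeded", failed // canary can be promoted
		}
	}
	return "Progressing", failed
}

func main() {
	// Three healthy intervals followed by ten failing ones,
	// similar to the events shown above.
	rates := []float64{100, 100, 100}
	for i := 0; i < 10; i++ {
		rates = append(rates, 50)
	}
	phase, failed := analyse(rates, 99, 10, 5, 50)
	fmt.Println(phase, failed) // prints Failed 10
}
```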

|

||||

|

||||

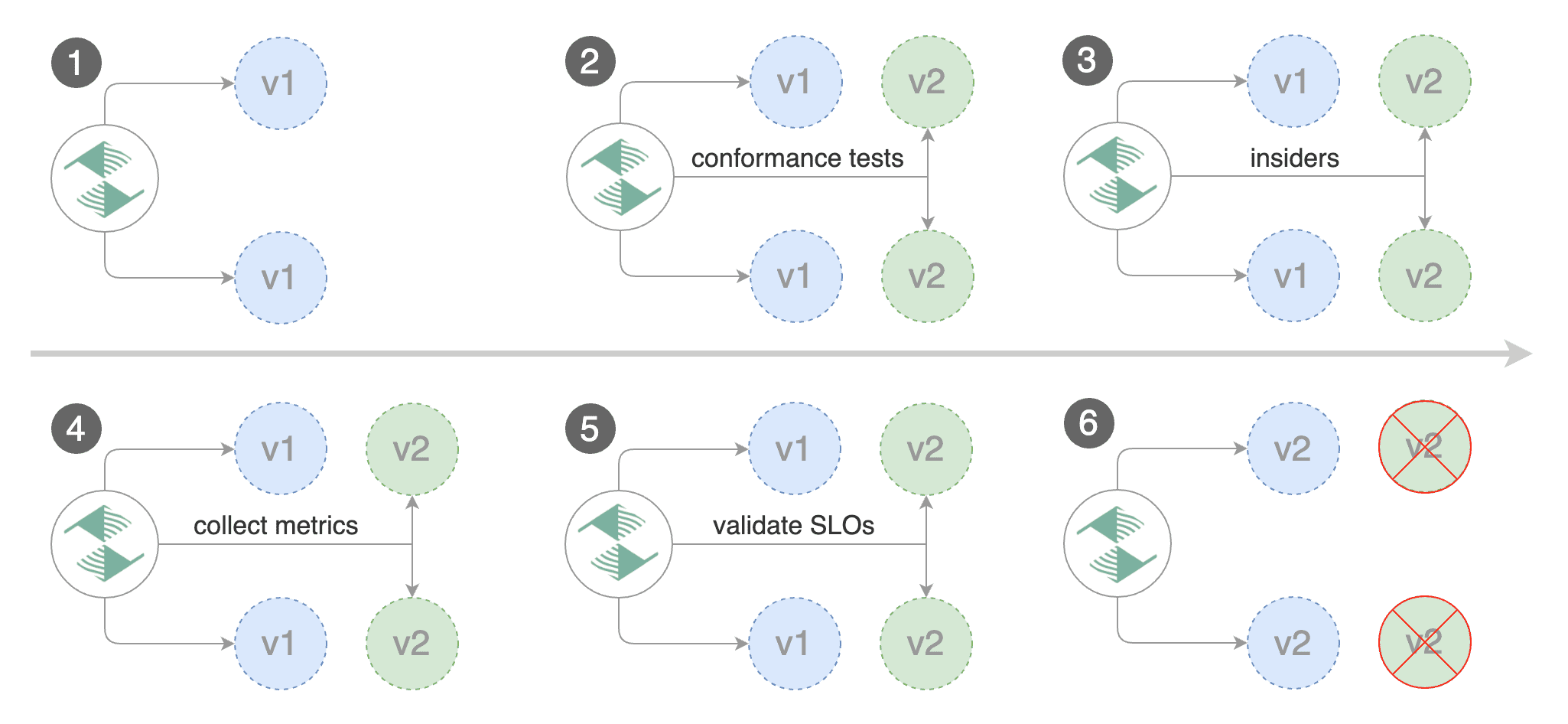

### A/B Testing

|

||||

|

||||

Besides weighted routing, Flagger can be configured to route traffic to the canary based on HTTP match conditions.
In an A/B testing scenario, you'll be using HTTP headers or cookies to target a certain segment of your users.
This is particularly useful for frontend applications that require session affinity.

|

||||

|

||||

|

||||

|

||||

Edit the canary analysis, remove the max/step weight and add the match conditions and iterations:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

interval: 1m

|

||||

threshold: 10

|

||||

iterations: 10

|

||||

match:

|

||||

# curl -H 'X-Canary: insider' http://app.example.com

|

||||

- headers:

|

||||

x-canary:

|

||||

exact: "insider"

|

||||

# curl -b 'canary=always' http://app.example.com

|

||||

- headers:

|

||||

cookie:

|

||||

exact: "canary"

|

||||

metrics:

|

||||

- name: request-success-rate

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://localhost:8888/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

type: cmd

|

||||

cmd: "hey -z 1m -q 10 -c 2 -H 'Cookie: canary=always' http://app.example.com/"

|

||||

logCmdOutput: "true"

|

||||

```

|

||||

|

||||

The above configuration will run an analysis for ten minutes targeting users that have a `canary` cookie set to `always` or
those that call the service using the `X-Canary: insider` header.

|

||||

|

||||

Trigger a canary deployment by updating the container image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.5.0

|

||||

```

|

||||

|

||||

Flagger detects that the deployment revision changed and starts the A/B testing:

|

||||

|

||||

```text
kubectl -n test describe canary/podinfo

Status:
  Failed Checks:         0
  Phase:                 Succeeded
Events:
  Type     Reason  Age   From     Message
  ----     ------  ----  ----     -------
  Normal   Synced  3m    flagger  New revision detected podinfo.test
  Normal   Synced  3m    flagger  Scaling up podinfo.test
  Warning  Synced  3m    flagger  Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available
  Normal   Synced  3m    flagger  Advance podinfo.test canary iteration 1/10
  Normal   Synced  3m    flagger  Advance podinfo.test canary iteration 2/10
  Normal   Synced  3m    flagger  Advance podinfo.test canary iteration 3/10
  Normal   Synced  2m    flagger  Advance podinfo.test canary iteration 4/10
  Normal   Synced  2m    flagger  Advance podinfo.test canary iteration 5/10
  Normal   Synced  1m    flagger  Advance podinfo.test canary iteration 6/10
  Normal   Synced  1m    flagger  Advance podinfo.test canary iteration 7/10
  Normal   Synced  55s   flagger  Advance podinfo.test canary iteration 8/10
  Normal   Synced  45s   flagger  Advance podinfo.test canary iteration 9/10
  Normal   Synced  35s   flagger  Advance podinfo.test canary iteration 10/10
  Normal   Synced  25s   flagger  Copying podinfo.test template spec to podinfo-primary.test
  Warning  Synced  15s   flagger  Waiting for podinfo-primary.test rollout to finish: 1 of 2 updated replicas are available
  Normal   Synced  5s    flagger  Promotion completed! Scaling down podinfo.test
```

@@ -52,6 +52,10 @@ type CanarySpec struct {
	// +optional
	AutoscalerRef *hpav1.CrossVersionObjectReference `json:"autoscalerRef,omitempty"`

	// reference to NGINX ingress resource
	// +optional
	IngressRef *hpav1.CrossVersionObjectReference `json:"ingressRef,omitempty"`

	// virtual service spec
	Service CanaryService `json:"service"`

@@ -205,6 +205,11 @@ func (in *CanarySpec) DeepCopyInto(out *CanarySpec) {
		*out = new(v1.CrossVersionObjectReference)
		**out = **in
	}
	if in.IngressRef != nil {
		in, out := &in.IngressRef, &out.IngressRef
		*out = new(v1.CrossVersionObjectReference)
		**out = **in
	}
	in.Service.DeepCopyInto(&out.Service)
	in.CanaryAnalysis.DeepCopyInto(&out.CanaryAnalysis)
	if in.ProgressDeadlineSeconds != nil {

@@ -632,6 +632,41 @@ func (c *Controller) analyseCanary(r *flaggerv1.Canary) bool {
			}
		}

		// NGINX checks
		if c.meshProvider == "nginx" {
			if metric.Name == "request-success-rate" {
				val, err := c.observer.GetNginxSuccessRate(r.Spec.IngressRef.Name, r.Namespace, metric.Name, metric.Interval)
				if err != nil {
					if strings.Contains(err.Error(), "no values found") {
						c.recordEventWarningf(r, "Halt advancement no values found for metric %s probably %s.%s is not receiving traffic",
							metric.Name, r.Spec.TargetRef.Name, r.Namespace)
					} else {
						c.recordEventErrorf(r, "Metrics server %s query failed: %v", c.observer.GetMetricsServer(), err)
					}
					return false
				}
				if float64(metric.Threshold) > val {
					c.recordEventWarningf(r, "Halt %s.%s advancement success rate %.2f%% < %v%%",
						r.Name, r.Namespace, val, metric.Threshold)
					return false
				}
			}

			if metric.Name == "request-duration" {
				val, err := c.observer.GetNginxRequestDuration(r.Spec.IngressRef.Name, r.Namespace, metric.Name, metric.Interval)
				if err != nil {
					c.recordEventErrorf(r, "Metrics server %s query failed: %v", c.observer.GetMetricsServer(), err)
					return false
				}
				t := time.Duration(metric.Threshold) * time.Millisecond
				if val > t {
					c.recordEventWarningf(r, "Halt %s.%s advancement request duration %v > %v",
						r.Name, r.Namespace, val, t)
					return false
				}
			}
		}

		// custom checks
		if metric.Query != "" {
			val, err := c.observer.GetScalar(metric.Query)
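
The two threshold comparisons in the NGINX checks of this hunk can be isolated in a short Go sketch. The function names `successRateOK` and `durationOK` are hypothetical, and the integer threshold type is an assumption; only the comparison directions and the millisecond conversion are taken from the hunk.

```go
package main

import (
	"fmt"
	"time"
)

// successRateOK mirrors the success-rate check: the analysis halts when the
// threshold is greater than the observed percentage, so it passes otherwise.
// The int threshold type is an assumption made for this sketch.
func successRateOK(thresholdPercent int, observedPercent float64) bool {
	return !(float64(thresholdPercent) > observedPercent)
}

// durationOK mirrors the request-duration check: the threshold is read as
// milliseconds and the analysis halts when the observed latency exceeds it.
// The observed value is truncated to whole milliseconds, as the observer does.
func durationOK(thresholdMs int, observedMs float64) bool {
	limit := time.Duration(thresholdMs) * time.Millisecond
	observed := time.Duration(int64(observedMs)) * time.Millisecond
	return !(observed > limit)
}

func main() {
	fmt.Println(successRateOK(99, 99.2)) // passes: 99.2% is not below 99%
	fmt.Println(successRateOK(99, 98.7)) // halts: 98.7% is below 99%
	fmt.Println(durationOK(500, 500.9))  // passes: truncated to 500ms
	fmt.Println(durationOK(500, 501))    // halts: 501ms exceeds 500ms
}
```

The truncation in `durationOK` means a latency of 500.9ms still passes a 500ms threshold, which is worth keeping in mind when choosing tight limits.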

@@ -0,0 +1,122 @@
package metrics

import (
	"fmt"
	"net/url"
	"strconv"
	"time"
)

const nginxSuccessRateQuery = `
sum(rate(
nginx_ingress_controller_requests{kubernetes_namespace="{{ .Namespace }}",
ingress="{{ .Name }}",
status!~"5.*"}
[{{ .Interval }}]))
/
sum(rate(
nginx_ingress_controller_requests{kubernetes_namespace="{{ .Namespace }}",
ingress="{{ .Name }}"}
[{{ .Interval }}]))
* 100
`

// GetNginxSuccessRate returns the requests success rate (non 5xx) using nginx_ingress_controller_requests metric
func (c *Observer) GetNginxSuccessRate(name string, namespace string, metric string, interval string) (float64, error) {
	if c.metricsServer == "fake" {
		return 100, nil
	}

	meta := struct {
		Name      string
		Namespace string
		Interval  string
	}{
		name,
		namespace,
		interval,
	}

	query, err := render(meta, nginxSuccessRateQuery)
	if err != nil {
		return 0, err
	}

	var rate *float64
	querySt := url.QueryEscape(query)
	result, err := c.queryMetric(querySt)
	if err != nil {
		return 0, err
	}

	for _, v := range result.Data.Result {
		metricValue := v.Value[1]
		switch metricValue.(type) {
		case string:
			f, err := strconv.ParseFloat(metricValue.(string), 64)
			if err != nil {
				return 0, err
			}
			rate = &f
		}
	}
	if rate == nil {
		return 0, fmt.Errorf("no values found for metric %s", metric)
	}
	return *rate, nil
}

const nginxRequestDurationQuery = `
sum(rate(
nginx_ingress_controller_ingress_upstream_latency_seconds_sum{kubernetes_namespace="{{ .Namespace }}",
ingress="{{ .Name }}"}[{{ .Interval }}]))
/
sum(rate(nginx_ingress_controller_ingress_upstream_latency_seconds_count{kubernetes_namespace="{{ .Namespace }}",
ingress="{{ .Name }}"}[{{ .Interval }}])) * 1000
`

// GetNginxRequestDuration returns the avg requests latency using nginx_ingress_controller_ingress_upstream_latency_seconds_sum metric
func (c *Observer) GetNginxRequestDuration(name string, namespace string, metric string, interval string) (time.Duration, error) {
	if c.metricsServer == "fake" {
		return 1, nil
	}

	meta := struct {
		Name      string
		Namespace string
		Interval  string
	}{
		name,
		namespace,
		interval,
	}

	query, err := render(meta, nginxRequestDurationQuery)
	if err != nil {
		return 0, err
	}

	var rate *float64
	querySt := url.QueryEscape(query)
	result, err := c.queryMetric(querySt)
	if err != nil {
		return 0, err
	}

	for _, v := range result.Data.Result {
		metricValue := v.Value[1]
		switch metricValue.(type) {
		case string:
			f, err := strconv.ParseFloat(metricValue.(string), 64)
			if err != nil {
				return 0, err
			}
			rate = &f
		}
	}
	if rate == nil {
		return 0, fmt.Errorf("no values found for metric %s", metric)
	}
	ms := time.Duration(int64(*rate)) * time.Millisecond
	return ms, nil
}
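
Both observer functions read the sample out of `v.Value[1]` and parse it as a string. The sketch below shows why, assuming the standard Prometheus instant-query JSON shape in which each sample is encoded as `[unix_timestamp, "value"]`. The `promResponse` type and `lastValue` function are hypothetical and only model the fields used here; they are not the observer's actual types.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strconv"
)

// promResponse models only the parts of an instant-query response that are
// read in this sketch (hypothetical type, not taken from the diff).
type promResponse struct {
	Data struct {
		Result []struct {
			Value []interface{} `json:"value"`
		} `json:"result"`
	} `json:"data"`
}

// lastValue returns the sample of the last series in the response, or an
// error when the result set is empty.
func lastValue(body []byte) (float64, error) {
	var resp promResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return 0, err
	}
	var found *float64
	for _, r := range resp.Data.Result {
		if len(r.Value) != 2 {
			continue
		}
		// The second element is the sample value, encoded as a JSON string.
		if s, ok := r.Value[1].(string); ok {
			f, err := strconv.ParseFloat(s, 64)
			if err != nil {
				return 0, err
			}
			found = &f
		}
	}
	if found == nil {
		return 0, fmt.Errorf("no values found")
	}
	return *found, nil
}

func main() {
	body := []byte(`{"status":"success","data":{"resultType":"vector","result":[{"metric":{},"value":[1556823494.744,"99.5"]}]}}`)
	v, err := lastValue(body)
	fmt.Println(v, err) // prints "99.5 <nil>"
}
```

An empty `result` array is the case that surfaces as the "no values found" warning during analysis, typically because the ingress received no traffic in the queried interval.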

@@ -0,0 +1,51 @@
package metrics

import (
	"testing"
)

func Test_NginxSuccessRateQueryRender(t *testing.T) {
	meta := struct {
		Name      string
		Namespace string
		Interval  string
	}{
		"podinfo",
		"nginx",
		"1m",
	}

	query, err := render(meta, nginxSuccessRateQuery)
	if err != nil {
		t.Fatal(err)
	}

	expected := `sum(rate(nginx_ingress_controller_requests{kubernetes_namespace="nginx",ingress="podinfo",status!~"5.*"}[1m])) / sum(rate(nginx_ingress_controller_requests{kubernetes_namespace="nginx",ingress="podinfo"}[1m])) * 100`

	if query != expected {
		t.Errorf("\nGot %s \nWanted %s", query, expected)
	}
}

func Test_NginxRequestDurationQueryRender(t *testing.T) {
	meta := struct {
		Name      string
		Namespace string
		Interval  string
	}{
		"podinfo",
		"nginx",
		"1m",
	}

	query, err := render(meta, nginxRequestDurationQuery)
	if err != nil {
		t.Fatal(err)
	}

	expected := `sum(rate(nginx_ingress_controller_ingress_upstream_latency_seconds_sum{kubernetes_namespace="nginx",ingress="podinfo"}[1m])) /sum(rate(nginx_ingress_controller_ingress_upstream_latency_seconds_count{kubernetes_namespace="nginx",ingress="podinfo"}[1m])) * 1000`

	if query != expected {
		t.Errorf("\nGot %s \nWanted %s", query, expected)
	}
}
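
The `render` helper called by these tests is not part of this diff. A minimal sketch of what such a helper could look like, using Go's `text/template` and stripping newlines so the multi-line query collapses to one line, is shown below. The name `renderQuery` and the shortened `exampleQuery` template are hypothetical, and the real helper may treat whitespace differently.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// exampleQuery is a shortened, hypothetical query template used only here.
const exampleQuery = `
sum(rate(
nginx_ingress_controller_requests{ingress="{{ .Name }}"}
[{{ .Interval }}]))
`

// renderQuery fills the template with the fields of meta and joins the
// resulting lines into a single-line query. It is a stand-in for the
// render helper, not its actual implementation.
func renderQuery(meta interface{}, tmpl string) (string, error) {
	t, err := template.New("query").Parse(tmpl)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, meta); err != nil {
		return "", err
	}
	oneLine := strings.ReplaceAll(sb.String(), "\n", "")
	return strings.TrimSpace(oneLine), nil
}

func main() {
	meta := struct {
		Name     string
		Interval string
	}{"podinfo", "1m"}

	query, err := renderQuery(meta, exampleQuery)
	if err != nil {
		fmt.Println("render failed:", err)
		return
	}
	fmt.Println(query)
}
```

Because `text/template` fails when a struct lacks a referenced field, a mismatch between the template and the `meta` struct shows up as an error at render time rather than as a malformed query.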

@@ -41,9 +41,14 @@ func (factory *Factory) KubernetesRouter(label string) *KubernetesRouter {
	}
}

// MeshRouter returns a service mesh router (Istio or AppMesh)
// MeshRouter returns a service mesh router
func (factory *Factory) MeshRouter(provider string) Interface {
	switch {
	case provider == "nginx":
		return &IngressRouter{
			logger:     factory.logger,
			kubeClient: factory.kubeClient,
		}
	case provider == "appmesh":
		return &AppMeshRouter{
			logger: factory.logger,

@@ -0,0 +1,231 @@
package router

import (
	"fmt"
	"github.com/google/go-cmp/cmp"
	flaggerv1 "github.com/weaveworks/flagger/pkg/apis/flagger/v1alpha3"
	"go.uber.org/zap"
	"k8s.io/api/extensions/v1beta1"
	"k8s.io/apimachinery/pkg/api/errors"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/runtime/schema"
	"k8s.io/client-go/kubernetes"
	"strconv"
	"strings"
)

type IngressRouter struct {
	kubeClient kubernetes.Interface
	logger     *zap.SugaredLogger
}

func (i *IngressRouter) Reconcile(canary *flaggerv1.Canary) error {
	if canary.Spec.IngressRef == nil || canary.Spec.IngressRef.Name == "" {
		return fmt.Errorf("ingress selector is empty")
	}

	targetName := canary.Spec.TargetRef.Name
	canaryName := fmt.Sprintf("%s-canary", targetName)
	canaryIngressName := fmt.Sprintf("%s-canary", canary.Spec.IngressRef.Name)

	ingress, err := i.kubeClient.ExtensionsV1beta1().Ingresses(canary.Namespace).Get(canary.Spec.IngressRef.Name, metav1.GetOptions{})
	if err != nil {
		return err
	}

	ingressClone := ingress.DeepCopy()

	// change backend to <deployment-name>-canary
	backendExists := false
	for k, v := range ingressClone.Spec.Rules {
		for x, y := range v.HTTP.Paths {
			if y.Backend.ServiceName == targetName {
				ingressClone.Spec.Rules[k].HTTP.Paths[x].Backend.ServiceName = canaryName
				backendExists = true
				break
			}
		}
	}

	if !backendExists {
		return fmt.Errorf("backend %s not found in ingress %s", targetName, canary.Spec.IngressRef.Name)
	}

	canaryIngress, err := i.kubeClient.ExtensionsV1beta1().Ingresses(canary.Namespace).Get(canaryIngressName, metav1.GetOptions{})

	if errors.IsNotFound(err) {
		ing := &v1beta1.Ingress{
			ObjectMeta: metav1.ObjectMeta{
				Name:      canaryIngressName,
				Namespace: canary.Namespace,
				OwnerReferences: []metav1.OwnerReference{
					*metav1.NewControllerRef(canary, schema.GroupVersionKind{
						Group:   flaggerv1.SchemeGroupVersion.Group,
						Version: flaggerv1.SchemeGroupVersion.Version,
						Kind:    flaggerv1.CanaryKind,
					}),
				},
				Annotations: i.makeAnnotations(ingressClone.Annotations),
				Labels:      ingressClone.Labels,
			},
			Spec: ingressClone.Spec,
		}

		_, err := i.kubeClient.ExtensionsV1beta1().Ingresses(canary.Namespace).Create(ing)
		if err != nil {
			return err
		}

		i.logger.With("canary", fmt.Sprintf("%s.%s", canary.Name, canary.Namespace)).
			Infof("Ingress %s.%s created", ing.GetName(), canary.Namespace)
		return nil
	}

	if err != nil {
		return fmt.Errorf("ingress %s query error %v", canaryIngressName, err)
	}

	if diff := cmp.Diff(ingressClone.Spec, canaryIngress.Spec); diff != "" {
		iClone := canaryIngress.DeepCopy()
		iClone.Spec = ingressClone.Spec

		_, err := i.kubeClient.ExtensionsV1beta1().Ingresses(canary.Namespace).Update(iClone)
		if err != nil {
			return fmt.Errorf("ingress %s update error %v", canaryIngressName, err)
		}

		i.logger.With("canary", fmt.Sprintf("%s.%s", canary.Name, canary.Namespace)).
			Infof("Ingress %s updated", canaryIngressName)
	}

	return nil
}

func (i *IngressRouter) GetRoutes(canary *flaggerv1.Canary) (
	primaryWeight int,
	canaryWeight int,
	err error,
) {
	canaryIngressName := fmt.Sprintf("%s-canary", canary.Spec.IngressRef.Name)
	canaryIngress, err := i.kubeClient.ExtensionsV1beta1().Ingresses(canary.Namespace).Get(canaryIngressName, metav1.GetOptions{})
	if err != nil {
		return 0, 0, err
	}

	// A/B testing
	if len(canary.Spec.CanaryAnalysis.Match) > 0 {
		for k := range canaryIngress.Annotations {
			if k == "nginx.ingress.kubernetes.io/canary-by-cookie" || k == "nginx.ingress.kubernetes.io/canary-by-header" {
				return 0, 100, nil
			}
		}
	}

	// Canary
	for k, v := range canaryIngress.Annotations {
		if k == "nginx.ingress.kubernetes.io/canary-weight" {
			val, err := strconv.Atoi(v)
			if err != nil {
				return 0, 0, err
			}

			canaryWeight = val
			break
		}
	}

	primaryWeight = 100 - canaryWeight
	return
}

func (i *IngressRouter) SetRoutes(
	canary *flaggerv1.Canary,
	primaryWeight int,
	canaryWeight int,
) error {
	canaryIngressName := fmt.Sprintf("%s-canary", canary.Spec.IngressRef.Name)
	canaryIngress, err := i.kubeClient.ExtensionsV1beta1().Ingresses(canary.Namespace).Get(canaryIngressName, metav1.GetOptions{})
	if err != nil {
		return err
	}

	iClone := canaryIngress.DeepCopy()

	// A/B testing
	if len(canary.Spec.CanaryAnalysis.Match) > 0 {
		cookie := ""
		header := ""
		headerValue := ""
		for _, m := range canary.Spec.CanaryAnalysis.Match {
			for k, v := range m.Headers {
				if k == "cookie" {
					cookie = v.Exact
				} else {
					header = k
					headerValue = v.Exact
				}
			}
		}

		iClone.Annotations = i.makeHeaderAnnotations(iClone.Annotations, header, headerValue, cookie)
	} else {
		// canary
		iClone.Annotations["nginx.ingress.kubernetes.io/canary-weight"] = fmt.Sprintf("%v", canaryWeight)
	}

	// toggle canary
	if canaryWeight > 0 {
		iClone.Annotations["nginx.ingress.kubernetes.io/canary"] = "true"
	} else {
		iClone.Annotations = i.makeAnnotations(iClone.Annotations)
	}

	_, err = i.kubeClient.ExtensionsV1beta1().Ingresses(canary.Namespace).Update(iClone)
	if err != nil {
		return fmt.Errorf("ingress %s update error %v", canaryIngressName, err)
	}

	return nil
}

func (i *IngressRouter) makeAnnotations(annotations map[string]string) map[string]string {
	res := make(map[string]string)
	for k, v := range annotations {
		if !strings.Contains(k, "nginx.ingress.kubernetes.io/canary") &&
			!strings.Contains(k, "kubectl.kubernetes.io/last-applied-configuration") {
			res[k] = v
		}
	}

	res["nginx.ingress.kubernetes.io/canary"] = "false"
	res["nginx.ingress.kubernetes.io/canary-weight"] = "0"

	return res
}

func (i *IngressRouter) makeHeaderAnnotations(annotations map[string]string,
	header string, headerValue string, cookie string) map[string]string {
	res := make(map[string]string)
	for k, v := range annotations {
		// filter on the annotation key so that stale canary annotations are dropped
		if !strings.Contains(k, "nginx.ingress.kubernetes.io/canary") {
			res[k] = v
		}
	}

	res["nginx.ingress.kubernetes.io/canary"] = "true"
	res["nginx.ingress.kubernetes.io/canary-weight"] = "0"

	if cookie != "" {
		res["nginx.ingress.kubernetes.io/canary-by-cookie"] = cookie
	}

	if header != "" {
		res["nginx.ingress.kubernetes.io/canary-by-header"] = header
	}

	if headerValue != "" {
		res["nginx.ingress.kubernetes.io/canary-by-header-value"] = headerValue
	}

	return res
}
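
The annotation handling in this file follows one pattern: copy the ingress annotations, drop every existing canary annotation by key, then write the new canary state. The sketch below isolates that pattern for the weighted case and reads the weight back the way `GetRoutes` does. The names `weightAnnotations` and `routeWeights` are hypothetical, and the sketch works on plain maps rather than Kubernetes objects.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

const canaryPrefix = "nginx.ingress.kubernetes.io/canary"

// weightAnnotations returns a copy of in where all canary annotations are
// replaced by a canary toggle and a canary weight.
func weightAnnotations(in map[string]string, weight int) map[string]string {
	out := make(map[string]string, len(in)+2)
	for k, v := range in {
		// Filter on the key, so stale canary-by-header and similar
		// annotations do not survive a switch to weighted routing.
		if !strings.HasPrefix(k, canaryPrefix) {
			out[k] = v
		}
	}
	out[canaryPrefix] = strconv.FormatBool(weight > 0)
	out[canaryPrefix+"-weight"] = strconv.Itoa(weight)
	return out
}

// routeWeights derives the primary and canary weights from the annotations.
// A missing weight annotation is treated as zero canary traffic.
func routeWeights(annotations map[string]string) (primary, canary int, err error) {
	if v, ok := annotations[canaryPrefix+"-weight"]; ok {
		canary, err = strconv.Atoi(v)
		if err != nil {
			return 0, 0, err
		}
	}
	return 100 - canary, canary, nil
}

func main() {
	existing := map[string]string{
		"kubernetes.io/ingress.class":  "nginx",
		canaryPrefix + "-by-header":    "x-canary",
		canaryPrefix + "-by-header-value": "insider",
	}

	updated := weightAnnotations(existing, 20)
	primary, canary, err := routeWeights(updated)
	fmt.Println(primary, canary, err) // prints "80 20 <nil>"
}
```

Keeping the routing state entirely in annotations means the canary ingress itself is the source of truth for the current weight, so no extra state has to be stored between reconciliation runs.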
