mirror of https://github.com/kubeflow/examples.git

Add files via upload

This commit is contained in:

parent

0870f9157a

commit

76e882ed0b

|

|

@ -0,0 +1,61 @@

|

|||

目的 :

|

||||

|

||||

這是一個node-red搭配kubeflow的作品,node-red作為畫面顯示,而kubeflow則是資料處理。在這個作品我將LSTM、mnist節點放在node-red,點擊該節點後,kubeflow則執行對應模型,模型所使用的輸入資料是normal.csv & abnormal.csv,輸出則是資料經模型訓練後的準確度。

|

||||

|

||||

安裝 :

|

||||

|

||||

1. 將檔案 git clone 到 windows/System32

|

||||

2. 執行 Docker

|

||||

3. 執行 Wsl 並依序輸入 cd kube-nodered, cd examples, 最後是 ./run.sh 1.connect-kubeflow

|

||||

4. 查 Docker 是否有生成的容器和映像檔

|

||||

5. 執行 Docker 映像檔並執行 node-red

|

||||

6. 執行 node-red後, 點擊 'intsall dependency', 接著使用者可以在'six pipeline' 頁面執行模型

|

||||

7. 到路徑 kube-nodered\examples\1.connect-kubeflow\py 中調整目錄底下的模型Python檔案,將登錄帳密改為使用者的

|

||||

===============================================================================

|

||||

|

||||

架構 :

|

||||

|

||||

客製化節點的關鍵在於更改.flows.json、加入js.和html到nodepipe資料夾、加入模型python到py資料夾和加入模型pipeline到pipeline資料夾。node-red顯示的部分 : .flow.json更改會影響node-red節點的顯示,nodepipe的js.和html可以客製一個節點的功能欄位,如下拉選單。Kubeflow執行的部分 : 模型的python和pipeline。

|

||||

以下是各個檔案的路徑

|

||||

1. C:\Windows\System32\kube-nodered\examples\1.connect-kubeflo的.flow.json

|

||||

2. C:\Windows\System32\kube-nodered\examples\1.connect-kubeflow\node_modules\nodepipe的js.和html

|

||||

3. C:\Windows\System32\kube-nodered\examples\1.connect-kubeflow\py的模型python

|

||||

4. C:\Windows\System32\kube-nodered\examples\1.connect-kubeflow\py\pipelines的模型pipeline

|

||||

5.

|

||||

================================================================================

|

||||

|

||||

輸入和輸出 :

|

||||

|

||||

輸入資料

|

||||

|

||||

輸出結果

|

||||

|

||||

|

||||

=================================================================================

|

||||

|

||||

操作說明 :

|

||||

|

||||

打開node-red後,先點擊圖中按鈕,安裝所需套件

|

||||

|

||||

點擊圖中圓圈,切換分頁到模型節點

|

||||

|

||||

切換後的分頁

|

||||

|

||||

點擊圖中按鈕,kubeflow會執行對應pipeline

|

||||

|

||||

可在畫面右側確認執行狀況,圖中範例為LSTM pipeline正在執行

|

||||

|

||||

在node-red上點擊執行後,可在kubeflow上看到新增的執行pipeline

|

||||

|

||||

Kubeflow上的執行結果

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

|

@ -0,0 +1,201 @@

|

|||

Apache License

|

||||

Version 2.0, January 2004

|

||||

http://www.apache.org/licenses/

|

||||

|

||||

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

||||

|

||||

1. Definitions.

|

||||

|

||||

"License" shall mean the terms and conditions for use, reproduction,

|

||||

and distribution as defined by Sections 1 through 9 of this document.

|

||||

|

||||

"Licensor" shall mean the copyright owner or entity authorized by

|

||||

the copyright owner that is granting the License.

|

||||

|

||||

"Legal Entity" shall mean the union of the acting entity and all

|

||||

other entities that control, are controlled by, or are under common

|

||||

control with that entity. For the purposes of this definition,

|

||||

"control" means (i) the power, direct or indirect, to cause the

|

||||

direction or management of such entity, whether by contract or

|

||||

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

||||

outstanding shares, or (iii) beneficial ownership of such entity.

|

||||

|

||||

"You" (or "Your") shall mean an individual or Legal Entity

|

||||

exercising permissions granted by this License.

|

||||

|

||||

"Source" form shall mean the preferred form for making modifications,

|

||||

including but not limited to software source code, documentation

|

||||

source, and configuration files.

|

||||

|

||||

"Object" form shall mean any form resulting from mechanical

|

||||

transformation or translation of a Source form, including but

|

||||

not limited to compiled object code, generated documentation,

|

||||

and conversions to other media types.

|

||||

|

||||

"Work" shall mean the work of authorship, whether in Source or

|

||||

Object form, made available under the License, as indicated by a

|

||||

copyright notice that is included in or attached to the work

|

||||

(an example is provided in the Appendix below).

|

||||

|

||||

"Derivative Works" shall mean any work, whether in Source or Object

|

||||

form, that is based on (or derived from) the Work and for which the

|

||||

editorial revisions, annotations, elaborations, or other modifications

|

||||

represent, as a whole, an original work of authorship. For the purposes

|

||||

of this License, Derivative Works shall not include works that remain

|

||||

separable from, or merely link (or bind by name) to the interfaces of,

|

||||

the Work and Derivative Works thereof.

|

||||

|

||||

"Contribution" shall mean any work of authorship, including

|

||||

the original version of the Work and any modifications or additions

|

||||

to that Work or Derivative Works thereof, that is intentionally

|

||||

submitted to Licensor for inclusion in the Work by the copyright owner

|

||||

or by an individual or Legal Entity authorized to submit on behalf of

|

||||

the copyright owner. For the purposes of this definition, "submitted"

|

||||

means any form of electronic, verbal, or written communication sent

|

||||

to the Licensor or its representatives, including but not limited to

|

||||

communication on electronic mailing lists, source code control systems,

|

||||

and issue tracking systems that are managed by, or on behalf of, the

|

||||

Licensor for the purpose of discussing and improving the Work, but

|

||||

excluding communication that is conspicuously marked or otherwise

|

||||

designated in writing by the copyright owner as "Not a Contribution."

|

||||

|

||||

"Contributor" shall mean Licensor and any individual or Legal Entity

|

||||

on behalf of whom a Contribution has been received by Licensor and

|

||||

subsequently incorporated within the Work.

|

||||

|

||||

2. Grant of Copyright License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

copyright license to reproduce, prepare Derivative Works of,

|

||||

publicly display, publicly perform, sublicense, and distribute the

|

||||

Work and such Derivative Works in Source or Object form.

|

||||

|

||||

3. Grant of Patent License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

(except as stated in this section) patent license to make, have made,

|

||||

use, offer to sell, sell, import, and otherwise transfer the Work,

|

||||

where such license applies only to those patent claims licensable

|

||||

by such Contributor that are necessarily infringed by their

|

||||

Contribution(s) alone or by combination of their Contribution(s)

|

||||

with the Work to which such Contribution(s) was submitted. If You

|

||||

institute patent litigation against any entity (including a

|

||||

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

||||

or a Contribution incorporated within the Work constitutes direct

|

||||

or contributory patent infringement, then any patent licenses

|

||||

granted to You under this License for that Work shall terminate

|

||||

as of the date such litigation is filed.

|

||||

|

||||

4. Redistribution. You may reproduce and distribute copies of the

|

||||

Work or Derivative Works thereof in any medium, with or without

|

||||

modifications, and in Source or Object form, provided that You

|

||||

meet the following conditions:

|

||||

|

||||

(a) You must give any other recipients of the Work or

|

||||

Derivative Works a copy of this License; and

|

||||

|

||||

(b) You must cause any modified files to carry prominent notices

|

||||

stating that You changed the files; and

|

||||

|

||||

(c) You must retain, in the Source form of any Derivative Works

|

||||

that You distribute, all copyright, patent, trademark, and

|

||||

attribution notices from the Source form of the Work,

|

||||

excluding those notices that do not pertain to any part of

|

||||

the Derivative Works; and

|

||||

|

||||

(d) If the Work includes a "NOTICE" text file as part of its

|

||||

distribution, then any Derivative Works that You distribute must

|

||||

include a readable copy of the attribution notices contained

|

||||

within such NOTICE file, excluding those notices that do not

|

||||

pertain to any part of the Derivative Works, in at least one

|

||||

of the following places: within a NOTICE text file distributed

|

||||

as part of the Derivative Works; within the Source form or

|

||||

documentation, if provided along with the Derivative Works; or,

|

||||

within a display generated by the Derivative Works, if and

|

||||

wherever such third-party notices normally appear. The contents

|

||||

of the NOTICE file are for informational purposes only and

|

||||

do not modify the License. You may add Your own attribution

|

||||

notices within Derivative Works that You distribute, alongside

|

||||

or as an addendum to the NOTICE text from the Work, provided

|

||||

that such additional attribution notices cannot be construed

|

||||

as modifying the License.

|

||||

|

||||

You may add Your own copyright statement to Your modifications and

|

||||

may provide additional or different license terms and conditions

|

||||

for use, reproduction, or distribution of Your modifications, or

|

||||

for any such Derivative Works as a whole, provided Your use,

|

||||

reproduction, and distribution of the Work otherwise complies with

|

||||

the conditions stated in this License.

|

||||

|

||||

5. Submission of Contributions. Unless You explicitly state otherwise,

|

||||

any Contribution intentionally submitted for inclusion in the Work

|

||||

by You to the Licensor shall be under the terms and conditions of

|

||||

this License, without any additional terms or conditions.

|

||||

Notwithstanding the above, nothing herein shall supersede or modify

|

||||

the terms of any separate license agreement you may have executed

|

||||

with Licensor regarding such Contributions.

|

||||

|

||||

6. Trademarks. This License does not grant permission to use the trade

|

||||

names, trademarks, service marks, or product names of the Licensor,

|

||||

except as required for reasonable and customary use in describing the

|

||||

origin of the Work and reproducing the content of the NOTICE file.

|

||||

|

||||

7. Disclaimer of Warranty. Unless required by applicable law or

|

||||

agreed to in writing, Licensor provides the Work (and each

|

||||

Contributor provides its Contributions) on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

||||

implied, including, without limitation, any warranties or conditions

|

||||

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

||||

PARTICULAR PURPOSE. You are solely responsible for determining the

|

||||

appropriateness of using or redistributing the Work and assume any

|

||||

risks associated with Your exercise of permissions under this License.

|

||||

|

||||

8. Limitation of Liability. In no event and under no legal theory,

|

||||

whether in tort (including negligence), contract, or otherwise,

|

||||

unless required by applicable law (such as deliberate and grossly

|

||||

negligent acts) or agreed to in writing, shall any Contributor be

|

||||

liable to You for damages, including any direct, indirect, special,

|

||||

incidental, or consequential damages of any character arising as a

|

||||

result of this License or out of the use or inability to use the

|

||||

Work (including but not limited to damages for loss of goodwill,

|

||||

work stoppage, computer failure or malfunction, or any and all

|

||||

other commercial damages or losses), even if such Contributor

|

||||

has been advised of the possibility of such damages.

|

||||

|

||||

9. Accepting Warranty or Additional Liability. While redistributing

|

||||

the Work or Derivative Works thereof, You may choose to offer,

|

||||

and charge a fee for, acceptance of support, warranty, indemnity,

|

||||

or other liability obligations and/or rights consistent with this

|

||||

License. However, in accepting such obligations, You may act only

|

||||

on Your own behalf and on Your sole responsibility, not on behalf

|

||||

of any other Contributor, and only if You agree to indemnify,

|

||||

defend, and hold each Contributor harmless for any liability

|

||||

incurred by, or claims asserted against, such Contributor by reason

|

||||

of your accepting any such warranty or additional liability.

|

||||

|

||||

END OF TERMS AND CONDITIONS

|

||||

|

||||

APPENDIX: How to apply the Apache License to your work.

|

||||

|

||||

To apply the Apache License to your work, attach the following

|

||||

boilerplate notice, with the fields enclosed by brackets "[]"

|

||||

replaced with your own identifying information. (Don't include

|

||||

the brackets!) The text should be enclosed in the appropriate

|

||||

comment syntax for the file format. We also recommend that a

|

||||

file or class name and description of purpose be included on the

|

||||

same "printed page" as the copyright notice for easier

|

||||

identification within third-party archives.

|

||||

|

||||

Copyright [yyyy] [name of copyright owner]

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License");

|

||||

you may not use this file except in compliance with the License.

|

||||

You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software

|

||||

distributed under the License is distributed on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

|

|

@ -0,0 +1,354 @@

|

|||

# Kube-node-red(en)

|

||||

[](https://hackmd.io/cocSOGQMR-qzo7DHdwgRsQ)

|

||||

|

||||

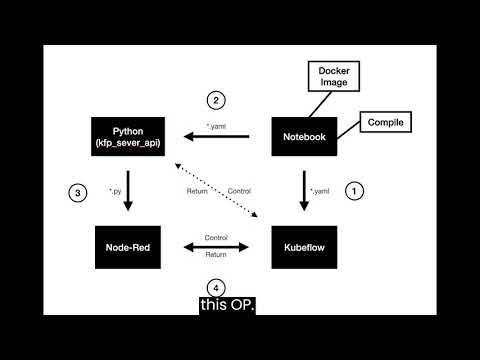

Kube-node-red is aiming to integrate Kubeflow/Kubebeters with node-red, leveraging node-red's low-code modules, and using Kubeflow resources (e.g. Kubeflow pipeline, Kserve) to enhance its AI/ML ability.

|

||||

## Table of Contents

|

||||

<!-- toc -->

|

||||

|

||||

- [Installation](#installation)

|

||||

* [Prerequisites](#Prerequisites)

|

||||

* [Building](#Building)

|

||||

* [Install dependencies](#Install-dependencies)

|

||||

- [Using our nodes](#Using-our-nodes)

|

||||

- [Test python files to interact with kubeflow](#Test-python-files-to-interact-with-kubeflow)

|

||||

- [possible problems and solution](#possible-problems-and-solution)

|

||||

- [Modify your own custom nodes/pipeline](#Modify-your-own-custom-nodes/pipeline)

|

||||

* [Kubeflow part](#Kubeflow-part)

|

||||

* [Node-red part](#Node-red-part)

|

||||

- [Architecture](#Architecture)

|

||||

- [Demo](#Demo)

|

||||

- [Reference](#Reference)

|

||||

|

||||

<!-- tocstop -->

|

||||

|

||||

# Installation

|

||||

## Prerequisites

|

||||

- `Kubeflow`

|

||||

As this project focused on the node-red integration with Kubeflow, one running Kubeflow instance should be ready on a publicly available network.

|

||||

(If you need to provision your own Kubeflow instance, you could refer to our [mulitkf](https://github.com/footprintai/multikf) project to allocate one instance for developing.)

|

||||

- [`WSL`](https://learn.microsoft.com/en-us/windows/wsl/install) If you are Windows OS.

|

||||

- [`Docker`](https://www.docker.com)

|

||||

|

||||

## Building

|

||||

|

||||

We organized some examples under examples folder, and make sensitive information pass via environment variables. Please refer the following example to launch an individual example:

|

||||

|

||||

1. In terminal (If you on Windows system, please use WSL)

|

||||

```

|

||||

$ git clone https://github.com/NightLightTw/kube-nodered.git

|

||||

```

|

||||

2. Enter target folder

|

||||

```

|

||||

cd kube-nodered/examples

|

||||

```

|

||||

3. Enter account information and start

|

||||

```

|

||||

KUBEFLOW_HOST=<your-kubeflow-instance-endpoint> \

|

||||

KUBEFLOW_USERNAME=<your-username-account> \

|

||||

KUBEFLOW_PASSWORD=<your-password> \

|

||||

./run.sh <example-index>

|

||||

```

|

||||

> **Info:** Here <example-index> please use 1.connect-kubeflow

|

||||

|

||||

## Install dependencies

|

||||

|

||||

1. Then you can go to UI, check it out: http://127.0.0.1:1880/

|

||||

|

||||

|

||||

2. Click the “install dependency” button to install dependency items such as specific python libraries and wait for its completion

|

||||

|

||||

|

||||

3. Click the “list experiments” button to test the environment work!

|

||||

|

||||

|

||||

## Using our nodes

|

||||

Switch to the "three-pipeline" flow and press the button to trigger the pipeline process

|

||||

|

||||

|

||||

|

||||

On kubeflow:

|

||||

|

||||

|

||||

|

||||

|

||||

> **Info:** If the environment variable does not work, please fill in the account password directly in the python file

|

||||

|

||||

|

||||

## Test python files to interact with kubeflow

|

||||

```

|

||||

# Open another terminal and check docker status

|

||||

docker ps

|

||||

#enter container

|

||||

docker exec -it <containerID> bash

|

||||

#enter document folder

|

||||

cd /data/1.connect-kubeflow/py/api_examples

|

||||

#execute function

|

||||

python3 <file-name>

|

||||

```

|

||||

|

||||

You can test the file in api_example

|

||||

> **Info:** Some of these files require a custom name, description, or assigned id in <change yours>

|

||||

|

||||

## Possible problems and solution

|

||||

Q1: MissingSchema Invalid URL ''

|

||||

|

||||

A1: This problem means that the login information is not accessed correctly, which may be caused by the environment variable not being read.

|

||||

You can directly override the login information of the specified file

|

||||

|

||||

ex:

|

||||

|

||||

Change to your own login information

|

||||

```

|

||||

host = "https://example@test.com"

|

||||

username = "test01"

|

||||

password = "123456"

|

||||

```

|

||||

|

||||

# Modify your own custom nodes/pipeline

|

||||

|

||||

|

||||

## Kubeflow part

|

||||

### Custom make pipeline’s yaml file

|

||||

Please refer to [Kubeflow implementation:add Random Forest algorithm](https://hackmd.io/@Nhi7So-lTz2m5R6pHyCLcA/Sk1eZFTbh)

|

||||

|

||||

### Take changing randomForest.py as an example

|

||||

|

||||

Modify using your own yaml file path

|

||||

> **Info:** Line 66: uploadfile='pipelines/only_randomforest.yaml'

|

||||

|

||||

> **Info:** Line 122~129 use json parser for filtering different outputs from get_run()

|

||||

|

||||

```python=

|

||||

from __future__ import print_function

|

||||

|

||||

import time

|

||||

import kfp_server_api

|

||||

import os

|

||||

import requests

|

||||

import string

|

||||

import random

|

||||

import json

|

||||

from kfp_server_api.rest import ApiException

|

||||

from pprint import pprint

|

||||

from kfp_login import get_istio_auth_session

|

||||

from kfp_namespace import retrieve_namespaces

|

||||

|

||||

host = os.getenv("KUBEFLOW_HOST")

|

||||

username = os.getenv("KUBEFLOW_USERNAME")

|

||||

password = os.getenv("KUBEFLOW_PASSWORD")

|

||||

|

||||

auth_session = get_istio_auth_session(

|

||||

url=host,

|

||||

username=username,

|

||||

password=password

|

||||

)

|

||||

|

||||

# The client must configure the authentication and authorization parameters

|

||||

# in accordance with the API server security policy.

|

||||

# Examples for each auth method are provided below, use the example that

|

||||

# satisfies your auth use case.

|

||||

|

||||

# Configure API key authorization: Bearer

|

||||

configuration = kfp_server_api.Configuration(

|

||||

host = os.path.join(host, "pipeline"),

|

||||

)

|

||||

configuration.debug = True

|

||||

|

||||

namespaces = retrieve_namespaces(host, auth_session)

|

||||

#print("available namespace: {}".format(namespaces))

|

||||

|

||||

def random_suffix() -> string:

|

||||

return ''.join(random.choices(string.ascii_lowercase + string.digits, k=10))

|

||||

|

||||

# Enter a context with an instance of the API client

|

||||

with kfp_server_api.ApiClient(configuration, cookie=auth_session["session_cookie"]) as api_client:

|

||||

# Create an instance of the Experiment API class

|

||||

experiment_api_instance = kfp_server_api.ExperimentServiceApi(api_client)

|

||||

name="experiment-" + random_suffix()

|

||||

description="This is a experiment for only_randomforest."

|

||||

resource_reference_key_id = namespaces[0]

|

||||

resource_references=[kfp_server_api.models.ApiResourceReference(

|

||||

key=kfp_server_api.models.ApiResourceKey(

|

||||

type=kfp_server_api.models.ApiResourceType.NAMESPACE,

|

||||

id=resource_reference_key_id

|

||||

),

|

||||

relationship=kfp_server_api.models.ApiRelationship.OWNER

|

||||

)]

|

||||

body = kfp_server_api.ApiExperiment(name=name, description=description, resource_references=resource_references) # ApiExperiment | The experiment to be created.

|

||||

try:

|

||||

# Creates a new experiment.

|

||||

experiment_api_response = experiment_api_instance.create_experiment(body)

|

||||

experiment_id = experiment_api_response.id # str | The ID of the run to be retrieved.

|

||||

except ApiException as e:

|

||||

print("Exception when calling ExperimentServiceApi->create_experiment: %s\n" % e)

|

||||

|

||||

# Create an instance of the pipeline API class

|

||||

api_instance = kfp_server_api.PipelineUploadServiceApi(api_client)

|

||||

uploadfile='pipelines/only_randomforest.yaml'

|

||||

name='pipeline-' + random_suffix()

|

||||

description="This is a only_randomForest pipline."

|

||||

try:

|

||||

pipeline_api_response = api_instance.upload_pipeline(uploadfile, name=name, description=description)

|

||||

pipeline_id = pipeline_api_response.id # str | The ID of the run to be retrieved.

|

||||

except ApiException as e:

|

||||

print("Exception when calling PipelineUploadServiceApi->upload_pipeline: %s\n" % e)

|

||||

|

||||

# Create an instance of the run API class

|

||||

run_api_instance = kfp_server_api.RunServiceApi(api_client)

|

||||

display_name = 'run_only_randomForest' + random_suffix()

|

||||

description = "This is a only_randomForest run."

|

||||

pipeline_spec = kfp_server_api.ApiPipelineSpec(pipeline_id=pipeline_id)

|

||||

resource_reference_key_id = namespaces[0]

|

||||

resource_references=[kfp_server_api.models.ApiResourceReference(

|

||||

key=kfp_server_api.models.ApiResourceKey(id=experiment_id, type=kfp_server_api.models.ApiResourceType.EXPERIMENT),

|

||||

relationship=kfp_server_api.models.ApiRelationship.OWNER )]

|

||||

body = kfp_server_api.ApiRun(name=display_name, description=description, pipeline_spec=pipeline_spec, resource_references=resource_references) # ApiRun |

|

||||

try:

|

||||

# Creates a new run.

|

||||

run_api_response = run_api_instance.create_run(body)

|

||||

run_id = run_api_response.run.id # str | The ID of the run to be retrieved.

|

||||

except ApiException as e:

|

||||

print("Exception when calling RunServiceApi->create_run: %s\n" % e)

|

||||

|

||||

Completed_flag = False

|

||||

polling_interval = 10 # Time in seconds between polls

|

||||

|

||||

while not Completed_flag:

|

||||

try:

|

||||

time.sleep(1)

|

||||

# Finds a specific run by ID.

|

||||

api_instance = run_api_instance.get_run(run_id)

|

||||

output = api_instance.pipeline_runtime.workflow_manifest

|

||||

output = json.loads(output)

|

||||

|

||||

try:

|

||||

nodes = output['status']['nodes']

|

||||

conditions = output['status']['conditions'] # Comfirm completion.

|

||||

|

||||

except KeyError:

|

||||

nodes = {}

|

||||

conditions = []

|

||||

|

||||

output_value = None

|

||||

Completed_flag = conditions[1]['status'] if len(conditions) > 1 else False

|

||||

|

||||

except ApiException as e:

|

||||

print("Exception when calling RunServiceApi->get_run: %s\n" % e)

|

||||

break

|

||||

|

||||

if not Completed_flag:

|

||||

print("Pipeline is still running. Waiting...")

|

||||

time.sleep(polling_interval-1)

|

||||

|

||||

for node_id, node in nodes.items():

|

||||

if 'inputs' in node and 'parameters' in node['inputs']:

|

||||

for parameter in node['inputs']['parameters']:

|

||||

if parameter['name'] == 'random-forest-classifier-Accuracy': #change parameter

|

||||

output_value = parameter['value']

|

||||

|

||||

if output_value is not None:

|

||||

print(f"Random Forest Classifier Accuracy: {output_value}")

|

||||

else:

|

||||

print("Parameter not found.")

|

||||

print(nodes)

|

||||

```

|

||||

|

||||

## Node-red part

|

||||

**Package nodered pyshell node**

|

||||

|

||||

**A node mainly consists of two files**

|

||||

* **Javascript file(.js)**

|

||||

define what the node does

|

||||

* **HTML file(.html)**

|

||||

Define the properties of the node and the windows and help messages in the Node-RED editor

|

||||

|

||||

**When finally package into npm module, will need package.json**

|

||||

|

||||

|

||||

### **package.json**

|

||||

A standard file for describing the content of node.js modules

|

||||

|

||||

A standard package.json can be generated using npm init. This command will ask a series of questions to find a reasonable default value. When asked for the name of the module name:<default value> enter the example name node-red-contrib-<self_defined>

|

||||

|

||||

When it is established, you need to manually add the node-red attribute

|

||||

*p.s. Where the example files need to be changed *

|

||||

|

||||

|

||||

|

||||

```json=

|

||||

{

|

||||

"name": "node-red-contrib-pythonshell-custom",

|

||||

...

|

||||

"node-red": {

|

||||

"nodes": {

|

||||

"decisionTree": "decisiontree.js",

|

||||

"randomForest": "randomforest.js",

|

||||

"logisticRegression": "logisticregression.js"

|

||||

"<self_defined>":"<self_defined.js>"

|

||||

}

|

||||

},

|

||||

...

|

||||

}

|

||||

|

||||

```

|

||||

### **HTML**

|

||||

```javascript=

|

||||

<script type="text/javascript">

|

||||

# Replace the node name displayed/registered in the palette

|

||||

RED.nodes.registerType('decisionTree',{

|

||||

category: 'input',

|

||||

defaults: {

|

||||

name: {required: false},

|

||||

# Replace the .py path to be used

|

||||

pyfile: {value: "/data/1.connect-kubeflow/py/decisionTree.py"},

|

||||

virtualenv: {required: false},

|

||||

continuous: {required: false},

|

||||

stdInData: {required: false},

|

||||

python3: {required: false}

|

||||

},

|

||||

```

|

||||

### **Javascript(main function)**

|

||||

1.Open decisionTree.js

|

||||

```javascript=

|

||||

function PythonshellInNode(config) {

|

||||

if (!config.pyfile){

|

||||

throw 'pyfile not present';

|

||||

}

|

||||

this.pythonExec = config.python3 ? "python3" : "python";

|

||||

# Replace the path or change the following path to config.pyfile

|

||||

this.pyfile = "/data/1.connect-kubeflow/py/decisionTree.py";

|

||||

this.virtualenv = config.virtualenv;

|

||||

```

|

||||

2.Open deccisiontree.js

|

||||

```javascript=

|

||||

var util = require("util");

|

||||

var httpclient;

|

||||

#change the path/file name of the module file

|

||||

var PythonshellNode = require('./decisionTree');

|

||||

|

||||

# To change the name to be registered, it must be consistent with the change of .html

|

||||

RED.nodes.registerType("decisionTree", PythonshellInNode);

|

||||

```

|

||||

|

||||

### Connect nodered

|

||||

Import the folder where the above file is located to the node_modules directory of the container

|

||||

e.g. docker desktop

|

||||

|

||||

e.g. wsl

|

||||

|

||||

|

||||

## Architecture

|

||||

|

||||

|

||||

## Demo

|

||||

[](https://youtu.be/72tXYl6FcvU)

|

||||

|

||||

## Reference

|

||||

https://github.com/NightLightTw/kube-nodered

|

||||

|

||||

https://github.com/kubeflow/pipelines/tree/1.8.21/backend/api/python_http_client

|

||||

|

||||

[Kubeflow implementation:add Random Forest algorithm](https://hackmd.io/@ZJ2023/BJYQGMvJ6)

|

||||

|

||||

|

||||

|

||||

|

|

@ -0,0 +1,101 @@

|

|||

[

|

||||

{

|

||||

"id": "f6f2187d.f17ca8",

|

||||

"type": "tab",

|

||||

"label": "Flow 1",

|

||||

"disabled": false,

|

||||

"info": ""

|

||||

},

|

||||

{

|

||||

"id": "3cc11d24.ff01a2",

|

||||

"type": "comment",

|

||||

"z": "f6f2187d.f17ca8",

|

||||

"name": "WARNING: please check you have started this container with a volume that is mounted to /data\\n otherwise any flow changes are lost when you redeploy or upgrade the container\\n (e.g. upgrade to a more recent node-red docker image).\\n If you are using named volumes you can ignore this warning.\\n Double click or see info side panel to learn how to start Node-RED in Docker to save your work",

|

||||

"info": "\nTo start docker with a bind mount volume (-v option), for example:\n\n```\ndocker run -it -p 1880:1880 -v /home/user/node_red_data:/data --name mynodered nodered/node-red\n```\n\nwhere `/home/user/node_red_data` is a directory on your host machine where you want to store your flows.\n\nIf you do not do this then you can experiment and redploy flows, but if you restart or upgrade the container the flows will be disconnected and lost. \n\nThey will still exist in a hidden data volume, which can be recovered using standard docker techniques, but that is much more complex than just starting with a named volume as described above.",

|

||||

"x": 350,

|

||||

"y": 80,

|

||||

"wires": []

|

||||

},

|

||||

{

|

||||

"id": "c228c538ddfd97cc",

|

||||

"type": "inject",

|

||||

"z": "f6f2187d.f17ca8",

|

||||

"name": "",

|

||||

"props": [

|

||||

{

|

||||

"p": "payload"

|

||||

},

|

||||

{

|

||||

"p": "topic",

|

||||

"vt": "str"

|

||||

}

|

||||

],

|

||||

"repeat": "",

|

||||

"crontab": "",

|

||||

"once": false,

|

||||

"onceDelay": 0.1,

|

||||

"topic": "",

|

||||

"payload": "",

|

||||

"payloadType": "date",

|

||||

"x": 300,

|

||||

"y": 440,

|

||||

"wires": [

|

||||

[

|

||||

"fae8437b33358ca0"

|

||||

]

|

||||

]

|

||||

},

|

||||

{

|

||||

"id": "fae8437b33358ca0",

|

||||

"type": "function",

|

||||

"z": "f6f2187d.f17ca8",

|

||||

"name": "",

|

||||

"func": "// Create a Date object from the payload\nvar date = new Date(msg.payload);\n// Change the payload to be a formatted Date string\nmsg.payload = date.toString();\n// Return the message so it can be sent on\nreturn msg;",

|

||||

"outputs": 1,

|

||||

"noerr": 0,

|

||||

"initialize": "",

|

||||

"finalize": "",

|

||||

"libs": [],

|

||||

"x": 480,

|

||||

"y": 440,

|

||||

"wires": [

|

||||

[

|

||||

"7588c855ba3f1c81"

|

||||

]

|

||||

]

|

||||

},

|

||||

{

|

||||

"id": "7588c855ba3f1c81",

|

||||

"type": "pythonshell in",

|

||||

"z": "f6f2187d.f17ca8",

|

||||

"name": "hellepython",

|

||||

"pyfile": "/data/0.helloworld/helloworld.py",

|

||||

"virtualenv": "",

|

||||

"continuous": true,

|

||||

"stdInData": true,

|

||||

"x": 670,

|

||||

"y": 440,

|

||||

"wires": [

|

||||

[

|

||||

"b126ea03f7d74573"

|

||||

]

|

||||

]

|

||||

},

|

||||

{

|

||||

"id": "b126ea03f7d74573",

|

||||

"type": "debug",

|

||||

"z": "f6f2187d.f17ca8",

|

||||

"name": "",

|

||||

"active": true,

|

||||

"tosidebar": true,

|

||||

"console": false,

|

||||

"tostatus": false,

|

||||

"complete": "payload",

|

||||

"targetType": "msg",

|

||||

"statusVal": "",

|

||||

"statusType": "auto",

|

||||

"x": 870,

|

||||

"y": 440,

|

||||

"wires": []

|

||||

}

|

||||

]

|

||||

|

|

@ -0,0 +1,3 @@

|

|||

{

|

||||

"$": "debb1e3e4666ba98dd5189a6e20b7e40jk4="

|

||||

}

|

||||

|

|

@ -0,0 +1,6 @@

|

|||

import sys

|

||||

|

||||

while True:

|

||||

line = sys.stdin.readline()

|

||||

print('this is send from python')

|

||||

print(line)

|

||||

|

|

@ -0,0 +1,6 @@

|

|||

{

|

||||

"name": "node-red-project",

|

||||

"description": "A Node-RED Project",

|

||||

"version": "0.0.1",

|

||||

"private": true

|

||||

}

|

||||

|

|

@ -0,0 +1,498 @@

|

|||

/**

|

||||

* This is the default settings file provided by Node-RED.

|

||||

*

|

||||

* It can contain any valid JavaScript code that will get run when Node-RED

|

||||

* is started.

|

||||

*

|

||||

* Lines that start with // are commented out.

|

||||

* Each entry should be separated from the entries above and below by a comma ','

|

||||

*

|

||||

* For more information about individual settings, refer to the documentation:

|

||||

* https://nodered.org/docs/user-guide/runtime/configuration

|

||||

*

|

||||

* The settings are split into the following sections:

|

||||

* - Flow File and User Directory Settings

|

||||

* - Security

|

||||

* - Server Settings

|

||||

* - Runtime Settings

|

||||

* - Editor Settings

|

||||

* - Node Settings

|

||||

*

|

||||

**/

|

||||

|

||||

module.exports = {

|

||||

|

||||

/*******************************************************************************

|

||||

* Flow File and User Directory Settings

|

||||

* - flowFile

|

||||

* - credentialSecret

|

||||

* - flowFilePretty

|

||||

* - userDir

|

||||

* - nodesDir

|

||||

******************************************************************************/

|

||||

|

||||

/** The file containing the flows. If not set, defaults to flows_<hostname>.json **/

|

||||

flowFile: 'flows.json',

|

||||

|

||||

/** By default, credentials are encrypted in storage using a generated key. To

|

||||

* specify your own secret, set the following property.

|

||||

* If you want to disable encryption of credentials, set this property to false.

|

||||

* Note: once you set this property, do not change it - doing so will prevent

|

||||

* node-red from being able to decrypt your existing credentials and they will be

|

||||

* lost.

|

||||

*/

|

||||

//credentialSecret: "a-secret-key",

|

||||

credentialSecret: process.env.NODE_RED_CREDENTIAL_SECRET,

|

||||

|

||||

/** By default, the flow JSON will be formatted over multiple lines making

|

||||

* it easier to compare changes when using version control.

|

||||

* To disable pretty-printing of the JSON set the following property to false.

|

||||

*/

|

||||

flowFilePretty: true,

|

||||

|

||||

/** By default, all user data is stored in a directory called `.node-red` under

|

||||

* the user's home directory. To use a different location, the following

|

||||

* property can be used

|

||||

*/

|

||||

//userDir: '/home/nol/.node-red/',

|

||||

|

||||

/** Node-RED scans the `nodes` directory in the userDir to find local node files.

|

||||

* The following property can be used to specify an additional directory to scan.

|

||||

*/

|

||||

//nodesDir: '/home/nol/.node-red/nodes',

|

||||

|

||||

/*******************************************************************************

|

||||

* Security

|

||||

* - adminAuth

|

||||

* - https

|

||||

* - httpsRefreshInterval

|

||||

* - requireHttps

|

||||

* - httpNodeAuth

|

||||

* - httpStaticAuth

|

||||

******************************************************************************/

|

||||

|

||||

/** To password protect the Node-RED editor and admin API, the following

|

||||

* property can be used. See http://nodered.org/docs/security.html for details.

|

||||

*/

|

||||

//adminAuth: {

|

||||

// type: "credentials",

|

||||

// users: [{

|

||||

// username: "admin",

|

||||

// password: "$2a$08$zZWtXTja0fB1pzD4sHCMyOCMYz2Z6dNbM6tl8sJogENOMcxWV9DN.",

|

||||

// permissions: "*"

|

||||

// }]

|

||||

//},

|

||||

|

||||

/** The following property can be used to enable HTTPS

|

||||

* This property can be either an object, containing both a (private) key

|

||||

* and a (public) certificate, or a function that returns such an object.

|

||||

* See http://nodejs.org/api/https.html#https_https_createserver_options_requestlistener

|

||||

* for details of its contents.

|

||||

*/

|

||||

|

||||

/** Option 1: static object */

|

||||

//https: {

|

||||

// key: require("fs").readFileSync('privkey.pem'),

|

||||

// cert: require("fs").readFileSync('cert.pem')

|

||||

//},

|

||||

|

||||

/** Option 2: function that returns the HTTP configuration object */

|

||||

// https: function() {

|

||||

// // This function should return the options object, or a Promise

|

||||

// // that resolves to the options object

|

||||

// return {

|

||||

// key: require("fs").readFileSync('privkey.pem'),

|

||||

// cert: require("fs").readFileSync('cert.pem')

|

||||

// }

|

||||

// },

|

||||

|

||||

/** If the `https` setting is a function, the following setting can be used

|

||||

* to set how often, in hours, the function will be called. That can be used

|

||||

* to refresh any certificates.

|

||||

*/

|

||||

//httpsRefreshInterval : 12,

|

||||

|

||||

/** The following property can be used to cause insecure HTTP connections to

|

||||

* be redirected to HTTPS.

|

||||

*/

|

||||

//requireHttps: true,

|

||||

|

||||

/** To password protect the node-defined HTTP endpoints (httpNodeRoot),

|

||||

* including node-red-dashboard, or the static content (httpStatic), the

|

||||

* following properties can be used.

|

||||

* The `pass` field is a bcrypt hash of the password.

|

||||

* See http://nodered.org/docs/security.html#generating-the-password-hash

|

||||

*/

|

||||

//httpNodeAuth: {user:"user",pass:"$2a$08$zZWtXTja0fB1pzD4sHCMyOCMYz2Z6dNbM6tl8sJogENOMcxWV9DN."},

|

||||

//httpStaticAuth: {user:"user",pass:"$2a$08$zZWtXTja0fB1pzD4sHCMyOCMYz2Z6dNbM6tl8sJogENOMcxWV9DN."},

|

||||

|

||||

/*******************************************************************************

|

||||

* Server Settings

|

||||

* - uiPort

|

||||

* - uiHost

|

||||

* - apiMaxLength

|

||||

* - httpServerOptions

|

||||

* - httpAdminRoot

|

||||

* - httpAdminMiddleware

|

||||

* - httpNodeRoot

|

||||

* - httpNodeCors

|

||||

* - httpNodeMiddleware

|

||||

* - httpStatic

|

||||

******************************************************************************/

|

||||

|

||||

/** the tcp port that the Node-RED web server is listening on */

|

||||

uiPort: process.env.PORT || 1880,

|

||||

|

||||

/** By default, the Node-RED UI accepts connections on all IPv4 interfaces.

|

||||

* To listen on all IPv6 addresses, set uiHost to "::",

|

||||

* The following property can be used to listen on a specific interface. For

|

||||

* example, the following would only allow connections from the local machine.

|

||||

*/

|

||||

//uiHost: "127.0.0.1",

|

||||

|

||||

/** The maximum size of HTTP request that will be accepted by the runtime api.

|

||||

* Default: 5mb

|

||||

*/

|

||||

//apiMaxLength: '5mb',

|

||||

|

||||

/** The following property can be used to pass custom options to the Express.js

|

||||

* server used by Node-RED. For a full list of available options, refer

|

||||

* to http://expressjs.com/en/api.html#app.settings.table

|

||||

*/

|

||||

//httpServerOptions: { },

|

||||

|

||||

/** By default, the Node-RED UI is available at http://localhost:1880/

|

||||

* The following property can be used to specify a different root path.

|

||||

* If set to false, this is disabled.

|

||||

*/

|

||||

//httpAdminRoot: '/admin',

|

||||

|

||||

/** The following property can be used to add a custom middleware function

|

||||

* in front of all admin http routes. For example, to set custom http

|

||||

* headers. It can be a single function or an array of middleware functions.

|

||||

*/

|

||||

// httpAdminMiddleware: function(req,res,next) {

|

||||

// // Set the X-Frame-Options header to limit where the editor

|

||||

// // can be embedded

|

||||

// //res.set('X-Frame-Options', 'sameorigin');

|

||||

// next();

|

||||

// },

|

||||

|

||||

|

||||

/** Some nodes, such as HTTP In, can be used to listen for incoming http requests.

|

||||

* By default, these are served relative to '/'. The following property

|

||||

* can be used to specifiy a different root path. If set to false, this is

|

||||

* disabled.

|

||||

*/

|

||||

//httpNodeRoot: '/red-nodes',

|

||||

|

||||

/** The following property can be used to configure cross-origin resource sharing

|

||||

* in the HTTP nodes.

|

||||

* See https://github.com/troygoode/node-cors#configuration-options for

|

||||

* details on its contents. The following is a basic permissive set of options:

|

||||

*/

|

||||

//httpNodeCors: {

|

||||

// origin: "*",

|

||||

// methods: "GET,PUT,POST,DELETE"

|

||||

//},

|

||||

|

||||

/** If you need to set an http proxy please set an environment variable

|

||||

* called http_proxy (or HTTP_PROXY) outside of Node-RED in the operating system.

|

||||

* For example - http_proxy=http://myproxy.com:8080

|

||||

* (Setting it here will have no effect)

|

||||

* You may also specify no_proxy (or NO_PROXY) to supply a comma separated

|

||||

* list of domains to not proxy, eg - no_proxy=.acme.co,.acme.co.uk

|

||||

*/

|

||||

|

||||

/** The following property can be used to add a custom middleware function

|

||||

* in front of all http in nodes. This allows custom authentication to be

|

||||

* applied to all http in nodes, or any other sort of common request processing.

|

||||

* It can be a single function or an array of middleware functions.

|

||||

*/

|

||||

//httpNodeMiddleware: function(req,res,next) {

|

||||

// // Handle/reject the request, or pass it on to the http in node by calling next();

|

||||

// // Optionally skip our rawBodyParser by setting this to true;

|

||||

// //req.skipRawBodyParser = true;

|

||||

// next();

|

||||

//},

|

||||

|

||||

/** When httpAdminRoot is used to move the UI to a different root path, the

|

||||

* following property can be used to identify a directory of static content

|

||||

* that should be served at http://localhost:1880/.

|

||||

*/

|

||||

//httpStatic: '/home/nol/node-red-static/',

|

||||

|

||||

/*******************************************************************************

|

||||

* Runtime Settings

|

||||

* - lang

|

||||

* - logging

|

||||

* - contextStorage

|

||||

* - exportGlobalContextKeys

|

||||

* - externalModules

|

||||

******************************************************************************/

|

||||

|

||||

/** Uncomment the following to run node-red in your preferred language.

|

||||

* Available languages include: en-US (default), ja, de, zh-CN, zh-TW, ru, ko

|

||||

* Some languages are more complete than others.

|

||||

*/

|

||||

// lang: "de",

|

||||

|

||||

/** Configure the logging output */

|

||||

logging: {

|

||||

/** Only console logging is currently supported */

|

||||

console: {

|

||||

/** Level of logging to be recorded. Options are:

|

||||

* fatal - only those errors which make the application unusable should be recorded

|

||||

* error - record errors which are deemed fatal for a particular request + fatal errors

|

||||

* warn - record problems which are non fatal + errors + fatal errors

|

||||

* info - record information about the general running of the application + warn + error + fatal errors

|

||||

* debug - record information which is more verbose than info + info + warn + error + fatal errors

|

||||

* trace - record very detailed logging + debug + info + warn + error + fatal errors

|

||||

* off - turn off all logging (doesn't affect metrics or audit)

|

||||

*/

|

||||

level: "info",

|

||||

/** Whether or not to include metric events in the log output */

|

||||

metrics: false,

|

||||

/** Whether or not to include audit events in the log output */

|

||||

audit: false

|

||||

}

|

||||

},

|

||||

|

||||

/** Context Storage

|

||||

* The following property can be used to enable context storage. The configuration

|

||||

* provided here will enable file-based context that flushes to disk every 30 seconds.

|

||||

* Refer to the documentation for further options: https://nodered.org/docs/api/context/

|

||||

*/

|

||||

//contextStorage: {

|

||||

// default: {

|

||||

// module:"localfilesystem"

|

||||

// },

|

||||

//},

|

||||

|

||||

/** `global.keys()` returns a list of all properties set in global context.

|

||||

* This allows them to be displayed in the Context Sidebar within the editor.

|

||||

* In some circumstances it is not desirable to expose them to the editor. The

|

||||

* following property can be used to hide any property set in `functionGlobalContext`

|

||||

* from being list by `global.keys()`.

|

||||

* By default, the property is set to false to avoid accidental exposure of

|

||||

* their values. Setting this to true will cause the keys to be listed.

|

||||

*/

|

||||

exportGlobalContextKeys: false,

|

||||

|

||||

/** Configure how the runtime will handle external npm modules.

|

||||

* This covers:

|

||||

* - whether the editor will allow new node modules to be installed

|

||||

* - whether nodes, such as the Function node are allowed to have their

|

||||

* own dynamically configured dependencies.

|

||||

* The allow/denyList options can be used to limit what modules the runtime

|

||||

* will install/load. It can use '*' as a wildcard that matches anything.

|

||||

*/

|

||||

externalModules: {

|

||||

// autoInstall: false, /** Whether the runtime will attempt to automatically install missing modules */

|

||||

// autoInstallRetry: 30, /** Interval, in seconds, between reinstall attempts */

|

||||

// palette: { /** Configuration for the Palette Manager */

|

||||

// allowInstall: true, /** Enable the Palette Manager in the editor */

|

||||

// allowUpdate: true, /** Allow modules to be updated in the Palette Manager */

|

||||

// allowUpload: true, /** Allow module tgz files to be uploaded and installed */

|

||||

// allowList: ['*'],

|

||||

// denyList: [],

|

||||

// allowUpdateList: ['*'],

|

||||

// denyUpdateList: []

|

||||

// },

|

||||

// modules: { /** Configuration for node-specified modules */

|

||||

// allowInstall: true,

|

||||

// allowList: [],

|

||||

// denyList: []

|

||||

// }

|

||||

},

|

||||

|

||||

|

||||

/*******************************************************************************

|

||||

* Editor Settings

|

||||

* - disableEditor

|

||||

* - editorTheme

|

||||

******************************************************************************/

|

||||

|

||||

/** The following property can be used to disable the editor. The admin API

|

||||

* is not affected by this option. To disable both the editor and the admin

|

||||

* API, use either the httpRoot or httpAdminRoot properties

|

||||

*/

|

||||

//disableEditor: false,

|

||||

|

||||

/** Customising the editor

|

||||

* See https://nodered.org/docs/user-guide/runtime/configuration#editor-themes

|

||||

* for all available options.

|

||||

*/

|

||||

editorTheme: {

|

||||

/** The following property can be used to set a custom theme for the editor.

|

||||

* See https://github.com/node-red-contrib-themes/theme-collection for

|

||||

* a collection of themes to chose from.

|

||||

*/

|

||||

//theme: "",

|

||||

|

||||

/** To disable the 'Welcome to Node-RED' tour that is displayed the first

|

||||

* time you access the editor for each release of Node-RED, set this to false

|

||||

*/

|

||||

//tours: false,

|

||||

|

||||

palette: {

|

||||

/** The following property can be used to order the categories in the editor

|

||||

* palette. If a node's category is not in the list, the category will get

|

||||

* added to the end of the palette.

|

||||

* If not set, the following default order is used:

|

||||

*/

|

||||

//categories: ['subflows', 'common', 'function', 'network', 'sequence', 'parser', 'storage'],

|

||||

},

|

||||

|

||||

projects: {

|

||||

/** To enable the Projects feature, set this value to true */

|

||||

enabled: false,

|

||||

workflow: {

|

||||

/** Set the default projects workflow mode.

|

||||

* - manual - you must manually commit changes

|

||||

* - auto - changes are automatically committed

|

||||

* This can be overridden per-user from the 'Git config'

|

||||

* section of 'User Settings' within the editor

|

||||

*/

|

||||

mode: "manual"

|

||||

}

|

||||

},

|

||||

|

||||

codeEditor: {

|

||||

/** Select the text editor component used by the editor.

|

||||

* Defaults to "ace", but can be set to "ace" or "monaco"

|

||||

*/

|

||||

lib: "ace",

|

||||

options: {

|

||||

/** The follow options only apply if the editor is set to "monaco"

|

||||

*

|

||||

* theme - must match the file name of a theme in

|

||||

* packages/node_modules/@node-red/editor-client/src/vendor/monaco/dist/theme

|

||||

* e.g. "tomorrow-night", "upstream-sunburst", "github", "my-theme"

|

||||

*/

|

||||

theme: "vs",

|

||||

/** other overrides can be set e.g. fontSize, fontFamily, fontLigatures etc.

|

||||

* for the full list, see https://microsoft.github.io/monaco-editor/api/interfaces/monaco.editor.istandaloneeditorconstructionoptions.html

|

||||

*/

|

||||

//fontSize: 14,

|

||||

//fontFamily: "Cascadia Code, Fira Code, Consolas, 'Courier New', monospace",

|

||||

//fontLigatures: true,

|

||||

}

|

||||

}

|

||||

},

|

||||

|

||||

/*******************************************************************************

|

||||

* Node Settings

|

||||

* - fileWorkingDirectory

|

||||

* - functionGlobalContext

|

||||

* - functionExternalModules

|

||||

* - nodeMessageBufferMaxLength

|

||||

* - ui (for use with Node-RED Dashboard)

|

||||

* - debugUseColors

|

||||

* - debugMaxLength

|

||||

* - execMaxBufferSize

|

||||

* - httpRequestTimeout

|

||||

* - mqttReconnectTime

|

||||

* - serialReconnectTime

|

||||

* - socketReconnectTime

|

||||

* - socketTimeout

|

||||

* - tcpMsgQueueSize

|

||||

* - inboundWebSocketTimeout

|

||||

* - tlsConfigDisableLocalFiles

|

||||

* - webSocketNodeVerifyClient

|

||||

******************************************************************************/

|

||||

|

||||

/** The working directory to handle relative file paths from within the File nodes

|

||||

* defaults to the working directory of the Node-RED process.

|

||||

*/

|

||||

//fileWorkingDirectory: "",

|

||||

|

||||

/** Allow the Function node to load additional npm modules directly */

|

||||

functionExternalModules: true,

|

||||

|

||||

/** The following property can be used to set predefined values in Global Context.

|

||||

* This allows extra node modules to be made available with in Function node.

|

||||

* For example, the following:

|

||||

* functionGlobalContext: { os:require('os') }

|

||||

* will allow the `os` module to be accessed in a Function node using:

|

||||

* global.get("os")

|

||||

*/

|

||||

functionGlobalContext: {

|

||||

// os:require('os'),

|

||||

},

|

||||

|

||||

/** The maximum number of messages nodes will buffer internally as part of their

|

||||

* operation. This applies across a range of nodes that operate on message sequences.

|

||||

* defaults to no limit. A value of 0 also means no limit is applied.

|

||||

*/

|

||||

//nodeMessageBufferMaxLength: 0,

|

||||

|

||||

/** If you installed the optional node-red-dashboard you can set it's path

|

||||

* relative to httpNodeRoot

|

||||

* Other optional properties include

|

||||

* readOnly:{boolean},

|

||||

* middleware:{function or array}, (req,res,next) - http middleware

|

||||

* ioMiddleware:{function or array}, (socket,next) - socket.io middleware

|

||||

*/

|

||||

//ui: { path: "ui" },

|

||||

|

||||

/** Colourise the console output of the debug node */

|

||||

//debugUseColors: true,

|

||||

|

||||

/** The maximum length, in characters, of any message sent to the debug sidebar tab */

|

||||

debugMaxLength: 1000,

|

||||

|

||||

/** Maximum buffer size for the exec node. Defaults to 10Mb */

|

||||

//execMaxBufferSize: 10000000,

|

||||

|

||||

/** Timeout in milliseconds for HTTP request connections. Defaults to 120s */

|

||||

//httpRequestTimeout: 120000,

|

||||

|

||||

/** Retry time in milliseconds for MQTT connections */

|

||||

mqttReconnectTime: 15000,

|

||||

|

||||

/** Retry time in milliseconds for Serial port connections */

|

||||

serialReconnectTime: 15000,

|

||||

|

||||

/** Retry time in milliseconds for TCP socket connections */

|

||||

//socketReconnectTime: 10000,

|

||||

|

||||

/** Timeout in milliseconds for TCP server socket connections. Defaults to no timeout */

|

||||

//socketTimeout: 120000,

|

||||

|

||||

/** Maximum number of messages to wait in queue while attempting to connect to TCP socket

|

||||

* defaults to 1000

|

||||

*/

|

||||

//tcpMsgQueueSize: 2000,

|

||||

|

||||

/** Timeout in milliseconds for inbound WebSocket connections that do not

|

||||

* match any configured node. Defaults to 5000

|

||||

*/

|

||||

//inboundWebSocketTimeout: 5000,

|

||||

|

||||

/** To disable the option for using local files for storing keys and

|

||||

* certificates in the TLS configuration node, set this to true.

|

||||

*/

|

||||

//tlsConfigDisableLocalFiles: true,

|

||||

|

||||

/** The following property can be used to verify websocket connection attempts.

|

||||

* This allows, for example, the HTTP request headers to be checked to ensure

|

||||

* they include valid authentication information.

|

||||

*/

|

||||

//webSocketNodeVerifyClient: function(info) {

|

||||

// /** 'info' has three properties:

|

||||

// * - origin : the value in the Origin header

|

||||

// * - req : the HTTP request

|

||||

// * - secure : true if req.connection.authorized or req.connection.encrypted is set

|

||||

// *

|

||||

// * The function should return true if the connection should be accepted, false otherwise.

|

||||

// *

|

||||

// * Alternatively, if this function is defined to accept a second argument, callback,

|

||||

// * it can be used to verify the client asynchronously.

|

||||

// * The callback takes three arguments:

|

||||

// * - result : boolean, whether to accept the connection or not

|

||||

// * - code : if result is false, the HTTP error status to return

|

||||

// * - reason: if result is false, the HTTP reason string to return

|

||||

// */

|

||||

//},

|

||||

}

|

||||

|

|

@ -0,0 +1,368 @@

|

|||

[

|

||||

{

|

||||

"id": "f6f2187d.f17ca8",

|

||||

"type": "tab",

|

||||

"label": "Flow 1",

|

||||

"disabled": false,

|

||||

"info": ""

|

||||

},

|

||||

{

|

||||

"id": "34d396e7c091c5fd",

|

||||

"type": "tab",

|

||||

"label": "six-pipelines",

|

||||

"disabled": false,

|

||||

"info": "",

|

||||

"env": []

|

||||

},

|

||||

{

|

||||

"id": "3cc11d24.ff01a2",

|

||||

"type": "comment",

|

||||

"z": "f6f2187d.f17ca8",

|

||||

"name": "WARNING: please check you have started this container with a volume that is mounted to /data\\n otherwise any flow changes are lost when you redeploy or upgrade the container\\n (e.g. upgrade to a more recent node-red docker image).\\n If you are using named volumes you can ignore this warning.\\n Double click or see info side panel to learn how to start Node-RED in Docker to save your work",

|

||||

"info": "\nTo start docker with a bind mount volume (-v option), for example:\n\n```\ndocker run -it -p 1880:1880 -v /home/user/node_red_data:/data --name mynodered nodered/node-red\n```\n\nwhere `/home/user/node_red_data` is a directory on your host machine where you want to store your flows.\n\nIf you do not do this then you can experiment and redploy flows, but if you restart or upgrade the container the flows will be disconnected and lost. \n\nThey will still exist in a hidden data volume, which can be recovered using standard docker techniques, but that is much more complex than just starting with a named volume as described above.",

|

||||

"x": 350,

|

||||

"y": 80,

|

||||

"wires": []

|

||||

},

|

||||

{

|

||||

"id": "c228c538ddfd97cc",

|

||||

"type": "inject",

|

||||

"z": "f6f2187d.f17ca8",

|

||||

"name": "",

|

||||

"props": [

|

||||

{

|

||||

"p": "payload"

|

||||

},

|

||||

{

|

||||

"p": "topic",

|

||||

"vt": "str"

|

||||

}

|

||||

],

|

||||

"repeat": "",

|

||||

"crontab": "",

|

||||

"once": false,

|

||||

"onceDelay": 0.1,

|

||||

"topic": "",

|

||||

"payload": "",

|

||||

"payloadType": "date",

|

||||

"x": 300,

|

||||

"y": 440,

|

||||

"wires": [

|

||||

[

|

||||

"fae8437b33358ca0"

|

||||

]

|

||||

]

|

||||

},

|

||||

{

|

||||

"id": "fae8437b33358ca0",

|

||||

"type": "function",

|

||||

"z": "f6f2187d.f17ca8",

|

||||

"name": "",

|

||||