Merge remote-tracking branch 'upstream/master' into dev-1.19

commit e2a861c2f9

Makefile (3 changes)

@@ -6,7 +6,8 @@ NETLIFY_FUNC = $(NODE_BIN)/netlify-lambda
 # but this can be overridden when calling make, e.g.
 # CONTAINER_ENGINE=podman make container-image
 CONTAINER_ENGINE ?= docker
-CONTAINER_IMAGE = kubernetes-hugo
+IMAGE_VERSION=$(shell scripts/hash-files.sh Dockerfile Makefile | cut -c 1-12)
+CONTAINER_IMAGE = kubernetes-hugo:v$(HUGO_VERSION)-$(IMAGE_VERSION)
 CONTAINER_RUN = $(CONTAINER_ENGINE) run --rm --interactive --tty --volume $(CURDIR):/src

 CCRED=\033[0;31m
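Note on the Makefile hunk above: the new image tag combines the Hugo version with a 12-character value derived from `Dockerfile` and `Makefile`, so the tag changes whenever either file changes. The sketch below illustrates that idea only. The contents of `scripts/hash-files.sh` are not shown in this diff, so the use of SHA-256 over the concatenated file bytes is an assumption, and `image_version` and `container_image` are hypothetical names.

```python
import hashlib
from pathlib import Path


def image_version(paths, length=12):
    """Hash the given files in order and keep the first `length` hex characters.

    Assumption: SHA-256 over the concatenated bytes; the real
    scripts/hash-files.sh may hash differently.
    """
    digest = hashlib.sha256()
    for path in paths:
        digest.update(Path(path).read_bytes())
    return digest.hexdigest()[:length]


def container_image(hugo_version, paths, name="kubernetes-hugo"):
    """Build a tag shaped like kubernetes-hugo:v<HUGO_VERSION>-<IMAGE_VERSION>."""
    return f"{name}:v{hugo_version}-{image_version(paths)}"
```

Tagging by content hash means an unchanged `Dockerfile` and `Makefile` map to the same tag, which lets a previously built image be reused.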

@@ -192,6 +192,7 @@ aliases:
 - potapy4
 - dianaabv
 sig-docs-ru-reviews: # PR reviews for Russian content
+- Arhell
 - msheldyakov
 - aisonaku
 - potapy4

@@ -511,7 +511,7 @@ section#cncf {
 }

 #desktopKCButton {
-position: relative;
+position: absolute;
 font-size: 18px;
 background-color: $dark-grey;
 border-radius: 8px;

config.toml (16 changes)

@@ -275,6 +275,7 @@ description = "Production-Grade Container Orchestration"
 languageName ="English"
 # Weight used for sorting.
 weight = 1
+languagedirection = "ltr"

 [languages.zh]
 title = "Kubernetes"

@@ -282,6 +283,7 @@ description = "生产级别的容器编排系统"
 languageName = "中文 Chinese"
 weight = 2
 contentDir = "content/zh"
+languagedirection = "ltr"

 [languages.zh.params]
 time_format_blog = "2006.01.02"

@@ -293,6 +295,7 @@ description = "운영 수준의 컨테이너 오케스트레이션"
 languageName = "한국어 Korean"
 weight = 3
 contentDir = "content/ko"
+languagedirection = "ltr"

 [languages.ko.params]
 time_format_blog = "2006.01.02"

@@ -304,6 +307,7 @@ description = "プロダクショングレードのコンテナ管理基盤"
 languageName = "日本語 Japanese"
 weight = 4
 contentDir = "content/ja"
+languagedirection = "ltr"

 [languages.ja.params]
 time_format_blog = "2006.01.02"

@@ -315,6 +319,7 @@ description = "Solution professionnelle d’orchestration de conteneurs"
 languageName ="Français"
 weight = 5
 contentDir = "content/fr"
+languagedirection = "ltr"

 [languages.fr.params]
 time_format_blog = "02.01.2006"

@@ -327,6 +332,7 @@ description = "Orchestrazione di Container in produzione"
 languageName = "Italiano"
 weight = 6
 contentDir = "content/it"
+languagedirection = "ltr"

 [languages.it.params]
 time_format_blog = "02.01.2006"

@@ -339,6 +345,7 @@ description = "Production-Grade Container Orchestration"
 languageName ="Norsk"
 weight = 7
 contentDir = "content/no"
+languagedirection = "ltr"

 [languages.no.params]
 time_format_blog = "02.01.2006"

@@ -351,6 +358,7 @@ description = "Produktionsreife Container-Orchestrierung"
 languageName ="Deutsch"
 weight = 8
 contentDir = "content/de"
+languagedirection = "ltr"

 [languages.de.params]
 time_format_blog = "02.01.2006"

@@ -363,6 +371,7 @@ description = "Orquestación de contenedores para producción"
 languageName ="Español"
 weight = 9
 contentDir = "content/es"
+languagedirection = "ltr"

 [languages.es.params]
 time_format_blog = "02.01.2006"

@@ -375,6 +384,7 @@ description = "Orquestração de contêineres em nível de produção"
 languageName ="Português"
 weight = 9
 contentDir = "content/pt"
+languagedirection = "ltr"

 [languages.pt.params]
 time_format_blog = "02.01.2006"

@@ -387,6 +397,7 @@ description = "Orkestrasi Kontainer dengan Skala Produksi"
 languageName ="Bahasa Indonesia"
 weight = 10
 contentDir = "content/id"
+languagedirection = "ltr"

 [languages.id.params]
 time_format_blog = "02.01.2006"

@@ -399,6 +410,7 @@ description = "Production-Grade Container Orchestration"
 languageName = "Hindi"
 weight = 11
 contentDir = "content/hi"
+languagedirection = "ltr"

 [languages.hi.params]
 time_format_blog = "01.02.2006"

@@ -410,6 +422,7 @@ description = "Giải pháp điều phối container trong môi trường produc
 languageName = "Tiếng Việt"
 contentDir = "content/vi"
 weight = 12
+languagedirection = "ltr"

 [languages.ru]
 title = "Kubernetes"

@@ -417,6 +430,7 @@ description = "Первоклассная оркестрация контейн
 languageName = "Русский"
 weight = 12
 contentDir = "content/ru"
+languagedirection = "ltr"

 [languages.ru.params]
 time_format_blog = "02.01.2006"

@@ -429,6 +443,7 @@ description = "Produkcyjny system zarządzania kontenerami"
 languageName = "Polski"
 weight = 13
 contentDir = "content/pl"
+languagedirection = "ltr"

 [languages.pl.params]
 time_format_blog = "01.02.2006"

@@ -441,6 +456,7 @@ description = "Довершена система оркестрації конт
 languageName = "Українська"
 weight = 14
 contentDir = "content/uk"
+languagedirection = "ltr"

 [languages.uk.params]
 time_format_blog = "02.01.2006"
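Note on the config.toml hunks above: every language block gains the same `languagedirection = "ltr"` key. The diff does not show how the site templates consume it, so the sketch below rests on an assumption: that the value ends up as a per-language text direction (for example, an HTML `dir` attribute) with a left-to-right fallback. `LANGUAGES` and `text_direction` are hypothetical names, and only two of the sixteen language entries are reproduced.

```python
# Two entries copied from the hunks above; the other languages follow the same shape.
LANGUAGES = {
    "en": {"languageName": "English", "weight": 1, "languagedirection": "ltr"},
    "zh": {"languageName": "中文 Chinese", "weight": 2, "languagedirection": "ltr"},
}


def text_direction(lang_code, languages=LANGUAGES, default="ltr"):
    """Return the configured direction for a language, falling back to `default`."""
    direction = languages.get(lang_code, {}).get("languagedirection", default)
    if direction not in ("ltr", "rtl"):
        raise ValueError(f"unsupported text direction: {direction!r}")
    return direction
```

Setting the key explicitly for each language keeps the lookup uniform, so a right-to-left localization added later would only need a different value in its own block.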

@@ -4,7 +4,6 @@ abstract: "Automatisierte Bereitstellung, Skalierung und Verwaltung von Containe
 cid: home
 ---

-{{< deprecationwarning >}}

 {{< blocks/section id="oceanNodes" >}}
 {{% blocks/feature image="flower" %}}

@@ -59,4 +58,4 @@ Kubernetes ist Open Source und bietet Dir die Freiheit, die Infrastruktur vor Or

 {{< blocks/kubernetes-features >}}

-{{< blocks/case-studies >}}
+{{< blocks/case-studies >}}

@@ -41,7 +41,6 @@ Kubernetes is open source giving you the freedom to take advantage of on-premise
 <button id="desktopShowVideoButton" onclick="kub.showVideo()">Watch Video</button>
 <br>
 <br>
-<br>
 <a href="https://events.linuxfoundation.org/kubecon-cloudnativecon-europe/?utm_source=kubernetes.io&utm_medium=nav&utm_campaign=kccnceu20" button id="desktopKCButton">Attend KubeCon EU virtually on August 17-20, 2020</a>
 <br>
 <br>

@@ -19,34 +19,20 @@ The entries in the catalog include not just the ability to [start a Kubernetes c



--
-Apache web server
--
-Nginx web server
--
-Crate - The Distributed Database for Docker
--
-GlassFish - Java EE 7 Application Server
--
-Tomcat - An open-source web server and servlet container
--
-InfluxDB - An open-source, distributed, time series database
--
-Grafana - Metrics dashboard for InfluxDB
--
-Jenkins - An extensible open source continuous integration server
--
-MariaDB database
--
-MySql database
--
-Redis - Key-value cache and store
--
-PostgreSQL database
--
-MongoDB NoSQL database
--
-Zend Server - The Complete PHP Application Platform
+- Apache web server
+- Nginx web server
+- Crate - The Distributed Database for Docker
+- GlassFish - Java EE 7 Application Server
+- Tomcat - An open-source web server and servlet container
+- InfluxDB - An open-source, distributed, time series database
+- Grafana - Metrics dashboard for InfluxDB
+- Jenkins - An extensible open source continuous integration server
+- MariaDB database
+- MySql database
+- Redis - Key-value cache and store
+- PostgreSQL database
+- MongoDB NoSQL database
+- Zend Server - The Complete PHP Application Platform




@@ -12,14 +12,10 @@ In many ways the switch from VMs to containers is like the switch from monolithi

 The benefits of thinking in terms of modular containers are enormous, in particular, modular containers provide the following:

--
-Speed application development, since containers can be re-used between teams and even larger communities
--
-Codify expert knowledge, since everyone collaborates on a single containerized implementation that reflects best-practices rather than a myriad of different home-grown containers with roughly the same functionality
--
-Enable agile teams, since the container boundary is a natural boundary and contract for team responsibilities
--
-Provide separation of concerns and focus on specific functionality that reduces spaghetti dependencies and un-testable components
+- Speed application development, since containers can be re-used between teams and even larger communities
+- Codify expert knowledge, since everyone collaborates on a single containerized implementation that reflects best-practices rather than a myriad of different home-grown containers with roughly the same functionality
+- Enable agile teams, since the container boundary is a natural boundary and contract for team responsibilities
+- Provide separation of concerns and focus on specific functionality that reduces spaghetti dependencies and un-testable components

 Building an application from modular containers means thinking about symbiotic groups of containers that cooperate to provide a service, not one container per service. In Kubernetes, the embodiment of this modular container service is a Pod. A Pod is a group of containers that share resources like file systems, kernel namespaces and an IP address. The Pod is the atomic unit of scheduling in a Kubernetes cluster, precisely because the symbiotic nature of the containers in the Pod require that they be co-scheduled onto the same machine, and the only way to reliably achieve this is by making container groups atomic scheduling units.


@@ -14,121 +14,71 @@ Here are the notes from today's meeting:



--
-Eric Paris: replacing salt with ansible (if we want)
+- Eric Paris: replacing salt with ansible (if we want)

--
-In contrib, there is a provisioning tool written in ansible
--
-The goal in the rewrite was to eliminate as much of the cloud provider stuff as possible
--
-The salt setup does a bunch of setup in scripts and then the environment is setup with salt
+- In contrib, there is a provisioning tool written in ansible
+- The goal in the rewrite was to eliminate as much of the cloud provider stuff as possible
+- The salt setup does a bunch of setup in scripts and then the environment is setup with salt

--
-This means that things like generating certs is done differently on GCE/AWS/Vagrant
--
-For ansible, everything must be done within ansible
--
-Background on ansible
+- This means that things like generating certs is done differently on GCE/AWS/Vagrant
+- For ansible, everything must be done within ansible
+- Background on ansible

--
-Does not have clients
--
-Provisioner ssh into the machine and runs scripts on the machine
--
-You define what you want your cluster to look like, run the script, and it sets up everything at once
--
-If you make one change in a config file, ansible re-runs everything (which isn’t always desirable)
--
-Uses a jinja2 template
--
-Create machines with minimal software, then use ansible to get that machine into a runnable state
+- Does not have clients
+- Provisioner ssh into the machine and runs scripts on the machine
+- You define what you want your cluster to look like, run the script, and it sets up everything at once
+- If you make one change in a config file, ansible re-runs everything (which isn’t always desirable)
+- Uses a jinja2 template
+- Create machines with minimal software, then use ansible to get that machine into a runnable state

--
-Sets up all of the add-ons
--
-Eliminates the provisioner shell scripts
--
-Full cluster setup currently takes about 6 minutes
+- Sets up all of the add-ons
+- Eliminates the provisioner shell scripts
+- Full cluster setup currently takes about 6 minutes

--
-CentOS with some packages
--
-Redeploy to the cluster takes 25 seconds
--
-Questions for Eric
+- CentOS with some packages
+- Redeploy to the cluster takes 25 seconds
+- Questions for Eric

--
-Where does the provider-specific configuration go?
+- Where does the provider-specific configuration go?

--
-The only network setup that the ansible config does is flannel; you can turn it off
--
-What about init vs. systemd?
+- The only network setup that the ansible config does is flannel; you can turn it off
+- What about init vs. systemd?

--
-Should be able to support in the code w/o any trouble (not yet implemented)
--
-Discussion
+- Should be able to support in the code w/o any trouble (not yet implemented)
+- Discussion

--
-Why not push the setup work into containers or kubernetes config?
+- Why not push the setup work into containers or kubernetes config?

--
-To bootstrap a cluster drop a kubelet and a manifest
--
-Running a kubelet and configuring the network should be the only things required. We can cut a machine image that is preconfigured minus the data package (certs, etc)
+- To bootstrap a cluster drop a kubelet and a manifest
+- Running a kubelet and configuring the network should be the only things required. We can cut a machine image that is preconfigured minus the data package (certs, etc)

--
-The ansible scripts install kubelet & docker if they aren’t already installed
--
-Each OS (RedHat, Debian, Ubuntu) could have a different image. We could view this as part of the build process instead of the install process.
--
-There needs to be solution for bare metal as well.
--
-In favor of the overall goal -- reducing the special configuration in the salt configuration
--
-Everything except the kubelet should run inside a container (eventually the kubelet should as well)
+- The ansible scripts install kubelet & docker if they aren’t already installed
+- Each OS (RedHat, Debian, Ubuntu) could have a different image. We could view this as part of the build process instead of the install process.
+- There needs to be solution for bare metal as well.
+- In favor of the overall goal -- reducing the special configuration in the salt configuration
+- Everything except the kubelet should run inside a container (eventually the kubelet should as well)

--
-Running in a container doesn’t cut down on the complexity that we currently have
--
-But it does more clearly define the interface about what the code expects
--
-These tools (Chef, Puppet, Ansible) conflate binary distribution with configuration
+- Running in a container doesn’t cut down on the complexity that we currently have
+- But it does more clearly define the interface about what the code expects
+- These tools (Chef, Puppet, Ansible) conflate binary distribution with configuration

--
-Containers more clearly separate these problems
--
-The mesos deployment is not completely automated yet, but the mesos deployment is completely different: kubelets get put on top on an existing mesos cluster
+- Containers more clearly separate these problems
+- The mesos deployment is not completely automated yet, but the mesos deployment is completely different: kubelets get put on top on an existing mesos cluster

--
-The bash scripts allow the mesos devs to see what each cloud provider is doing and re-use the relevant bits
--
-There was a large reverse engineering curve, but the bash is at least readable as opposed to the salt
--
-Openstack uses a different deployment as well
--
-We need a well documented list of steps (e.g. create certs) that are necessary to stand up a cluster
+- The bash scripts allow the mesos devs to see what each cloud provider is doing and re-use the relevant bits
+- There was a large reverse engineering curve, but the bash is at least readable as opposed to the salt
+- Openstack uses a different deployment as well
+- We need a well documented list of steps (e.g. create certs) that are necessary to stand up a cluster

--
-This would allow us to compare across cloud providers
--
-We should reduce the number of steps as much as possible
--
-Ansible has 241 steps to launch a cluster
--
-1.0 Code freeze
+- This would allow us to compare across cloud providers
+- We should reduce the number of steps as much as possible
+- Ansible has 241 steps to launch a cluster
+- 1.0 Code freeze

--
-How are we getting out of code freeze?
--
-This is a topic for next week, but the preview is that we will move slowly rather than totally opening the firehose
+- How are we getting out of code freeze?
+- This is a topic for next week, but the preview is that we will move slowly rather than totally opening the firehose

--
-We want to clear the backlog as fast as possible while maintaining stability both on HEAD and on the 1.0 branch
--
-The backlog of almost 300 PRs but there are also various parallel feature branches that have been developed during the freeze
--
-Cutting a cherry pick release today (1.0.1) that fixes a few issues
+- We want to clear the backlog as fast as possible while maintaining stability both on HEAD and on the 1.0 branch
+- The backlog of almost 300 PRs but there are also various parallel feature branches that have been developed during the freeze
+- Cutting a cherry pick release today (1.0.1) that fixes a few issues
 - Next week we will discuss the cadence for patch releases

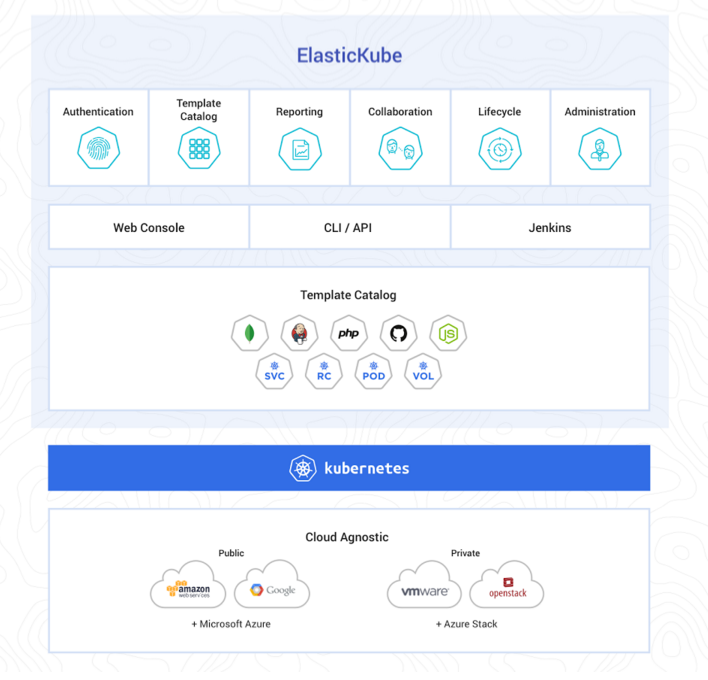

@@ -16,17 +16,10 @@ Fundamentally, ElasticKube delivers a web console for which compliments Kubernet

 ElasticKube enables organizations to accelerate adoption by developers, application operations and traditional IT operations teams and shares a mutual goal of increasing developer productivity, driving efficiency in container management and promoting the use of microservices as a modern application delivery methodology. When leveraging ElasticKube in your environment, users need to ensure the following technologies are configured appropriately to guarantee everything runs correctly:

--
-Configure Google Container Engine (GKE) for cluster installation and management
-
--
-Use Kubernetes to provision the infrastructure and clusters for containers
-
--
-Use your existing tools of choice to actually build your containers
--
-
-Use ElasticKube to run, deploy and manage your containers and services
+- Configure Google Container Engine (GKE) for cluster installation and management
+- Use Kubernetes to provision the infrastructure and clusters for containers
+- Use your existing tools of choice to actually build your containers
+- Use ElasticKube to run, deploy and manage your containers and services

 [](http://cl.ly/0i3M2L3Q030z/Image%202016-03-11%20at%209.49.12%20AM.png)

@@ -39,14 +32,10 @@ Getting Started with Kubernetes and ElasticKube

 (this is a 3min walk through video with the following topics)

-1.
-Deploy ElasticKube to a Kubernetes cluster
-2.
-Configuration
-3.
-Admin: Setup and invite a user
-4.
-Deploy an instance
+1. Deploy ElasticKube to a Kubernetes cluster
+2. Configuration
+3. Admin: Setup and invite a user
+4. Deploy an instance




@@ -13,24 +13,18 @@ Today, we want to take you on a short tour explaining the background of our offe

 In mid 2014 we looked at the challenges enterprises are facing in the context of digitization, where traditional enterprises experience that more and more competitors from the IT sector are pushing into the core of their markets. A big part of Fujitsu’s customers are such traditional businesses, so we considered how we could help them and came up with three basic principles:

--
-Decouple applications from infrastructure - Focus on where the value for the customer is: the application.
--
-Decompose applications - Build applications from smaller, loosely coupled parts. Enable reconfiguration of those parts depending on the needs of the business. Also encourage innovation by low-cost experiments.
--
-Automate everything - Fight the increasing complexity of the first two points by introducing a high degree of automation.
+- Decouple applications from infrastructure - Focus on where the value for the customer is: the application.
+- Decompose applications - Build applications from smaller, loosely coupled parts. Enable reconfiguration of those parts depending on the needs of the business. Also encourage innovation by low-cost experiments.
+- Automate everything - Fight the increasing complexity of the first two points by introducing a high degree of automation.

 We found that Linux containers themselves cover the first point and touch the second. But at this time there was little support for creating distributed applications and running them managed automatically. We found Kubernetes as the missing piece.
 **Not a free lunch**

 The general approach of Kubernetes in managing containerized workload is convincing, but as we looked at it with the eyes of customers, we realized that it’s not a free lunch. Many customers are medium-sized companies whose core business is often bound to strict data protection regulations. The top three requirements we identified are:

--
-On-premise deployments (with the option for hybrid scenarios)
--
-Efficient operations as part of a (much) bigger IT infrastructure
--
-Enterprise-grade support, potentially on global scale
+- On-premise deployments (with the option for hybrid scenarios)
+- Efficient operations as part of a (much) bigger IT infrastructure
+- Enterprise-grade support, potentially on global scale

 We created Cloud Load Control with these requirements in mind. It is basically a distribution of Kubernetes targeted for on-premise use, primarily focusing on operational aspects of container infrastructure. We are committed to work with the community, and contribute all relevant changes and extensions upstream to the Kubernetes project.
 **On-premise deployments**

@@ -39,12 +33,9 @@ As Kubernetes core developer Tim Hockin often puts it in his[talks](https://spea

 Cloud Load Control addresses these issues. It enables customers to reliably and readily provision a production grade Kubernetes clusters on their own infrastructure, with the following benefits:

--
-Proven setup process, lowers risk of problems while setting up the cluster
--
-Reduction of provisioning time to minutes
--
-Repeatable process, relevant especially for large, multi-tenant environments
+- Proven setup process, lowers risk of problems while setting up the cluster
+- Reduction of provisioning time to minutes
+- Repeatable process, relevant especially for large, multi-tenant environments

 Cloud Load Control delivers these benefits for a range of platforms, starting from selected OpenStack distributions in the first versions of Cloud Load Control, and successively adding more platforms depending on customer demand. We are especially excited about the option to remove the virtualization layer and support Kubernetes bare-metal on Fujitsu servers in the long run. By removing a layer of complexity, the total cost to run the system would be decreased and the missing hypervisor would increase performance.

@@ -53,10 +44,8 @@ Right now we are in the process of contributing a generic provider to set up Kub

 Reducing operation costs is the target of any organization providing IT infrastructure. This can be achieved by increasing the efficiency of operations and helping operators to get their job done. Considering large-scale container infrastructures, we found it is important to differentiate between two types of operations:

--
-Platform-oriented, relates to the overall infrastructure, often including various systems, one of which might be Kubernetes.
--
-Application-oriented, focusses rather on a single, or a small set of applications deployed on Kubernetes.
+- Platform-oriented, relates to the overall infrastructure, often including various systems, one of which might be Kubernetes.
+- Application-oriented, focusses rather on a single, or a small set of applications deployed on Kubernetes.

 Kubernetes is already great for the application-oriented part. Cloud Load Control was created to help platform-oriented operators to efficiently manage Kubernetes as part of the overall infrastructure and make it easy to execute Kubernetes tasks relevant to them.


@@ -11,15 +11,12 @@ Hello, and welcome to the second installment of the Kubernetes state of the cont
 In January, 71% of respondents were currently using containers, in February, 89% of respondents were currently using containers. The percentage of users not even considering containers also shrank from 4% in January to a surprising 0% in February. Will see if that holds consistent in March.Likewise, the usage of containers continued to march across the dev/canary/prod lifecycle. In all parts of the lifecycle, container usage increased:


--
-Development: 80% -\> 88%
--
-Test: 67% -\> 72%
--
-Pre production: 41% -\> 55%
--
-Production: 50% -\> 62%
-What is striking in this is that pre-production growth continued, even as workloads were clearly transitioned into true production. Likewise the share of people considering containers for production rose from 78% in January to 82% in February. Again we’ll see if the trend continues into March.
+- Development: 80% -\> 88%
+- Test: 67% -\> 72%
+- Pre production: 41% -\> 55%
+- Production: 50% -\> 62%
+
+What is striking in this is that pre-production growth continued, even as workloads were clearly transitioned into true production. Likewise the share of people considering containers for production rose from 78% in January to 82% in February. Again we’ll see if the trend continues into March.

 ## Container and cluster sizes


@@ -215,14 +215,10 @@ CRI is being actively developed and maintained by the Kubernetes [SIG-Node](http



--
-Post issues or feature requests on [GitHub](https://github.com/kubernetes/kubernetes)
--
-Join the #sig-node channel on [Slack](https://kubernetes.slack.com/)
--
-Subscribe to the [SIG-Node mailing list](mailto:kubernetes-sig-node@googlegroups.com)
--
-Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates
+- Post issues or feature requests on [GitHub](https://github.com/kubernetes/kubernetes)
+- Join the #sig-node channel on [Slack](https://kubernetes.slack.com/)
+- Subscribe to the [SIG-Node mailing list](mailto:kubernetes-sig-node@googlegroups.com)
+- Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates




@@ -21,13 +21,8 @@ This progress is our commitment in continuing to make Kubernetes best way to man
 Connect


--
-[Download](http://get.k8s.io/) Kubernetes
--
-Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)
--
-Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)
--
-Connect with the community on [Slack](http://slack.k8s.io/)
--
-Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates
+- [Download](http://get.k8s.io/) Kubernetes
+- Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)
+- Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)
+- Connect with the community on [Slack](http://slack.k8s.io/)
+- Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

@ -36,12 +36,11 @@ Most of the Kubernetes constructs, such as Pods, Services, Labels, etc. work wit

|

|||

|

|

||||

What doesn’t work yet?

|

||||

|

|

||||

-

|

||||

Pod abstraction is not same due to networking namespaces. Net result is that Windows containers in a single POD cannot communicate over localhost. Linux containers can share networking stack by placing them in the same network namespace.

|

||||

-

|

||||

DNS capabilities are not fully implemented

|

||||

-

|

||||

UDP is not supported inside a container

|

||||

|

||||

- The Pod abstraction is not the same because of networking namespaces. The net result is that Windows containers in a single Pod cannot communicate over localhost. Linux containers can share a networking stack by being placed in the same network namespace.

|

||||

- DNS capabilities are not fully implemented

|

||||

- UDP is not supported inside a container

|

||||

|

||||

|

|

||||

|

|

||||

When will it be ready for all production workloads (general availability)?

|

||||

|

|

|

|||

|

|

@ -78,11 +78,7 @@ _--Jean-Mathieu Saponaro, Research & Analytics Engineer, Datadog_

|

|||

|

||||

|

||||

|

||||

-

|

||||

Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

-

|

||||

Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

-

|

||||

Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

-

|

||||

Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

- Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

- Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

- Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

- Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

|

|

|

|||

|

|

@ -113,11 +113,7 @@ _-- Rob Hirschfeld, co-founder of RackN and co-chair of the Cluster Ops SIG_

|

|||

|

||||

|

||||

|

||||

-

|

||||

Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

-

|

||||

Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

-

|

||||

Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

-

|

||||

Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

- Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

- Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

- Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

- Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

|

|

|

|||

|

|

@ -26,87 +26,69 @@ Kubernetes has also earned the trust of many [Fortune 500 companies](https://kub

|

|||

|

||||

July 2016

|

||||

|

||||

-

|

||||

Kubernauts celebrated its [first anniversary](https://kubernetes.io/blog/2016/07/happy-k8sbday-1) of the Kubernetes 1.0 launch with 20 [#k8sbday](https://twitter.com/search?q=k8sbday&src=typd) parties hosted worldwide

|

||||

-

|

||||

Kubernetes [v1.3 release](https://kubernetes.io/blog/2016/07/kubernetes-1-3-bridging-cloud-native-and-enterprise-workloads/)

|

||||

- Kubernauts celebrated its [first anniversary](https://kubernetes.io/blog/2016/07/happy-k8sbday-1) of the Kubernetes 1.0 launch with 20 [#k8sbday](https://twitter.com/search?q=k8sbday&src=typd) parties hosted worldwide

|

||||

- Kubernetes [v1.3 release](https://kubernetes.io/blog/2016/07/kubernetes-1-3-bridging-cloud-native-and-enterprise-workloads/)

|

||||

|

||||

|

||||

|

||||

September 2016

|

||||

|

||||

-

|

||||

Kubernetes [v1.4 release](https://kubernetes.io/blog/2016/09/kubernetes-1-4-making-it-easy-to-run-on-kuberentes-anywhere/)

|

||||

-

|

||||

Launch of [kubeadm](https://kubernetes.io/blog/2016/09/how-we-made-kubernetes-easy-to-install), a tool that makes Kubernetes dramatically easier to install

|

||||

-

|

||||

[Pokemon Go](https://www.sdxcentral.com/articles/news/google-dealt-pokemon-go-traffic-50-times-beyond-expectations/2016/09/) - one of the largest installs of Kubernetes ever

|

||||

- Kubernetes [v1.4 release](https://kubernetes.io/blog/2016/09/kubernetes-1-4-making-it-easy-to-run-on-kuberentes-anywhere/)

|

||||

- Launch of [kubeadm](https://kubernetes.io/blog/2016/09/how-we-made-kubernetes-easy-to-install), a tool that makes Kubernetes dramatically easier to install

|

||||

- [Pokemon Go](https://www.sdxcentral.com/articles/news/google-dealt-pokemon-go-traffic-50-times-beyond-expectations/2016/09/) - one of the largest installs of Kubernetes ever

|

||||

|

||||

|

||||

|

||||

October 2016

|

||||

|

||||

-

|

||||

Introduced [Kubernetes service partners program](https://kubernetes.io/blog/2016/10/kubernetes-service-technology-partners-program) and a redesigned [partners page](https://kubernetes.io/partners/)

|

||||

- Introduced [Kubernetes service partners program](https://kubernetes.io/blog/2016/10/kubernetes-service-technology-partners-program) and a redesigned [partners page](https://kubernetes.io/partners/)

|

||||

|

||||

|

||||

|

||||

November 2016

|

||||

|

||||

-

|

||||

CloudNativeCon/KubeCon [Seattle](https://www.cncf.io/blog/2016/11/17/cloudnativeconkubecon-2016-wrap/)

|

||||

-

|

||||

Cloud Native Computing Foundation partners with The Linux Foundation to launch a [new Kubernetes certification, training and managed service provider program](https://www.cncf.io/blog/2016/11/08/cncf-partners-linux-foundation-launch-new-kubernetes-certification-training-managed-service-provider-program/)

|

||||

- CloudNativeCon/KubeCon [Seattle](https://www.cncf.io/blog/2016/11/17/cloudnativeconkubecon-2016-wrap/)

|

||||

- Cloud Native Computing Foundation partners with The Linux Foundation to launch a [new Kubernetes certification, training and managed service provider program](https://www.cncf.io/blog/2016/11/08/cncf-partners-linux-foundation-launch-new-kubernetes-certification-training-managed-service-provider-program/)

|

||||

|

||||

|

||||

|

||||

December 2016

|

||||

|

||||

-

|

||||

Kubernetes [v1.5 release](https://kubernetes.io/blog/2016/12/kubernetes-1-5-supporting-production-workloads/)

|

||||

- Kubernetes [v1.5 release](https://kubernetes.io/blog/2016/12/kubernetes-1-5-supporting-production-workloads/)

|

||||

|

||||

|

||||

|

||||

January 2017

|

||||

|

||||

-

|

||||

[Survey](https://www.cncf.io/blog/2017/01/17/container-management-trends-kubernetes-moves-testing-production/) from CloudNativeCon + KubeCon Seattle showcases the maturation of Kubernetes deployment

|

||||

- [Survey](https://www.cncf.io/blog/2017/01/17/container-management-trends-kubernetes-moves-testing-production/) from CloudNativeCon + KubeCon Seattle showcases the maturation of Kubernetes deployment

|

||||

|

||||

|

||||

|

||||

March 2017

|

||||

|

||||

-

|

||||

CloudNativeCon/KubeCon [Europe](https://www.cncf.io/blog/2017/04/17/highlights-cloudnativecon-kubecon-europe-2017/)

|

||||

-

|

||||

Kubernetes [v1.6 release](https://kubernetes.io/blog/2017/03/kubernetes-1-6-multi-user-multi-workloads-at-scale)

|

||||

- CloudNativeCon/KubeCon [Europe](https://www.cncf.io/blog/2017/04/17/highlights-cloudnativecon-kubecon-europe-2017/)

|

||||

- Kubernetes [v1.6 release](https://kubernetes.io/blog/2017/03/kubernetes-1-6-multi-user-multi-workloads-at-scale)

|

||||

|

||||

|

||||

|

||||

April 2017

|

||||

|

||||

-

|

||||

The [Battery Open Source Software (BOSS) Index](https://www.battery.com/powered/boss-index-tracking-explosive-growth-open-source-software/) lists Kubernetes as #33 in the top 100 popular open-source software projects

|

||||

- The [Battery Open Source Software (BOSS) Index](https://www.battery.com/powered/boss-index-tracking-explosive-growth-open-source-software/) lists Kubernetes as #33 in the top 100 popular open-source software projects

|

||||

|

||||

|

||||

|

||||

May 2017

|

||||

|

||||

-

|

||||

[Four Kubernetes projects](https://www.cncf.io/blog/2017/05/04/cncf-brings-kubernetes-coredns-opentracing-prometheus-google-summer-code-2017/) accepted to The [Google Summer of Code](https://developers.google.com/open-source/gsoc/) (GSOC) 2017 program

|

||||

-

|

||||

Shutterstock and Kubernetes appear in [The Wall Street Journal](https://blogs.wsj.com/cio/2017/05/26/shutterstock-ceo-says-new-business-plan-hinged-upon-total-overhaul-of-it/): “On average we [Shutterstock] deploy 45 different releases into production a day using that framework. We use Docker, Kubernetes and Jenkins [to build and run containers and automate development],” said CTO Marty Brodbeck on the company’s IT overhaul and adoption of containerization.

|

||||

- [Four Kubernetes projects](https://www.cncf.io/blog/2017/05/04/cncf-brings-kubernetes-coredns-opentracing-prometheus-google-summer-code-2017/) accepted to The [Google Summer of Code](https://developers.google.com/open-source/gsoc/) (GSOC) 2017 program

|

||||

- Shutterstock and Kubernetes appear in [The Wall Street Journal](https://blogs.wsj.com/cio/2017/05/26/shutterstock-ceo-says-new-business-plan-hinged-upon-total-overhaul-of-it/): “On average we [Shutterstock] deploy 45 different releases into production a day using that framework. We use Docker, Kubernetes and Jenkins [to build and run containers and automate development],” said CTO Marty Brodbeck on the company’s IT overhaul and adoption of containerization.

|

||||

|

||||

|

||||

|

||||

June 2017

|

||||

|

||||

-

|

||||

Kubernetes [v1.7 release](https://kubernetes.io/blog/2017/06/kubernetes-1-7-security-hardening-stateful-application-extensibility-updates)

|

||||

-

|

||||

[Survey](https://www.cncf.io/blog/2017/06/28/survey-shows-kubernetes-leading-orchestration-platform/) from CloudNativeCon + KubeCon Europe shows Kubernetes leading as the orchestration platform of choice

|

||||

-

|

||||

Kubernetes ranked [#4](https://github.com/cncf/velocity) in the [30 highest velocity open source projects](https://www.cncf.io/blog/2017/06/05/30-highest-velocity-open-source-projects/)

|

||||

- Kubernetes [v1.7 release](https://kubernetes.io/blog/2017/06/kubernetes-1-7-security-hardening-stateful-application-extensibility-updates)

|

||||

- [Survey](https://www.cncf.io/blog/2017/06/28/survey-shows-kubernetes-leading-orchestration-platform/) from CloudNativeCon + KubeCon Europe shows Kubernetes leading as the orchestration platform of choice

|

||||

- Kubernetes ranked [#4](https://github.com/cncf/velocity) in the [30 highest velocity open source projects](https://www.cncf.io/blog/2017/06/05/30-highest-velocity-open-source-projects/)

|

||||

|

||||

|

||||

|

||||

|

|

@ -116,8 +98,7 @@ Figure 2: The 30 highest velocity open source projects. Source: [https://github.

|

|||

|

||||

July 2017

|

||||

|

||||

-

|

||||

Kubernauts celebrate the second anniversary of the Kubernetes 1.0 launch with [#k8sbday](https://twitter.com/search?q=k8sbday&src=typd) parties worldwide!

|

||||

- Kubernauts celebrate the second anniversary of the Kubernetes 1.0 launch with [#k8sbday](https://twitter.com/search?q=k8sbday&src=typd) parties worldwide!

|

||||

|

||||

|

||||

|

||||

|

|

|

|||

|

|

@ -92,14 +92,10 @@ Usage of UCD in the Process Flow:

|

|||

|

||||

UCD is used for deployment and the end-to-end deployment process is automated here. The UCD component process involves the following steps:

|

||||

|

||||

-

|

||||

Download the required artifacts for deployment from GitLab.

|

||||

-

|

||||

Log in to Bluemix and set the KUBECONFIG based on the Kubernetes cluster used for creating the pods.

|

||||

-

|

||||

Create the application pod in the cluster using the `kubectl create` command.

|

||||

-

|

||||

If needed, run a rolling update to update the existing pod.

|

||||

- Download the required artifacts for deployment from GitLab.

|

||||

- Log in to Bluemix and set the KUBECONFIG based on the Kubernetes cluster used for creating the pods.

|

||||

- Create the application pod in the cluster using the `kubectl create` command.

|

||||

- If needed, run a rolling update to update the existing pod.

|

||||

|

||||

|

||||

|

||||

|

|

@ -150,13 +146,8 @@ To expose our services to outside the cluster, we used Ingress. In IBM Cloud Kub

|

|||

|

||||

|

||||

|

||||

-

|

||||

Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

-

|

||||

Join the community portal for advocates on [K8sPort](http://k8sport.org/)

|

||||

-

|

||||

Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

-

|

||||

Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

-

|

||||

Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

- Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

- Join the community portal for advocates on [K8sPort](http://k8sport.org/)

|

||||

- Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

- Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

- Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

|

|

|

|||

|

|

@ -129,14 +129,10 @@ With our graduation, comes the release of Kompose 1.0.0, here’s what’s new:

|

|||

|

||||

|

||||

|

||||

-

|

||||

Docker Compose Version 3: Kompose now supports Docker Compose Version 3. New keys such as ‘deploy’ now convert to their Kubernetes equivalent.

|

||||

-

|

||||

Docker Push and Build Support: When you supply a ‘build’ key within your `docker-compose.yaml` file, Kompose will automatically build and push the image to the respective Docker repository for Kubernetes to consume.

|

||||

-

|

||||

New Keys: With the addition of version 3 support, new keys such as pid and deploy are supported. For full details on what Kompose supports, view our [conversion document](http://kompose.io/conversion/).

|

||||

-

|

||||

Bug Fixes: In every release we fix any bugs related to edge-cases when converting. This release fixes issues relating to converting volumes with ‘./’ in the target name.

|

||||

- Docker Compose Version 3: Kompose now supports Docker Compose Version 3. New keys such as ‘deploy’ now convert to their Kubernetes equivalent.

|

||||

- Docker Push and Build Support: When you supply a ‘build’ key within your `docker-compose.yaml` file, Kompose will automatically build and push the image to the respective Docker repository for Kubernetes to consume.

|

||||

- New Keys: With the addition of version 3 support, new keys such as pid and deploy are supported. For full details on what Kompose supports, view our [conversion document](http://kompose.io/conversion/).

|

||||

- Bug Fixes: In every release we fix any bugs related to edge-cases when converting. This release fixes issues relating to converting volumes with ‘./’ in the target name.

|

||||

|

||||

|

||||

|

||||

|

|

@ -145,28 +141,18 @@ What’s ahead?

|

|||

As we continue development, we will strive to convert as many Docker Compose keys as possible for all future and current Docker Compose releases, converting each one to their Kubernetes equivalent. All future releases will be backwards-compatible.

|

||||

|

||||

|

||||

-

|

||||

[Install Kompose](https://github.com/kubernetes/kompose/blob/master/docs/installation.md)

|

||||

-

|

||||

[Kompose Quick Start Guide](https://github.com/kubernetes/kompose/blob/master/docs/installation.md)

|

||||

-

|

||||

[Kompose Web Site](http://kompose.io/)

|

||||

-

|

||||

[Kompose Documentation](https://github.com/kubernetes/kompose/tree/master/docs)

|

||||

- [Install Kompose](https://github.com/kubernetes/kompose/blob/master/docs/installation.md)

|

||||

- [Kompose Quick Start Guide](https://github.com/kubernetes/kompose/blob/master/docs/installation.md)

|

||||

- [Kompose Web Site](http://kompose.io/)

|

||||

- [Kompose Documentation](https://github.com/kubernetes/kompose/tree/master/docs)

|

||||

|

||||

|

||||

|

||||

--Charlie Drage, Software Engineer, Red Hat

|

||||

|

||||

|

||||

-

|

||||

Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

-

|

||||

Join the community portal for advocates on [K8sPort](http://k8sport.org/)

|

||||

-

|

||||

Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

-

|

||||

Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

-

|

||||

Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

-

|

||||

- Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

- Join the community portal for advocates on [K8sPort](http://k8sport.org/)

|

||||

- Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

- Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

- Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

|

|

|

|||

|

|

@ -987,13 +987,8 @@ Rolling updates and roll backs close an important feature gap for DaemonSets and

|

|||

|

||||

|

||||

|

||||

-

|

||||

Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

-

|

||||

Join the community portal for advocates on [K8sPort](http://k8sport.org/)

|

||||

-

|

||||

Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

-

|

||||

Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

-

|

||||

Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

- Post questions (or answer questions) on [Stack Overflow](http://stackoverflow.com/questions/tagged/kubernetes)

|

||||

- Join the community portal for advocates on [K8sPort](http://k8sport.org/)

|

||||

- Follow us on Twitter [@Kubernetesio](https://twitter.com/kubernetesio) for latest updates

|

||||

- Connect with the community on [Slack](http://slack.k8s.io/)

|

||||

- Get involved with the Kubernetes project on [GitHub](https://github.com/kubernetes/kubernetes)

|

||||

|

|

|

|||

|

|

@ -0,0 +1,219 @@

|

|||

---

|

||||

layout: blog

|

||||

title: "Music and math: the Kubernetes 1.17 release interview"

|

||||

date: 2020-07-27

|

||||

---

|

||||

|

||||

**Author**: Adam Glick (Google)

|

||||

|

||||

Every time the Kubernetes release train stops at the station, we like to ask the release lead to take a moment to reflect on their experience. That takes the form of an interview on the weekly [Kubernetes Podcast from Google](https://kubernetespodcast.com/) that I co-host with [Craig Box](https://twitter.com/craigbox). If you're not familiar with the show, every week we summarise the news in the Cloud Native ecosystem, and have an insightful discussion with an interesting guest from the broader Kubernetes community.

|

||||

|

||||

At the time of the 1.17 release in December, we [talked to release team lead Guinevere Saenger](https://kubernetespodcast.com/episode/083-kubernetes-1.17/). We have [shared](https://kubernetes.io/blog/2018/07/16/how-the-sausage-is-made-the-kubernetes-1.11-release-interview-from-the-kubernetes-podcast/) [the](https://kubernetes.io/blog/2019/05/13/cat-shirts-and-groundhog-day-the-kubernetes-1.14-release-interview/) [transcripts](https://kubernetes.io/blog/2019/12/06/when-youre-in-the-release-team-youre-family-the-kubernetes-1.16-release-interview/) of previous interviews on the Kubernetes blog, and we're very happy to share another today.

|

||||

|

||||

Next week we will bring you up to date with the story of Kubernetes 1.18, as we gear up for the release of 1.19 next month. [Subscribe to the show](https://kubernetespodcast.com/subscribe/) wherever you get your podcasts to make sure you don't miss that chat!

|

||||

|

||||

---

|

||||

|

||||

**ADAM GLICK: You have a nontraditional background for someone who works as a software engineer. Can you explain that background?**

|

||||

|

||||

GUINEVERE SAENGER: My first career was as a [collaborative pianist](https://en.wikipedia.org/wiki/Collaborative_piano), which is an academic way of saying "piano accompanist". I was a classically trained pianist who spends most of her time onstage, accompanying other people and making them sound great.

|

||||

|

||||

**ADAM GLICK: Is that the piano equivalent of pair-programming?**

|

||||

|

||||

GUINEVERE SAENGER: No one has said it to me like that before, but all sorts of things are starting to make sense in my head right now. I think that's a really great way of putting it.

|

||||

|

||||

**ADAM GLICK: That's a really interesting background, as someone who also has a background with music. What made you decide to get into software development?**

|

||||

|

||||

GUINEVERE SAENGER: I found myself in a life situation where I needed a more stable source of income, and teaching music, and performing for various gig opportunities, was really just not cutting it anymore. And I found myself to be working really, really hard with not much to show for it. I had a lot of friends who were software engineers. I live in Seattle. That's sort of a thing that happens to you when you live in Seattle — you get to know a bunch of software engineers, one way or the other.

|

||||

|

||||

The ones I met were all lovely people, and they said, hey, I'm happy to show you how to program in Python. And so I did that for a bit, and then I heard about this program called [Ada Developers Academy](https://adadevelopersacademy.org/). That's a year long coding school, targeted at women and non-binary folks that are looking for a second career in tech. And so I applied for that.

|

||||

|

||||

**CRAIG BOX: What can you tell us about that program?**

|

||||

|

||||

GUINEVERE SAENGER: It's incredibly selective, for starters. It's really popular in Seattle and has gotten quite a good reputation. It took me three tries to get in. They do two classes a year, and so it was a while before I got my response saying 'congratulations, we are happy to welcome you into Cohort 6'. I think what sets Ada Developers Academy apart from other bootcamp style coding programs are three things, I think? The main important one is that if you get in, you pay no tuition. The entire program is funded by company sponsors.

|

||||

|

||||

**CRAIG BOX: Right.**

|

||||

|

||||

GUINEVERE SAENGER: The other thing that really convinced me is that five months of the 11-month program are an industry internship, which means you get both practical experience, mentorship, and potential job leads at the end of it.

|

||||

|

||||

**CRAIG BOX: So very much like a condensed version of the University of Waterloo degree, where you do co-op terms.**

|

||||

|

||||

GUINEVERE SAENGER: Interesting. I didn't know about that.

|

||||

|

||||

**CRAIG BOX: Having lived in Waterloo for a while, I knew a lot of people who did that. But what would you say the advantages were of going through such a condensed schooling process in computer science?**

|

||||

|

||||

GUINEVERE SAENGER: I'm not sure that the condensed process is necessarily an advantage. I think it's a necessity, though. People have to quit their jobs to go do this program. It's not an evening school type of thing.

|

||||

|

||||

**CRAIG BOX: Right.**

|

||||

|

||||

GUINEVERE SAENGER: And your internship is basically a full-time job when you do it. One thing that Ada was really, really good at is giving us practical experience that directly relates to the workplace. We learned how to use Git. We learned how to design websites using [Rails](https://rubyonrails.org/). And we also learned how to collaborate, how to pair-program. We had a weekly retrospective, so we sort of got a soft introduction to workflows at a real workplace. Adding to that, the internship, and I think the overall experience is a little bit more 'practical workplace oriented' and a little bit less academic.

|

||||

|

||||

When you're done with it, you don't have to relearn how to be an adult in a working relationship with other people. You come with a set of previous skills. There are Ada graduates who have previously been campaign lawyers, and veterinarians, and nannies, cooks, all sorts of people. And it turns out these skills tend to translate, and they tend to matter.

|

||||

|

||||

**ADAM GLICK: With your background in music, what do you think that that allows you to bring to software development that could be missing from, say, standard software development training that people go through?**

|

||||

|

||||

GUINEVERE SAENGER: People tend to really connect the dots when I tell them I used to be a musician. Of course, I still consider myself a musician, because you don't really ever stop being a musician. But they say, 'oh, yeah, music and math', and that's just a similar sort of brain. And that makes so much sense. And I think there's a little bit of a point to that. When you learn a piece of music, you have to start recognizing patterns incredibly quickly, almost intuitively.

|

||||

|

||||

And I think that is the main skill that translates into programming— recognizing patterns, finding the things that work, finding the things that don't work. And for me, especially as a collaborative pianist, it's the communicating with people, the finding out what people really want, where something is going, how to figure out what the general direction is that we want to take, before we start writing the first line of code.

|

||||

|

||||

**CRAIG BOX: In your experience at Ada or with other experiences you've had, have you been able to identify patterns in other backgrounds for people that you'd recommend, 'hey, you're good at music, so therefore you might want to consider doing something like a course in computer science'?**

|

||||

|

||||

GUINEVERE SAENGER: Overall, I think ultimately writing code is just giving a set of instructions to a computer. And we do that in daily life all the time. We give instructions to our kids, we give instructions to our students. We do math, we write textbooks. We give instructions to a room full of people when you're in court as a lawyer.

|

||||

|

||||

Actually, the entrance exam to Ada Developers Academy used to have questions from the [LSAT](https://en.wikipedia.org/wiki/Law_School_Admission_Test) on it to see if you were qualified to join the program. They changed that when I applied, but I think that's a thing that happened at one point. So, overall, I think software engineering is a much more varied field than we give it credit for, and that there are so many ways in which you can apply your so-called other skills and bring them under the umbrella of software engineering.

|

||||

|

||||

**CRAIG BOX: I do think that programming is effectively half art and half science. There's creativity to be applied. There is perhaps one way to solve a problem most efficiently. But there are many different ways that you can choose to express how you compiled something down to that way.**

|

||||

|

||||

GUINEVERE SAENGER: Yeah, I mean, that's definitely true. I think one way that you could probably prove that is that if you write code at work and you're working on something with other people, you can probably tell which one of your co-workers wrote which package, just by the way it's written, or how it is documented, or how it is styled, or any of those things. I really do think that the human character shines through.

|

||||

|

||||

**ADAM GLICK: What got you interested in Kubernetes and open source?**

|

||||

|

||||

GUINEVERE SAENGER: The honest answer is absolutely nothing. Going back to my programming school— and remember that I had to do a five-month internship as part of my training— the way that the internship works is that sponsor companies for the program get interns in according to how much they sponsored a specific cohort of students.

|

||||

|

||||

So at the time, Samsung and SDS offered to host two interns for five months on their [Cloud Native Computing team](https://samsung-cnct.github.io/) and have that be their practical experience. So I go out of a Ruby on Rails full stack web development bootcamp and show up at my internship, and they said, "Welcome to Kubernetes. Try to bring up a cluster." And I said, "Kuber what?"

|

||||

|

||||

**CRAIG BOX: We've all said that on occasion.**

|

||||

|

||||

**ADAM GLICK: Trial by fire, wow.**

|

||||

|

||||

GUINEVERE SAENGER: I will say that that entire team was absolutely wonderful, delightful to work with, incredibly helpful. And I will forever be grateful for all of the help and support that I got in that environment. It was a great place to learn.

|

||||

|

||||

**CRAIG BOX: You now work on GitHub's Kubernetes infrastructure. Obviously, there was GitHub before there was a Kubernetes, so a migration happened. What can you tell us about the transition that GitHub made to running on Kubernetes?**

|

||||

|

||||

GUINEVERE SAENGER: A disclaimer here— I was not at GitHub at the time that the transition to Kubernetes was made. However, to the best of my knowledge, the decision to transition to Kubernetes was made and people decided, yes, we want to try Kubernetes. We want to use Kubernetes. And mostly, the only decision left was, which one of our applications should we move over to Kubernetes?

|

||||

|

||||

**CRAIG BOX: I thought GitHub was written on Rails, so there was only one application.**

|

||||

|

||||

GUINEVERE SAENGER: [LAUGHING] We have a lot of supplementary stuff under the covers.

|

||||

|

||||

**CRAIG BOX: I'm sure.**

|

||||

|

||||

GUINEVERE SAENGER: But yes, GitHub is written in Rails. It is still written in Rails. And most of the supplementary things are currently running on Kubernetes. We have a fair bit of stuff that currently does not run on Kubernetes. Mainly, that is GitHub Enterprise related things. I would know less about that because I am on the platform team that helps people use the Kubernetes infrastructure. But back to your question, leadership at the time decided that it would be a good idea to start with GitHub the Rails website as the first project to move to Kubernetes.

|

||||

|

||||

**ADAM GLICK: High stakes!**

|

||||

|

||||

GUINEVERE SAENGER: The reason for this was that they decided if they were going to not start big, it really wasn't going to transition ever. It was really not going to happen. So they just decided to go all out, and it was successful, for which I think the lesson would probably be commit early, commit big.

|

||||

|

||||

**CRAIG BOX: Are there any other lessons that you would take away or that you've learned kind of from the transition that the company made, and might be applicable to other people who are looking at moving their companies from a traditional infrastructure to a Kubernetes infrastructure?**

|

||||

|

||||

GUINEVERE SAENGER: I'm not sure this is a lesson specifically, but I was on support recently, and it turned out that, due to unforeseen circumstances and a mix of human error, a bunch of the namespaces on one of our Kubernetes clusters got deleted.

|

||||

|

||||

**ADAM GLICK: Oh, my.**

|

||||

|

||||

GUINEVERE SAENGER: It should not have affected any customers, I should mention, at this point. But all in all, it took a few of us a few hours to almost completely recover from this event. I think that, without Kubernetes, this would not have been possible.

|

||||

|