Feat: add the resource graph blog (#726)

* Feat: add the resource graph blog
  Signed-off-by: barnettZQG <barnett.zqg@gmail.com>
* Feat: change the access application document
  Signed-off-by: barnettZQG <barnett.zqg@gmail.com>
* Fix: change title
  Signed-off-by: barnettZQG <barnett.zqg@gmail.com>
* Feat: change the quick start document
  Signed-off-by: barnettZQG <barnett.zqg@gmail.com>
* Feat: add the reference about the mongodb configuration
  Signed-off-by: barnettZQG <barnett.zqg@gmail.com>


parent

1a4e36213e

commit

1ee1fdb930

|

|

@ -0,0 +1,145 @@

|

|||

---

|

||||

title: Trace and visualize the relationships between Kubernetes resources with KubeVela

|

||||

author: Qingguo Zeng

|

||||

author_url: https://github.com/barnettZQG

|

||||

author_image_url: https://avatars.githubusercontent.com/u/18493394?v=4

|

||||

tags: [ KubeVela, Observable]

|

||||

description: ""

|

||||

image: https://raw.githubusercontent.com/oam-dev/KubeVela.io/main/docs/resources/KubeVela-03.png

|

||||

hide_table_of_contents: false

|

||||

---

|

||||

|

||||

One of the biggest requests from the KubeVela community is a transparent delivery process for the resources in an application. For example, many users prefer to use Helm Charts to package a lot of complex YAML. But once something goes wrong during deployment, for instance the underlying storage cannot be provisioned, associated resources are not created, or the underlying configuration is incorrect, even a small problem becomes a major troubleshooting hurdle because the Helm Chart is a black box. This is especially true in modern hybrid multi-cluster environments with a wide range of resources, where obtaining useful information and solving the problem can be a very big challenge.

|

||||

|

||||

|

||||

|

||||

As shown in the figure above, KubeVela now offers a real-time resource topology graph for applications, which further improves KubeVela's application-centric delivery experience. Developers only need to care about simple and consistent APIs when initiating application delivery. When they need to troubleshoot problems or follow the delivery process, they can use the resource topology graph to quickly see how resources are arranged across clusters, from the application down to the running status of each Pod instance. Resource relationships are obtained automatically, including those inside complex, black-box Helm Charts.

|

||||

|

||||

In this post, we will describe how this new feature of KubeVela is implemented and works, and the roadmap for this feature.

|

||||

|

||||

## Application resource composition

|

||||

|

||||

In KubeVela, an application consists of multiple components and traits and is associated with delivery workflow and delivery policy configuration. The application configuration generates Kubernetes resources through rendering and inspection and applies them to the target cluster. Take a simple application as an example:

|

||||

|

||||

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: first-vela-app
spec:
  components:
    - name: express-server
      type: webservice
      properties:
        image: oamdev/hello-world
        ports:
          - port: 8000
            expose: false
```

|

||||

|

||||

Based on the above configuration, a `Deployment` resource will be rendered and deployed to the target cluster. If we add a little more configuration, such as:

|

||||

|

||||

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: first-vela-app
spec:
  components:
    - name: express-server
      type: webservice
      properties:
        image: oamdev/hello-world
        ports:
          - port: 8000
            expose: true
      traits:
        - type: gateway
          properties:
            domain: testsvc.example.com
            http:
              "/": 8000
```

|

||||

|

||||

In the above configuration, we set the `expose` field of port 8000 to true and add a trait of type `gateway`. The application then renders three resources: `Deployment`, `Service`, and `Ingress`.

|

||||

|

||||

As mentioned above, the resources rendered directly by the application are called <b>Direct Resources</b>. They are also stored in the `ResourceTracker` as version records, so they can be obtained by direct indexing. When these resources are delivered to the target cluster, taking the `Deployment` resource as an example, a lower-level `ReplicaSet` resource is generated, which in turn derives lower-level `Pod` resources. These resources derived from direct resources are called <b>Indirect Resources</b>. An application resource tree consists of direct and indirect resources, which work together to run dynamic applications at scale.

|

||||

|

||||

## Trace and Visualize resources’ relationships

|

||||

|

||||

The relationship chain `Deployment` => `ReplicaSet` => `Pod` described in the previous section is a resource cascade relationship, which is introductory knowledge of the Kubernetes system. We can build this chain very easily from experience. Here `Deployment` is a direct resource, which we can index by the (application & component & cluster) conditions. Next, we focus on building the relationships between indirect resources.

|

||||

|

||||

In Kubernetes, the concept of Owner Reference is designed to record the relationship between resources. For example, in the following use case, the Pod resource records its parent resource ReplicaSet.

|

||||

|

||||

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kruise-rollout-controller-manager-696bdb76f8-92rsn
  ownerReferences:
    - apiVersion: apps/v1
      blockOwnerDeletion: true
      controller: true
      kind: ReplicaSet
      name: kruise-rollout-controller-manager-696bdb76f8
      uid: aba76833-b6e3-4231-bf2e-539c81be9278
...
```

|

||||

|

||||

Therefore, we can reversely build any resource-dependent link through the resource's Owner Reference. However, there are two difficulties here:

|

||||

|

||||

1. The resource links we know from experience are not necessarily constructed through Owner Reference. Take the HelmRelease resource, the resource API defined by FluxCD to deliver Helm Chart artifacts. In KubeVela, we use this API to deliver Helm Chart artifacts. A Helm Chart can theoretically include any Kubernetes cluster resource type. From the user's point of view, these resources are subordinate resources of the HelmRelease, but HelmRelease cannot currently be the owner of these resources. That is to say, we cannot build a tracking link for resources like HelmRelease through Owner Reference.

|

||||

|

||||

2. When forward tracing resources, if you do not know the subordinate resource types, you need to traverse and query all types of resources and then filter them according to the Owner Reference, which results in a large amount of computation and puts a lot of pressure on the Kubernetes API.
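The forward-tracing idea can be sketched in a few lines of Python. This is only an illustration over in-memory dictionaries shaped like Kubernetes objects, not KubeVela's implementation; the helper names `build_owner_index` and `descendants` and the sample UIDs are hypothetical. Note that the index has to be built from every candidate resource, which is exactly the cost the second difficulty describes.

```python
from collections import defaultdict


def build_owner_index(resources):
    """Map each owner UID to the resources that name it in ownerReferences."""
    children = defaultdict(list)
    for res in resources:
        for ref in res["metadata"].get("ownerReferences", []):
            children[ref["uid"]].append(res)
    return children


def descendants(root, children):
    """Walk forward from a root resource, yielding (depth, resource) pairs."""
    stack = [(0, root)]
    while stack:
        depth, res = stack.pop()
        yield depth, res
        for child in children.get(res["metadata"]["uid"], []):
            stack.append((depth + 1, child))


# Hypothetical sample objects; only the fields the sketch needs are present.
deployment = {"kind": "Deployment", "metadata": {"name": "web", "uid": "d-1"}}
replica_set = {
    "kind": "ReplicaSet",
    "metadata": {
        "name": "web-696bdb76f8",
        "uid": "rs-1",
        "ownerReferences": [{"kind": "Deployment", "name": "web", "uid": "d-1"}],
    },
}
pod = {
    "kind": "Pod",
    "metadata": {
        "name": "web-696bdb76f8-92rsn",
        "uid": "p-1",
        "ownerReferences": [{"kind": "ReplicaSet", "name": "web-696bdb76f8", "uid": "rs-1"}],
    },
}

index = build_owner_index([deployment, replica_set, pod])
chain = [(depth, res["kind"]) for depth, res in descendants(deployment, index)]
print(chain)
```

A resource such as one created by a HelmRelease carries no matching `ownerReferences` entry, so it would never appear in this walk, which is the first difficulty.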

|

||||

|

||||

The cascading relationship of application resources is often the path we follow when troubleshooting application failures or configuration errors. If your Kubernetes experience is not enough to build such a path, troubleshooting Kubernetes application failures will be very difficult. Take HelmRelease: once a failure occurs, we may need to read the source of the Chart definition to know which subordinate resources it generates. This threshold may hinder 90% of developers and users.

|

||||

|

||||

There are many resource types in Kubernetes that do not follow Owner Reference, and their number continues to grow. Therefore, we need to give the system the ability to learn, both to speed up forward queries and to adapt to resources that do not follow the Owner Reference mechanism.

|

||||

|

||||

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: clone-set-relation
  namespace: vela-system
  labels:
    "rules.oam.dev/resources": "true"
data:
  rules: |-
    - parentResourceType:
        group: apps.kruise.io
        kind: CloneSet
      childrenResourceType:
        - apiVersion: v1
          kind: Pod
```

|

||||

|

||||

The above is a configuration use case that tells KubeVela that the `CloneSet` resource cascades down to `Pod`. KubeVela then queries the Pods that satisfy the Owner Reference condition in the same Namespace, so that the query complexity is O(1). A rule defines the multiple resource types that a parent type is associated with downward. This approach is called static configuration learning. We need to implement more rules:
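To make the effect of such a rule concrete, here is a minimal Python sketch of a rule table. It assumes the `rules` text from the ConfigMap has already been parsed into a list of dictionaries; the function names `index_rules` and `child_types` are hypothetical and do not come from KubeVela.

```python
# Rules as they would look after parsing the ConfigMap's `rules` field.
RULES = [
    {
        "parentResourceType": {"group": "apps.kruise.io", "kind": "CloneSet"},
        "childrenResourceType": [{"apiVersion": "v1", "kind": "Pod"}],
    }
]


def index_rules(rules):
    """Build a lookup table: (group, kind) of the parent -> child types."""
    table = {}
    for rule in rules:
        parent = rule["parentResourceType"]
        key = (parent["group"], parent["kind"])
        children = [(c["apiVersion"], c["kind"]) for c in rule["childrenResourceType"]]
        table.setdefault(key, []).extend(children)
    return table


def child_types(table, group, kind):
    """Return the child types to query for a parent, or [] if no rule exists."""
    return table.get((group, kind), [])


rule_table = index_rules(RULES)
print(child_types(rule_table, "apps.kruise.io", "CloneSet"))
```

With the table in place, the lookup of which types to query is a single dictionary access, so only the listed child types need to be queried instead of every resource type in the cluster.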

|

||||

|

||||

For example, suppose we need to check whether a Service is correctly associated with Pods, or whether a PVC is correctly associated with a PV. There is no Owner Reference filter rule between these resources, so configuring only the types is not enough; we also need filter conditions, such as labels or names. At present, KubeVela has some built-in rules for this part of the logic. In addition, to reduce the burden on users of configuring static learning rules, we plan to implement dynamic learning capabilities: based on all resource types in the current cluster, the system can dynamically analyze which types of resources a given resource type owns. The resulting rules can be shared.
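A label-based filter condition might look like the following sketch, which matches Pods against a Service's `spec.selector` in the same namespace. It illustrates the general Kubernetes selector semantics on plain dictionaries and is not KubeVela's built-in rule; the function names and sample objects are hypothetical.

```python
def selector_matches(selector, labels):
    """True when every key/value pair of the selector appears in the labels."""
    if not selector:
        # A Service without a selector does not select any Pods by itself.
        return False
    return all(labels.get(key) == value for key, value in selector.items())


def pods_for_service(service, pods):
    """Return the Pods in the Service's namespace that its selector matches."""
    selector = service["spec"].get("selector", {})
    namespace = service["metadata"]["namespace"]
    return [
        pod
        for pod in pods
        if pod["metadata"]["namespace"] == namespace
        and selector_matches(selector, pod["metadata"].get("labels", {}))
    ]


# Hypothetical sample objects.
service = {
    "metadata": {"name": "web", "namespace": "default"},
    "spec": {"selector": {"app": "web"}},
}
pods = [
    {"metadata": {"name": "web-1", "namespace": "default", "labels": {"app": "web", "tier": "fe"}}},
    {"metadata": {"name": "db-1", "namespace": "default", "labels": {"app": "db"}}},
    {"metadata": {"name": "web-2", "namespace": "prod", "labels": {"app": "web"}}},
]

matched = [pod["metadata"]["name"] for pod in pods_for_service(service, pods)]
print(matched)
```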

|

||||

|

||||

## Visualize the resource status and key information

|

||||

|

||||

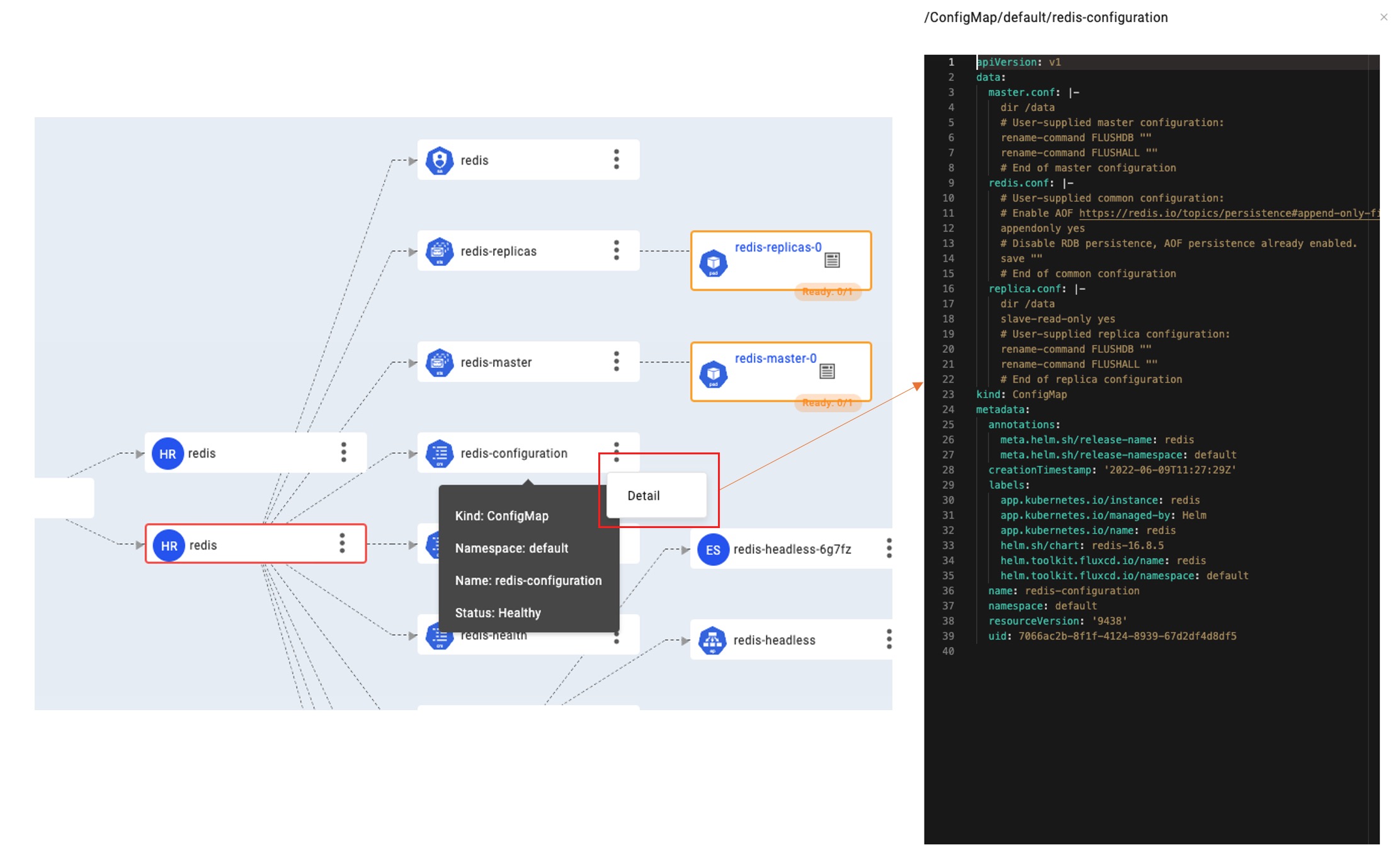

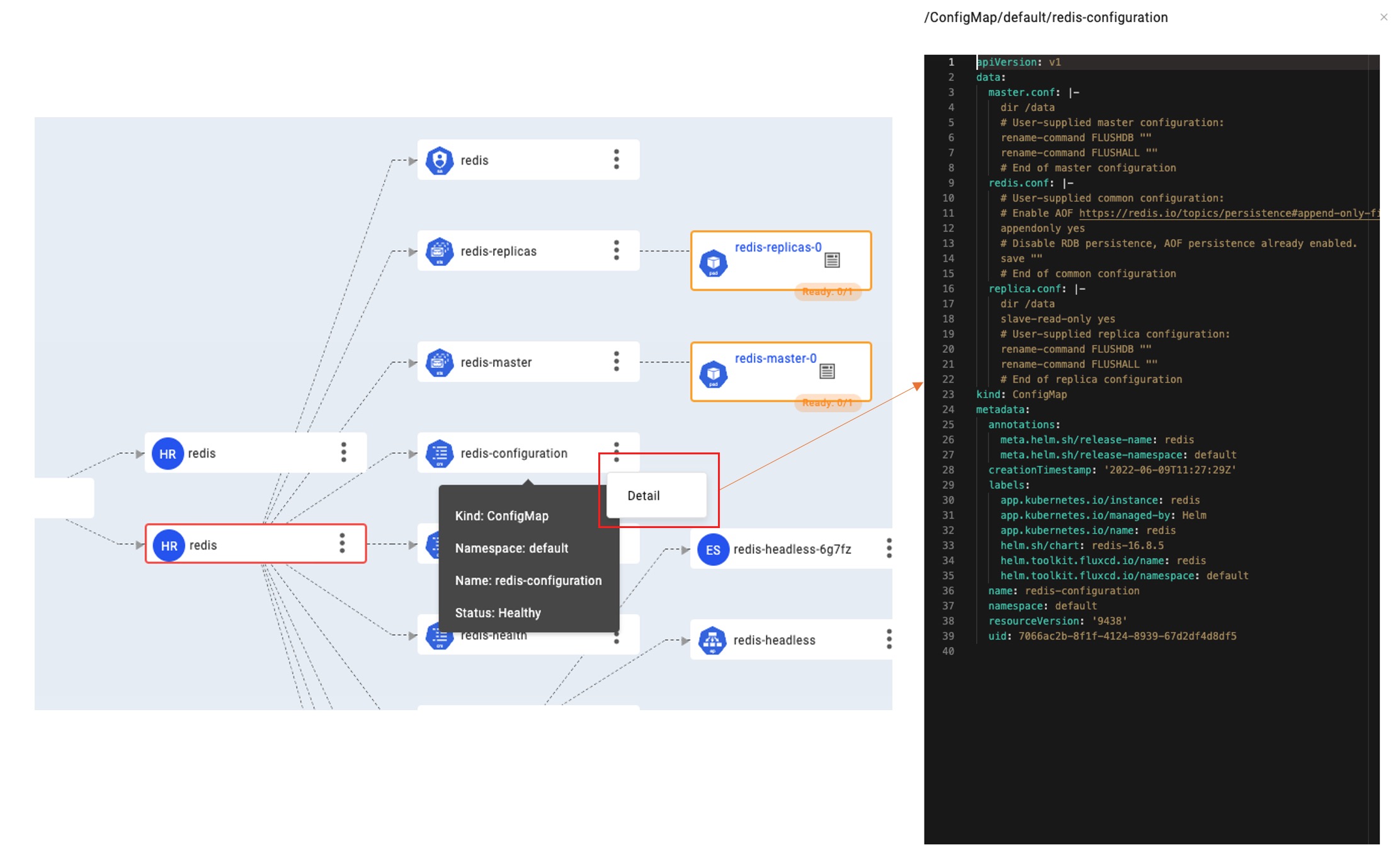

Resource relationships alone cannot solve our difficulties. We also need to surface exceptions directly, so that developers have O(1) complexity when troubleshooting errors. The state calculation differs slightly between resources, but resource status generally has a Condition field that represents its final state. Building on this, and adding state calculation methods for specific resources, we form the resource state calculation logic. We divide resource states into normal, in-progress, and abnormal, which are represented by blue, yellow, and red border colors on the topology graph nodes, making them easy for users to distinguish.
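As a rough illustration of condition-based state calculation, the sketch below picks one key condition per resource kind and maps it to the three states and colors named above. The `KEY_CONDITION` table and the decision rule are assumptions made for this example; KubeVela's actual logic for each resource type may differ.

```python
# Hypothetical choice of the condition type that summarises each kind's health.
KEY_CONDITION = {"Pod": "Ready", "Deployment": "Available"}

# State-to-color mapping as described in the post.
COLORS = {"normal": "blue", "in-progress": "yellow", "abnormal": "red"}


def classify(resource):
    """Return (state, color) based on the resource's key status condition."""
    wanted = KEY_CONDITION.get(resource["kind"])
    conditions = resource.get("status", {}).get("conditions", [])
    # Default when the key condition is missing or its status is "Unknown".
    state = "in-progress"
    for cond in conditions:
        if cond["type"] == wanted:
            if cond["status"] == "True":
                state = "normal"
            elif cond["status"] == "False":
                state = "abnormal"
            break
    return state, COLORS[state]


# Hypothetical sample objects.
ready_pod = {"kind": "Pod", "status": {"conditions": [{"type": "Ready", "status": "True"}]}}
new_pod = {"kind": "Pod", "status": {}}
broken_deploy = {
    "kind": "Deployment",
    "status": {"conditions": [{"type": "Available", "status": "False"}]},
}

print(classify(ready_pod), classify(new_pod), classify(broken_deploy))
```

A real implementation would need per-kind refinements, for example treating a Pod that is still pulling its image as in-progress rather than abnormal.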

|

||||

|

||||

|

||||

|

||||

In addition, different resources have different key information, such as whether a PVC is bound, whether a Pod instance has started, whether a Service resource is associated with an external IP, and so on. Some of this key information is displayed at the bottom of the resource node, and the rest is displayed when the mouse moves over the node. It helps you quickly determine whether the resource is configured correctly and whether its status is normal.

|

||||

|

||||

Further, if you want to check the detailed configuration of a ConfigMap, whether the capacity and access mode of PersistentVolumes are correct, whether the RBAC authorization rules are correct, and so on, you do not need to leave VelaUX and manually dig through YAML files. Click the Detail button in the node extension area to initiate a query. KubeVela will securely query and display the latest configuration from the target cluster through the cluster gateway.

|

||||

|

||||

|

||||

|

||||

## What's next?

|

||||

|

||||

* More intelligent

|

||||

|

||||

We will continue to refine the default rules and find more ways to intelligently judge resource diagrams and key information so that developers can troubleshoot errors without too much experience.

|

||||

|

||||

* Application topology graph

|

||||

|

||||

What we are currently building is the Kubernetes resource map, which is actually not our original intention. We prefer that business developers only pay attention to the application and all the relationships and states of its components, and at the same time combine business metrics analysis and monitoring data to reflect the operating pressure of the service.

|

||||

|

||||

* More runtimes

|

||||

|

||||

The currently established resource relationship graph is mainly based on Kubernetes resources. KubeVela also has another core runtime, the cloud service platform. We need to associate the resource system of cloud services with the resource topology graph, which makes it easier for developers to manage multi-cloud applications.

|

||||

|

|

@ -193,11 +193,11 @@ It requires you to override with a new password for the first login, please make

|

|||

|

||||

Click the application card, then you can view the details of the application.

|

||||

|

||||

|

||||

|

||||

|

||||

## Clean up

|

||||

|

||||

```

|

||||

```bash

|

||||

$ vela delete first-vela-app

|

||||

Deleting Application "first-vela-app"

|

||||

app "first-vela-app" deleted from namespace "prod"

|

||||

|

|

|

|||

|

|

@ -13,7 +13,7 @@ expected output:

|

|||

Addon: velaux enabled Successfully.

|

||||

```

|

||||

|

||||

VelaUX need authentication. Default username is `admin` and the password is `VelaUX12345`. Please must set and remember the new password after the first login.

|

||||

VelaUX needs authentication. The default username is `admin` and the password is `VelaUX12345`. Be sure to set and remember a new password after the first login.

|

||||

|

||||

By default, VelaUX does not expose any port.

|

||||

|

||||

|

|

@ -32,7 +32,7 @@ Choose `> Cluster: local | Namespace: vela-system | Component: velaux | Kind: Se

|

|||

The VelaUX addon supports three service types, aligned with the Kubernetes Service types: `ClusterIP`, `NodePort`, and `LoadBalancer`.

|

||||

By default the service type is ClusterIP for security.

|

||||

|

||||

If you want to expose your VelaUX dashboard for convenient, you can specify the service type.

|

||||

If you want to expose your VelaUX dashboard for convenience, you can specify the service type.

|

||||

|

||||

- The `LoadBalancer` type requires a cloud LoadBalancer to be available in your cluster.

|

||||

```shell script

|

||||

|

|

@ -43,7 +43,7 @@ If you want to expose your VelaUX dashboard for convenient, you can specify the

|

|||

vela addon enable velaux serviceType=NodePort

|

||||

```

|

||||

|

||||

After specify the service type to `LoadBalancer` or `NodePort`, you can obtain the access address through `vela status`:

|

||||

After setting the service type to `LoadBalancer` or `NodePort`, you can obtain the access address through `vela status`:

|

||||

|

||||

```

|

||||

vela status addon-velaux -n vela-system --endpoint

|

||||

|

|

@ -62,8 +62,8 @@ The expected output:

|

|||

|

||||

If you have an ingress controller and a domain available in your cluster, you can also deploy VelaUX by specifying a domain, as shown below:

|

||||

|

||||

```shell script

|

||||

vela addon enable velaux domain=example.doamin.com

|

||||

```bash

|

||||

$ vela addon enable velaux domain=example.domain.com

|

||||

```

|

||||

|

||||

The expected output:

|

||||

|

|

@ -85,6 +85,14 @@ If you enabled the traefik addon, you can set the `gatewayDriver` parameter to u

|

|||

$ vela addon enable velaux domain=example.domain.com gatewayDriver=traefik

|

||||

```

|

||||

|

||||

## Setup with MongoDB database

|

||||

|

||||

VelaUX supports Kubernetes and MongoDB as the database. The default is Kubernetes. We strongly advise using MongoDB to power your production environment.

|

||||

|

||||

```shell script

|

||||

$ vela addon enable velaux dbType=mongodb dbURL=mongodb://<MONGODB_USER>:<MONGODB_PASSWORD>@<MONGODB_URL>

|

||||

```

|

||||

|

||||

## Specify the addon image

|

||||

|

||||

By default the image repo is Docker Hub. You can specify a different image repo with the `repo` parameter:

|

||||

|

|

@ -93,7 +101,6 @@ By default the image repo is docker hub, you can specify the image repo by the `

|

|||

vela addon enable velaux repo=acr.kubevela.net

|

||||

```

|

||||

|

||||

But it has API request limit, so you can try specify the `acr.kubevela.net` image registry as alternative,

|

||||

It's maintained by KubeVela team, we will upload/sync the built-in addon image for convenience.

|

||||

You can try specifying the `acr.kubevela.net` image registry as an alternative. It is maintained by the KubeVela team, and we upload/sync the built-in addon images to it for convenience.

|

||||

|

||||

This feature can also help you to build your private installation, just upload all images to your private image registry.

|

||||

|

|

@ -0,0 +1,132 @@

|

|||

---

|

||||

title: Access the application

|

||||

---

|

||||

|

||||

After deploying the application, the next step is publishing your service.

|

||||

|

||||

There are multiple ways to do this. For testing, you can use Port Forward or Node Port. For production, you can use a LoadBalancer or a cluster gateway. This section focuses on the production environment.

|

||||

|

||||

## Use LoadBalancer by Cloud Provider

|

||||

|

||||

This way is only suitable for the cloud environment, such as Aliyun, AWS, and Azure.

|

||||

|

||||

For the webservice component, you only need to set `exposeType` to `LoadBalancer`, which means the component will generate a Kubernetes Service of type LoadBalancer. After deployment, you can get the service endpoints.

|

||||

|

||||

For example:

|

||||

|

||||

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: webservice-app
spec:
  components:
    - name: frontend
      type: webservice
      properties:
        image: oamdev/testapp:v1
        cmd: ["node", "server.js"]
        ports:
          - port: 8080
            expose: true
        exposeType: LoadBalancer
```

|

||||

|

||||

For other components, similarly, you only need to generate a Kubernetes Service of type LoadBalancer.

|

||||

|

||||

## Use HTTP Domain by Ingress Gateway

|

||||

|

||||

This approach requires an ingress controller to be installed in the cluster.

|

||||

|

||||

Users can bind the `gateway` trait to the component to generate the Ingress. For example:

|

||||

|

||||

```yaml
traits:
  - type: gateway
    properties:
      domain: testsvc.example.com
      http:
        "/": 8000
        "/manage": 8090
```

|

||||

|

||||

This means the component service is accessed through the domain `testsvc.example.com` with two rules: requests whose path begins with `/manage` go to port 8090 of the component, and all other request paths go to port 8000.
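The two rules can be pictured with a small Python sketch that picks the longest matching path prefix. It assumes element-wise prefix matching in the style of the Kubernetes Ingress `Prefix` path type; the actual matching behavior depends on the path type the trait renders and on the ingress controller in use, and the `route` helper is hypothetical.

```python
HTTP_RULES = {"/": 8000, "/manage": 8090}


def matches_prefix(prefix, path):
    """Element-wise prefix match: /manage matches /manage and /manage/x only."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(rules, path):
    """Return the port of the longest matching prefix, or None if none match."""
    candidates = [prefix for prefix in rules if matches_prefix(prefix, path)]
    if not candidates:
        return None
    return rules[max(candidates, key=len)]


for request_path in ["/", "/api/items", "/manage", "/manage/users"]:
    print(request_path, "->", route(HTTP_RULES, request_path))
```

Under this assumption a path such as `/management` does not match `/manage` and falls through to the `/` rule.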

|

||||

|

||||

In VelaUX, you can click the `Add Trait` button and select the `gateway` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

## Use Traefik

|

||||

|

||||

This approach requires the traefik addon to be installed first.

|

||||

|

||||

```bash

|

||||

$ vela addon enable traefik

|

||||

```

|

||||

|

||||

For more information about this addon, refer to [Traefik Addon](../reference/addons/traefik).

|

||||

|

||||

This addon provides three traits, including [http-route](../reference/addons/traefik#http-routetrait), [https-route](../reference/addons/traefik#https-routetrait) and [tcp-route](../reference/addons/traefik#tcp-routetrait).

|

||||

|

||||

### HTTP/HTTPS

|

||||

|

||||

For HTTP, you can click the `Add Trait` button and select the `http-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: http-route
    properties:
      domains:
        - testsvc.example.com
      rules:
        - port: 80
      gatewayName: traefik-gateway
      listenerName: web
```

|

||||

|

||||

There must be one route rule. The port is the same as the service port, and the route condition supports the Header and Request Path.

|

||||

|

||||

For HTTPS, you must first create the TLS certificate. In VelaUX, open the Integrations page, where there is a `TLS Certificate` type. Click the New button. You need to provide the certificate's public and private keys, with the content encoded in base64.
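If you are unsure how to produce the base64 content, the following Python sketch encodes PEM text with the standard library. The PEM string here is a placeholder, not a real certificate, and the `encode_pem` helper is hypothetical; in practice you would read your own certificate and key files.

```python
import base64


def encode_pem(pem_text: str) -> str:
    """Base64-encode PEM text so it can be pasted into a form field."""
    return base64.b64encode(pem_text.encode("utf-8")).decode("ascii")


# Placeholder content standing in for a real certificate file.
placeholder_cert = "-----BEGIN CERTIFICATE-----\nMIIB...\n-----END CERTIFICATE-----\n"

encoded = encode_pem(placeholder_cert)
print(encoded)
```

Decoding the result with `base64.b64decode` should return the original PEM bytes, which is a quick way to check the value before submitting it.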

|

||||

|

||||

|

||||

|

||||

This will generate the secret and distribute it to the managed clusters along with the application.

|

||||

|

||||

Then open the application configuration page, click the `Add Trait` button, and select the `https-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: https-route
    properties:
      domains:
        - testsvc.example.com
      TLSPort: 8443
      secrets:
        - name: kubevela
      rules:
        - port: 80
```

|

||||

|

||||

The secret name is the same as the name of the certificate configuration.

|

||||

|

||||

### TCP

|

||||

|

||||

This approach suits services that use a stream protocol, and it lets them reuse the same public IP address.

|

||||

|

||||

You can click the `Add Trait` button and select the `tcp-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: tcp-route
    properties:
      rules:
        - gatewayPort: 16379
          port: 6379
          serviceName: redis-master
```

|

||||

|

|

@ -0,0 +1,143 @@

|

|||

---

|

||||

title: Trace and visualize the multi-cluster Kubernetes resource topology

|

||||

author: Qingguo Zeng

|

||||

author_url: https://github.com/barnettZQG

|

||||

author_image_url: https://avatars.githubusercontent.com/u/18493394?v=4

|

||||

tags: [ KubeVela, Observable]

|

||||

description: ""

|

||||

image: https://raw.githubusercontent.com/oam-dev/KubeVela.io/main/docs/resources/KubeVela-03.png

|

||||

hide_table_of_contents: false

|

||||

---

|

||||

|

||||

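Another major request in application delivery is transparent management of the resource delivery process. For example, many community users like to use Helm Charts to package a large amount of complex YAML, but once a deployment goes wrong, for instance when the underlying storage is not provisioned properly, associated resources are not created properly, or the underlying configuration is incorrect, even a small problem becomes hard to troubleshoot because the whole chart is a black box. Especially in modern hybrid multi-cluster environments, where resource types are numerous and intertwined, obtaining useful information and solving the problem is a very big challenge.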

|

||||

|

||||

|

||||

|

||||

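As shown in the figure above, KubeVela has released a real-time resource topology graph for applications, which further improves KubeVela's application-centric delivery experience. Developers only need to care about simple and consistent APIs when initiating application delivery. When they need to troubleshoot problems or follow the delivery process, they can use the resource topology feature to quickly see how resources are arranged across clusters, trace from the application all the way down to the running status of Pod instances, and automatically obtain the relationships between resources, including those in complex, black-box Helm Charts.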

|

||||

|

||||

In this post, we describe how this new KubeVela capability is implemented and how it works, as well as the direction of future iterations.

|

||||

|

||||

## Application resource composition

|

||||

|

||||

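In KubeVela, an application consists of multiple components and traits, and is associated with delivery workflow and delivery policy configuration. The application configuration generates Kubernetes resources through processes such as rendering and validation, and these resources are dispatched to the target cluster. Take a simple application as an example: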

|

||||

|

||||

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: first-vela-app
spec:
  components:
    - name: express-server
      type: webservice
      properties:
        image: oamdev/hello-world
        ports:
          - port: 8000
            expose: false
```

|

||||

|

||||

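Based on the above configuration, a `Deployment` resource is rendered and deployed to the target cluster. If we add a little more configuration, for example: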

|

||||

|

||||

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: first-vela-app
spec:
  components:
    - name: express-server
      type: webservice
      properties:
        image: oamdev/hello-world
        ports:
          - port: 8000
            expose: true
      traits:
        - type: gateway
          properties:
            domain: testsvc.example.com
            http:
              "/": 8000
```

|

||||

|

||||

In the above configuration, we set the `expose` property of port 8000 to true and add a trait of type `gateway`. The application then renders three resources: `Deployment`, `Service`, and `Ingress`.

|

||||

|

||||

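As described above, the resources rendered directly by the application are called <b>direct resources</b>. They are also stored in the `ResourceTracker` as version records, so we can obtain them by direct indexing. After these resources are dispatched to the target cluster, taking the `Deployment` resource as an example, a lower-level `ReplicaSet` resource is generated, which in turn derives lower-level `Pod` resources. These resources derived from direct resources are called <b>indirect resources</b>. The complete application resource tree consists of direct and indirect resources, all of which are key links in keeping the application running.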

|

||||

|

||||

## Application resource relationships

|

||||

|

||||

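The `Deployment` => `ReplicaSet` => `Pod` chain described in the previous section is a resource cascade relationship. This is introductory knowledge of the Kubernetes system, and we can build this chain very easily from experience. Here `Deployment` is a direct resource, which we can index by the (application & component & cluster) conditions. Next, we mainly analyze how relationships between indirect resources are established.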

|

||||

|

||||

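In Kubernetes, the concept of Owner Reference is designed to record the relationships between resources. For example, in the following use case, the Pod resource records its parent resource, a ReplicaSet.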

|

||||

|

||||

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kruise-rollout-controller-manager-696bdb76f8-92rsn
  ownerReferences:
    - apiVersion: apps/v1
      blockOwnerDeletion: true
      controller: true
      kind: ReplicaSet
      name: kruise-rollout-controller-manager-696bdb76f8
      uid: aba76833-b6e3-4231-bf2e-539c81be9278
...
```

|

||||

|

||||

So in theory we can build any resource dependency chain in reverse through a resource's Owner Reference. However, there are two difficulties here:

|

||||

|

||||

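1. The resource chains we know from experience cannot always be built through Owner Reference. Take the HelmRelease resource, the resource API defined by FluxCD for delivering Helm Chart packages. In KubeVela, we use this API to deliver Helm Chart artifacts. A Helm Chart can in theory include any Kubernetes cluster resource type. From the user's point of view, these resources are all subordinate resources of the HelmRelease, but at present HelmRelease cannot act as the Owner of these resources. That is to say, we cannot build a tracing chain for resources like HelmRelease through Owner Reference.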

|

||||

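2. When tracing resources forward, if the subordinate resource types are unknown, we have to traverse and query all resource types and then filter them by Owner Reference, which is computationally expensive and puts considerable pressure on the cluster API.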

|

||||

|

||||

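The cascade relationship of application resources is often the path we follow when troubleshooting application failures or configuration errors. If your Kubernetes experience is not enough to build such a path, troubleshooting Kubernetes application failures will be very difficult. Take HelmRelease: once a failure occurs, we may need to read the source of the Chart definition to know which subordinate resources it generates. This threshold may hold back 90% of developers and users.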

|

||||

|

||||

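In Kubernetes, there are probably many resource types whose cascade relationships do not follow Owner Reference, and the number keeps growing. Therefore, we need to give the system the ability to learn, both to speed up forward queries and to adapt to resources that do not follow Owner Reference.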

|

||||

|

||||

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: clone-set-relation
  namespace: vela-system
  labels:
    "rules.oam.dev/resources": "true"
data:
  rules: |-
    - parentResourceType:
        group: apps.kruise.io
        kind: CloneSet
      childrenResourceType:
        - apiVersion: v1
          kind: Pod
```

|

||||

|

||||

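The above is a configuration use case that tells the system that the `CloneSet` resource cascades down to the `Pod` resource. The system then queries the Pods that satisfy the Owner Reference condition in the Namespace where the CloneSet lives, so that the query complexity is O(1). Each rule defines the multiple resource types that a parent type is associated with downward. This approach is called static configuration learning. We need to implement more rules: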

|

||||

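For example, consider the case where we need to check whether a Service is correctly associated with Pods, or whether a PVC is correctly associated with a PV. There is no Owner Reference filter rule between these resources, so configuring only the types is not enough; we also need filter conditions, such as labels or names. At present, KubeVela has some built-in rules for this part of the logic. In addition, to reduce the burden on users of configuring static learning rules, we plan to implement a dynamic learning capability: based on all resource types in the current cluster, the system can dynamically analyze which types of resources a given resource type owns. The learned rules can be shared.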

|

||||

|

||||

## Resource status and key information

|

||||

|

||||

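Resource relationships alone cannot solve our difficulties. We also need to surface exceptions directly, so that developers have O(1) complexity when troubleshooting errors. The state calculation differs slightly between resources, but resource status generally contains a Condition field that represents its final state. Building on this, and adding state calculation methods for specific resources, we form the resource state calculation logic. We divide resource states into normal, in-progress, and abnormal, which are represented in the topology graph by blue, yellow, and red respectively, making them easy for users to distinguish.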

|

||||

|

||||

|

||||

|

||||

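In addition, different resources have different key information, such as whether a PVC is bound, whether a Pod instance has finished starting, whether a Service resource has been associated with an external IP, and so on. We call this key information, and it is displayed either in a widget on the resource node or when the mouse moves over the node. This information helps users quickly judge whether the resource is configured correctly and whether its status is normal.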

|

||||

|

||||

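Further, if you want to check the detailed configuration of a ConfigMap, whether the capacity and access mode of PersistentVolumes are correct, whether the RBAC authorization rules are correct, and so on, you do not need to leave the console and manually dig through YAML. Click the Detail button in the node's extension area to initiate a query. KubeVela securely queries the latest state from the target cluster through the gateway and displays it.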

|

||||

|

||||

|

||||

|

||||

## What's next?

|

||||

|

||||

* More intelligent

|

||||

|

||||

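We will continue to polish the default rules and find more ways to intelligently determine the resource relationship graph and key information, so that developers can troubleshoot errors without needing much prior experience.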

|

||||

|

||||

* Application/component topology graph

|

||||

|

||||

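What we have built so far is a graph of the underlying resources, which is actually not our original intention. We would prefer that business developers only need to pay attention to the application and the relationships and states of all the components it contains, combined with business metrics analysis and monitoring data to reflect the operating pressure of the service.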

|

||||

|

||||

* More runtimes

|

||||

|

||||

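The resource relationship graph built so far is mainly based on Kubernetes resources. KubeVela has another core runtime, the cloud service platform, and we need to associate the resource system of cloud services with the resource topology graph, so that developers can manage multi-cloud applications more easily.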

|

||||

|

|

@ -133,9 +133,9 @@ Hello World

|

|||

|

||||

### Execute the workflow to deploy the application

|

||||

|

||||

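Click the `Deploy` button in the upper right of the application details page and select the workflow of the target environment to start deploying the application.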

|

||||

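Click the `Deploy` button in the upper right of the application details page and select the workflow of the target environment to start deploying the application. After the deployment starts, you can switch to the environment view and open the application resource topology graph to observe the resource status.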

|

||||

|

||||

|

||||

|

||||

|

||||

### Delete the application

|

||||

|

||||

|

|

|

|||

|

|

@ -1,5 +1,5 @@

|

|||

---

|

||||

title: Enable the VelaUX dashboard

|

||||

title: VelaUX dashboard

|

||||

---

|

||||

|

||||

## Install

|

||||

|

|

@ -9,14 +9,13 @@ vela addon enable velaux

|

|||

```

|

||||

|

||||

expected output:

|

||||

|

||||

```

|

||||

Addon: velaux enabled Successfully.

|

||||

```

|

||||

|

||||

VelaUX need authentication. Default username is `admin` and the password is `VelaUX12345`. Please must set and remember the new password after the first login.

|

||||

VelaUX needs authentication. The default username is `admin` and the password is `VelaUX12345`. Be sure to set and remember a new password after the first login.

|

||||

|

||||

By default, velaux didn't have any exposed port.

|

||||

By default, VelaUX does not expose any port.

|

||||

|

||||

## Visit VelaUX by port-forward

|

||||

|

||||

|

|

@ -33,25 +32,24 @@ Choose `> Cluster: local | Namespace: vela-system | Component: velaux | Kind: Se

|

|||

The VelaUX addon supports three service types, aligned with the Kubernetes Service types: `ClusterIP`, `NodePort`, and `LoadBalancer`.

|

||||

By default the service type is ClusterIP for security.

|

||||

|

||||

If you want to expose your VelaUX dashboard for convenient, you can specify the service type.

|

||||

If you want to expose your VelaUX dashboard for convenience, you can specify the service type.

|

||||

|

||||

- `LoadBalancer` type requires your cluster has cloud LoadBalancer available.

|

||||

```shell script

|

||||

vela addon enable velaux serviceType=LoadBalancer

|

||||

```

|

||||

```shell script

|

||||

vela addon enable velaux serviceType=LoadBalancer

|

||||

```

|

||||

- `NodePort` type requires you can access the Kubernetes Node IP/Port.

|

||||

```shell script

|

||||

vela addon enable velaux serviceType=NodePort

|

||||

```

|

||||

```shell script

|

||||

vela addon enable velaux serviceType=NodePort

|

||||

```

|

||||

|

||||

After specify the service type to `LoadBalancer` or `NodePort`, you can obtain the access address through `vela status`:

|

||||

After specifying the service type to `LoadBalancer` or `NodePort`, you can obtain the access address through `vela status`:

|

||||

|

||||

```

|

||||

vela status addon-velaux -n vela-system --endpoint

|

||||

```

|

||||

|

||||

The expected output:

|

||||

|

||||

```

|

||||

+----------------------------+----------------------+

|

||||

| REF(KIND/NAMESPACE/NAME) | ENDPOINT |

|

||||

|

|

@ -64,12 +62,11 @@ The expected output:

|

|||

|

||||

If you have an ingress controller and a domain available in your cluster, you can also deploy VelaUX by specifying a domain, as shown below:

|

||||

|

||||

```shell script

|

||||

vela addon enable velaux domain=example.doamin.com

|

||||

```bash

|

||||

$ vela addon enable velaux domain=example.domain.com

|

||||

```

|

||||

|

||||

The expected output:

|

||||

|

||||

```

|

||||

I0112 15:23:40.428364 34884 apply.go:106] "patching object" name="addon-velaux" resource="core.oam.dev/v1beta1, Kind=Application"

|

||||

I0112 15:23:40.676894 34884 apply.go:106] "patching object" name="addon-secret-velaux" resource="/v1, Kind=Secret"

|

||||

|

|

@ -82,15 +79,28 @@ Please access the velaux from the following endpoints:

|

|||

+----------------------------+---------------------------+

|

||||

```

|

||||

|

||||

If you enabled the traefik addon, you can set the `gatewayDriver` parameter to use the Gateway API.

|

||||

|

||||

```shell script

|

||||

$ vela addon enable velaux domain=example.domain.com gatewayDriver=traefik

|

||||

```

|

||||

|

||||

## Setup with MongoDB database

|

||||

|

||||

VelaUX supports Kubernetes and MongoDB as the database. The default is Kubernetes. We strongly advise using MongoDB to power your production environment.

|

||||

|

||||

```shell script

|

||||

$ vela addon enable velaux dbType=mongodb dbURL=mongodb://<MONGODB_USER>:<MONGODB_PASSWORD>@<MONGODB_URL>

|

||||

```

|

||||

|

||||

## Specify the addon image

|

||||

|

||||

By default, images are pulled from Docker Hub. You can specify a different image registry with the `repo` parameter:

|

||||

|

||||

```

|

||||

vela addon enable velaux repo=acr.kubevela.net

|

||||

```

|

||||

|

||||

You can specify the `acr.kubevela.net` image registry as an alternative. It is maintained by the KubeVela team, which uploads and syncs the built-in addon images for convenience.

|

||||

|

||||

This feature can also help you build a private installation: just upload all images to your private image registry.

|

||||

|

|

@ -0,0 +1,132 @@

|

|||

---

|

||||

title: Access the application

|

||||

---

|

||||

|

||||

After deploying the application, the next step is to publish your service.

There are multiple ways to do this: for testing, you can use Port Forward or NodePort; for production, you can use a LoadBalancer or a cluster gateway. This section focuses on the production environment.

|

||||

|

||||

## Use LoadBalancer by Cloud Provider

|

||||

|

||||

This approach is only suitable for cloud environments, such as Aliyun, AWS, and Azure.

For the webservice component, you only need to set `exposeType` to `LoadBalancer`, which means the component will generate a Kubernetes Service of type LoadBalancer. After deployment, you can get the service endpoints.

For example:

|

||||

|

||||

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: webservice-app
spec:
  components:
    - name: frontend
      type: webservice
      properties:
        image: oamdev/testapp:v1
        cmd: ["node", "server.js"]
        ports:
          - port: 8080
            expose: true
        exposeType: LoadBalancer
```

|

||||

|

||||

Similarly, for other components, you only need to generate a Kubernetes Service of type LoadBalancer.

|

||||

|

||||

## Use HTTP Domain by Ingress Gateway

|

||||

|

||||

This approach requires an ingress controller to be installed in the cluster.

Users can bind the `gateway` trait to the component to generate the Ingress. For example:

|

||||

|

||||

```yaml
traits:
  - type: gateway
    properties:
      domain: testsvc.example.com
      http:
        "/": 8000
        "/manage": 8090
```

|

||||

|

||||

This exposes the component service at the domain `testsvc.example.com` with two rules: requests whose path begins with `/manage` are routed to port 8090 of the component, and all other requests are routed to port 8000.

In VelaUX, you can click the `Add Trait` button and select the `gateway` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

## Use Traefik

|

||||

|

||||

This approach requires the traefik addon to be installed first.

|

||||

|

||||

```bash

|

||||

$ vela addon enable traefik

|

||||

```

|

||||

|

||||

For more information about this addon, refer to [Traefik Addon](../reference/addons/traefik).

|

||||

|

||||

This addon provides three traits, including [http-route](../reference/addons/traefik#http-routetrait), [https-route](../reference/addons/traefik#https-routetrait) and [tcp-route](../reference/addons/traefik#tcp-routetrait).

|

||||

|

||||

### HTTP/HTTPS

|

||||

|

||||

For HTTP, you can click the `Add Trait` button and select the `http-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: http-route
    properties:
      domains:
        - testsvc.example.com
      rules:
        - port: 80
      gatewayName: traefik-gateway
      listenerName: web
```

|

||||

|

||||

There must be at least one route rule. The port is the same as the service port, and the route condition supports matching on the Header and the Request Path.

For HTTPS, you must first create the TLS certificate. In VelaUX, open the Integrations page, where there is a `TLS Certificate` type. Click the New button, then provide the certificate's public and private keys, with their content encoded in base64.

|

||||

|

||||

|

||||

|

||||

This generates the secret and distributes it to the managed clusters along with the application.

Then open the application configuration page, click the `Add Trait` button, and select the `https-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: https-route
    properties:
      domains:
        - testsvc.example.com
      TLSPort: 8443
      secrets:
        - name: kubevela
      rules:
        - port: 80
```

|

||||

|

||||

The secret name is the same as the name of the certificate configuration.

|

||||

|

||||

### TCP

|

||||

|

||||

This approach is suitable for services that use a stream protocol, and it reuses the same public IP address.

You can click the `Add Trait` button and select the `tcp-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: tcp-route
    properties:
      rules:
        - gatewayPort: 16379
          port: 6379
          serviceName: redis-master
```

|

||||

|

|

@ -133,9 +133,9 @@ Hello World

|

|||

|

||||

### Run the workflow to deploy the application

|

||||

|

||||

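Click the `Deploy` button at the top right of the application details page and select the workflow of the target environment to start deploying the application. Once the deployment has started, you can switch to the environment view and open the application resource topology graph to observe the resource status.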

|

||||

|

||||

|

||||

|

||||

|

||||

### Delete the application

|

||||

|

||||

|

|

|

|||

|

|

@ -0,0 +1,132 @@

|

|||

---

|

||||

title: Access the application

|

||||

---

|

||||

|

||||

After deploying the application, the next step is to publish your service.

There are multiple ways to do this: for testing, you can use Port Forward or NodePort; for production, you can use a LoadBalancer or a cluster gateway. This section focuses on the production environment.

|

||||

|

||||

## Use LoadBalancer by Cloud Provider

|

||||

|

||||

This approach is only suitable for cloud environments, such as Aliyun, AWS, and Azure.

For the webservice component, you only need to set `exposeType` to `LoadBalancer`, which means the component will generate a Kubernetes Service of type LoadBalancer. After deployment, you can get the service endpoints.

For example:

|

||||

|

||||

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: webservice-app
spec:
  components:
    - name: frontend
      type: webservice
      properties:
        image: oamdev/testapp:v1
        cmd: ["node", "server.js"]
        ports:
          - port: 8080
            expose: true
        exposeType: LoadBalancer
```

|

||||

|

||||

Similarly, for other components, you only need to generate a Kubernetes Service of type LoadBalancer.

|

||||

|

||||

## Use HTTP Domain by Ingress Gateway

|

||||

|

||||

This approach requires an ingress controller to be installed in the cluster.

Users can bind the `gateway` trait to the component to generate the Ingress. For example:

|

||||

|

||||

```yaml
traits:
  - type: gateway
    properties:
      domain: testsvc.example.com
      http:
        "/": 8000
        "/manage": 8090
```

|

||||

|

||||

This exposes the component service at the domain `testsvc.example.com` with two rules: requests whose path begins with `/manage` are routed to port 8090 of the component, and all other requests are routed to port 8000.

In VelaUX, you can click the `Add Trait` button and select the `gateway` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

## Use Traefik

|

||||

|

||||

This approach requires the traefik addon to be installed first.

|

||||

|

||||

```bash

|

||||

$ vela addon enable traefik

|

||||

```

|

||||

|

||||

For more information about this addon, refer to [Traefik Addon](../reference/addons/traefik).

|

||||

|

||||

This addon provides three traits, including [http-route](../reference/addons/traefik#http-routetrait), [https-route](../reference/addons/traefik#https-routetrait) and [tcp-route](../reference/addons/traefik#tcp-routetrait).

|

||||

|

||||

### HTTP/HTTPS

|

||||

|

||||

For HTTP, you can click the `Add Trait` button and select the `http-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: http-route
    properties:
      domains:
        - testsvc.example.com
      rules:
        - port: 80
      gatewayName: traefik-gateway
      listenerName: web
```

|

||||

|

||||

There must be at least one route rule. The port is the same as the service port, and the route condition supports matching on the Header and the Request Path.

For HTTPS, you must first create the TLS certificate. In VelaUX, open the Integrations page, where there is a `TLS Certificate` type. Click the New button, then provide the certificate's public and private keys, with their content encoded in base64.

|

||||

|

||||

|

||||

|

||||

This generates the secret and distributes it to the managed clusters along with the application.

Then open the application configuration page, click the `Add Trait` button, and select the `https-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: https-route
    properties:
      domains:
        - testsvc.example.com
      TLSPort: 8443
      secrets:
        - name: kubevela
      rules:
        - port: 80
```

|

||||

|

||||

The secret name is the same as the name of the certificate configuration.

|

||||

|

||||

### TCP

|

||||

|

||||

This approach is suitable for services that use a stream protocol, and it reuses the same public IP address.

You can click the `Add Trait` button and select the `tcp-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: tcp-route
    properties:
      rules:
        - gatewayPort: 16379
          port: 6379
          serviceName: redis-master
```

|

||||

29

sidebars.js


|

|

@ -30,7 +30,7 @@ module.exports = {

|

|||

items: [

|

||||

'tutorials/webservice',

|

||||

'case-studies/multi-cluster',

|

||||

'end-user/traits/ingress',

|

||||

'tutorials/access-application',

|

||||

'end-user/traits/sidecar',

|

||||

],

|

||||

},

|

||||

|

|

@ -38,10 +38,7 @@ module.exports = {

|

|||

type: 'category',

|

||||

label: 'Helm Chart CD',

|

||||

collapsed: true,

|

||||

items: [

|

||||

'tutorials/helm',

|

||||

'tutorials/helm-multi-cluster',

|

||||

],

|

||||

items: ['tutorials/helm', 'tutorials/helm-multi-cluster'],

|

||||

},

|

||||

{

|

||||

'Cloud Resources CD': [

|

||||

|

|

@ -56,10 +53,7 @@ module.exports = {

|

|||

type: 'category',

|

||||

label: 'Kubernetes Manifest CD',

|

||||

collapsed: true,

|

||||

items: [

|

||||

'tutorials/k8s-object',

|

||||

'end-user/components/ref-objects',

|

||||

],

|

||||

items: ['tutorials/k8s-object', 'end-user/components/ref-objects'],

|

||||

},

|

||||

{

|

||||

type: 'category',

|

||||

|

|

@ -75,9 +69,7 @@ module.exports = {

|

|||

type: 'category',

|

||||

label: 'GitOps',

|

||||

collapsed: true,

|

||||

items: [

|

||||

'case-studies/gitops',

|

||||

],

|

||||

items: ['case-studies/gitops'],

|

||||

},

|

||||

{

|

||||

'General CD Features': [

|

||||

|

|

@ -102,14 +94,11 @@ module.exports = {

|

|||

'platform-engineers/advanced-install',

|

||||

'platform-engineers/system-operation/vela-cli-image',

|

||||

'platform-engineers/system-operation/enable-addon-offline',

|

||||

'platform-engineers/system-operation/migration-from-old-version'

|

||||

'platform-engineers/system-operation/migration-from-old-version',

|

||||

],

|

||||

},

|

||||

{

|

||||

'User Management': [

|

||||

'how-to/dashboard/user/user',

|

||||

'tutorials/sso',

|

||||

],

|

||||

'User Management': ['how-to/dashboard/user/user', 'tutorials/sso'],

|

||||

},

|

||||

'how-to/dashboard/user/project',

|

||||

{

|

||||

|

|

@ -151,7 +140,7 @@ module.exports = {

|

|||

'contributor/non-code-contribute',

|

||||

'contributor/code-contribute',

|

||||

{

|

||||

'Conventions': [

|

||||

Conventions: [

|

||||

'contributor/release-process',

|

||||

'contributor/code-conventions',

|

||||

'contributor/principle-of-test',

|

||||

|

|

@ -182,10 +171,10 @@ module.exports = {

|

|||

'Patch and Override': [

|

||||

'platform-engineers/traits/patch-trait',

|

||||

'platform-engineers/cue/patch-strategy',

|

||||

]

|

||||

],

|

||||

},

|

||||

{

|

||||

'Debugging': [

|

||||

Debugging: [

|

||||

'platform-engineers/debug/dry-run',

|

||||

'platform-engineers/debug/debug',

|

||||

],

|

||||

|

|

|

|||

|

|

@ -193,11 +193,11 @@ It requires you to override with a new password for the first login, please make

|

|||

|

||||

Click the application card, then you can view the details of the application.

|

||||

|

||||

|

||||

|

||||

|

||||

## Clean up

|

||||

|

||||

```bash

|

||||

$ vela delete first-vela-app

|

||||

Deleting Application "first-vela-app"

|

||||

app "first-vela-app" deleted from namespace "prod"

|

||||

|

|

|

|||

|

|

@ -0,0 +1,132 @@

|

|||

---

|

||||

title: Access the application

|

||||

---

|

||||

|

||||

After deploying the application, the next step is to publish your service.

There are multiple ways to do this: for testing, you can use Port Forward or NodePort; for production, you can use a LoadBalancer or a cluster gateway. This section focuses on the production environment.

|

||||

|

||||

## Use LoadBalancer by Cloud Provider

|

||||

|

||||

This approach is only suitable for cloud environments, such as Aliyun, AWS, and Azure.

For the webservice component, you only need to set `exposeType` to `LoadBalancer`, which means the component will generate a Kubernetes Service of type LoadBalancer. After deployment, you can get the service endpoints.

For example:

|

||||

|

||||

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: webservice-app
spec:
  components:
    - name: frontend
      type: webservice
      properties:
        image: oamdev/testapp:v1
        cmd: ["node", "server.js"]
        ports:
          - port: 8080
            expose: true
        exposeType: LoadBalancer
```

|

||||

|

||||

Similarly, for other components, you only need to generate a Kubernetes Service of type LoadBalancer.
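
As a rough sketch of the non-webservice case, the fragment below assumes the built-in `k8s-objects` component type is used to ship a plain Kubernetes Service of type LoadBalancer. The application name, component name, Service name, selector label, and port numbers are all hypothetical and need to match your own workload.

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: k8s-objects-app        # hypothetical application name
spec:
  components:
    - name: backend-lb         # hypothetical component name
      type: k8s-objects
      properties:
        objects:
          # A plain Kubernetes Service; the cloud provider allocates the external IP.
          - apiVersion: v1
            kind: Service
            metadata:
              name: backend-lb
            spec:
              type: LoadBalancer
              selector:
                app: backend   # assumed label on the target Pods
              ports:
                - port: 80         # port exposed by the load balancer
                  targetPort: 8080 # assumed container port
```

The Service only receives traffic if its selector matches the labels of Pods created elsewhere in the application.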

|

||||

|

||||

## Use HTTP Domain by Ingress Gateway

|

||||

|

||||

This approach requires an ingress controller to be installed in the cluster.

Users can bind the `gateway` trait to the component to generate the Ingress. For example:

|

||||

|

||||

```yaml
traits:
  - type: gateway
    properties:
      domain: testsvc.example.com
      http:
        "/": 8000
        "/manage": 8090
```

|

||||

|

||||

This exposes the component service at the domain `testsvc.example.com` with two rules: requests whose path begins with `/manage` are routed to port 8090 of the component, and all other requests are routed to port 8000.
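
For context, here is a minimal sketch of how the `gateway` trait might sit inside a complete Application. It reuses the `oamdev/testapp:v1` image from the LoadBalancer example purely as a placeholder; the application name and the assumption that the container listens on ports 8000 and 8090 are illustrative and not taken from the original document.

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: gateway-app            # hypothetical application name
spec:
  components:
    - name: frontend
      type: webservice
      properties:
        image: oamdev/testapp:v1   # placeholder image
        ports:
          # Assumed container ports; they must match the ports used in the trait.
          - port: 8000
          - port: 8090
      traits:
        - type: gateway
          properties:
            domain: testsvc.example.com
            http:
              "/": 8000        # all other paths
              "/manage": 8090  # paths beginning with /manage
```

The trait is attached under the component it exposes, so each component can carry its own domain and path rules.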

|

||||

|

||||

In VelaUX, you can click the `Add Trait` button and select the `gateway` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

## Use Traefik

|

||||

|

||||

This approach requires the traefik addon to be installed first.

|

||||

|

||||

```bash

|

||||

$ vela addon enable traefik

|

||||

```

|

||||

|

||||

For more information about this addon, refer to [Traefik Addon](../reference/addons/traefik).

|

||||

|

||||

This addon provides three traits, including [http-route](../reference/addons/traefik#http-routetrait), [https-route](../reference/addons/traefik#https-routetrait) and [tcp-route](../reference/addons/traefik#tcp-routetrait).

|

||||

|

||||

### HTTP/HTTPS

|

||||

|

||||

For HTTP, you can click the `Add Trait` button and select the `http-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: http-route
    properties:
      domains:
        - testsvc.example.com
      rules:
        - port: 80
      gatewayName: traefik-gateway
      listenerName: web
```

|

||||

|

||||

There must be at least one route rule. The port is the same as the service port, and the route condition supports matching on the Header and the Request Path.

For HTTPS, you must first create the TLS certificate. In VelaUX, open the Integrations page, where there is a `TLS Certificate` type. Click the New button, then provide the certificate's public and private keys, with their content encoded in base64.

|

||||

|

||||

|

||||

|

||||

This generates the secret and distributes it to the managed clusters along with the application.

Then open the application configuration page, click the `Add Trait` button, and select the `https-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: https-route
    properties:
      domains:
        - testsvc.example.com
      TLSPort: 8443
      secrets:
        - name: kubevela
      rules:
        - port: 80
```

|

||||

|

||||

The secret name is the same as the name of the certificate configuration.
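
To illustrate what the `secrets` entry refers to, the sketch below shows the standard Kubernetes TLS Secret shape. It is an assumption that the secret generated from the certificate configuration follows this `kubernetes.io/tls` layout; the angle-bracket values are placeholders for your own base64-encoded content.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: kubevela               # matches the certificate configuration name used in the trait
type: kubernetes.io/tls        # assumed type; the standard Kubernetes TLS Secret
data:
  tls.crt: <BASE64_ENCODED_CERTIFICATE>   # placeholder
  tls.key: <BASE64_ENCODED_PRIVATE_KEY>   # placeholder
```

If the route does not serve the expected certificate, checking that a secret with this name exists in the target cluster and namespace is a reasonable first step.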

|

||||

|

||||

### TCP

|

||||

|

||||

This approach is suitable for services that use a stream protocol, and it reuses the same public IP address.

You can click the `Add Trait` button and select the `tcp-route` trait type. Refer to the following configuration:

|

||||

|

||||

|

||||

|

||||

```yaml
traits:
  - type: tcp-route
    properties:
      rules:
        - gatewayPort: 16379
          port: 6379
          serviceName: redis-master
```

|

||||

|

|

@ -58,7 +58,7 @@

|

|||

},

|

||||

{

|

||||

"type": "doc",

|

||||

"id": "version-v1.4/end-user/traits/ingress"

|

||||

"id": "version-v1.4/tutorials/access-application"

|

||||

},

|

||||

{

|

||||

"type": "doc",

|

||||

|

|

|

|||
