mirror of https://github.com/loggie-io/loggie.git

Compare commits

113 Commits

| Author | SHA1 | Date |
|---|---|---|
| | ec6c44733d | |
| | 31bec67423 | |
| | d9c57b9202 | |
| | 04da98fff9 | |
| | f27f8d336a | |
| | b6f758bafc | |
| | fef96ad0d2 | |
| | e21f3d0df1 | |
| | b584302836 | |
| | ba7e228e20 | |
| | 7d03ad88dd | |
| | 76fe3f4801 | |
| | 342e58a413 | |
| | 8e89d72644 | |
| | ae4a7250f9 | |
| | fa19c9ef53 | |
| | c7cdbac92a | |
| | 95a00ef1dd | |
| | 2f3a2104d1 | |
| | 083d9601a6 | |
| | 71e3a7ea7f | |
| | d933083227 | |
| | 350b21ec57 | |
| | d024a5052a | |
| | 9d7b9a54b5 | |
| | 2520f55bfd | |
| | 6d18d0f847 | |
| | 6a5b1e5ce6 | |
| | c452784e11 | |
| | 96516da425 | |
| | 1a7b7dc24c | |
| | a577e5944b | |
| | 71ce680008 | |
| | 9fcbacb47c | |
| | a18cfbc306 | |
| | 56aa85cdc8 | |
| | fd73e56df2 | |
| | aa68ae5ef9 | |
| | 8f8c9756e9 | |
| | 641f9a1002 | |
| | b441fef23f | |
| | ad1722b704 | |
| | f83e4a46c8 | |
| | d7dc45a124 | |
| | aa321c79d0 | |
| | 09ed74eea9 | |
| | 5cf14ad81c | |
| | 51151c567c | |
| | c61efab59d | |
| | 744889f0af | |
| | 254c32df33 | |
| | d24a44d36e | |
| | c10e519cd5 | |
| | 0fc45e513d | |
| | 2988233d90 | |
| | 8f07f0fba1 | |
| | 5521209f35 | |
| | 0bdef8263d | |
| | 3fbbaeced7 | |
| | 3cdbc1295e | |
| | f3610dd97c | |
| | c013a2db08 | |
| | c2be4c2a42 | |
| | 3d96c1e3a1 | |
| | 78ab6ef12f | |
| | 3760c38f83 | |
| | 5cfbc97a4a | |
| | 37f22655e2 | |
| | 389c28a780 | |
| | 4356df9dc6 | |
| | b54cc313b7 | |
| | c2f69ca735 | |
| | b8420c43a7 | |
| | 749ae0dfc0 | |
| | 41046da74b | |
| | 771da3f696 | |
| | 497f1a4625 | |
| | e22c55b503 | |
| | 2588a0e515 | |
| | e311f4859d | |
| | 1ba463a206 | |
| | b099468646 | |
| | bb1428c2ff | |
| | 0f03167202 | |
| | a0877f44e3 | |
| | 1522f8c3b8 | |
| | cb765921b3 | |
| | 86ca94f8a1 | |
| | b405987648 | |
| | f146b8fb95 | |
| | 65020bb373 | |
| | 66280111ce | |
| | 09bb2070ad | |
| | e729b9f8d8 | |
| | 42665897b2 | |
| | eb1e54a79d | |
| | 1a321f3abf | |
| | dbcd30d864 | |
| | 726f6dcf6a | |
| | b2d8667587 | |
| | ac994a3dc4 | |
| | 9ec308a2f8 | |
| | 1a7cdc72f9 | |
| | 97468872a9 | |
| | 54eb253074 | |
| | 9726a97560 | |
| | cfc5e945ca | |
| | 6fbcd54949 | |
| | d1740a6f99 | |
| | 015f84474f | |
| | b5d6634560 | |
| | fb5cf7668d | |
| | 9af388e138 | |

|

|

@@ -5,6 +5,7 @@ on:

|

|||

branches:

|

||||

- main

|

||||

- release-*

|

||||

- test-*

|

||||

tags:

|

||||

- v*

|

||||

|

||||

|

|

|

|||

|

|

@@ -14,7 +14,7 @@ RUN if [ "$TARGETARCH" = "arm64" ]; then apt-get update && apt-get install -y gc

|

|||

&& GOOS=$TARGETOS GOARCH=$TARGETARCH CC=$CC CC_FOR_TARGET=$CC_FOR_TARGET make build

|

||||

|

||||

# Run

|

||||

FROM --platform=$BUILDPLATFORM debian:buster-slim

|

||||

FROM debian:buster-slim

|

||||

WORKDIR /

|

||||

COPY --from=builder /loggie .

|

||||

|

||||

|

|

|

|||

|

|

@@ -0,0 +1,18 @@

|

|||

# Build the binary

|

||||

FROM --platform=$BUILDPLATFORM golang:1.18 as builder

|

||||

|

||||

ARG TARGETARCH

|

||||

ARG TARGETOS

|

||||

|

||||

# Copy in the go src

|

||||

WORKDIR /

|

||||

COPY . .

|

||||

# Build

|

||||

RUN make build-in-badger

|

||||

|

||||

# Run

|

||||

FROM debian:buster-slim

|

||||

WORKDIR /

|

||||

COPY --from=builder /loggie .

|

||||

|

||||

ENTRYPOINT ["/loggie"]

|

||||

21

Makefile


|

|

@@ -82,8 +82,13 @@ benchmark: ## Run benchmark

|

|||

|

||||

##@ Build

|

||||

|

||||

build: ## go build

|

||||

CGO_ENABLED=1 GOOS=${GOOS} GOARCH=${GOARCH} go build -mod=vendor -a ${extra_flags} -o loggie cmd/loggie/main.go

|

||||

build: ## go build, EXT_BUILD_TAGS=include_core would only build core package

|

||||

CGO_ENABLED=1 GOOS=${GOOS} GOARCH=${GOARCH} go build -tags ${EXT_BUILD_TAGS} -mod=vendor -a ${extra_flags} -o loggie cmd/loggie/main.go

|

||||

|

||||

##@ Build(without sqlite)

|

||||

|

||||

build-in-badger: ## go build without sqlite, EXT_BUILD_TAGS=include_core would only build core package

|

||||

GOOS=${GOOS} GOARCH=${GOARCH} go build -tags driver_badger,${EXT_BUILD_TAGS} -mod=vendor -a -ldflags '-X github.com/loggie-io/loggie/pkg/core/global._VERSION_=${TAG} -X github.com/loggie-io/loggie/pkg/util/persistence._DRIVER_=badger -s -w' -o loggie cmd/loggie/main.go
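The `build-in-badger` recipe above passes `-ldflags '-X importpath.name=value'` to set package-level string variables at link time. A minimal sketch of that pattern follows, assuming hypothetical variables `version` and `driver` in package `main`; these are illustrative stand-ins, not Loggie's actual `global._VERSION_` or `persistence._DRIVER_` declarations.

```go
package main

import "fmt"

// Hypothetical package-level variables. The linker's -X flag can only
// override string variables, so both are declared as strings with
// fallback defaults for plain `go build` runs.
var (
	version = "dev"
	driver  = "sqlite"
)

// describe reports whichever values were compiled or linked in.
func describe() string {
	return fmt.Sprintf("version=%s driver=%s", version, driver)
}

func main() {
	fmt.Println(describe())
}
```

Built with something like `go build -ldflags '-X main.version=${TAG} -X main.driver=badger' .`, the binary would report the injected values instead of the defaults.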

|

||||

|

||||

##@ Images

|

||||

|

||||

|

|

@@ -93,6 +98,16 @@ docker-build: ## Docker build -t ${REPO}:${TAG}, try: make docker-build REPO=<Yo

|

|||

docker-push: ## Docker push ${REPO}:${TAG}

|

||||

docker push ${REPO}:${TAG}

|

||||

|

||||

docker-multi-arch: ## Docker buildx, try: make docker-build REPO=<YourRepoHost>, ${TAG} generated by git

|

||||

docker-multi-arch: ## Docker buildx, try: make docker-multi-arch REPO=<YourRepoHost>, ${TAG} generated by git

|

||||

docker buildx build --platform linux/amd64,linux/arm64 -t ${REPO}:${TAG} . --push

|

||||

|

||||

LOG_DIR ?= /tmp/log ## log directory

|

||||

LOG_MAXSIZE ?= 10 ## max size in MB of the logfile before it's rolled

|

||||

LOG_QPS ?= 0 ## qps of line generate

|

||||

LOG_TOTAL ?= 5 ## total line count

|

||||

LOG_LINE_BYTES ?= 1024 ## bytes per line

|

||||

LOG_MAX_BACKUPS ?= 5 ## max number of rolled files to keep

|

||||

genfiles: ## generate log files, try: make genfiles LOG_TOTAL=30000

|

||||

go run cmd/loggie/main.go genfiles -totalCount=${LOG_TOTAL} -lineBytes=${LOG_LINE_BYTES} -qps=${LOG_QPS} \

|

||||

-log.maxBackups=${LOG_MAX_BACKUPS} -log.maxSize=${LOG_MAXSIZE} -log.directory=${LOG_DIR} -log.noColor=true \

|

||||

-log.enableStdout=false -log.enableFile=true -log.timeFormat="2006-01-02 15:04:05.000"

|

||||

172

README.md


|

|

@@ -1,5 +1,5 @@

|

|||

|

||||

<img src="https://github.com/loggie-io/loggie/blob/main/logo/loggie.svg" width="250">

|

||||

<img src="https://github.com/loggie-io/loggie/blob/main/logo/loggie-draw.png" width="250">

|

||||

|

||||

[](https://loggie-io.github.io/docs/)

|

||||

[](https://bestpractices.coreinfrastructure.org/projects/569)

|

||||

|

|

@@ -9,14 +9,169 @@

|

|||

|

||||

Loggie is a lightweight, high-performance, cloud-native agent and aggregator based on Golang.

|

||||

|

||||

- Supports multiple pipeline and pluggable components, including data transfer, filtering, parsing, and alarm functions.

|

||||

- Supports multiple pipeline and pluggable components, including data transfer, filtering, parsing, and alerting.

|

||||

- Uses native Kubernetes CRD for operation and management.

|

||||

- Offers a range of observability, reliability, and automation features suitable for production environments.

|

||||

|

||||

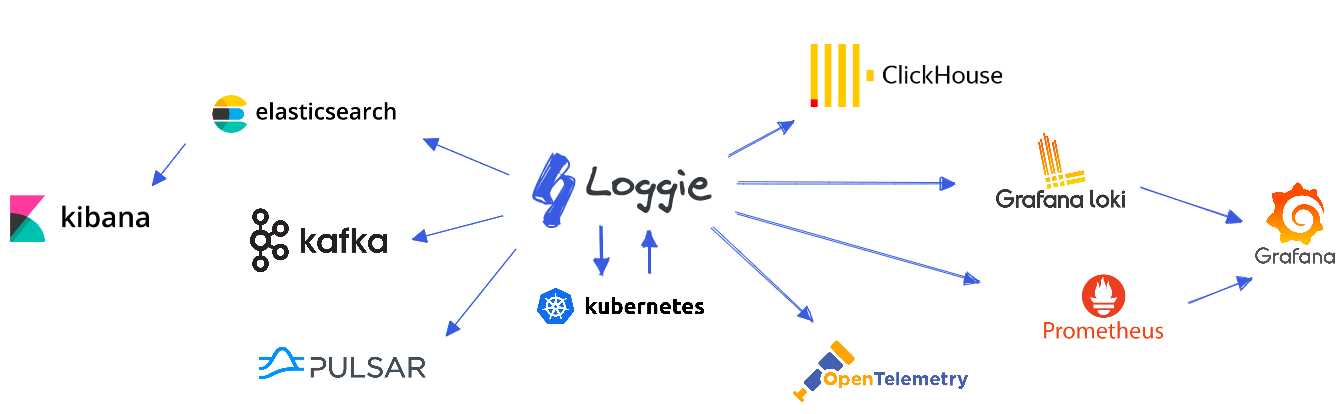

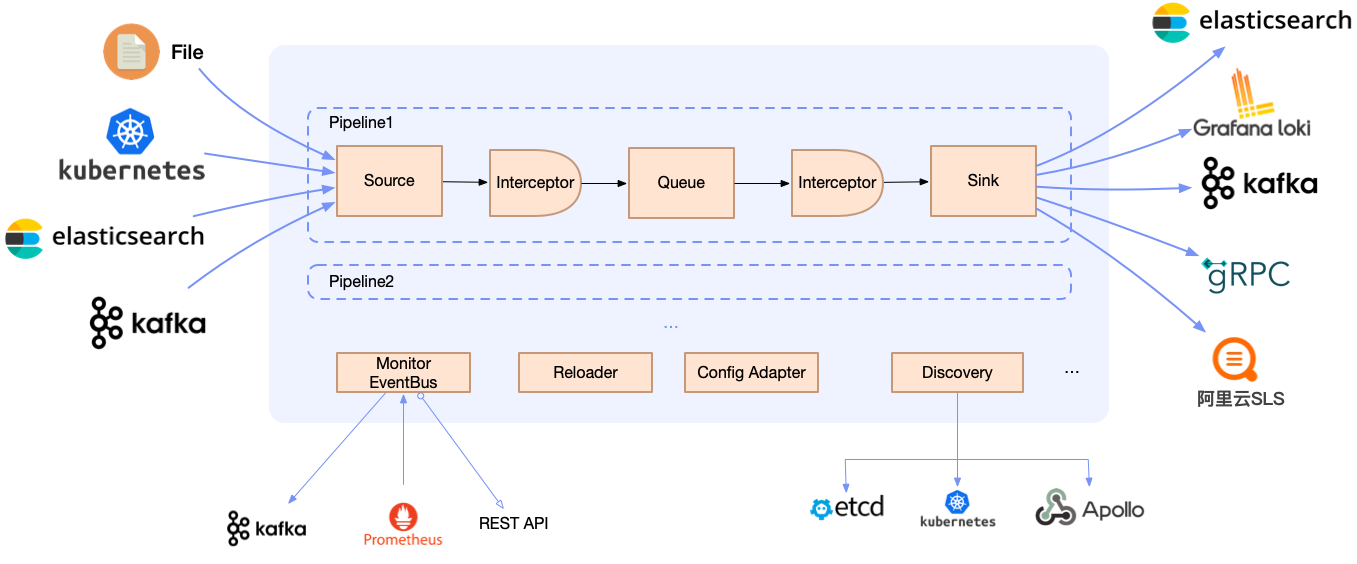

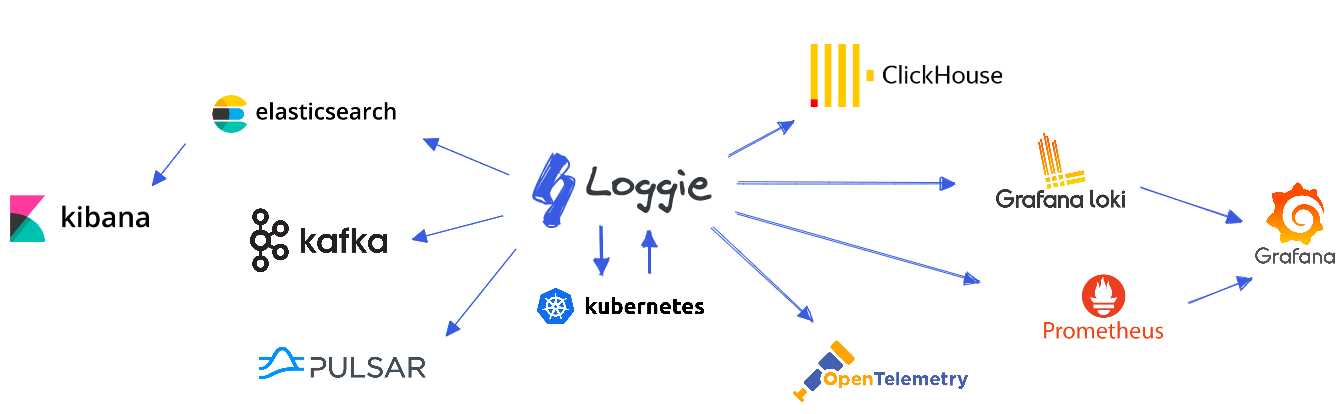

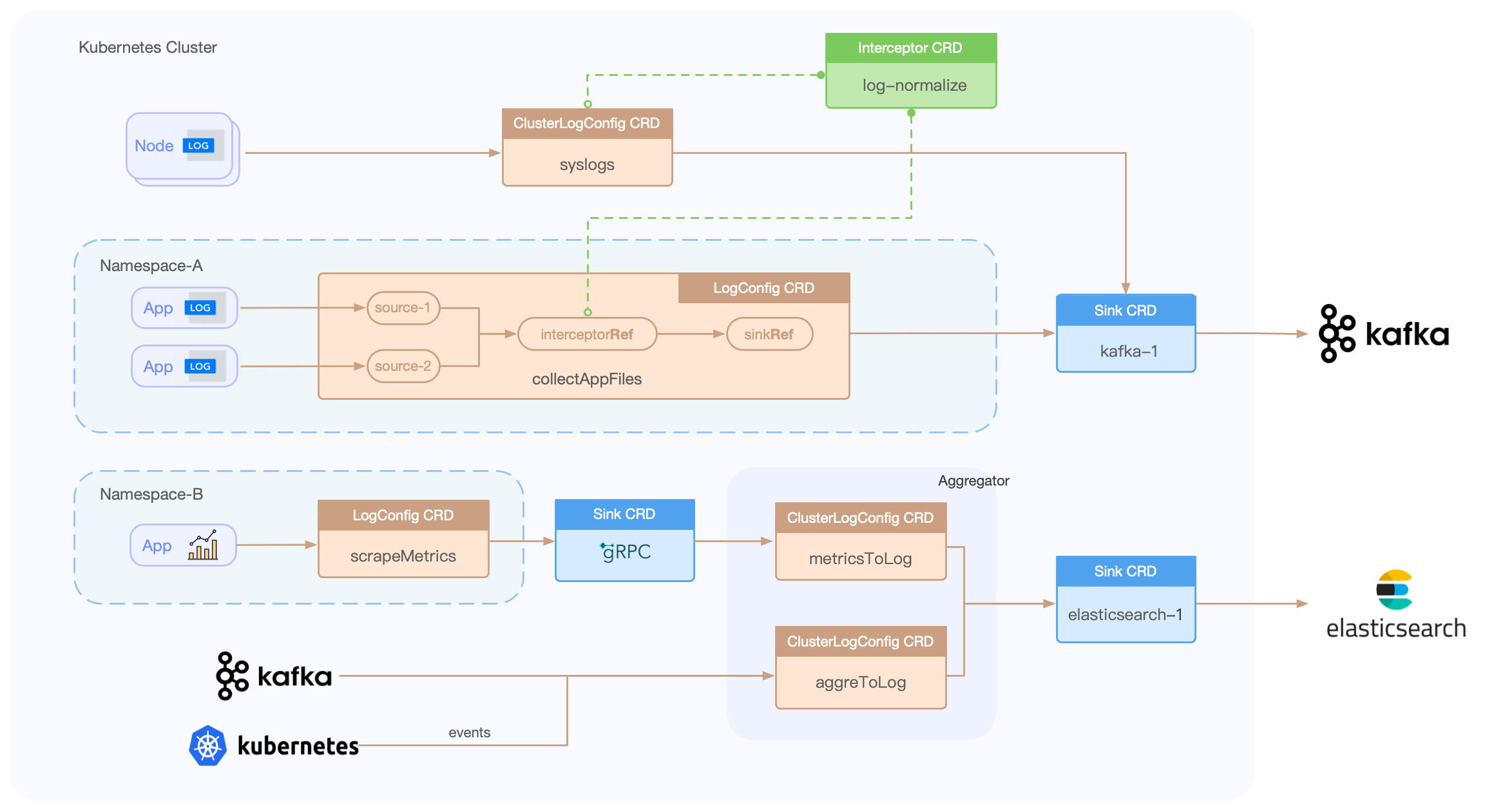

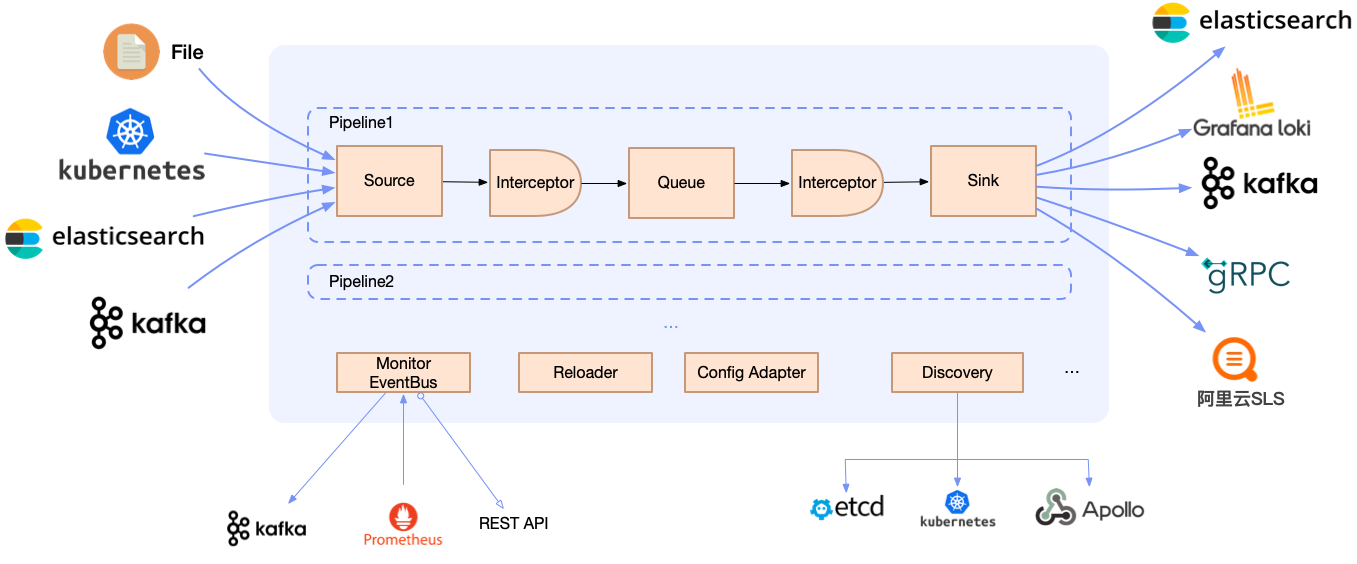

## Architecture

|

||||

Based on Loggie, we can build a cloud-native scalable log data platform.

|

||||

|

||||

|

||||

|

||||

## Features

|

||||

|

||||

### Next-generation cloud-native log collection and transmission

|

||||

|

||||

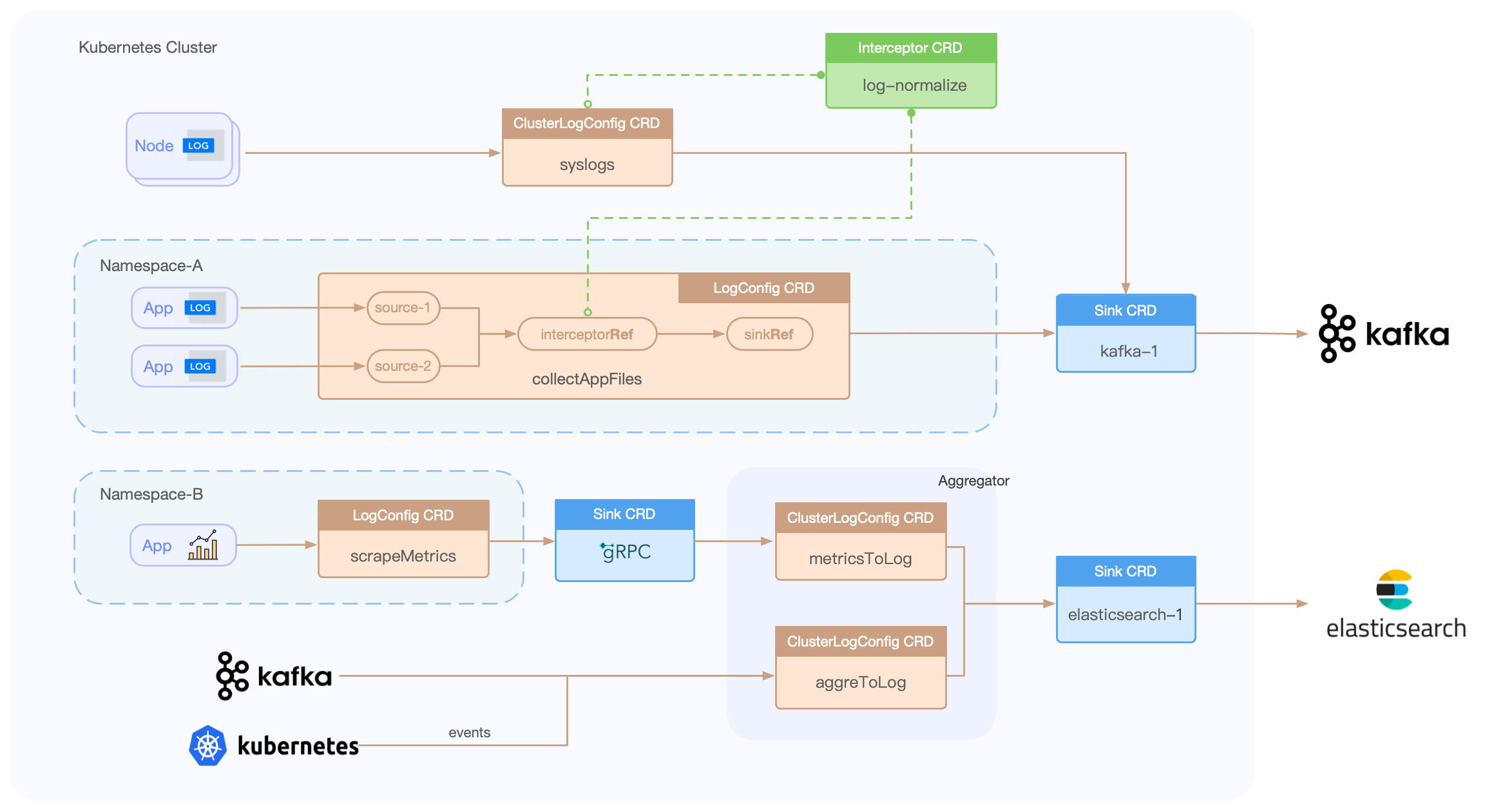

#### Building pipelines based on CRD

|

||||

|

||||

Loggie includes the LogConfig, ClusterLogConfig, Interceptor, and Sink CRDs, so a pipeline for collecting, transferring, processing, and sending data can be built by creating a few simple YAML files.

For example:

```yaml
apiVersion: loggie.io/v1beta1
kind: LogConfig
metadata:
  name: tomcat
  namespace: default
spec:
  selector:
    type: pod
    labelSelector:
      app: tomcat
  pipeline:
    sources: |
      - type: file
        name: common
        paths:
          - stdout
          - /usr/local/tomcat/logs/*.log
    sinkRef: default
    interceptorRef: default
```

|

||||

|

||||

|

||||

|

||||

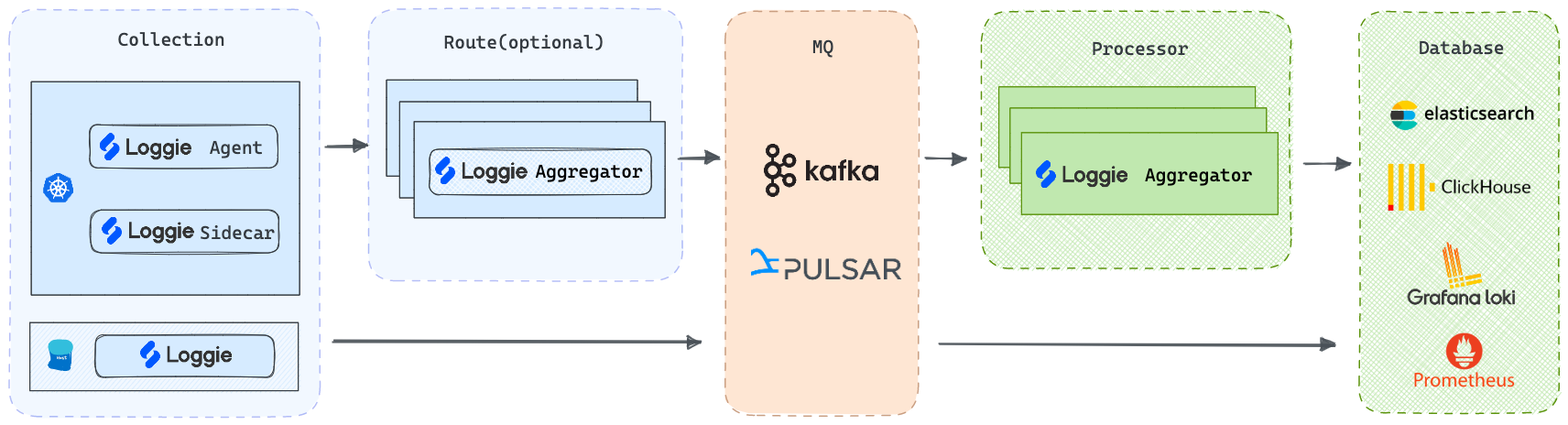

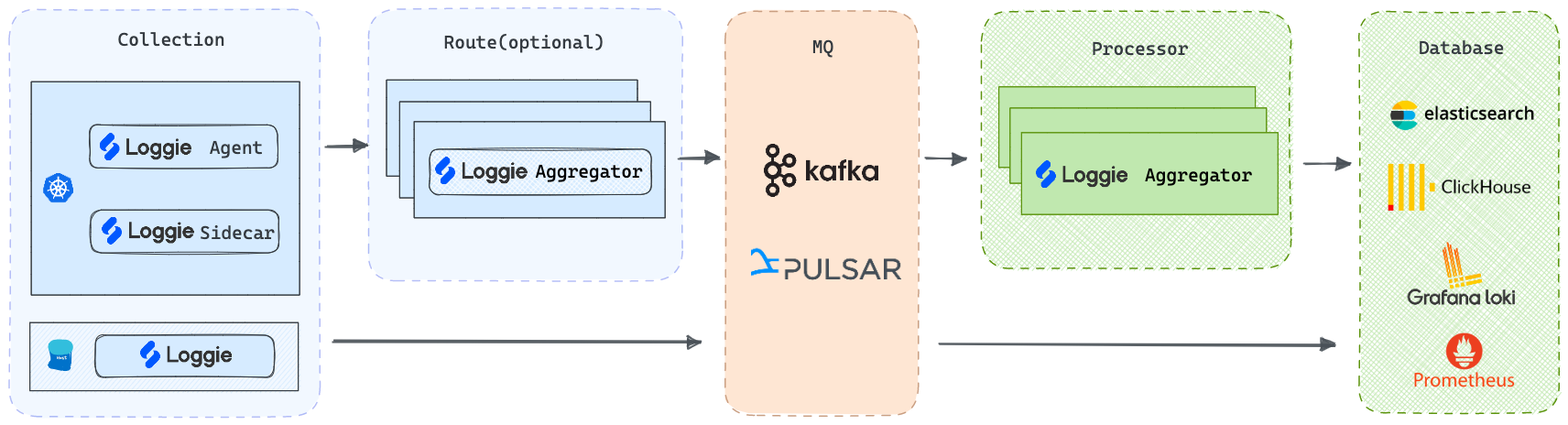

#### Multiple architectures

|

||||

|

||||

- **Agent**: Deployed as a DaemonSet, Loggie can collect log files without requiring application containers to mount volumes.

- **Sidecar**: Supports non-intrusive automatic injection of Loggie sidecars, so they do not have to be added by hand to Deployment/StatefulSet templates.

- **Aggregator**: Can be deployed on its own as an intermediate tier that receives and aggregates data sent by Loggie Agents, or that consumes and processes other data sources directly.

Whichever deployment architecture is used, Loggie keeps a simple and intuitive internal design.

|

||||

|

||||

|

||||

|

||||

### High Performance

|

||||

|

||||

#### Benchmark

|

||||

|

||||

Configure Filebeat and Loggie to collect logs and send them to a Kafka topic, without client compression, with the topic configured with 3 partitions.

With the Agent given sufficient resources, vary the number of files collected and the concurrency of the sending client (Filebeat `worker` and Loggie `parallelism`), and observe the CPU, memory, and pod network transmission rate of each.

| Agent | File Size | File Count | Sink Concurrency | CPU | MEM (rss) | Transmission Rate |
|----------|-----------|------------|------------------|----------|-----------|-------------------|
| Filebeat | 3.2G | 1 | 3 | 7.5~8.5c | 63.8MiB | 75.9MiB/s |
| Filebeat | 3.2G | 1 | 8 | 10c | 65MiB | 70MiB/s |
| Filebeat | 3.2G | 10 | 8 | 11c | 65MiB | 80MiB/s |
| | | | | | | |
| Loggie | 3.2G | 1 | 3 | 2.1c | 60MiB | 120MiB/s |
| Loggie | 3.2G | 1 | 8 | 2.4c | 68.7MiB | 120MiB/s |
| Loggie | 3.2G | 10 | 8 | 3.5c | 70MiB | 210MiB/s |

|

||||

|

||||

#### Adaptive Sink Concurrency

|

||||

|

||||

With sink concurrency configuration enabled, Loggie can:

- Automatically adjust the parallelism of downstream sending based on how the downstream actually responds, making full use of the downstream server's capacity without degrading its performance.

- Adjust the downstream sending speed when upstream data collection is blocked, to relieve the upstream blocking.

|

||||

|

||||

### Lightweight Streaming Data Analysis and Monitoring

|

||||

|

||||

Logs are a universal kind of data, independent of platform or system. Making better use of this data is the core capability that Loggie focuses on and continues to develop.

|

||||

|

||||

|

||||

|

||||

#### Real-time parsing and transformation

|

||||

|

||||

By configuring a transformer interceptor with function-style actions, Loggie can provide:

- Parsing of various data formats (json, grok, regex, split, etc.)

- Conversion of various fields (add, copy, move, set, del, fmt, etc.)

- Conditional judgment and processing logic (if, else, return, dropEvent, ignoreError, etc.)

For example:

```yaml
interceptors:
  - type: transformer
    actions:
      - action: regex(body)
        pattern: (?<ip>\S+) (?<id>\S+) (?<u>\S+) (?<time>\[.*?\]) (?<url>\".*?\") (?<status>\S+) (?<size>\S+)
      - if: equal(status, 404)
        then:
          - action: add(topic, not_found)
          - action: return()
      - if: equal(status, 500)
        then:
          - action: dropEvent()
```
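To make the semantics of the transformer example concrete, the sketch below performs the same three steps in plain Go: extract named fields with a regular expression, add a `topic` field on status 404, and drop the event on status 500. The `transform` function is hypothetical and only mirrors the configuration above; it is not how Loggie evaluates actions. The pattern uses the `(?P<name>...)` spelling for named groups, which Go's `regexp` package has always accepted.

```go
package main

import (
	"fmt"
	"regexp"
)

// accessLog mirrors the pattern in the YAML example, written with
// (?P<name>...) named groups.
var accessLog = regexp.MustCompile(
	`(?P<ip>\S+) (?P<id>\S+) (?P<u>\S+) (?P<time>\[.*?\]) (?P<url>".*?") (?P<status>\S+) (?P<size>\S+)`)

// transform returns the extracted fields and whether the event is kept.
// Hypothetical helper for illustration.
func transform(body string) (map[string]string, bool) {
	m := accessLog.FindStringSubmatch(body)
	if m == nil {
		return nil, true // no match: this sketch passes the event through
	}
	fields := make(map[string]string)
	for i, name := range accessLog.SubexpNames() {
		if name != "" {
			fields[name] = m[i]
		}
	}
	switch fields["status"] {
	case "404":
		fields["topic"] = "not_found" // add(topic, not_found), then return()
	case "500":
		return nil, false // dropEvent()
	}
	return fields, true
}

func main() {
	fields, keep := transform(`10.0.0.1 - bob [01/Jan/2023:00:00:00 +0000] "GET /x HTTP/1.1" 404 12`)
	fmt.Println(keep, fields["topic"]) // true not_found
}
```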

|

||||

|

||||

#### Detection, recognition, and alerting

|

||||

|

||||

Helps you quickly detect potential problems and anomalies in the data and issue timely alerts. Supports custom webhooks for connecting to various alert channels.

|

||||

|

||||

Supports matching methods such as:

|

||||

|

||||

- No data: no log data generated within the configured time period.

|

||||

- Fuzzy matching

|

||||

- Regular expression matching

|

||||

- Conditional judgment

|

||||

- Field comparison: equal/less/greater...

|

||||

|

||||

#### Log data aggregation and monitoring

|

||||

|

||||

Metrics do not have to come only from Prometheus exporters; log data itself can also be a source of metrics. For example, from a gateway's access logs you can count the 5xx or 4xx status codes within a given time interval, aggregate the QPS of a particular interface, or compute the total volume of body data.

For example:

```yaml
- type: aggregator
  interval: 1m
  select:
    # operator:COUNT/COUNT-DISTINCT/SUM/AVG/MAX/MIN
    - {key: amount, operator: SUM, as: amount_total}
    - {key: quantity, operator: SUM, as: qty_total}
  groupBy: ["city"]
  calculate:
    - {expression: " ${amount_total} / ${qty_total} ", as: avg_amount}
```
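The aggregator configuration above reads as: group events by `city`, sum `amount` and `quantity` per group, then derive `avg_amount` as their ratio. A minimal sketch of that computation for one interval's worth of events follows; the `event` and `totals` types and the sample data are hypothetical, and the code is not Loggie's aggregator.

```go
package main

import "fmt"

// event is a hypothetical parsed log record.
type event struct {
	city             string
	amount, quantity float64
}

// totals holds the two SUM results for one group.
type totals struct{ amountTotal, qtyTotal float64 }

// aggregate sums amount and quantity per city (the select + groupBy steps).
func aggregate(events []event) map[string]totals {
	out := make(map[string]totals)
	for _, e := range events {
		t := out[e.city]
		t.amountTotal += e.amount
		t.qtyTotal += e.quantity
		out[e.city] = t
	}
	return out
}

// avgAmount mirrors the calculate step: amount_total / qty_total.
func avgAmount(t totals) float64 {
	if t.qtyTotal == 0 {
		return 0 // avoid dividing by zero for empty groups
	}
	return t.amountTotal / t.qtyTotal
}

func main() {
	byCity := aggregate([]event{
		{"Hangzhou", 30, 2},
		{"Hangzhou", 10, 2},
		{"Beijing", 9, 3},
	})
	fmt.Println(avgAmount(byCity["Hangzhou"]), avgAmount(byCity["Beijing"])) // 10 3
}
```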

|

||||

|

||||

### Observability and fast troubleshooting

|

||||

|

||||

- Loggie provides configurable and rich metrics, and dashboards that can be imported into Grafana with one click.

|

||||

|

||||

<img src="https://loggie-io.github.io/docs/user-guide/monitor/img/grafana-agent-1.png" width="1000"/>

|

||||

|

||||

- Quickly troubleshoot Loggie itself, and any problems in data transmission, with the Loggie terminal.

|

||||

|

||||

<img src="https://loggie-io.github.io/docs/user-guide/troubleshot/img/loggie-dashboard.png" width="1000"/>

|

||||

|

||||

## FAQs

|

||||

|

||||

### Loggie vs Filebeat/Fluentd/Logstash/Flume

|

||||

|

||||

| | Loggie | Filebeat | Fluentd | Logstash | Flume |
|---|---|---|---|---|---|
| Language | Golang | Golang | Ruby | JRuby | Java |
| Multiple Pipelines | ✓ | single queue | single queue | ✓ | ✓ |
| Multiple output | ✓ | one output | copy | ✓ | ✓ |
| Aggregator | ✓ | ✓ | ✓ | ✓ | ✓ |
| Log Alarm | ✓ | | | | |
| Kubernetes container log collection | supports container stdout and log files inside containers | stdout | stdout | | |
| Configuration delivery | through CRD | manual | manual | manual | manual |
| Monitoring | supports Prometheus metrics, and can also be configured to write metrics to separate log files, send metrics, etc. | | prometheus metrics | need exporter | need exporter |
| Resource Usage | low | low | average | high | high |

|

||||

|

||||

|

||||

## [Documentation](https://loggie-io.github.io/docs-en/)

|

||||

|

||||

|

|

@@ -36,10 +191,13 @@ Loggie is a lightweight, high-performance, cloud-native agent and aggregator bas

|

|||

- [Args](https://loggie-io.github.io/docs-en/reference/global/args/)

|

||||

- [System](https://loggie-io.github.io/docs-en/reference/global/monitor/)

|

||||

- Pipelines

|

||||

- source: [file](https://loggie-io.github.io/docs-en/reference/pipelines/source/file/), [kafka](https://loggie-io.github.io/docs-en/reference/pipelines/source/kafka/), [kubeEvent](https://loggie-io.github.io/docs-en/reference/pipelines/source/kube-event/), [grpc](https://loggie-io.github.io/docs-en/reference/pipelines/source/grpc/)..

|

||||

- sink: [elasticsearch](https://loggie-io.github.io/docs-en/reference/pipelines/sink/elasticsearch/), [kafka](https://loggie-io.github.io/docs-en/reference/pipelines/sink/kafka/), [grpc](https://loggie-io.github.io/docs-en/reference/pipelines/sink/grpc/), [dev](https://loggie-io.github.io/docs-en/reference/pipelines/sink/dev/)..

|

||||

- interceptor: [transformer](https://loggie-io.github.io/docs-en/reference/pipelines/interceptor/transformer/), [limit](https://loggie-io.github.io/docs-en/reference/pipelines/interceptor/limit/), [logAlert](https://loggie-io.github.io/docs-en/reference/pipelines/interceptor/logalert/), [maxbytes](https://loggie-io.github.io/docs-en/reference/pipelines/interceptor/maxbytes/)..

|

||||

- CRD ([logConfig](https://loggie-io.github.io/docs-en/reference/discovery/kubernetes/logconfig/), [sink](https://loggie-io.github.io/docs-en/reference/discovery/kubernetes/sink/), [interceptor](https://loggie-io.github.io/docs-en/reference/discovery/kubernetes/interceptors/))

|

||||

- Source: [file](https://loggie-io.github.io/docs-en/reference/pipelines/source/file/), [kafka](https://loggie-io.github.io/docs-en/reference/pipelines/source/kafka/), [kubeEvent](https://loggie-io.github.io/docs-en/reference/pipelines/source/kubeEvent/), [grpc](https://loggie-io.github.io/docs-en/reference/pipelines/source/grpc/), [elasticsearch](https://loggie-io.github.io/docs-en/reference/pipelines/source/elasticsearch/), [prometheusExporter](https://loggie-io.github.io/docs-en/reference/pipelines/source/prometheus-exporter/)..

|

||||

- Sink: [elasticsearch](https://loggie-io.github.io/docs-en/reference/pipelines/sink/elasticsearch/), [kafka](https://loggie-io.github.io/docs-en/reference/pipelines/sink/kafka/), [grpc](https://loggie-io.github.io/docs-en/reference/pipelines/sink/grpc/), [loki](https://loggie-io.github.io/docs-en/reference/pipelines/sink/loki/), [zinc](https://loggie-io.github.io/docs-en/reference/pipelines/sink/zinc/), [alertWebhook](https://loggie-io.github.io/docs-en/reference/pipelines/sink/webhook/), [dev](https://loggie-io.github.io/docs-en/reference/pipelines/sink/dev/)..

|

||||

- Interceptor: [transformer](https://loggie-io.github.io/docs-en/reference/pipelines/interceptor/transformer/), [schema](https://loggie-io.github.io/docs-en/reference/pipelines/interceptor/schema/), [limit](https://loggie-io.github.io/docs-en/reference/pipelines/interceptor/limit/), [logAlert](https://loggie-io.github.io/docs-en/reference/pipelines/interceptor/logalert/), [maxbytes](https://loggie-io.github.io/docs-en/reference/pipelines/interceptor/maxbytes/)..

|

||||

- CRD: [LogConfig](https://loggie-io.github.io/docs-en/reference/discovery/kubernetes/logconfig/), [ClusterLogConfig](https://loggie-io.github.io/docs-en/reference/discovery/kubernetes/clusterlogconfig/), [Sink](https://loggie-io.github.io/docs-en/reference/discovery/kubernetes/sink/), [Interceptor](https://loggie-io.github.io/docs-en/reference/discovery/kubernetes/interceptors/)

|

||||

|

||||

## RoadMap

|

||||

[RoadMap 2023](https://loggie-io.github.io/docs-en/getting-started/roadmap/roadmap-2023/)

|

||||

|

||||

## License

|

||||

|

||||

|

|

|

|||

189

README_cn.md


|

|

@@ -1,5 +1,5 @@

|

|||

|

||||

<img src="https://github.com/loggie-io/loggie/blob/main/logo/loggie.svg" width="250">

|

||||

<img src="https://github.com/loggie-io/loggie/blob/main/logo/loggie-draw.png" width="250">

|

||||

|

||||

[](https://loggie-io.github.io/docs/)

|

||||

[](https://bestpractices.coreinfrastructure.org/projects/569)

|

||||

|

|

@@ -11,34 +11,197 @@ Loggie is a lightweight, high-performance, cloud-native log collection Agent based on Golang

- **Cloud-native log handling**: fast and convenient collection of container logs, with dynamic configuration delivered through native Kubernetes CRDs

- **Production-grade features**: built on long-term, large-scale operations experience, providing all-round observability, fast troubleshooting, anomaly alerting, and automated operations

## Architecture

Based on Loggie, we can build a cloud-native, scalable, end-to-end log data platform.

## Features

### Next-generation cloud-native log collection and transmission

#### Quick configuration and use based on CRD

Loggie includes the LogConfig/ClusterLogConfig/Interceptor/Sink CRDs; by simply creating a few YAML files, you can set up pipelines for data collection, transmission, processing, and sending.

Example:

```yaml
apiVersion: loggie.io/v1beta1
kind: LogConfig
metadata:
  name: tomcat
  namespace: default
spec:
  selector:
    type: pod
    labelSelector:
      app: tomcat
  pipeline:
    sources: |
      - type: file
        name: common
        paths:
          - stdout
          - /usr/local/tomcat/logs/*.log
    sinkRef: default
    interceptorRef: default
```

|

||||

|

||||

|

||||

|

||||

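#### Multiple deployment architectures

- **Agent**: Deployed as a DaemonSet; collects log files without requiring application containers to mount volumes

- **Sidecar**: Supports non-intrusive automatic injection of the Loggie sidecar, with no need to add it manually to Deployment/StatefulSet templates

- **Aggregator**: Can be deployed independently as a Deployment in an intermediate-tier role, receiving and aggregating data sent by Loggie Agents, or used on its own to consume and process various data sources

Whichever deployment architecture is used, Loggie keeps a simple and intuitive internal design.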

|

||||

|

||||

|

||||

|

||||

### Lightweight and high performance

#### Benchmark comparison

Configure Filebeat and Loggie to collect logs and send them to a Kafka topic, without client compression, with the Kafka topic configured with 3 partitions.

With the Agent given sufficient resources, vary the number of files collected and the sending client's concurrency (Filebeat worker and Loggie parallelism), and observe the CPU, memory, and pod network send rate of each.

| Agent | File size | Log file count | Sink concurrency | CPU | MEM (rss) | Network send rate |
|----------|------|-------|-------|----------|-----------|-----------|
| Filebeat | 3.2G | 1 | 3 | 7.5~8.5c | 63.8MiB | 75.9MiB/s |
| Filebeat | 3.2G | 1 | 8 | 10c | 65MiB | 70MiB/s |
| Filebeat | 3.2G | 10 | 8 | 11c | 65MiB | 80MiB/s |
| | | | | | | |
| Loggie | 3.2G | 1 | 3 | 2.1c | 60MiB | 120MiB/s |
| Loggie | 3.2G | 1 | 8 | 2.4c | 68.7MiB | 120MiB/s |
| Loggie | 3.2G | 10 | 8 | 3.5c | 70MiB | 210MiB/s |

|

||||

|

||||

|

||||

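#### Adaptive sink concurrency

With sink concurrency configuration enabled, Loggie can:

- Automatically adjust the parallelism of downstream sending according to how the downstream actually responds, making the most of the downstream server's capacity without degrading its performance.

- Adjust the downstream sending speed appropriately when upstream data collection is blocked, relieving the upstream blocking.

### Lightweight streaming data analysis and monitoring

Logs are a general-purpose kind of data, independent of platform and system. Making better use of this data is the core capability that Loggie focuses on and is primarily developing.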

|

||||

|

||||

|

||||

|

||||

#### Real-time parsing and transformation

Just configure a transformer interceptor with function-style actions to get:

- Parsing of various data formats (json, grok, regex, split...)

- Conversion of various fields (add, copy, move, set, del, fmt...)

- Conditional judgment and processing logic (if, else, return, dropEvent, ignoreError...)

This can be used to:

- Extract the log level from logs and drop DEBUG logs

- Detect and process the JSON entries when logs mix JSON and plain-text formats

- Add different topic fields according to the status code in access logs

Example:

```yaml
interceptors:
  - type: transformer
    actions:
      - action: regex(body)
        pattern: (?<ip>\S+) (?<id>\S+) (?<u>\S+) (?<time>\[.*?\]) (?<url>\".*?\") (?<status>\S+) (?<size>\S+)
      - if: equal(status, 404)
        then:
          - action: add(topic, not_found)
          - action: return()
      - if: equal(status, 500)
        then:
          - action: dropEvent()
```

|

||||

|

||||

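#### Detection, recognition, and alerting

Helps you quickly detect possible problems and anomalies in the data and raise alerts in time.

Supported matching methods:

- No data: no log data is produced within the configured time period

- Matching

- Fuzzy matching

- Regular expression matching

- Conditional judgment

- Field comparison: equal/less/greater…

Supported deployment modes:

- Detection in the data collection path: simple and easy to use, with no extra deployment needed

- Detection in an independent path: deploy an Aggregator separately to consume Kafka/Elasticsearch and similar sources for data matching and alerting

Both modes support custom webhooks for connecting to various alert channels.

#### Business data aggregation and monitoring

In many cases metrics are not only exposed through a Prometheus exporter; log data itself can also be a source of metrics.

For example, from a gateway's access logs you can count the 5xx or 4xx status codes within a time interval, aggregate the QPS of a particular interface, compute the total volume of transferred body data, and so on.

This feature is currently in internal testing; stay tuned.

Example: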

|

||||

```yaml
- type: aggregator
  interval: 1m
  select:
    # operators: COUNT/COUNT-DISTINCT/SUM/AVG/MAX/MIN
    - {key: amount, operator: SUM, as: amount_total}
    - {key: quantity, operator: SUM, as: qty_total}
  groupby: ["city"]
  # calculate: further computation based on the values of the fields
  calculate:
    - {expression: " ${amount_total} / ${qty_total} ", as: avg_amount}
```

|

||||

|

||||

### End-to-end fast troubleshooting and observability

- Loggie provides rich, configurable metrics, along with dashboards that can be imported into Grafana with one click

<img src="https://loggie-io.github.io/docs/user-guide/monitor/img/grafana-agent-1.png" width="1000"/>

- Use the Loggie terminal and the help API to quickly and conveniently troubleshoot Loggie itself and problems in data transmission

<img src="https://loggie-io.github.io/docs/user-guide/troubleshot/img/loggie-dashboard.png" width="1000"/>

|

||||

|

||||

## FAQs

|

||||

|

||||

### Loggie vs Filebeat/Fluentd/Logstash/Flume

|

||||

|

||||

| | Loggie | Filebeat | Fluentd | Logstash | Flume |
|---|---|---|---|---|---|
| Language | Golang | Golang | Ruby | JRuby | Java |
| Multiple pipelines | supported | single queue | single queue | supported | supported |
| Multiple outputs | supported | not supported, only one output | configure copy | supported | supported |
| Aggregator (intermediate tier) | supported | not supported | supported | supported | supported |
| Log alerting | supported | not supported | not supported | not supported | not supported |
| Kubernetes container log collection | supports container stdout and log files inside the container | container stdout only | container stdout only | not supported | not supported |
| Configuration delivery | configurable through CRD on Kubernetes; configuration-center support for host scenarios is being added | manual | manual | manual | manual |
| Monitoring | native Prometheus metrics support; can also be configured to write metrics to separate log files, send metrics, etc. | exposed through an API; an extra exporter is needed for Prometheus | supports API and Prometheus metrics | requires an extra exporter | requires an extra exporter |
| Resource usage | low | low | average | relatively high | relatively high |

|

||||

|

||||

|

||||

## Documentation

Please refer to the Loggie [documentation](https://loggie-io.github.io/docs/).

### Getting started

## Quick start

- [Quick start](https://loggie-io.github.io/docs/getting-started/quick-start/quick-start/)

- Deployment ([Kubernetes](https://loggie-io.github.io/docs/getting-started/install/kubernetes/), [Node](https://loggie-io.github.io/docs/getting-started/install/node/))

### User guide

- [Design and architecture](https://loggie-io.github.io/docs/user-guide/architecture/core-arch/)

- [Using Loggie in Kubernetes](https://loggie-io.github.io/docs/user-guide/use-in-kubernetes/general-usage/)

- [Monitoring and alerting](https://loggie-io.github.io/docs/user-guide/monitor/loggie-monitor/)

### Component configuration

## Component configuration

- [Startup arguments](https://loggie-io.github.io/docs/reference/global/args/)

- [System configuration](https://loggie-io.github.io/docs/reference/global/system/)

- Pipeline configuration

|

||||

- source: [file](https://loggie-io.github.io/docs/reference/pipelines/source/file/), [kafka](https://loggie-io.github.io/docs/reference/pipelines/source/kafka/), [kubeEvent](https://loggie-io.github.io/docs/reference/pipelines/source/kubeEvent/), [grpc](https://loggie-io.github.io/docs/reference/pipelines/source/grpc/)..

|

||||

- sink: [elassticsearch](https://loggie-io.github.io/docs/reference/pipelines/sink/elasticsearch/), [kafka](https://loggie-io.github.io/docs/reference/pipelines/sink/kafka/), [grpc](https://loggie-io.github.io/docs/reference/pipelines/sink/grpc/), [dev](https://loggie-io.github.io/docs/reference/pipelines/sink/dev/)..

|

||||

- interceptor: [normalize](https://loggie-io.github.io/docs/reference/pipelines/interceptor/normalize/), [limit](https://loggie-io.github.io/docs/reference/pipelines/interceptor/limit/), [logAlert](https://loggie-io.github.io/docs/reference/pipelines/interceptor/logalert/), [maxbytes](https://loggie-io.github.io/docs/reference/pipelines/interceptor/maxbytes/)..

|

||||

- CRD([logConfig](https://loggie-io.github.io/docs/reference/discovery/kubernetes/logconfig/), [sink](https://loggie-io.github.io/docs/reference/discovery/kubernetes/sink/), [interceptor](https://loggie-io.github.io/docs/reference/discovery/kubernetes/interceptors/))

|

||||

- Source: [file](https://loggie-io.github.io/docs/reference/pipelines/source/file/), [kafka](https://loggie-io.github.io/docs/reference/pipelines/source/kafka/), [kubeEvent](https://loggie-io.github.io/docs/reference/pipelines/source/kubeEvent/), [grpc](https://loggie-io.github.io/docs/reference/pipelines/source/grpc/), [elasticsearch](https://loggie-io.github.io/docs/reference/pipelines/source/elasticsearch/), [prometheusExporter](https://loggie-io.github.io/docs/reference/pipelines/source/prometheus-exporter/)..

|

||||

- Sink: [elasticsearch](https://loggie-io.github.io/docs/reference/pipelines/sink/elasticsearch/), [kafka](https://loggie-io.github.io/docs/reference/pipelines/sink/kafka/), [grpc](https://loggie-io.github.io/docs/reference/pipelines/sink/grpc/), [loki](https://loggie-io.github.io/docs/reference/pipelines/sink/loki/), [zinc](https://loggie-io.github.io/docs/reference/pipelines/sink/zinc/), [alertWebhook](https://loggie-io.github.io/docs/reference/pipelines/sink/webhook/), [dev](https://loggie-io.github.io/docs/reference/pipelines/sink/dev/)..

|

||||

- Interceptor: [transformer](https://loggie-io.github.io/docs/reference/pipelines/interceptor/transformer/), [schema](https://loggie-io.github.io/docs/reference/pipelines/interceptor/schema/), [limit](https://loggie-io.github.io/docs/reference/pipelines/interceptor/limit/), [logAlert](https://loggie-io.github.io/docs/reference/pipelines/interceptor/logalert/), [maxbytes](https://loggie-io.github.io/docs/reference/pipelines/interceptor/maxbytes/)..

|

||||

- CRD: [LogConfig](https://loggie-io.github.io/docs/reference/discovery/kubernetes/logconfig/), [ClusterLogConfig](https://loggie-io.github.io/docs/reference/discovery/kubernetes/clusterlogconfig/), [Sink](https://loggie-io.github.io/docs/reference/discovery/kubernetes/sink/), [Interceptor](https://loggie-io.github.io/docs/reference/discovery/kubernetes/interceptors/)

|

||||

|

||||

## RoadMap

|

||||

- [RoadMap 2023](https://loggie-io.github.io/docs/getting-started/roadmap/roadmap-2023/)

|

||||

|

||||

## Discussion

Running into problems while using Loggie? Please open an issue or contact us.

|

||||

|

|

|

|||

|

|

@@ -19,6 +19,13 @@ package main

|

|||

import (

|

||||

"flag"

|

||||

"fmt"

|

||||

"net"

|

||||

"net/http"

|

||||

"os"

|

||||

"path/filepath"

|

||||

"runtime"

|

||||

"strings"

|

||||

|

||||

"github.com/loggie-io/loggie/cmd/subcmd"

|

||||

"github.com/loggie-io/loggie/pkg/control"

|

||||

"github.com/loggie-io/loggie/pkg/core/cfg"

|

||||

|

|

@@ -30,15 +37,13 @@ import (

|

|||

"github.com/loggie-io/loggie/pkg/discovery/kubernetes"

|

||||

"github.com/loggie-io/loggie/pkg/eventbus"

|

||||

_ "github.com/loggie-io/loggie/pkg/include"

|

||||

"github.com/loggie-io/loggie/pkg/ops"

|

||||

"github.com/loggie-io/loggie/pkg/ops/helper"

|

||||

"github.com/loggie-io/loggie/pkg/util/json"

|

||||

"github.com/loggie-io/loggie/pkg/util/persistence"

|

||||

"github.com/loggie-io/loggie/pkg/util/yaml"

|

||||

"github.com/pkg/errors"

|

||||

"go.uber.org/automaxprocs/maxprocs"

|

||||

"net/http"

|

||||

"os"

|

||||

"path/filepath"

|

||||

"runtime"

|

||||

"strings"

|

||||

)

|

||||

|

||||

var (

|

||||

|

|

@@ -68,7 +73,7 @@ func main() {

|

|||

|

||||

log.Info("version: %s", global.GetVersion())

|

||||

|

||||

// set up signals so we handle the first shutdown signal gracefully

|

||||

// set up signals, so we handle the first shutdown signal gracefully

|

||||

stopCh := signals.SetupSignalHandler()

|

||||

|

||||

// Automatically set GOMAXPROCS to match Linux container CPU quota

|

||||

|

|

@@ -83,11 +88,13 @@ func main() {

|

|||

// system config file

|

||||

syscfg := sysconfig.Config{}

|

||||

cfg.UnpackTypeDefaultsAndValidate(strings.ToLower(configType), globalConfigFile, &syscfg)

|

||||

|

||||

// register jsonEngine

|

||||

json.SetDefaultEngine(syscfg.Loggie.JSONEngine)

|

||||

// start eventBus listeners

|

||||

eventbus.StartAndRun(syscfg.Loggie.MonitorEventBus)

|

||||

// init log after error func

|

||||

log.AfterError = eventbus.AfterErrorFunc

|

||||

log.AfterErrorConfig = syscfg.Loggie.ErrorAlertConfig

|

||||

|

||||

log.Info("pipelines config path: %s", pipelineConfigPath)

|

||||

// pipeline config file

|

||||

|

|

@@ -106,6 +113,9 @@ func main() {

|

|||

log.Fatal("unpack config.pipeline config file err: %v", err)

|

||||

}

|

||||

|

||||

persistence.SetConfig(syscfg.Loggie.Db)

|

||||

defer persistence.StopDbHandler()

|

||||

|

||||

controller := control.NewController()

|

||||

controller.Start(pipecfgs)

|

||||

|

||||

|

|

@ -125,11 +135,23 @@ func main() {

|

|||

|

||||

// api for debugging

|

||||

helper.Setup(controller)

|

||||

// api for get loggie Version

|

||||

ops.Setup(controller)

|

||||

|

||||

if syscfg.Loggie.Http.Enabled {

|

||||

go func() {

|

||||

if err = http.ListenAndServe(fmt.Sprintf("%s:%d", syscfg.Loggie.Http.Host, syscfg.Loggie.Http.Port), nil); err != nil {

|

||||

log.Fatal("http listen and serve err: %v", err)

|

||||

if syscfg.Loggie.Http.RandPort {

|

||||

syscfg.Loggie.Http.Port = 0

|

||||

}

|

||||

|

||||

listener, err := net.Listen("tcp", fmt.Sprintf("%s:%d", syscfg.Loggie.Http.Host, syscfg.Loggie.Http.Port))

|

||||

if err != nil {

|

||||

log.Fatal("http listen err: %v", err)

|

||||

}

|

||||

|

||||

log.Info("http listen addr %s", listener.Addr().String())

|

||||

if err = http.Serve(listener, nil); err != nil {

|

||||

log.Fatal("http serve err: %v", err)

|

||||

}

|

||||

}()

|

||||

}

|

||||

|

|

|

|||

|

|

@ -0,0 +1,60 @@

|

|||

/*
Copyright 2023 Loggie Authors

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

package genfiles

import (
	"errors"
	"flag"
	"github.com/loggie-io/loggie/pkg/core/log"
	"github.com/loggie-io/loggie/pkg/source/dev"
	"os"
)

const (
	SubCommandGenFiles = "genfiles"
)

var (
	genFilesCmd *flag.FlagSet
	totalCount  int64
	lineBytes   int
	qps         int
)

func init() {
	genFilesCmd = flag.NewFlagSet(SubCommandGenFiles, flag.ExitOnError)
	genFilesCmd.Int64Var(&totalCount, "totalCount", -1, "total line count")
	genFilesCmd.IntVar(&lineBytes, "lineBytes", 1024, "bytes per line")
	genFilesCmd.IntVar(&qps, "qps", 10, "line qps")
	log.SetFlag(genFilesCmd)
}

func RunGenFiles() error {
	if len(os.Args) > 2 {
		if err := genFilesCmd.Parse(os.Args[2:]); err != nil {
			return err
		}
	}

	log.InitDefaultLogger()

	stop := make(chan struct{})
	dev.GenLines(stop, totalCount, lineBytes, qps, func(content []byte, index int64) {
		log.Info("%d %s", index, content)
	})
	return errors.New("exit")
}
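Note on `RunGenFiles`: the body of `dev.GenLines` is not part of this diff, only its call site. The sketch below shows one way a generator with the same parameter shape could behave. It is an assumption-laden stand-in, not the Loggie implementation: `genLines` is a hypothetical name, a negative `total` is assumed to mean "no limit" (matching the flag default of -1), and the rate is approximated with a ticker.

```go
package main

import (
	"bytes"
	"fmt"
	"time"
)

// genLines is a hypothetical stand-in for dev.GenLines.
// It emits lines of lineBytes bytes at roughly qps lines per second
// until total lines were produced (total < 0 is assumed to mean no limit)
// or until stop is closed.
func genLines(stop <-chan struct{}, total int64, lineBytes, qps int, emit func(content []byte, index int64)) {
	if lineBytes < 0 {
		lineBytes = 0
	}
	if qps <= 0 {
		qps = 1
	}
	interval := time.Second / time.Duration(qps)
	if interval <= 0 {
		interval = time.Nanosecond // NewTicker panics on non-positive intervals
	}
	ticker := time.NewTicker(interval)
	defer ticker.Stop()

	line := bytes.Repeat([]byte("x"), lineBytes)
	for i := int64(0); total < 0 || i < total; i++ {
		select {
		case <-stop:
			return
		case <-ticker.C:
			emit(line, i)
		}
	}
}

func main() {
	stop := make(chan struct{})
	// Three 8-byte lines at about 100 lines per second.
	genLines(stop, 3, 8, 100, func(content []byte, index int64) {
		fmt.Printf("%d %s\n", index, content)
	})
}
```

In the diff, the callback writes each line through `log.Info`, so the generated lines end up wherever the logger flags registered by `log.SetFlag` send them.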

|

||||

|

|

@ -14,13 +14,13 @@ See the License for the specific language governing permissions and

|

|||

limitations under the License.

|

||||

*/

|

||||

|

||||

package subcmd

|

||||

package inspect

|

||||

|

||||

import (

|

||||

"errors"

|

||||

"flag"

|

||||

"github.com/loggie-io/loggie/pkg/ops/dashboard"

|

||||

"github.com/loggie-io/loggie/pkg/ops/dashboard/gui"

|

||||

"github.com/pkg/errors"

|

||||

"os"

|

||||

)

|

||||

|

||||

|

|

@ -28,31 +28,15 @@ const SubCommandInspect = "inspect"

|

|||

|

||||

var (

|

||||

inspectCmd *flag.FlagSet

|

||||

|

||||

LoggiePort int

|

||||

loggiePort int

|

||||

)

|

||||

|

||||

func init() {

|

||||

inspectCmd = flag.NewFlagSet(SubCommandInspect, flag.ExitOnError)

|

||||

|

||||

inspectCmd.IntVar(&LoggiePort, "loggiePort", 9196, "Loggie http port")

|

||||

inspectCmd.IntVar(&loggiePort, "loggiePort", 9196, "Loggie http port")

|

||||

}

|

||||

|

||||

func SwitchSubCommand() error {

|

||||

if len(os.Args) == 1 {

|

||||

return nil

|

||||

}

|

||||

switch os.Args[1] {

|

||||

case SubCommandInspect:

|

||||

if err := runInspect(); err != nil {

|

||||

return err

|

||||

}

|

||||

return errors.New("exit")

|

||||

}

|

||||

return nil

|

||||

}

|

||||

|

||||

func runInspect() error {

|

||||

func RunInspect() error {

|

||||

if len(os.Args) > 2 {

|

||||

if err := inspectCmd.Parse(os.Args[2:]); err != nil {

|

||||

return err

|

||||

|

|

@ -60,12 +44,12 @@ func runInspect() error {

|

|||

}

|

||||

|

||||

d := dashboard.New(&gui.Config{

|

||||

LoggiePort: LoggiePort,

|

||||

LoggiePort: loggiePort,

|

||||

})

|

||||

if err := d.Start(); err != nil {

|

||||

d.Stop()

|

||||

return err

|

||||

}

|

||||

|

||||

return nil

|

||||

return errors.New("exit")

|

||||

}

|

||||

|

|

@ -0,0 +1,48 @@

|

|||

/*
Copyright 2023 Loggie Authors

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

package subcmd

import (
	"github.com/loggie-io/loggie/cmd/subcmd/genfiles"
	"github.com/loggie-io/loggie/cmd/subcmd/inspect"
	"github.com/loggie-io/loggie/cmd/subcmd/version"
	"os"
)

func SwitchSubCommand() error {
	if len(os.Args) == 1 {
		return nil
	}
	switch os.Args[1] {
	case inspect.SubCommandInspect:
		if err := inspect.RunInspect(); err != nil {
			return err
		}

	case genfiles.SubCommandGenFiles:
		if err := genfiles.RunGenFiles(); err != nil {
			return err
		}

	case version.SubCommandVersion:
		if err := version.RunVersion(); err != nil {
			return err
		}
	}

	return nil
}

|

||||

|

|

@ -0,0 +1,48 @@

|

|||

/*
Copyright 2023 Loggie Authors

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

package version

import (
	"errors"
	"flag"
	"fmt"
	"github.com/loggie-io/loggie/pkg/core/global"
	"os"
)

const (
	SubCommandVersion = "version"
)

var (
	versionCmd *flag.FlagSet
)

func init() {
	versionCmd = flag.NewFlagSet(SubCommandVersion, flag.ExitOnError)
}

func RunVersion() error {
	if len(os.Args) > 2 {
		if err := versionCmd.Parse(os.Args[2:]); err != nil {
			return err
		}
	}

	fmt.Printf("Loggie version: %s\n", global.GetVersion())
	return errors.New("exit")
}

|

||||

|

|

@ -1,3 +1,127 @@

|

|||

# Release v1.5.0-rc.0

### :star2: Features
- [breaking]: The `db` in `file source` is moved to the [`loggie.yml`](https://loggie-io.github.io/docs/main/reference/global/db/). If upgrading from an earlier version to v1.5, be sure to check whether `db` has been configured for `file source`. If it is not configured, you can just ignore it, and the default value will remain compatible.

- Added rocketmq sink (#530)
- Added franzKafka source (#573)
- Added kata runtime (#554)
- `typePodFields`/`typeNodeFields` are supported in LogConfig/ClusterLogConfig (#450)
- Sink codec supports printEvents (#448)
- Added queue in LogConfig/ClusterLogConfig (#457)
- Changed `olivere/elastic` to the official elasticsearch go client (#581)
- Supported `copytruncate` in file source (#571)
- Added `genfiles` sub command (#471)
- Added `sortBy` field in elasticsearch source (#473)
- Added host VM mode with Kubernetes as the configuration center (#449) (#489)
- New `addHostMeta` interceptor (#474)
- Added persistence driver `badger` (#475) (#584)
- Ignore LogConfig with sidecar injection annotation (#478)
- Added `toStr` action in transformer interceptor (#482)
- You can mount the root directory of a node to the Loggie container without mounting additional Loggie volumes (#460)
- Get the Loggie version via API and sub command (#496) (#508)
- Added the `worker` and the `clientId` in Kafka source (#506) (#507)
- Upgraded `kafka-go` version (#506) (#567)
- Added resultStatus in dev sink, which can be used to simulate failure and drop (#531)
- Pretty-printed errors when unmarshalling the YAML configuration fails (#539)
- Added a default topic for when rendering the kafka topic fails (#550)
- Added `ignoreUnknownTopicOrPartition` in kafka sink (#560)
- Supported multiple topics in kafka source (#548)
- Added a default index for when rendering the elasticsearch index fails (#551) (#553)
- The default `maxOpenFds` is set to 4096 (#559)
- Supported default `sinkRef` in kubernetes discovery (#555)
- Added `${_k8s.clusterlogconfig}` in `typePodFields` (#569)
- Supported omitting empty fields in Kubernetes discovery (#570)
- Optimized the `maxbytes` interceptor (#575)
- Moved `readFromTail`, `cleanFiles`, `fdHoldTimeoutWhenInactive`, `fdHoldTimeoutWhenRemove` from watcher to outer layer in `file source` (#579) (#585)
- Added `cleanUnfinished` in cleanFiles (#580)
- Added `target` in `maxbytes` interceptor (#588)
- Added `partitionKey` in franzKafka (#562)
- Added `highPrecision` in `rateLimit` interceptor (#525)

### :bug: Bug Fixes
- Fixed panic when kubeEvent Series is nil (#459)
- Upgraded `automaxprocs` version to v1.5.1 (#488)
- Fixed failure to set defaults in `fieldsUnderKey` (#513)
- Fixed condition parsing failure when the condition contains ERROR in transformer interceptor (#514) (#515)
- Fixed grpc batch out-of-order data streams (#517)
- Fixed possible OOM caused by large lines (#529)
- Fixed duplicated batchSize in queue (#533)
- Fixed sqlite locked panic (#524)
- Fixed commands being unusable in multi-arch containers (#541)
- Fixed possible blocking in `logger listener` (#561) (#552)
- Fixed `sink concurrency` deepCopy failure (#563)
- Drop events on partial errors in elasticsearch sink (#572)

|

||||

|

||||

# Release v1.4.0

### :star2: Features

- Added Loggie dashboard feature for easier troubleshooting (#416)
- Enhanced log alerting function with more flexible log alert detection rules and added alertWebhook sink (#392)
- Added sink concurrency support for automatic adaptation based on downstream delay (#376)
- Added franzKafka sink for users who prefer the franz kafka library (#423)
- Added elasticsearch source (#345)
- Added zinc sink (#254)
- Added pulsar sink (#417)
- Added grok action to transformer interceptor (#418)
- Added split action to transformer interceptor (#411)
- Added jsonEncode action to transformer interceptor (#421)
- Added fieldsFromPath configuration to source for obtaining fields from file content (#401)
- Added fieldsRef parameter to filesource listener for obtaining key value from fields configuration and adding to metrics as label (#402)
- In transformer interceptor, added dropIfError support to drop event if action execution fails (#409)
- Added info listener which currently exposes loggie_info_stat metrics and displays version label (#410)
- Added support for customized kafka sink partition key
- Added sasl support to Kafka source (#415)
- Added https insecureSkipVerify support to loki sink (#422)
- Optimized file source for large files (#430)
- Changed default value of file source maxOpenFds to 1024 (#437)
- ContainerRuntime can now be set to none (#439)
- Upgraded to go 1.18 (#440)
- Optimized the configuration parameters to remove the redundancy generated by rendering

### :bug: Bug Fixes

- Added source fields to filesource listener (#402)
- Fixed issue of transformer copy action not copying non-string body (#420)
- Added fetching of logs file from UpperDir when rootfs collection is enabled (#414)
- Fixed NPE on pipeline restart (#454)
- Fixed the create dir soft link job (#453)

|

||||

|

||||

# Release v1.4.0-rc.0

### :star2: Features

- Added Loggie dashboard feature for easier troubleshooting (#416)
- Enhanced log alerting function with more flexible log alert detection rules and added alertWebhook sink (#392)
- Added sink concurrency support for automatic adaptation based on downstream delay (#376)
- Added franzKafka sink for users who prefer the franz kafka library (#423)
- Added elasticsearch source (#345)
- Added zinc sink (#254)
- Added pulsar sink (#417)
- Added grok action to transformer interceptor (#418)
- Added split action to transformer interceptor (#411)
- Added jsonEncode action to transformer interceptor (#421)
- Added fieldsFromPath configuration to source for obtaining fields from file content (#401)
- Added fieldsRef parameter to filesource listener for obtaining key value from fields configuration and adding to metrics as label (#402)
- In transformer interceptor, added dropIfError support to drop event if action execution fails (#409)
- Added info listener which currently exposes loggie_info_stat metrics and displays version label (#410)
- Added support for customized kafka sink partition key
- Added sasl support to Kafka source (#415)
- Added https insecureSkipVerify support to loki sink (#422)
- Optimized file source for large files (#430)
- Changed default value of file source maxOpenFds to 1024 (#437)
- ContainerRuntime can now be set to none (#439)
- Upgraded to go 1.18 (#440)

### :bug: Bug Fixes

- Added source fields to filesource listener (#402)
- Fixed issue of transformer copy action not copying non-string body (#420)
- Added fetching of logs file from UpperDir when rootfs collection is enabled (#414)

|

||||

|

||||

|

||||

# Release v1.3.0

|

||||

|

||||

### :star2: Features

|

||||

|

|

|

|||

70

go.mod


|

|

@ -6,18 +6,18 @@ require (

|

|||

github.com/aliyun/aliyun-log-go-sdk v0.1.35

|

||||

github.com/andres-erbsen/clock v0.0.0-20160526145045-9e14626cd129

|

||||

github.com/bmatcuk/doublestar/v4 v4.0.2

|

||||

github.com/creasty/defaults v1.5.1

|

||||

github.com/creasty/defaults v1.7.0

|

||||

github.com/dgraph-io/badger/v3 v3.2103.5

|

||||

github.com/docker/docker v17.12.0-ce-rc1.0.20200706150819-a40b877fbb9e+incompatible

|

||||

github.com/fsnotify/fsnotify v1.5.4

|

||||

github.com/gdamore/tcell/v2 v2.4.1-0.20210905002822-f057f0a857a1

|

||||

github.com/go-playground/validator/v10 v10.4.1

|

||||

github.com/gogo/protobuf v1.3.2

|

||||

github.com/golang/snappy v0.0.3

|

||||

github.com/google/go-cmp v0.5.8

|

||||

github.com/google/go-cmp v0.5.9

|

||||

github.com/hpcloud/tail v1.0.0

|

||||

github.com/jcmturner/gokrb5/v8 v8.4.3

|

||||

github.com/json-iterator/go v1.1.12

|

||||

github.com/mattn/go-sqlite3 v1.14.6

|

||||

github.com/mattn/go-zglob v0.0.3

|

||||

github.com/mmaxiaolei/backoff v0.0.0-20210104115436-e015e09efaba

|

||||

github.com/olivere/elastic/v7 v7.0.28

|

||||

|

|

@ -29,27 +29,25 @@ require (

|

|||

github.com/prometheus/prometheus v1.8.2-0.20201028100903-3245b3267b24

|

||||

github.com/rivo/tview v0.0.0-20221029100920-c4a7e501810d

|

||||

github.com/rs/zerolog v1.20.0

|

||||

github.com/segmentio/kafka-go v0.4.23

|

||||

github.com/segmentio/kafka-go v0.4.39

|

||||

github.com/shirou/gopsutil/v3 v3.22.2

|

||||

github.com/smartystreets-prototypes/go-disruptor v0.0.0-20200316140655-c96477fd7a6a

|

||||

github.com/stretchr/testify v1.8.0

|

||||

github.com/thinkeridea/go-extend v1.3.2

|

||||

github.com/stretchr/testify v1.8.2

|

||||

github.com/twmb/franz-go v1.10.4

|

||||

github.com/twmb/franz-go/pkg/sasl/kerberos v1.1.0

|

||||

go.uber.org/atomic v1.7.0

|

||||

go.uber.org/automaxprocs v0.0.0-20200415073007-b685be8c1c23

|

||||

golang.org/x/net v0.0.0-20220812174116-3211cb980234

|

||||

golang.org/x/text v0.3.7

|

||||

go.uber.org/automaxprocs v1.5.1

|

||||

golang.org/x/net v0.17.0

|

||||

golang.org/x/text v0.13.0

|

||||

golang.org/x/time v0.0.0-20220609170525-579cf78fd858

|

||||

google.golang.org/grpc v1.47.0

|

||||

google.golang.org/protobuf v1.28.0

|

||||

google.golang.org/grpc v1.54.0

|

||||

google.golang.org/protobuf v1.30.0

|

||||

gopkg.in/natefinch/lumberjack.v2 v2.0.0

|

||||

gopkg.in/yaml.v2 v2.4.0

|

||||

k8s.io/api v0.25.4

|

||||

k8s.io/apimachinery v0.25.4

|

||||

k8s.io/client-go v0.25.4

|

||||

k8s.io/code-generator v0.25.4

|

||||

k8s.io/cri-api v0.24.0

|

||||

sigs.k8s.io/yaml v1.3.0

|

||||

)

|

||||

|

||||

|

|

@ -59,10 +57,18 @@ require (

|

|||

github.com/DataDog/zstd v1.5.0 // indirect

|

||||

github.com/apache/pulsar-client-go/oauth2 v0.0.0-20220120090717-25e59572242e // indirect

|

||||

github.com/ardielle/ardielle-go v1.5.2 // indirect

|

||||

github.com/cespare/xxhash v1.1.0 // indirect

|

||||

github.com/chenzhuoyu/base64x v0.0.0-20221115062448-fe3a3abad311 // indirect

|

||||

github.com/danieljoos/wincred v1.0.2 // indirect

|

||||

github.com/dgraph-io/ristretto v0.1.1 // indirect

|

||||

github.com/dvsekhvalnov/jose2go v0.0.0-20200901110807-248326c1351b // indirect

|

||||

github.com/emirpasic/gods v1.12.0 // indirect

|

||||

github.com/fatih/color v1.10.0 // indirect

|

||||

github.com/godbus/dbus v0.0.0-20190726142602-4481cbc300e2 // indirect

|

||||

github.com/golang-jwt/jwt v3.2.2+incompatible // indirect

|

||||

github.com/golang/glog v0.0.0-20160126235308-23def4e6c14b // indirect

|

||||

github.com/golang/mock v1.5.0 // indirect

|

||||

github.com/google/flatbuffers v1.12.1 // indirect

|

||||

github.com/gsterjov/go-libsecret v0.0.0-20161001094733-a6f4afe4910c // indirect

|

||||

github.com/hashicorp/go-uuid v1.0.3 // indirect

|

||||

github.com/jcmturner/aescts/v2 v2.0.0 // indirect

|

||||

|

|

@ -71,19 +77,32 @@ require (

|

|||

github.com/jcmturner/rpc/v2 v2.0.3 // indirect

|

||||

github.com/keybase/go-keychain v0.0.0-20190712205309-48d3d31d256d // indirect

|

||||

github.com/klauspost/compress v1.15.9 // indirect

|

||||

github.com/klauspost/cpuid/v2 v2.0.9 // indirect

|

||||

github.com/konsorten/go-windows-terminal-sequences v1.0.3 // indirect

|

||||

github.com/linkedin/goavro/v2 v2.9.8 // indirect

|

||||

github.com/mattn/go-colorable v0.1.8 // indirect

|

||||

github.com/mattn/go-isatty v0.0.12 // indirect

|

||||

github.com/mitchellh/go-homedir v1.1.0 // indirect

|

||||

github.com/mtibben/percent v0.2.1 // indirect

|

||||

github.com/patrickmn/go-cache v2.1.0+incompatible // indirect

|

||||

github.com/pierrec/lz4/v4 v4.1.15 // indirect

|

||||

github.com/satori/go.uuid v1.2.0 // indirect

|

||||

github.com/spaolacci/murmur3 v1.1.0 // indirect

|

||||

github.com/stathat/consistent v1.0.0 // indirect

|

||||

github.com/tidwall/gjson v1.13.0 // indirect

|

||||

github.com/tidwall/match v1.1.1 // indirect

|

||||

github.com/tidwall/pretty v1.2.0 // indirect

|

||||

github.com/twitchyliquid64/golang-asm v0.15.1 // indirect

|

||||

github.com/twmb/franz-go/pkg/kmsg v1.2.0 // indirect

|

||||

golang.org/x/crypto v0.0.0-20220817201139-bc19a97f63c8 // indirect

|

||||

golang.org/x/mod v0.6.0-dev.0.20220419223038-86c51ed26bb4 // indirect

|

||||

go.opencensus.io v0.23.0 // indirect

|

||||

golang.org/x/arch v0.0.0-20210923205945-b76863e36670 // indirect

|

||||

golang.org/x/crypto v0.14.0 // indirect

|

||||

golang.org/x/mod v0.8.0 // indirect

|

||||

golang.org/x/oauth2 v0.0.0-20211104180415-d3ed0bb246c8 // indirect

|

||||

golang.org/x/sys v0.0.0-20220728004956-3c1f35247d10 // indirect

|

||||

golang.org/x/term v0.0.0-20210927222741-03fcf44c2211 // indirect

|

||||

golang.org/x/tools v0.1.12 // indirect

|

||||

golang.org/x/sys v0.13.0 // indirect

|

||||

golang.org/x/term v0.13.0 // indirect

|

||||

golang.org/x/tools v0.6.0 // indirect

|

||||

golang.org/x/xerrors v0.0.0-20200804184101-5ec99f83aff1 // indirect

|

||||

google.golang.org/appengine v1.6.7 // indirect

|

||||

google.golang.org/genproto v0.0.0-20220107163113-42d7afdf6368 // indirect

|

||||

)

|

||||

|

|

@ -97,7 +116,7 @@ require (

|

|||

github.com/cenkalti/backoff v2.2.1+incompatible // indirect

|

||||

github.com/cespare/xxhash/v2 v2.1.2 // indirect

|

||||

github.com/davecgh/go-spew v1.1.1 // indirect

|

||||

github.com/docker/distribution v2.7.1+incompatible // indirect

|

||||

github.com/docker/distribution v2.8.2+incompatible // indirect

|

||||

github.com/docker/go-connections v0.4.0 // indirect

|

||||

github.com/docker/go-units v0.4.0 // indirect

|

||||

github.com/emicklei/go-restful/v3 v3.8.0 // indirect

|

||||

|

|

@ -113,7 +132,7 @@ require (

|

|||

github.com/go-playground/locales v0.13.0 // indirect

|

||||

github.com/go-playground/universal-translator v0.17.0 // indirect

|

||||

github.com/golang/groupcache v0.0.0-20210331224755-41bb18bfe9da // indirect

|

||||

github.com/golang/protobuf v1.5.2 // indirect

|

||||

github.com/golang/protobuf v1.5.3 // indirect

|

||||

github.com/google/gnostic v0.5.7-v3refs // indirect

|

||||

github.com/google/gofuzz v1.1.0 // indirect

|

||||

github.com/imdario/mergo v0.3.12 // indirect

|

||||

|

|

@ -140,8 +159,8 @@ require (

|

|||

github.com/spf13/pflag v1.0.5 // indirect

|

||||

github.com/tklauser/go-sysconf v0.3.9 // indirect

|

||||

github.com/tklauser/numcpus v0.3.0 // indirect

|

||||

github.com/xdg/scram v0.0.0-20180814205039-7eeb5667e42c // indirect

|

||||

github.com/xdg/stringprep v1.0.0 // indirect

|

||||

github.com/xdg/scram v1.0.5 // indirect

|

||||

github.com/xdg/stringprep v1.0.3 // indirect

|

||||

github.com/yusufpapurcu/wmi v1.2.2 // indirect

|

||||

gopkg.in/fsnotify.v1 v1.4.7 // indirect

|

||||

gopkg.in/inf.v0 v0.9.1 // indirect

|

||||

|

|

@ -156,12 +175,21 @@ require (

|

|||

)

|

||||

|

||||

require (

|

||||

github.com/apache/rocketmq-client-go/v2 v2.1.1

|

||||

github.com/bytedance/sonic v1.9.2

|

||||

github.com/dustin/go-humanize v1.0.0

|

||||

github.com/elastic/go-elasticsearch/v7 v7.17.10

|

||||

github.com/goccy/go-json v0.10.2

|

||||

github.com/goccy/go-yaml v1.11.0

|

||||

github.com/mattn/go-sqlite3 v1.11.0

|

||||

k8s.io/cri-api v0.28.3

|

||||

k8s.io/metrics v0.25.4

|

||||

sigs.k8s.io/controller-runtime v0.13.1

|

||||

)

|

||||

|

||||

replace (

|

||||

github.com/docker/docker => github.com/docker/docker v1.13.1

|

||||

github.com/elastic/go-elasticsearch/v7 => github.com/loggie-io/go-elasticsearch/v7 v7.17.11-0.20230703032733-f33cec60fa85

|

||||

google.golang.org/grpc => google.golang.org/grpc v1.33.2

|

||||

google.golang.org/protobuf => google.golang.org/protobuf v1.26.0

|

||||

gopkg.in/natefinch/lumberjack.v2 v2.0.0 => github.com/machine3/lumberjack v0.2.0

|

||||

|

|

|

|||

171

go.sum


|

|

@ -66,8 +66,9 @@ github.com/Azure/go-autorest/logger v0.1.0/go.mod h1:oExouG+K6PryycPJfVSxi/koC6L

|

|||

github.com/Azure/go-autorest/logger v0.2.0/go.mod h1:T9E3cAhj2VqvPOtCYAvby9aBXkZmbF5NWuPV8+WeEW8=

|

||||

github.com/Azure/go-autorest/tracing v0.5.0/go.mod h1:r/s2XiOKccPW3HrqB+W0TQzfbtp2fGCgRFtBroKn4Dk=

|

||||

github.com/Azure/go-autorest/tracing v0.6.0/go.mod h1:+vhtPC754Xsa23ID7GlGsrdKBpUA79WCAKPPZVC2DeU=

|

||||

github.com/BurntSushi/toml v0.3.1 h1:WXkYYl6Yr3qBf1K79EBnL4mak0OimBfB0XUf9Vl28OQ=

|

||||

github.com/BurntSushi/toml v0.3.1/go.mod h1:xHWCNGjB5oqiDr8zfno3MHue2Ht5sIBksp03qcyfWMU=

|

||||

github.com/BurntSushi/toml v1.1.0 h1:ksErzDEI1khOiGPgpwuI7x2ebx/uXQNw7xJpn9Eq1+I=

|

||||

github.com/BurntSushi/toml v1.1.0/go.mod h1:CxXYINrC8qIiEnFrOxCa7Jy5BFHlXnUU2pbicEuybxQ=

|

||||

github.com/BurntSushi/xgb v0.0.0-20160522181843-27f122750802/go.mod h1:IVnqGOEym/WlBOVXweHU+Q+/VP0lqqI8lqeDx9IjBqo=

|

||||

github.com/DATA-DOG/go-sqlmock v1.3.3/go.mod h1:f/Ixk793poVmq4qj/V1dPUg2JEAKC73Q5eFN3EC/SaM=

|

||||

github.com/DataDog/datadog-go v3.2.0+incompatible/go.mod h1:LButxg5PwREeZtORoXG3tL4fMGNddJ+vMq1mwgfaqoQ=

|

||||

|

|

@ -78,6 +79,7 @@ github.com/Knetic/govaluate v3.0.1-0.20171022003610-9aa49832a739+incompatible/go

|

|||

github.com/Microsoft/go-winio v0.4.14 h1:+hMXMk01us9KgxGb7ftKQt2Xpf5hH/yky+TDA+qxleU=

|

||||

github.com/Microsoft/go-winio v0.4.14/go.mod h1:qXqCSQ3Xa7+6tgxaGTIe4Kpcdsi+P8jBhyzoq1bpyYA=

|

||||

github.com/NYTimes/gziphandler v0.0.0-20170623195520-56545f4a5d46/go.mod h1:3wb06e3pkSAbeQ52E9H9iFoQsEEwGN64994WTCIhntQ=

|

||||

github.com/OneOfOne/xxhash v1.2.2 h1:KMrpdQIwFcEqXDklaen+P1axHaj9BSKzvpUUfnHldSE=

|

||||

github.com/OneOfOne/xxhash v1.2.2/go.mod h1:HSdplMjZKSmBqAxg5vPj2TmRDmfkzw+cTzAElWljhcU=

|

||||

github.com/PuerkitoBio/purell v1.0.0/go.mod h1:c11w/QuzBsJSee3cPx9rAFu61PvFxuPbtSwDGJws/X0=

|

||||

github.com/PuerkitoBio/purell v1.1.0/go.mod h1:c11w/QuzBsJSee3cPx9rAFu61PvFxuPbtSwDGJws/X0=

|

||||

|

|

@ -108,12 +110,15 @@ github.com/apache/pulsar-client-go v0.8.1 h1:UZINLbH3I5YtNzqkju7g9vrl4CKrEgYSx2r

|

|||

github.com/apache/pulsar-client-go v0.8.1/go.mod h1:yJNcvn/IurarFDxwmoZvb2Ieylg630ifxeO/iXpk27I=

|

||||