
| title | description | keywords |
|---|---|---|
| Service clusters | Learn about Interlock, an application routing and load balancing system for Docker Swarm. | ucp, interlock, load balancing |

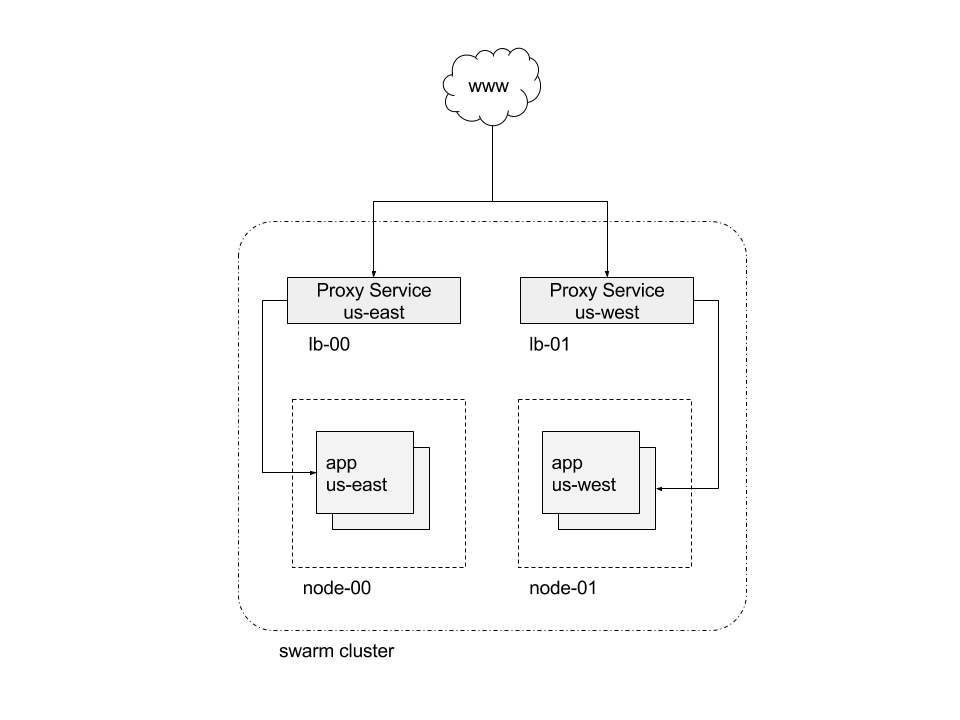

In this example we will configure an eight (8) node Swarm cluster that uses service clusters to route traffic to different proxies. There are three (3) managers and five (5) workers. Four of the workers are configured with node labels to be dedicated ingress cluster load balancer nodes. These will receive all application traffic.

This example does not cover the actual deployment of infrastructure. It assumes you have a vanilla Swarm cluster (docker swarm init and docker swarm join from the nodes). See the Swarm documentation if you need help getting a Swarm cluster deployed.

We will configure four load balancer worker nodes (lb-00 through lb-03) with node labels in order to pin the Interlock Proxy service for each Interlock service cluster. Once you are logged into one of the Swarm managers, run the following to add node labels to the dedicated ingress workers:

$> docker node update --label-add nodetype=loadbalancer --label-add region=us-east lb-00

lb-00

$> docker node update --label-add nodetype=loadbalancer --label-add region=us-east lb-01

lb-01

$> docker node update --label-add nodetype=loadbalancer --label-add region=us-west lb-02

lb-02

$> docker node update --label-add nodetype=loadbalancer --label-add region=us-west lb-03

lb-03
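The four commands above differ only in the node name and the region, so they can be generated from a short list. The following is a minimal sketch and not part of Interlock: the helper name label_cmd and the node:region pair format are assumptions made for illustration. It only prints the commands so they can be reviewed first.

```shell
#!/bin/sh
# label_cmd NODE REGION (hypothetical helper): print the docker command that
# labels NODE as a load balancer in REGION. Nothing is executed here.
label_cmd() {
  printf 'docker node update --label-add nodetype=loadbalancer --label-add region=%s %s\n' "$2" "$1"
}

# node:region pairs taken from the example cluster.
for pair in lb-00:us-east lb-01:us-east lb-02:us-west lb-03:us-west; do
  label_cmd "${pair%%:*}" "${pair#*:}"
done
```

Piping the printed lines to sh on a manager would apply the labels.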

You can inspect each node to ensure the labels were successfully added:

{% raw %}

$> docker node inspect -f '{{ .Spec.Labels }}' lb-00

map[nodetype:loadbalancer region:us-east]

$> docker node inspect -f '{{ .Spec.Labels }}' lb-02

map[nodetype:loadbalancer region:us-west]

{% endraw %}
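With four nodes to check, comparing the label maps by eye is error-prone. The sketch below shows one way to test the printed map for a given label. The helper name has_label is hypothetical, and the sketch assumes the map[key:value key:value] text format shown in the output above.

```shell
#!/bin/sh
# has_label MAP KEY:VALUE (hypothetical helper): succeed when the label map
# text printed by docker node inspect, for example
# "map[nodetype:loadbalancer region:us-east]", contains exactly KEY:VALUE.
has_label() {
  inner="${1#map\[}"   # drop the leading "map["
  inner="${inner%\]}"  # drop the trailing "]"
  for kv in $inner; do # entries are separated by spaces
    [ "$kv" = "$2" ] && return 0
  done
  return 1
}

# Sample output copied from the inspect command for lb-00.
labels='map[nodetype:loadbalancer region:us-east]'
if has_label "$labels" nodetype:loadbalancer && has_label "$labels" region:us-east; then
  echo "lb-00: labels OK"
else
  echo "lb-00: labels missing"
fi
```

On a manager, the labels variable could instead be filled from the inspect command shown above for each node in turn.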

Next, we will create a configuration object for Interlock that contains multiple extensions with varying service clusters:

$> cat << EOF | docker config create com.docker.ucp.interlock.conf-1 -

ListenAddr = ":8080"

DockerURL = "unix:///var/run/docker.sock"

PollInterval = "3s"

[Extensions]

[Extensions.us-east]

Image = "{{ page.ucp_org }}/ucp-interlock-extension:{{ page.ucp_version }}"

Args = []

ServiceName = "ucp-interlock-extension-us-east"

ProxyImage = "{{ page.ucp_org }}/ucp-interlock-proxy:{{ page.ucp_version }}"

ProxyArgs = []

ProxyServiceName = "ucp-interlock-proxy-us-east"

ProxyConfigPath = "/etc/nginx/nginx.conf"

ProxyReplicas = 2

ServiceCluster = "us-east"

PublishMode = "host"

PublishedPort = 8080

TargetPort = 80

PublishedSSLPort = 8443

TargetSSLPort = 443

[Extensions.us-east.Config]

User = "nginx"

PidPath = "/var/run/proxy.pid"

WorkerProcesses = 1

RlimitNoFile = 65535

MaxConnections = 2048

[Extensions.us-east.Labels]

ext_region = "us-east"

[Extensions.us-east.ProxyLabels]

proxy_region = "us-east"

[Extensions.us-west]

Image = "{{ page.ucp_org }}/ucp-interlock-extension:{{ page.ucp_version }}"

Args = []

ServiceName = "ucp-interlock-extension-us-west"

ProxyImage = "nginx:alpine"

ProxyArgs = []

ProxyServiceName = "ucp-interlock-proxy-us-west"

ProxyConfigPath = "/etc/nginx/nginx.conf"

ProxyReplicas = 2

ServiceCluster = "us-west"

PublishMode = "host"

PublishedPort = 8080

TargetPort = 80

PublishedSSLPort = 8443

TargetSSLPort = 443

[Extensions.us-west.Config]

User = "nginx"

PidPath = "/var/run/proxy.pid"

WorkerProcesses = 1

RlimitNoFile = 65535

MaxConnections = 2048

[Extensions.us-west.Labels]

ext_region = "us-west"

[Extensions.us-west.ProxyLabels]

proxy_region = "us-west"

EOF

oqkvv1asncf6p2axhx41vylgt
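Each application is later matched to a proxy through the ServiceCluster names defined in this configuration, so it can help to list them before creating the config object. The following is a minimal sketch that assumes the configuration has first been saved to a local file. The helper name list_service_clusters is hypothetical, and the pattern only handles the simple double-quoted form used in this example.

```shell
#!/bin/sh
# list_service_clusters FILE (hypothetical helper): print the value of every
# ServiceCluster key found in FILE, one per line.
list_service_clusters() {
  sed -n 's/^[[:space:]]*ServiceCluster[[:space:]]*=[[:space:]]*"\([^"]*\)".*$/\1/p' "$1"
}

# Demonstration on a trimmed-down copy of the configuration above.
tmp="$(mktemp)"
cat > "$tmp" << 'EOF'
[Extensions.us-east]
  ServiceCluster = "us-east"
[Extensions.us-west]
  ServiceCluster = "us-west"
EOF
list_service_clusters "$tmp"
rm -f "$tmp"
```

The names printed here are the values that the com.docker.lb.service_cluster label on an application is expected to use.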

Note that we are using the "host" publish mode so that both service clusters can use the same ports (8080 and 8443). We cannot use ingress networking here because it reserves a published port across all nodes. If you want to use ingress networking, you will have to use different ports for each service cluster.

Next we will create a dedicated network for Interlock and the extensions:

$> docker network create -d overlay ucp-interlock

Now we can create the Interlock service:

$> docker service create \
    --name ucp-interlock \
    --mount src=/var/run/docker.sock,dst=/var/run/docker.sock,type=bind \
    --network ucp-interlock \
    --constraint node.role==manager \
    --config src=com.docker.ucp.interlock.conf-1,target=/config.toml \
    {{ page.ucp_org }}/ucp-interlock:{{ page.ucp_version }} run -c /config.toml

sjpgq7h621exno6svdnsvpv9z
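The proxy services named in the configuration are created by Interlock rather than by hand, so they may not exist the instant the command above returns. The sketch below is a generic retry helper that could be used to wait for them. It is an illustration only: the name retry and its argument order are assumptions, not an Interlock or Docker feature.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY COMMAND... (hypothetical helper): run COMMAND until it
# succeeds, at most ATTEMPTS times, sleeping DELAY seconds between tries.
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example (run on a manager): wait up to about a minute for a proxy service.
# retry 30 2 docker service inspect ucp-interlock-proxy-us-east > /dev/null
```

The example call is left commented out because it needs a running Swarm manager.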

Configure Proxy Services

Now that the node labels are in place, we can reconfigure the Interlock Proxy services so that each one is constrained to the workers for its region. Again, from a manager, run the following to pin the proxy services to the ingress workers:

$> docker service update \
    --constraint-add node.labels.nodetype==loadbalancer \
    --constraint-add node.labels.region==us-east \
    ucp-interlock-proxy-us-east

$> docker service update \
    --constraint-add node.labels.nodetype==loadbalancer \
    --constraint-add node.labels.region==us-west \
    ucp-interlock-proxy-us-west

We are now ready to deploy applications. First we will create individual networks for each application:

$> docker network create -d overlay demo-east

$> docker network create -d overlay demo-west

Next we will deploy the application in the us-east service cluster:

$> docker service create \
    --name demo-east \
    --network demo-east \
    --detach=true \
    --label com.docker.lb.hosts=demo-east.local \
    --label com.docker.lb.port=8080 \
    --label com.docker.lb.service_cluster=us-east \
    --env METADATA="us-east" \
    ehazlett/docker-demo

Now we deploy the application in the us-west service cluster:

$> docker service create \
    --name demo-west \
    --network demo-west \
    --detach=true \
    --label com.docker.lb.hosts=demo-west.local \
    --label com.docker.lb.port=8080 \
    --label com.docker.lb.service_cluster=us-west \
    --env METADATA="us-west" \
    ehazlett/docker-demo
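The two deployments differ only in the application name and the service cluster, and the com.docker.lb.service_cluster label is what ties an application to one cluster's proxy. The sketch below makes that parametrization explicit. The helper name deploy_cmd is hypothetical, and it only prints the command rather than running it.

```shell
#!/bin/sh
# deploy_cmd NAME REGION (hypothetical helper): print the service create
# command for demo application NAME in the REGION service cluster.
deploy_cmd() {
  printf 'docker service create --name %s --network %s --detach=true' "$1" "$1"
  printf ' --label com.docker.lb.hosts=%s.local' "$1"
  printf ' --label com.docker.lb.port=8080'
  printf ' --label com.docker.lb.service_cluster=%s' "$2"
  printf ' --env METADATA=%s ehazlett/docker-demo\n' "$2"
}

deploy_cmd demo-east us-east
deploy_cmd demo-west us-west
```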

Only the service cluster that is designated will be configured for the applications. For example, the us-east service cluster will not be configured to serve traffic for the us-west service cluster and vice versa. We can see this in action when we send requests to each service cluster.

When we send a request to the us-east service cluster, it only knows about the us-east application. This example looks up each node's IP address through the Swarm API, so SSH to a manager node or configure your shell with a UCP client bundle before testing:

{% raw %}

$> curl -H "Host: demo-east.local" http://$(docker node inspect -f '{{ .Status.Addr }}' lb-00):8080/ping

{"instance":"1b2d71619592","version":"0.1","metadata":"us-east","request_id":"3d57404cf90112eee861f9d7955d044b"}

$> curl -H "Host: demo-west.local" http://$(docker node inspect -f '{{ .Status.Addr }}' lb-00):8080/ping

<html>

<head><title>404 Not Found</title></head>

<body bgcolor="white">

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.13.6</center>

</body>

</html>

{% endraw %}
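The isolation shown above can be written down as a small expectation table: a request succeeds only when the application and the proxy belong to the same service cluster. The following is a minimal sketch with hypothetical helper names. It assumes a matching request returns HTTP 200 (the sample output shows a JSON body but not the status code) and that the proxy address is passed in by the caller.

```shell
#!/bin/sh
# expected_status APP_REGION PROXY_REGION (hypothetical helper): print the
# HTTP status we expect when an app in APP_REGION is requested through a
# proxy in PROXY_REGION. 200 for a match is an assumption; 404 is the
# response shown in the example output.
expected_status() {
  if [ "$1" = "$2" ]; then
    echo 200
  else
    echo 404
  fi
}

# check_route HOST PROXY_ADDR EXPECTED (hypothetical helper): request /ping
# with the given Host header and compare the status code with EXPECTED.
check_route() {
  actual="$(curl -s -o /dev/null -w '%{http_code}' -H "Host: $1" "http://$2:8080/ping")"
  [ "$actual" = "$3" ]
}

# Print the expectation for every application and proxy combination.
for app in us-east us-west; do
  for proxy in us-east us-west; do
    echo "app=$app proxy=$proxy expect=$(expected_status "$app" "$proxy")"
  done
done
```

check_route is defined but not called here, since it needs the address of a load balancer node such as lb-00.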

Application traffic is isolated to each service cluster. Interlock also ensures that a proxy is only updated when there are corresponding updates to its designated service cluster. So in this example, updates to the us-east cluster will not affect the us-west cluster. If one service cluster has a problem, the others will not be affected.