
Introduction

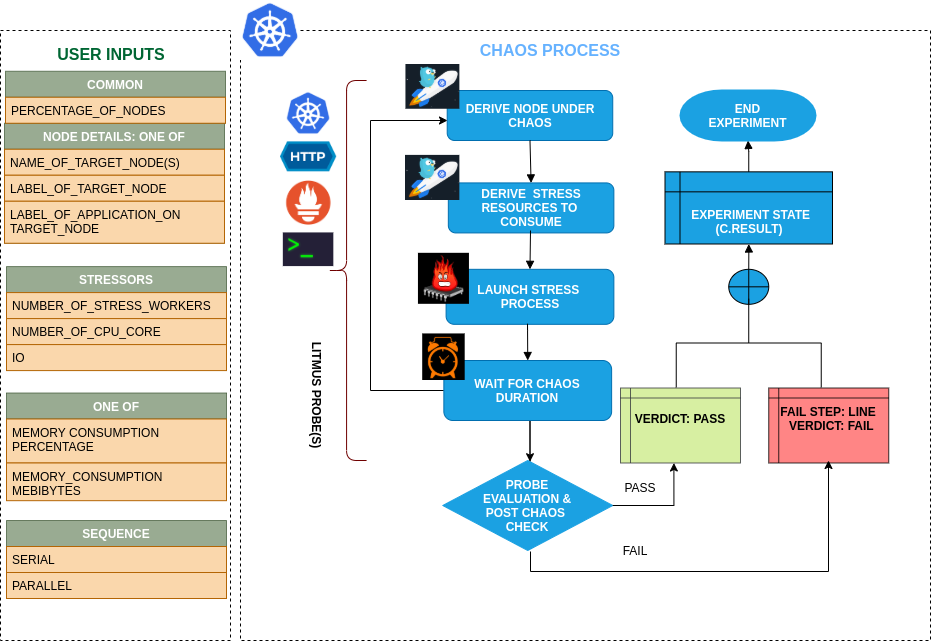

- This experiment causes CPU resource exhaustion on the Kubernetes node. It aims to verify the resiliency of applications whose replicas may be evicted on account of nodes turning unschedulable (Not Ready) due to a lack of CPU resources.

- The CPU chaos is injected using a helper pod running the Linux stress tool (a workload generator). The chaos is effected for a period equalling the TOTAL_CHAOS_DURATION.

- Application implies services. The experiment can be reframed as: test application resiliency upon replica evictions caused by a lack of CPU resources.

!!! tip "Scenario: Stress the CPU of node"

Uses

??? info "View the uses of the experiment"

    Coming soon.

Prerequisites

??? info "Verify the prerequisites"

- Ensure that Kubernetes Version > 1.16

- Ensure that the Litmus Chaos Operator is running by executing kubectl get pods in the operator namespace (typically, litmus). If not, install from here

- Ensure that the node-cpu-hog experiment resource is available in the cluster by executing kubectl get chaosexperiments in the desired namespace. If not, install from here

Default Validations

??? info "View the default validations"

    The target nodes should be in ready state before and after chaos injection.

Minimal RBAC configuration example (optional)

!!! tip "NOTE"

If you are using this experiment as part of a litmus workflow constructed and executed from chaos-center, then you may be making use of the litmus-admin RBAC, which is pre-installed in the cluster as part of the agent setup.

??? note "View the Minimal RBAC permissions"

[embedmd]:# (https://raw.githubusercontent.com/litmuschaos/chaos-charts/master/charts/generic/node-cpu-hog/rbac.yaml yaml)

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: node-cpu-hog-sa
  namespace: default
  labels:
    name: node-cpu-hog-sa
    app.kubernetes.io/part-of: litmus
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: node-cpu-hog-sa
  labels:
    name: node-cpu-hog-sa
    app.kubernetes.io/part-of: litmus
rules:
- apiGroups: [""]
  resources: ["pods","events"]
  verbs: ["create","list","get","patch","update","delete","deletecollection"]
- apiGroups: [""]
  resources: ["pods/exec","pods/log"]
  verbs: ["list","get","create"]
- apiGroups: ["batch"]
  resources: ["jobs"]
  verbs: ["create","list","get","delete","deletecollection"]
- apiGroups: ["litmuschaos.io"]
  resources: ["chaosengines","chaosexperiments","chaosresults"]
  verbs: ["create","list","get","patch","update"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["get","list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-cpu-hog-sa
  labels:
    name: node-cpu-hog-sa
    app.kubernetes.io/part-of: litmus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: node-cpu-hog-sa
subjects:
- kind: ServiceAccount
  name: node-cpu-hog-sa
  namespace: default
```

Use this sample RBAC manifest to create a chaosServiceAccount in the desired (app) namespace. This example consists of the minimum necessary role permissions to execute the experiment.

Experiment tunables

??? info "check the experiment tunables"

<h2>Mandatory Fields</h2>

<table>

<tr>

<th> Variables </th>

<th> Description </th>

<th> Notes </th>

</tr>

<tr>

<td> TARGET_NODES </td>

<td> Comma separated list of nodes, subjected to node cpu hog chaos</td>

<td> </td>

</tr>

<tr>

<td> NODE_LABEL </td>

<td> It contains node label, which will be used to filter the target nodes if TARGET_NODES ENV is not set </td>

<td>It is mutually exclusive with the TARGET_NODES ENV. If both are provided then it will use the TARGET_NODES</td>

</tr>

</table>

<h2>Optional Fields</h2>

<table>

<tr>

<th> Variables </th>

<th> Description </th>

<th> Notes </th>

</tr>

<tr>

<td> TOTAL_CHAOS_DURATION </td>

<td> The time duration for chaos insertion (seconds) </td>

<td> Defaults to 60 </td>

</tr>

<tr>

<td> LIB </td>

<td> The chaos lib used to inject the chaos </td>

<td> Defaults to <code>litmus</code> </td>

</tr>

<tr>

<td> LIB_IMAGE </td>

<td> Image used to run the stress command </td>

<td> Defaults to <code>litmuschaos/go-runner:latest</code> </td>

</tr>

<tr>

<td> RAMP_TIME </td>

<td> Period to wait before and after injection of chaos (in seconds) </td>

<td> Optional </td>

</tr>

<tr>

<td> NODE_CPU_CORE </td>

<td> Number of cores of node CPU to be consumed </td>

<td> Defaults to <code>2</code> </td>

</tr>

<tr>

<td> NODES_AFFECTED_PERC </td>

<td> The Percentage of total nodes to target </td>

<td> Defaults to 0 (corresponds to 1 node), provide numeric value only </td>

</tr>

<tr>

<td> SEQUENCE </td>

<td> It defines the sequence of chaos execution for multiple target nodes </td>

<td> Default value: parallel. Supported: serial, parallel </td>

</tr>

</table>
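As a rough illustration of how the tunables in the tables above are supplied, the sketch below sets a few of them as env entries on the experiment in a ChaosEngine. The ENV names and supported values come from the tables; the label `key=value` is a placeholder and must be replaced with a label that actually exists on the nodes, and the percentage chosen here is only an example.

```yaml
# Sketch only: select target nodes by label and tune a few optional fields.
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  chaosServiceAccount: node-cpu-hog-sa
  experiments:
  - name: node-cpu-hog
    spec:
      components:
        env:
        # placeholder label; it is used because TARGET_NODES is not set
        - name: NODE_LABEL
          value: 'key=value'
        # percentage of the total nodes to target (numeric value only)
        - name: NODES_AFFECTED_PERC
          value: '100'
        # supported values: serial, parallel
        - name: SEQUENCE
          value: 'serial'
        - name: TOTAL_CHAOS_DURATION
          value: '60'
```

TARGET_NODES is deliberately left out here, since the table notes that it takes precedence over NODE_LABEL when both are provided.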

Experiment Examples

Common and Node specific tunables

Refer to the common attributes and the node-specific tunables to tune the tunables common to all experiments and those specific to node experiments.

Node CPU Cores

It is the number of node CPU cores to be consumed. It can be tuned via the NODE_CPU_CORE ENV.

Use the following example to tune this:

```yaml
# stress the cpu of the targeted nodes
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  chaosServiceAccount: node-cpu-hog-sa
  experiments:
  - name: node-cpu-hog
    spec:
      components:
        env:
        # number of cpu cores to be stressed
        - name: NODE_CPU_CORE
          value: '2'
        # chaos duration in seconds
        - name: TOTAL_CHAOS_DURATION
          value: '60'
```
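The mandatory TARGET_NODES ENV can be set in the same way. The following is a minimal sketch, assuming two nodes named `node1` and `node2`; these names are hypothetical and should be replaced with names reported by kubectl get nodes. Per the tunables table, the value is a comma separated list of nodes.

```yaml
# Sketch only: stress the cpu of an explicit list of nodes.
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  chaosServiceAccount: node-cpu-hog-sa
  experiments:
  - name: node-cpu-hog
    spec:
      components:
        env:
        # comma separated list of target nodes (hypothetical node names)
        - name: TARGET_NODES
          value: 'node1,node2'
        - name: TOTAL_CHAOS_DURATION
          value: '60'
```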